Building a news canary

I’ve been toying with the idea of a new website (because obviously what I need to do is own more domains and produce more content…)

Anyway, what do you think of the following? It’s something I’ve been refining since the start of the year, so that every Sunday I (personally) get an email focused on what’s happened, and what happens next.

I’ve been refining a (looooong) prompt to find interesting news stories, press releases, viral posts, etc. that might suggest things are changing culturally, socially, politically, and economically. I’ve asked for the inclusion of something from each continent over the 10 news stories — and, of course, not to be too US-focused.

Is this something you’d subscribe to? 🤔

(again, this wouldn’t be a Thought Shrapnel thing)

1. US–Israeli strikes on Iran and Khamenei’s death

US and Israeli forces have launched large air and missile strikes against targets in Iran, with reports of explosions in Tehran and other cities and widespread fear inside the country. Iran’s Supreme Leader Ayatollah Ali Khamenei has been reported killed, with US President Donald Trump publicly confirming his death and analysts quickly turning to the question of succession and the balance of power among Iranian elites. Iran has responded with missile attacks on Israel and multiple Gulf states, killing at least one person in Abu Dhabi and shaking cities that normally promote an image of safety and stability.

What happens next?

Key watchpoints include the internal succession process in Iran, potential escalation through proxies across the region, and the reaction of energy and shipping markets to the risk of prolonged instability around the Gulf.

Links:

- Reuters — Iranian leader Khamenei killed in air strikes as U.S., Israel launch attacks

- BBC — Iran’s Supreme Leader Ayatollah Ali Khamenei killed in US strikes

- Al Jazeera — Iran confirms Supreme Leader Ali Khamenei dead after US-Israeli attacks

2. Global reaction and Brazil’s condemnation of the strikes

Brazil’s government has condemned the US–Israeli strikes on Iran, voicing “grave concern” and distancing itself from Washington’s approach to the crisis. Reactions from governments worldwide show a mix of criticism, appeals for restraint, and guarded support, revealing fractures in traditional alliances and a more fragmented diplomatic field. Several states that backed sanctions on Russia are clearly less willing to endorse this round of military action, signalling fatigue with sanctions‑plus‑strikes as a default Western toolkit.

What happens next?

Brazil and other large democracies in the Global South are likely to continue presenting themselves as independent actors and potential mediators, which complicates efforts by Washington and its allies to portray responses to Iran as a simple binary choice.

Links:

- Reuters — Brazilian government condemns strikes on Iran

- Al Jazeera — World reacts to US, Israel attack on Iran, Tehran retaliation

- Critical Threats — Iran Update Evening Special Report: February 28, 2026

3. Bolivia’s crashed cash plane and burned banknotes

A Bolivian Air Force Hercules carrying new central‑bank banknotes crashed on a busy avenue in El Alto, killing around 20 people and injuring dozens, with cash scattered across the crash site. Residents rushed to collect the notes and clashed with security forces, who then burned large quantities of recovered money on site, arguing that it was not yet legal tender and that possession would be illegal. The scenes, captured and shared widely, have touched a nerve in a city already feeling the effects of fuel subsidy cuts and price rises.

What happens next?

The combination of mass casualties and the spectacle of the state incinerating money in front of poor residents is likely to become a powerful reference point in Bolivian politics, feeding anger about inequality, austerity, and institutional trust.

Links:

- Reuters — Residents protest as authorities burn cash left on ground by Bolivian plane crash

- ABC News (Australia) — Bolivian plane carrying banknotes crashes, leaving 20 people dead

- The Guardian — At least 15 killed as cash-laden military cargo plane crashes in Bolivia

4. Vietnam’s AI law takes effect

Vietnam’s Law on Artificial Intelligence comes into force on 1 March 2026, making it the first country in Southeast Asia with a detailed AI statute covering developers, deployers, and users. The law requires clear labelling of AI‑generated content, notification when people interact with AI rather than humans, and stricter controls for high‑risk applications in areas such as health, finance, and security. It is tied to an industrial strategy that includes building national AI computing capacity, strengthening Vietnamese‑language data resources, and encouraging domestic AI firms.

What happens next?

Global platforms and AI companies face a choice between adapting products to meet Vietnam’s standards and potentially drawing on them elsewhere, or limiting services in a market that is positioning itself as a digital hub in the region.

Links:

- France 24 — Vietnam AI law takes effect, first in Southeast Asia

- IAPP — Vietnam’s first standalone AI Law: An overview of key provisions and future implications

- Baker McKenzie — Vietnam: Artificial Intelligence Law – Foundation and Outlook

5. Zimbabwe bans exports of raw minerals and lithium concentrates

Zimbabwe has ordered an immediate halt to exports of all unprocessed minerals and lithium concentrates, including consignments already in transit, citing malpractice and a need to capture more value at home. The ban affects foreign miners and downstream processors who rely on Zimbabwean ore for global battery and electronics supply chains. It aligns with a broader push across several African states to renegotiate their place in extractive industries and to reduce dependence on external financing.

What happens next?

Mining companies must weigh investment in local processing against sourcing from other countries, and other resource‑rich governments may view Zimbabwe’s stance as a precedent, reshaping bargaining power in critical mineral markets.

Links:

- Reuters — Zimbabwe bans exports of all raw minerals and lithium concentrates, cites malpractices

- S&P via Reuters — Africa faces $90 billion debt wall in 2026, S&P says

- Chatham House — Africa in 2026: Global uncertainty demands regional leadership

6. Africa CDC challenges pathogen‑data conditions in US health deals

Africa CDC Director Jean Kaseya has raised “major concerns” over proposed US health‑security agreements that require rapid sharing of pathogen samples and genomic data as a condition for funding. Zimbabwe has already pulled out of talks on a large deal, and Zambia has pushed back over equity and sovereignty issues, arguing that past arrangements have seen data and samples leave the continent without fair access to resulting products. The dispute comes as African governments seek more voice in global health governance after the Covid‑19 experience.

What happens next?

Future pandemic treaties and bilateral agreements are likely to feature much tougher African demands on benefit sharing, local manufacturing, and data governance, which may slow negotiations but could rebalance long‑standing patterns of extraction.

Links:

- Reuters — Africa CDC head cites major concerns over data, pathogen sharing in US deals

- BMJ Global Health — COP27 climate change conference: urgent action needed for Africa and the world

- Africa CDC — Director-General addresses equity and data governance in global health partnerships

7. EU social fund can help pay for access to abortion

The European Commission has clarified that EU member states may use money from the European Social Fund Plus to finance access to safe abortion, including cross‑border care for people from countries with tighter laws. The clarification does not impose new obligations on governments but gives legal and financial cover to those that decide to support such services. It lands in a context where abortion access within the EU is diverging, with some states restricting and others expanding rights.

What happens next?

Expect legal and political disputes about whether EU‑level money is being used to bypass national restrictions, and debates about how far EU social and cohesion tools should reach into sensitive areas of bio‑politics.

Links:

- Reuters — EU says social fund can be used to allow access to safe abortions across bloc

- Cambridge — Governing Europe’s Recovery and Resilience Facility: Between Discipline and Discretion

- Politics and Governance — Governing the EU’s Energy Crisis: The European Commission’s Geopolitical Turn and its Pitfalls

8. Draft EU–India trade deal with MFN clause and digital provisions

A draft text of the EU–India trade agreement shows plans for each side to grant the other Most Favoured Nation treatment for five years after entry into force, limiting scope to offer better terms to other large partners. The draft also includes provisions on digital trade, including data, online services, and rules for tech firms, set against the backdrop of broader EU moves on AI and platform regulation. For India, the deal is part of a diversification effort away from China and towards multiple partners.

What happens next?

If ratified on current lines, the agreement will influence India’s choices on data governance and digital regulation and will set a reference point for trade talks between advanced economies and large emerging markets that seek more autonomy.

Links:

- Reuters — India and EU lock in WTO guardrails, digital trade rules in draft trade deal

- Reuters — Europe sets benchmark for rest of the world with landmark AI laws

- Global Policy Watch — EU Regulators Issue Opinion on Revisions of GDPR and Other Data Laws

9. Canada’s Bill C‑16 and changing online‑harms rules

Analysis from regulatory commentators notes that Canada’s Bill C‑16 broadens obligations from telecoms and ISPs to a wider group of “internet services”, including large platforms and infrastructure providers. The bill introduces new criminal offences around coercive control and updates non‑consensual intimate image provisions to include deepfakes and other AI‑generated content. Civil liberties organisations warn about possible conflicts with Canada’s Charter of Rights and Freedoms, while groups working on gender‑based violence argue that stronger tools are overdue.

What happens next?

Once passed, the law is expected to face court challenges and will force platforms to adapt moderation, evidence, and transparency practices, feeding into a larger pattern of diverging national approaches to online harms.

Links:

- The Modern Regulator — February 2026 regulatory update: from passage to practice

- MoFo — Key Digital Regulation & Compliance Developments (February 2026)

- Canadian Civil Liberties Association — Online Harms Legislation and Charter Rights

10. Fiji and Tuvalu to host pre‑COP31 climate meetings

The Pacific Islands Forum has announced that Fiji and Tuvalu will host key meetings in the run‑up to COP31, which will take place in Australia in 2026. Fiji will host the pre‑COP gathering and Tuvalu will host a dedicated leaders’ segment, giving some of the most climate‑vulnerable states greater influence over agenda setting. Forum leaders present this as a chance to centre Pacific priorities such as loss and damage, adaptation finance, and fossil‑fuel phase‑out.

What happens next?

With pre‑COP and COP activity concentrated in and around the Pacific, larger emitters face stronger diplomatic pressure from small island states on issues such as fossil‑fuel production, climate‑related migration, and debt relief linked to resilience.

Links:

- Reuters — Fiji and Tuvalu to host pre-COP31 climate meetings

- AP News — What is the Pacific Islands Forum? How a summit for the world’s tiniest nations became a global draw

- Climate Action Tracker — Australia

Image: César Ardilla

Enough is enough

Today, the pedo-authoritarian US and genocidal Israel attacked Iran to distract from respective domestic problems. At the time of writing, reports are that 85 people have died after strikes hit a girls’ school.

So, to put that in perspective, innocent girls have died in an attempt to cover up a president’s obvious involvement in a sex trafficking ring, and another leader’s issues caused by starving a neighbouring country to death.

Source: Bluesky

Super Mario World Map

I don’t know the original source of this, but I think it’s quite old. A reverse image search led to 118 pages of results…

Source: Klaus Zimmerman

WAO is closing

I know some people only read Thought Shrapnel and not the rest of my work, so I’m just going to give a quick update to say that the co-op I helped set up 10 years ago, and which has been a source of creative and collaborative inspiration, will be closing on 1st May 2026.

We’re all still friends. I’m going to be working through my consultancy business, Dynamic Skillset, so get in touch if I can help you or your organisation!

After ten years of creative cooperation, we’re announcing that We Are Open Co-op (WAO) will close its doors on our 10th birthday: 1st May 2026. This has been a carefully considered, collaborative decision — the kind we’ve always tried to model as a co-op.

But, as you can imagine, we’re a bit emotional about it, and it also feels a bit weird to finally say it out loud and in public.

WAO is a strong, well-respected brand. We continue to have wonderful clients, meaningful work and good relationships with each other. People are finally waking up to the idea that flat hierarchies and consent-driven businesses are the future.

In some ways, this is a continuation rather than an ending, as we’ll be carrying forward the cooperative principles we’ve practised into new systems and relationships.

Source: WAO blog

Image: CC BY-ND Visual Thinkery for WAO

Agentic commerce is a catastrophe for every business whose moat is made of friction

I’m too young for memories of the original game on the Commodore 64, but I do remember the SEGA Master System version of Spy vs. Spy. While I wasn’t allowed to have a console myself, I would play on friends’ devices. The thing I really remember, though, is my parents leaving me to play it for a while on a demo unit in Fenwick’s toy department in Newcastle-upon-Tyne. It was a game in which you, as either the ‘black’ or ‘white’ spy, had to outwit the other spy.

This homely introduction serves to explain the graphic accompanying both this post and the one I’ve taken it from — an extremely clear-eyed view of what “agentic commerce” means for brands. Usually, this kind of thing wouldn’t interest me, but I do like clear thinking on things orthogonal to my line of work.

Let’s start with the setup, which involves a post on an influential blog which was more “speculative fiction” than “investor advice”:

For context: Citrini Research is a financial analysis firm focused on thematic investing research with a highly influential Substack. The piece in question, published this past Sunday, is a fictional macro memo written from June 2028, narrating the fallout of what they call the “human intelligence displacement spiral” – a negative feedback loop in which AI capabilities improve rapidly, leading to mass white collar layoffs, spending decreases, margins tightening, companies buying more AI, accelerating the loop. It’s all a “scenario, not a prediction,” and not really all that new: the idea that a (unit of AI capex spend) < (unit of disposable income) circulating in the real economy has been doing the rounds for a while now. Yet Citrini caused a very real market selloff earlier this week. Apparently this scenario wasn’t priced in.

What the smart people at NEMESIS point out is that our current neoliberal version of capitalism in advanced service-based economies has “built a rent-extraction layer on top of human limitations” as “[m]ost people accept a bad price to avoid more clicks or cognitive labor [with] brand familiarity substituting for diligence.”

In Citrini’s scenario, AI agents dismantle this layer entirely. They price-match across platforms, re-shop insurance renewals, assemble travel itineraries, route around interchange fees etc. Agents don’t mind doing the things we find tedious.

Basically what Citrini is describing as the destruction of habitual intermediation is, from the POV of Nemesis HQ, a description of one of the primary economic functions of brands dissolving.

Essentially, brands “reduce the friction of decision-making” because it’s a shortcut to buying more of what you know you like. There’s a reason I’ve got three Patagonia hoodies, four Gant jumpers, and all of my t-shirts are from THG. But what happens when an agent which knows your preferences can go off and do your shopping for you? Book your holiday? Organise your insurance renewals?

In Citrini’s 2028 world of agentic commerce, brand loyalty is nothing but a tax. When given license to do so, the agent doesn’t care about your favorite app or branded commodity, feel the pull of a well-designed checkout UX flow or default to the app your thumb navigates to the easiest on your home screen. It evaluates every option on price, fit, speed, or whatever other parameters you’ve set. The entire edifice of brand preference built over decades of advertising, design and behavioral psychology becomes, from the agent’s perspective, noise.

Though its not a wholesale “end of brands” it is a catastrophe for every business whose moat is made of friction. And a remarkable number of moats are made pretty much of only friction (think: insurance, financial services, telecom, energy, SaaS, most platforms, Big Grocery, airlines, cars, etc.). Generally speaking, this is a good thing (creative destruction!), and frees the labor of large swaths of branding and marketing professionals to societally more useful ends.

What I think scares people is uncertainty. I probably have a higher tolerance for different forms of ambiguity than most people I know. Well, cognitively, at least; sometimes the body keeps the score.

Source & image: NEMESIS

Units of attention

Good stuff from Jay Springett, who also links to a 60-page PDF called Paying Attention that I… haven’t paid attention to (yet!)

The attention economy rewards the same behaviours regardless of whether the content being spread is a coordinated disinformation campaign or a genuinely interesting essay. The platform does not distinguish between the two. It just measures the engagement.

There is a practical implication buried somewhere in the above though I think.

That if the first units of attention are the ones that change behaviour most, then being liberal with the like button actually matters. Leaving a comment, sharing a post, replying to something you found worthwhile are small acts that actually work. In the face of “the firehose” the modest counter-move is to be deliberate about where your attention goes, and cultivating the things that you want to see more of.

This is interesting when juxtaposed with something Nita Farahany says in the transcript of a conversation posted on The Exponential View:

This gets at a fundamental question about what it means to act autonomously. Are you acting in a way consistent with your own desires, or are you being steered by somebody else’s desires? There’s very little we do these days that is steered by our own desires.

I’m teaching a class this semester at Duke on mental privacy, advanced topics in AI law and policy. I ran an attention audit on my students. They recorded how many times they picked up their devices over three days, and what they spent their attention on. Day one: record it, don’t change your behaviour. Day two: no apps that algorithmically steer you — which meant basically nothing was allowed. Day three: do whatever you want, record it again. Day two was remarkable. Somebody read a book. They couldn’t remember the last time they’d done that. These are students. Someone finished a puzzle they’d been convinced they didn’t have time for. And day three? Worse than day one. Utterly sucked back in.

I was flicking back through my notebook, which I have not used enough in the last 18 months or so, and came across a note I’d made while reading Oliver Burkeman’s excellent book Four Thousand Weeks. He quotes Harry Frankfurt as saying that our devices distract us from more important matters — it’s as if we let what they show us define what counts as “important”. As such, our capacity to “want what we want to want” is sabotaged.

Sources:

Image: Marcus Spiske

👋 A reminder that I’ve been on holiday this week, so no Thought Shrapnel.

However, I’ve still been saving things to my Are.na channels if you’d like to peruse those…

– Doug

TechFreedom

As explained in this post, Tom Watson and I are putting together an offer to help organisations with their digital sovereignty.

Sign up if you’re interested in joining our first cohort. No obligation.

You cannot manage what you cannot see. Most organisations operate in a fog of invisible reliance; you rely on platforms that obscure their workings behind slick interfaces and terms you never read.

TechFreedom helps you clear the air. We give you the tools to look past Big Tech’s version of the Cloud and build a digital infrastructure that is open, visible, and under your control.

Source: techfreedom.eu

A range of authentic selves?

Jo Hutchinson reshared this on LinkedIn a few days ago, and I wanted to save/share my comments on it. The original post was by Tricia Riddell, a Professor of Applied Neuroscience, and includes a short video. In it, she talks about there being “a range of authentic selves” that people can bring to a situation rather than a single “authentic self.”

Riddell wonders whether this is what leads to imposter syndrome, which is usually suffered by high-achievers. “You cannot feel like an imposter,” she says, “unless there is something you believe you’re failing to live up to.”

My response, because it’s easier for me to re-find things here rather than on social media:

As someone who suffers from imposter syndrome most days of my life, it resonates.

I am, though, reminded of something that John Bayley wrote in one of the biographies of his wife, the philosopher Iris Murdoch. He said that Iris believed that she “didn’t have a strong sense of self.” I’ve thought about this over two decades, and I think it maps onto what’s being discussed here.

On the one hand, you could say that those with a strong sense of self want to bring a single “authentic” self to every situation. Which, as Tricia Riddell points out, would mean that they’re not bringing other stories into the situation.

Or, on the other hand, you might say that those without a strong sense of self have a fragmented view of themselves. The imposter syndrome is the price that is paid for an integrated sense of self.

I haven’t figured it out yet, but I find the whole thing fascinating.

Source: LinkedIn

Image: Amy Vann

Each culture is made of shared framings—ontologies of things that are taken to exist

Most of this post talks about the differences between Japan and Italy, which makes it well worth reading in full. What I like about it, though, is that it goes beyond “mental models” to talk about framings and how these are the unseen, and somewhat hidden things that make cultures different. Fascinating.

A mental model is a simulation of “how things might unfold”, and we all build and rebuild hundreds of mental models every day. A framing, on the other hand, is “what things exist in the first place”, and it is much more stable and subtle. Every mental model is based on some framing, but we tend to be oblivious to which framing we’re using most of the time […].

Framings are the basis of how we think and what we are even able to perceive, and they’re the most consequential thing that spreads through a population in what we call “culture”.

[…]

Each culture is made of shared framings—ontologies of things that are taken to exist and play a role in mental models—that arose in those same arbitrary but self-reinforcing ways. Anthropologist Joseph Henrich, in The Secret of Our Success, brings up several studies demonstrating the cultural differences in framings.

He mentions studies that estimated the average IQ of Americans in the early 1800’s to have been around 70—not because they were dumber, but because their culture at the time was much poorer in sophisticated concepts. Their framings had fewer and less-defined moving parts, which translated into poorer mental models. Other studies found that children in Western countries are brought up with very general and abstract categories for animals, like “fish” and “bird”, while children in small-scale societies tend to think in terms of more specific categories, such as “robin” and “jaguar”, leading to different ways to understand and interface with the world.

But framings affect more than understanding. They influence how we take in the information from the world around us.

Source: Aether Mug

Image: Maksym Tymchyk

Like it or not, it is a basic fact of human cognition that we think and act politically as members of social groups.

This post on the blog of the American Philosophical Association (APA) talks about political epistemology. Samuel Bagg is Assistant Professor of Political Science at the University of South Carolina and argues that presenting people with facts doesn’t change their political opinions because of our social identities.

This resonates with me more than the usual framing in which we’re told that we need to appeal to people’s emotions. Social identity makes much more sense, and so (as Bagg argues) the way out of this mess isn’t to “ensure every citizen employs optimal epistemic practices” but instead to strengthen our “broadly reliable collective practices.”

I’ve often said that one of the reasons for Brexit was the [attack on expertise by Michael Gove](www.ft.com/content/3…). Attacking institutions which are proxies through which people can know and understand the world is corrosive, divisive, and ultimately, a cynically populist move.

On the individual level, we are right to worry about the epistemic impact of motivated reasoning, cognitive biases, and “political ignorance.” And at the systemic level, we are right to lament the rise of talk radio, cable news, social media, and the attention economy, along with the corresponding decline of local news, professional journalism, content standards, and so on. Trends in our political economy have indeed conspired with underlying human frailties to undermine the epistemic foundations of a stable and functioning democracy—even in its most minimal form.

Yet this diagnosis is also crucially incomplete, in ways that distort our search for solutions. When we understand the problem in epistemic terms, we naturally seek epistemic answers: better fact-checking, more deliberation, better education, greater media balance. We aim to develop the “civic” and “epistemic virtues” of unbiased, fair-minded citizens. And indeed, such projects are surely worth pursuing. The epistemic failures they aim to address have deeper roots in our political psychology, however—and overcoming them requires grappling with those roots more directly.

In short, decades of research have demonstrated that our political beliefs and behavior are thoroughly motivated and mediated by our social identities: i.e., the many cross-cutting social groupings we feel affinity with. And as long as we do not account for this profound and pervasive dependence, our attempts to address the epistemic failures threatening contemporary democracies will inevitably fall short. More than any particular institutional, technological, or educational reform, promoting a healthier democracy requires reshaping the social identity landscape that ultimately anchors other democratic pathologies.

[…]

We do not generally decide which groups to identify with on the basis of their epistemic credentials regarding political questions. And even if we were to use such a standard, our judgments about which groups satisfy it would be inescapably colored by our pre-existing identities, formed on other bases or inherited from childhood. Developing certain “epistemic virtues” may mitigate some of our blind spots, finally, but it is hubris to think anyone can escape such biases entirely. Like it or not, it is a basic fact of human cognition that we think and act politically as members of social groups.

[…]

The trouble is not that reliable collective truth-making practices no longer exist, but that significant portions of the population no longer trust them—and that as a result, the truths they establish no longer constrain those in power.

[…]

What best explains contemporary epistemic failures… is not the decay of individual epistemic virtues, but the growing chasm between reliable collective truth-making practices and the social identities embraced by large numbers of people. And as a result, the only feasible way out of the mess we are in is not to ensure every citizen employs optimal epistemic practices, but to weaken their social identification with cranks and conspiracy theorists, and strengthen their identification with the broadly reliable collective practices of science, scholarship, journalism, law, and so on. The most visible manifestations of the problem might be epistemic, in other words, but its roots lie in social identity. And the most promising solutions will therefore tackle those roots.

Source: Blog of the APA

Image: Steve Johnson

Digital sovereignty, French-style

In the wake of Big Tech propping up Trump’s increasingly authoritarian regime, European countries and organisations are taking seriously the issue of digital sovereignty.

This initiative from the French didn’t spring out of nowhere — their Open Source Docs offering was something I experimented with last year — but they’ve now got ‘La Suite’ which offers more. The site is, probably entirely intentionally, only in French (of which I only have a schoolboy understanding), so I translated their About page.

It’s pretty awesome that they’re a co-op, that they see digital technology as political (which of course it is), and that they want to contribute to the digital commons 🤘

A political ambition

Digital technology is political. The choice of our creation and collaboration tools can either perpetuate the captive income of proprietary publishers or support open source alternatives that set us free. This choice comes at a price: most organisations do not have the means to design tailor-made tools or manage their own free software instances.

With LaSuite.coop, we are creating a modular offering to enable associations, cooperatives, social and solidarity economy enterprises, institutions and universities to equip themselves with the best open source solutions and be supported by dedicated teams. A world is dying, and this project is our contribution to the future that is being built thanks to the actions of all these organisations.

A model for contributing to the commons

To compete in the long term with proprietary software financed by venture capital and its quest for hegemony and profitability, we want every LaSuite.coop application to be based on a true digital commons. That is to say, free software developed, financed and governed in an open and transparent manner by a plurality of public and private actors. The Decidim project and the French government’s digital suite are among our main sources of inspiration.

With LaSuite.coop, we donate a portion of our revenue to the communities that maintain and develop the software we offer. In this way, we contribute directly to a virtuous and resilient circle of mutualisation and collective decision-making.

A cooperative venture

The organisations behind LaSuite.coop have been committed to promoting free and democratic digital technology since their inception. We pool our years of complementary experience in hosting, development, technical project management, training and strategic consulting.

Source: La Suite

How to Be Less Wrong in a Polycrisis

A rare cross-post for me from my Open Thinkering blog. I’m really rather pleased with this and would like you to read it.

Source: Open Thinkering

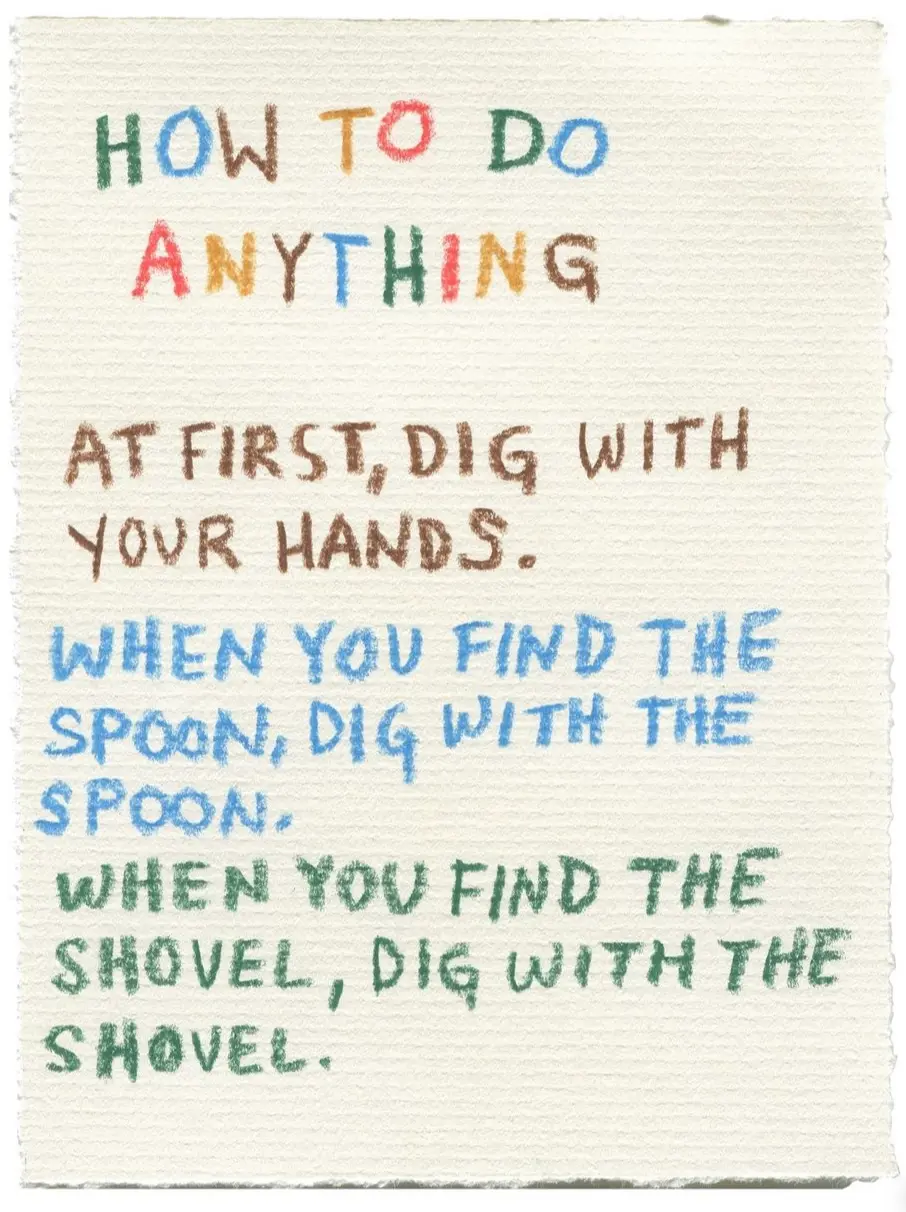

Hands, spoon, shovel

I’ve been using Claude Opus 4.6 over the last couple of weeks, and let me tell you: it feels like digging with a SHOVEL.

Source: Are.na

A rough attempt at laying out what in philosophy is most relevant for AI.

While I expected studying Philosophy as an undergraduate to be personally useful and indirectly useful to my professional career, I didn’t foresee how relevant it would be to our increasingly AI-infused world.

In this post, Matt Mandel, after “[coming] to realize that using LLMs is pushing all of us to more closely examine our philosophical assumptions”, has sketched out “a rough attempt at laying out what in philosophy is most relevant for AI.”

I’ve taken the list (covering things with which I’m familiar and those things I’d like to follow up on) and added links. The “What is agency?” section is particularly timely, as I’ve been thinking about this a lot recently but need to do more reading.

Part 1: Philosophical Concepts

What is a mind?

- Nagel, What is it like to be a bat

- Chalmers, Facing up to the problem of consciousness

- Putnam, The Nature of Mental States

- Searle, Minds, Brains, and Programs

What is agency?

- Anscombe, Intention

- Dennett, The Intentional Stance

- Bratman, Shared Cooperative Activity

- Applbaum, Legitimacy (group agents)

Who has moral status?

- Kant, The Metaphysics of Morals (indirect duties)

- Singer, Animal Liberation

- Korsgaard, Fellow Creatures

What are reasons and values?

- Hume, Enquiry Concerning the Principles of Morals

- Parfit, On What Matters (Volume II, Part 6)

- Mackie, Ethics: Inventing Right and Wrong

- Korsgaard, The Sources of Normativity

Part 2: How should we build AI?

How might AIs come to implement our values?

- Kripke, Wittgenstein on Rules and Private Language

- Bostrom, The Superintelligent Will

- Plato, The Republic (Book I)

- Anthropic, Emergent Misalignment

How should AI be involved in governance?

- Applbaum, Legitimacy (“domination by a stone”)

- Séb Krier, Coasean Bargaining at Scale

- Newsom, Snell v United Specialty Insurance Company (concurrence)

- Cass Sunstein and Vermeule, Law and Leviathan

How might AI impact human flourishing?

- Aristotle, Nicomachean Ethics

- Mill, On Liberty

- Frankfurt, Freedom of the Will and the Concept of a Person

- Brendan McCord, Live by the Claude, Die by the Claude

How do we find meaning in a post-AGI world?

- Camus, The Myth of Sisyphus

- Nozick, Philosophical Explanations (Philosophy and the Meaning of Life)

- Bostrom, Deep Utopia

- Mandel, The Top Three AGI-Proof Careers and What They Reveal About Our Humanity and The Future

For Part 1, it’s definitely worth adding Turing’s foundational 1950 paper, which asks “can machines think?”, to the What is a mind? section. It’s the one that introduces the “Turing test” and directly sets up the questions that Searle and Nagel are responding to.

I’d also add a section entitled What is knowledge? and include:

- Dreyfus, What Computers Can’t Do — which argues that embodied, situated knowledge resists formalisation.

- Wittgenstein, Philosophical Investigations — not the easiest of reads, but it’s where we get notions of the impossibility of a private language and the difficulty of defining terms such as “game”.

I had to ask Perplexity for help with Part 2. I haven’t read any of these but apparently they’re relevant additions:

How should we build AI?

- Vallor, Technology and the Virtues: a virtue-ethics framework for emerging technology which Perplexity describes as “arguably the most important contemporary work bridging classical ethics and AI practice.” The original list focuses on alignment as a technical problem, whereas Vallor asks what kind of people we need to be to build good technology.

How might AI impact human flourishing?

- Crawford, Atlas of AI: I’ve had this book on my shelf for a while but haven’t read it yet. It’s a materialist analysis of AI’s supply chains, labour exploitation, and environmental costs, which sounded too depressing for me to read last year.

What does AI mean for how we know things?

- Floridi, The Ethics of Artificial Intelligence: a framework of five principles for ethical AI (beneficence, non-maleficence, autonomy, justice, and explicability) which apparently is “the standard reference in AI governance circles.”

- Nguyen, Games: Agency as Art, which explores how gamification narrows our values into simplified metrics and is therefore relevant to AI alignment. If we have to specify values precisely enough for machines to optimise, do we inevitably impoverish them?

Source: Substack Notes

Image: Hanna Barakat