A deliberate willingness to be helped

I really enjoyed reading this post by Kevin Kelly about hitch-hiking, the kindness of strangers, and, most importantly, the generosity of being willing to be helped.

Kindness is like a breath. It can be squeezed out, or drawn in. You can wait for it, or you can summon it. To solicit a gift from a stranger takes a certain state of openness. If you are lost or ill, this is easy, but most days you are neither, so embracing extreme generosity takes some preparation. I learned from hitchhiking to think of this as an exchange. During the moment the stranger offers his or her goodness, the person being aided can reciprocate with degrees of humility, dependency, gratitude, surprise, trust, delight, relief, and amusement to the stranger. It takes some practice to enable this exchange when you don’t feel desperate. Ironically, you are less inclined to be ready for the gift when you are feeling whole, full, complete, and independent!

[…]

Altruism among strangers, on the other hand, is simply strange. To the uninitiated its occurrence seems as random as cosmic rays. A hit or miss blessing that makes a good story. The kindness of strangers is a gift we never forget.

But the strangeness of “kindees” is harder to explain. A kindee is what you turn into when you are kinded. Curiously, being a kindee is an unpracticed virtue. Hardly anyone hitchhikes any more, which is a shame because it encourages the habit of generosity from drivers, and it nurtures the grace of gratitude and patience of being kinded from hikers. But the stance of receiving a gift – of being kinded — is important for everyone, not just travelers. Many people resist being kinded unless they are in dire life-threatening need. But a kindee needs to accept gifts more easily. Since I have had so much practice as a kindee, I have some pointers on how it is unleashed.

I believe the generous gifts of strangers are actually summoned by a deliberate willingness to be helped. You start by surrendering to your human need for help. That we cannot be helped until we embrace our need for help is another law of the universe. Receiving help on the road is a spiritual event triggered by a traveler who surrenders his or her fate to the eternal Good. It’s a move away from whether we will be helped, to how: how will the miracle unfold today? In what novel manner will Good reveal itself? Who will the universe send today to carry away my gift of trust and helplessness?

Source: The Technium

Image: David Guevara

What content are you really trying to provide and how do you get to it?

If you visit dougbelshaw.com it will appear pretty much instantly, no matter what speed of internet connection you’re on. Why? Because it’s 4.9kB in size. Over 3kB of that is the favicon so I could reduce it even further by not having that little ⚡ emoji show up on the web browser tab. But you’ve got to have some flair…

There are sites that are under 512kB in size. That’s easy. You can get sites under 1kB too. But why would anyone care? Well, as this post explains, sometimes accessibility and page speed are a matter of life and death.

We recently passed the one-year anniversary of Hurricane Helene and its devastating impact on Western North Carolina. When the storm hit, causing widespread flooding, it wasn’t just the power that was knocked out for weeks due to all the downed trees. Many cell towers were damaged, leaving people with little to no access to life-saving emergency information.

[…]

When I was able to load some government and emergency sites, problems with loading speed and website content became very apparent. We tried to find out the situation with the highways on the government site that tracks road closures. I wasn’t able to view the big slow loading interactive map and got a pop-up with an API failure message. I wish the main closures had been listed more simply, so I could have seen that the highway was completely closed by a landslide.

[…]

With a developing disaster situation, obviously not all information can be perfect. During the outages, many people got information from the local radio station’s ongoing broadcasts. The best information I received came from an unlikely place: a simple bulleted list in a daily email newsletter from our local state representative. Every day that newsletter listed food and water, power and gas, shelter locations, road and cell service updates, etc.

Limited connectivity isn’t something that only happens during natural disasters. It can happen all the time in our daily lives. In more rural areas around me, service is already pretty spotty. In the past, while working outdoors in an area without Wi-Fi, I’ve found myself struggling to load or even find instruction manuals or how-to guides from various product manufacturers.

Just using Semantic HTML and the correct native elements, we also can set a baseline for better accessibility. And make sure interactive elements can be reached with a keyboard and screen readers have a good sense of what things are on the page. Making websites responsive for mobile devices is not optional, and devs have had the CSS tools and experience to do this for over a decade. Information architecture and content is important to plan and revisit. What content are you really trying to provide and how do you get to it?

Source: Sparkbox

Image: Pierre Borthiry - Peiobty
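The two ideas in this post, a plain list of updates in semantic HTML and a very small page, can be sketched together in a few lines of Python. This is a minimal illustration rather than anything from the Sparkbox article: the function names `render_updates` and `page_weight_bytes` are hypothetical, the sample closure is an invented placeholder, and "weight" here counts only the uncompressed HTML, not images, fonts, or a favicon.

```python
from html import escape


def render_updates(title, items):
    """Render a heading and a plain bulleted list as a small semantic HTML page."""
    bullets = "\n".join(f"        <li>{escape(item)}</li>" for item in items)
    return (
        "<!doctype html>\n"
        '<html lang="en">\n'
        "  <head>\n"
        '    <meta charset="utf-8">\n'
        '    <meta name="viewport" content="width=device-width, initial-scale=1">\n'
        f"    <title>{escape(title)}</title>\n"
        "  </head>\n"
        "  <body>\n"
        "    <main>\n"
        f"      <h1>{escape(title)}</h1>\n"
        "      <ul>\n"
        f"{bullets}\n"
        "      </ul>\n"
        "    </main>\n"
        "  </body>\n"
        "</html>\n"
    )


def page_weight_bytes(html):
    """Size of the HTML alone, in bytes, once encoded as UTF-8."""
    return len(html.encode("utf-8"))


if __name__ == "__main__":
    # Invented placeholder data, not a real closure report.
    page = render_updates("Road closures", ["Example Road: closed by landslide"])
    print(page)
    print(page_weight_bytes(page), "bytes")
```

A page like this has no scripts and no map to fail, so the list is readable as soon as the HTML arrives, and native elements such as `<main>`, `<h1>`, and `<ul>` give screen readers the structure without extra work.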

Celebrating the lesser-known at the Internet Archive

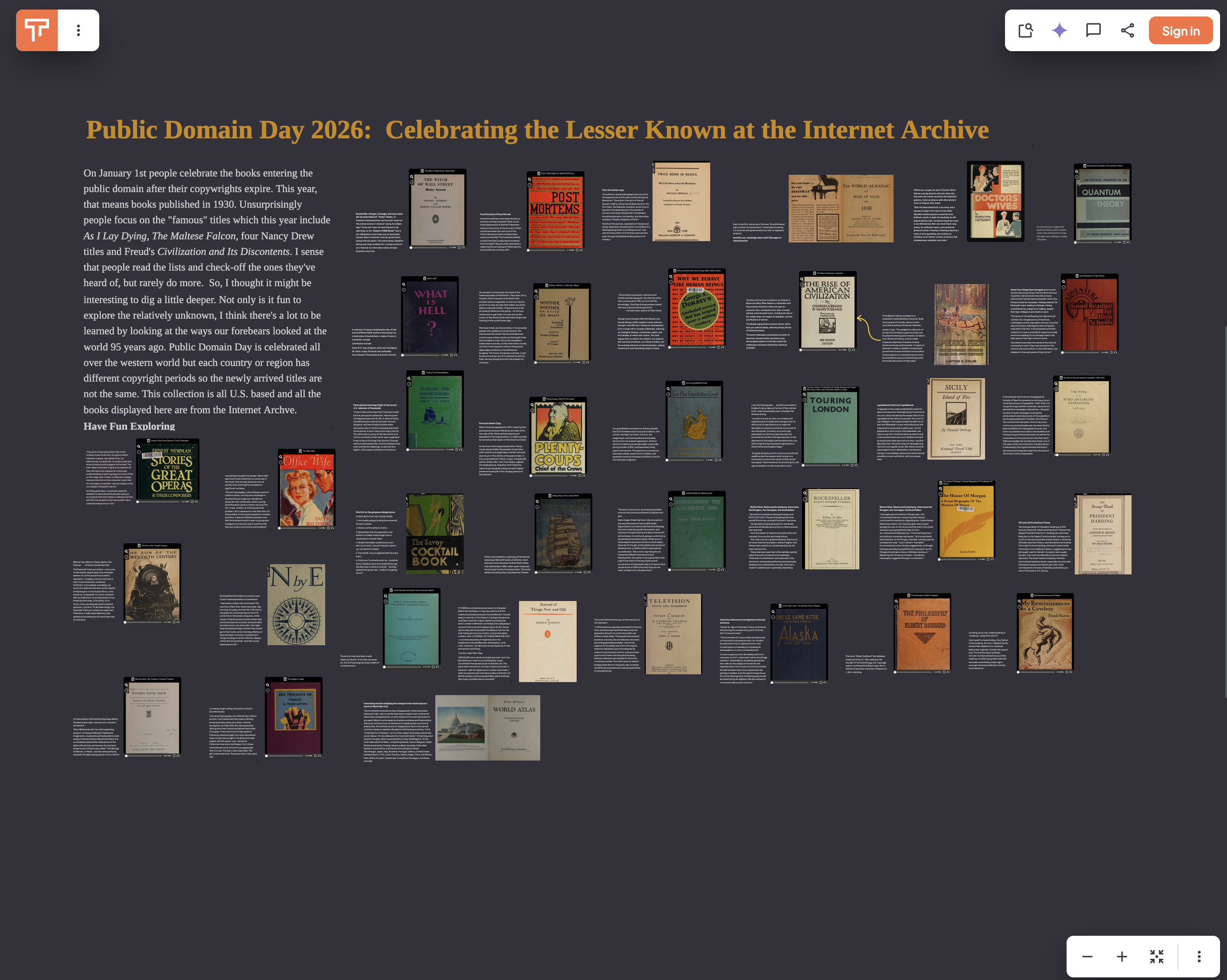

Bryan Alexander, a longtime reader of Thought Shrapnel, shared this with me. January 1st is known as ‘Public Domain Day’ because new works become freely available when their copyright expires.

This interactive ‘tapestry’ was created by Bob Stein, who is founder and co-director of The Institute for the Future of the Book. It’s worth investigating!

On January 1st people celebrate the books entering the public domain after their copyrights expire. This year, that means books published in 1930. Unsurprisingly people focus on the “famous” titles which this year include As I Lay Dying, The Maltese Falcon, four Nancy Drew titles and Freud’s Civilization and Its Discontents. I sense that people read the lists and check-off the ones they’ve heard of, but rarely do more. So, I thought it might be interesting to dig a little deeper. Not only is it fun to explore the relatively unknown, I think there’s a lot to be learned by looking at the ways our forebears looked at the world 95 years ago. Public Domain Day is celebrated all over the western world but each country or region has different copyright periods so the newly arrived titles are not the same. This collection is all U.S. based and all the books displayed here are from the Internet Archive.

Source: tapestries.media

The Questions

One of the great things about Are.na is that you find all kinds of links and clips that people have shared. Sometimes, though, you don’t know where they came from, which is the case for this great list of questions.

They’re very pertinent to my life right now and, I should imagine, to the lives of quite a few people at the beginning of a new year.

Source: thorge xyz

Avoiding 'hacklore'

I mentioned a few months ago the hysteresis effect which means there’s always a lag — and sometimes a big lag — between the state of things and our response to it.

Advice around privacy and security is a good example of this. People are often way out of date with what constitutes good practice. This open letter from “current and former Chief Information Security Officers (CISOs), security leaders, and practitioners” points to advice to avoid public wifi, never charge from public USB ports, and to regularly ‘clear cookies’ as being pointless.

This constitutes what they call “hacklore” (a blend of “hacking” and “folklore”): modern urban legends about digital safety. It “spreads quickly and confidently… as if it were hard-earned wisdom” but “like most folklore, it isn’t grounded in reality, no matter how plausible it sounds.”

Instead of this hacklore they suggest the following, and have a newsletter which you might want to subscribe to if this piques your interest:

Keep critical devices and applications updated: Focus your attention on the devices and applications you use to access essential services such as email, financial accounts, cloud storage, and identity-related apps. Enable automatic updates wherever possible so these core tools receive the latest security fixes. And when a device or app is no longer supported with security updates, it’s worth considering an upgrade.

Enable multi-factor authentication (“MFA”, sometimes called 2FA): Prioritize protecting sensitive accounts with real value to malicious actors such as email, file storage, social media, and financial systems. When possible, consider “passkeys”, a newer sign-in technology built into everyday devices that replaces passwords with encryption that resists phishing scams — so even if attackers steal a password, they can’t log in. Use SMS one-time codes as a last resort if other methods are not available.

Use strong passphrases (not just passwords): Passphrases for your important accounts should be “strong.” A “strong” password or passphrase is long (16+ characters), unique (never reused under any circumstances), and randomly generated (which humans are notoriously bad at doing). Uniqueness is critical: using the same password in more than one place dramatically increases your risk, because a breach at one site can compromise others instantly. A passphrase, such as a short sentence of 4–5 words (spaces are fine), is an easy way to get sufficient length. Of course, doing this for many accounts is difficult, which leads us to…

Use a password manager: A password manager solves this by generating strong passwords, storing them in an encrypted vault, and filling them in for you when you need them. A password manager will only enter your passwords on legitimate sites, giving you extra protection against phishing. Password managers can also store passkeys alongside passwords. For the password manager, use a strong passphrase since it protects all the others, and enable MFA.

Source: Stop Hacklore! Open Letter (www.hacklore.org/letter)

Image: CC BY Robert Lord
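The letter's advice on passphrases (long, unique, and randomly generated, since humans are bad at randomness) can be illustrated with a short Python sketch. This is an assumption-laden example, not something from the letter: the names `make_passphrase` and `entropy_bits` are hypothetical, and the 16-word `WORDS` list is a stand-in that is far too small for real use. In practice a password manager's built-in generator does this job.

```python
import math
import secrets

# Hypothetical stand-in word list, far too small for real use.
# A real list holds thousands of words (the EFF long diceware list has 7,776).
WORDS = [
    "anchor", "breeze", "candle", "dapple", "ember", "fennel", "glacier", "harbor",
    "indigo", "juniper", "kettle", "lantern", "meadow", "nutmeg", "orchid", "pebble",
]


def make_passphrase(words, count=5, sep=" "):
    """Join `count` words picked with the operating system's secure random source."""
    if count < 1:
        raise ValueError("count must be at least 1")
    return sep.join(secrets.choice(words) for _ in range(count))


def entropy_bits(list_size, count):
    """Bits of entropy when each word is drawn uniformly and independently."""
    return count * math.log2(list_size)


if __name__ == "__main__":
    print(make_passphrase(WORDS))
    # Strength depends on the size of the list, not on how odd the words look.
    print(round(entropy_bits(len(WORDS), 5), 1), "bits with this toy list")
    print(round(entropy_bits(7776, 5), 1), "bits with a 7,776-word list")
```

The sketch uses `secrets` rather than `random` because the former is designed for security-sensitive choices. The entropy calculation shows why the word list matters: five words from a 7,776-word list give roughly 64.6 bits, while the same five words from the toy list give only 20.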

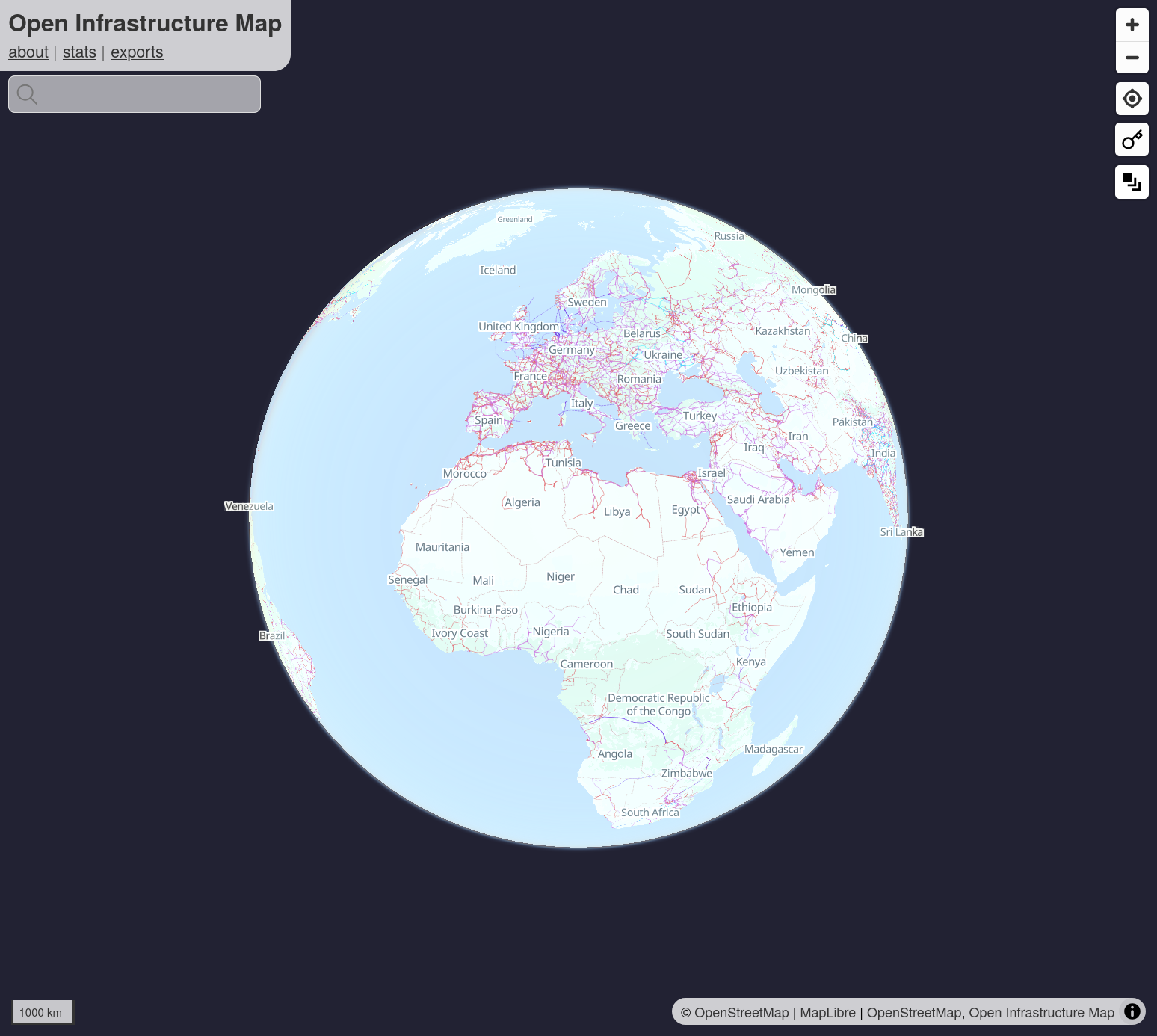

Open Infrastructure map

This is fascinating, both in terms of the macro picture and when you zoom in to where you live to see the hidden infrastructure that literally powers our world.

Source: Open Infrastructure Map

Post-digital authenticity

I agree with Ava, the author of this post, who effectively says that the internet has been stolen from us by Big Tech and people pushing a “post-authenticity” culture. Going back to offline for verification feels good at first, but steals something from those for whom the internet was liberating.

Looking around the internet, it’s clear that digital representations have become cheap, too perfect, and easily fabricated, and the offline world is increasingly the primary source of confirmation.

[…]

Want to know whether someone really studied, wrote that exam, or is a suitable job candidate? Direct interaction, live problem-solving and in-person demonstrations are the way to go now. Claims of expertise, portfolios, blog posts, code projects, certificates, and even academic records can be fabricated or enhanced by AI online.

[…]

We could call this post-digital authenticity. I know that social media platforms are currently pushing a sort of post-authenticity culture instead, where honesty and truth no longer matters and contrived and fabricated experiences for entertainment (ragebait, AI…) get more attention; but I think many, many people are tired of being constantly lied to, or being unable to trust their senses. I assume that the fascination with the totally fake that some people still have now is shortlived.

This step backwards into the offline feels healing at first, but also hurts, in a way. With all the valid criticisms, the internet still was a rather accessible place to finally find out the truth about events, avoid state censorship, and get to know people differently. It was especially good for the people who could not experience the same offline: People in rural areas, disabled and chronically ill people, queer people living far out and away from their peers, and more. It sucks that while others can and will return to a more authentic offline life, the ones left behind in a wasteland of mimicry are the ones who have always been left out.

Source: …hi, this is ava

Image: Igor Omilaev

Humans exhibit analogues of LLM pathologies

Over on my personal blog I published a post today about AGI already being here and how it’s affecting young people. What we don’t seem to be having a conversation about at the moment, other than the ‘sycophancy’ narrative, is why some people prefer talking to LLMs over human beings.

Which brings us to this post, which is not about the problems of conversing with AI, but about applying some of those critiques to interacting with humans. It should definitely be taken in a tongue-in-cheek way, but it does point towards a wider truth.

Too narrow training set

I’ve got a lot of interests and on any given day, I may be excited to discuss various topics, from kernels to music to cultures and religions. I know I can put together a prompt to give any of today’s leading models and am essentially guaranteed a fresh perspective on the topic of interest. But let me pose the same prompt to people and more often than not the reply will be a polite nod accompanied by clear signs of their thinking something else entirely, or maybe just a summary of the prompt itself, or vague general statements about how things should be. In fact, so rare it is to find someone who knows what I mean that it feels like a magic moment. With the proliferation of genuinely good models—well educated, as it were—finding a conversational partner with a good foundation of shared knowledge has become trivial with AI. This does not bode well for my interest in meeting new people.

[…]

Failure to generalize

By this point, it’s possible to explain what happens in a given situation, and watch the model apply the lessons learned to a similar situation. Not so with humans. When I point out that the same principles would apply elsewhere, their response will be somewhere along the spectrum of total bafflement on the one end and on the other, a face-saving explanation that the comparison doesn’t apply “because it’s different”. Indeed the whole point of comparisons is to apply same principles in different situations, so why the excuse? I’ve learned to take up such discussions with AI and not trouble people with them.

[…]

Indeed, why am I even writing this? I asked GPT-5 for additional failure modes and found more additional examples than I could hope to get from a human:

Beyond the failure modes already discussed, humans also exhibit analogues of several newer LLM pathologies: conversations often suffer from instruction drift, where the original goal quietly decays as social momentum takes over; mode collapse, in which people fall back on a small set of safe clichés and conversational templates; and reward hacking, where social approval or harmony is optimized at the expense of truth or usefulness. Humans frequently overfit the prompt, responding to the literal wording rather than the underlying intent, and display safety overrefusal, declining to engage with reasonable questions to avoid social or reputational risk. Reasoning is also marked by inconsistency across turns, with contradictions going unnoticed, and by temperature instability, where fatigue, emotion, or audience dramatically alters the quality and style of thought from one moment to the next.

Source: embd.cc

Image: Ninthgrid

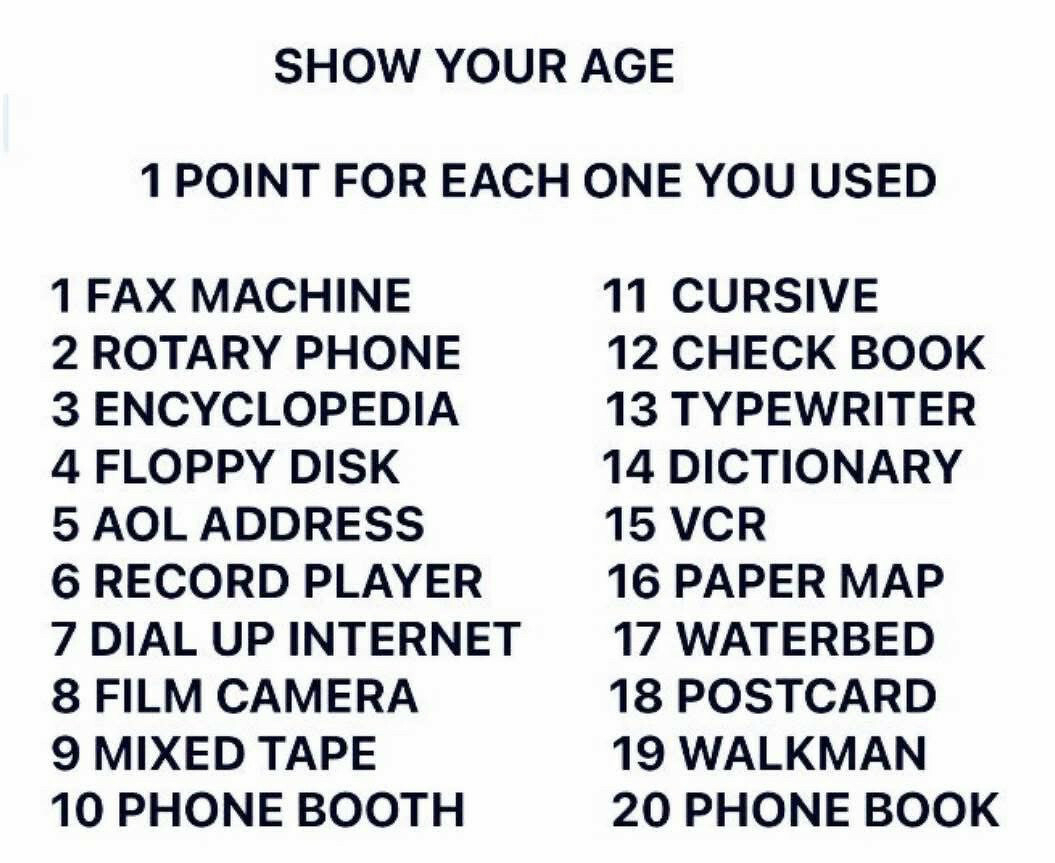

19/20 for me. How about you?

A university degree is now more like a 'visa' than a guaranteed route to professional success

Earlier this week, I published at my newly-revamped personal blog a post entitled Your mental models are out of date. The main thrust of what I was saying was that young people are getting advice from older people whose understanding of the world does not mesh with the way things really are in the mid-2020s.

This article in The Guardian is helpful for understanding the other side of the argument from a policy perspective. My opinion is that as many young people as want to go to university should be able to go, and that it’s our duty as a society to enable that to happen as efficiently as possible. Yes, that means that the value of a ‘degree’ as a chunky proxy credential decreases, but that’s why we should have more tailored, up-to-date, granular credentials in any case.

Prof Shitij Kapur, the head of King’s College London, said the days when universities could promise that their graduates were certain to get good jobs are over, in an era where nearly half the population enters higher education.

Kapur said a university degree is now more like a “visa” than a guaranteed route to professional success, a reflection of the shrinking graduate pay premium and the increased competition from AI and other graduates from around the world.

“The competition for graduate jobs is not just all because of AI filling out forms or AI taking away jobs. It’s also because of the stalling of our economy and causing a relative surplus of graduates. So the simple promise of a good job if you get a university degree, has now become conditional on which university you went to, which course you took,” Kapur said.

“The old equation of the university as a passport to social mobility, meant that if you got a degree you were almost certain to get a job as a socially mobile citizen. Now it has become a visa for social mobility – it gives you the chance to visit the arena that has graduate jobs and the related social mobility, but whether you can make it there is not a guarantee.”

[…]

Figures from the Department for Education show that England’s graduates still enjoy higher rates of employment and pay than non-graduates, although the real earnings of younger graduates have been stagnant for the past decade. Kapur points out that the national economy’s slow growth coincided with England’s introduction of £9,000 tuition fees and student loans in 2012, making it “the worst possible time” to transition to individual student loans.

In 2022, Kapur wrote a gloomy discussion of UK higher education that described a “triangle of sadness” between students burdened with debt and pessimistic prospects, a government that used inflation to cut tuition fees, and overstretched university staff trapped in between.

Source: The Guardian

Image: Christian Lendl

An attack on sovereignty itself

I didn’t think I could be shocked by anything Trump says or does, but the US invasion of Venezuela and snatching of President Maduro and his wife is, quite simply, chilling. I’m no expert on South American politics, but this seems to go beyond what we saw with the Gulf War, as it’s a single country operating in violation of international law, and doing seemingly whatever it wants.

Just as, so the saying goes, criminals learn to be better crooks in prison, it seems like Trump has learned to be more of a bully by being exposed to dictators such as Putin. This article by Paris Marx, the Canadian tech and cultural critic, is a useful primer. As he points out, Denmark should be worried about Greenland and, perhaps to a slightly lesser extent, Canadian politicians need to sit up and take notice.

For me, this is another reason to be reducing our dependence on US technology. Thankfully, Marx has a guide for that — and reclaiming the stack is something that I plan to explore more with Tom Watson in 2026.

As Trump addressed the people of the world, he couldn’t help himself from going off-script. After previously failing to overthrow Maduro in 2019, he’d finally got his man — and access to the world’s largest proven oil reserves. Introducing the general who led the operation, Trump declared the invasion of Venezuela was an “attack on sovereignty” itself. Countries of the world should shudder at the implications.

[…]

Many Western countries are reticent to challenge the United States and the actions of the Trump administration too forcefully for a number of reasons. They’re militarily dependent on the United States, through NATO and other alliances, and Trump has already shown he can hurt them economically and continually threatens to tighten the screws. There’s also the technological dimension: they’re not only locked into digital systems controlled by US tech companies and dependent on US technology, but they also fear scaring away investment from some of the largest companies in the world and the deep-pocketed investors who’ve prospered from their rise.

As the sanctioning of ICC, UN, and European officials shows: digital sovereignty is paramount. The United States can cut off anyone from the technological systems its companies control, and it’s willing to use that power against anyone who tries to stand in the way of its interests, those of its client states, and largest companies. The bulk of that work must happen at the government level, to create the conditions, deploy the funding, and create the structures to rapidly build and deploy a new technological infrastructure. But individuals can still make a difference by pulling back from US tech services as much as feasibly possible.

Source: The Disconnect

Image: Jon Tyson

Toward an Open Source contribution standard

Emma Irwin, who I overlapped with during my time at Mozilla, is onto something with this idea of a ‘standard’ way of making visible contributions to Open Source. If you, or someone you know, is in a position to support her to do this work, please do get in touch with her!

Evaluating open source contributions, especially at the organizational level, remains frustratingly opaque. Who’s actually investing in the projects we all depend on? Right now, there’s no reliable way to say definitively. That lack of transparency is a true barrier to sustainability efforts.

[…]

GitHub, GitLab and Codeberg contribution graphs are helpful as a snapshot, but you cannot tell if a customer paid for that work; if it relates to employed or personal time - it also doesn’t capture non-coding contribution, like event sponsorship, board membership, code of conduct committee membership and more - that really make up the big picture.

I no longer think this belongs in a single product’s workflow. Instead, I believe we need a standard: something communities can adopt and adapt to their own values, implemented through CI/CD workflows.

Not unlike a Code of Conduct, really: a template that defines what contributions count, how value is measured, and how attribution flows. Each community decides what matters to them. And as communities learn, they contribute back to the evolution of that standard.

Source: Sunny Developer

Image: Luke Southern

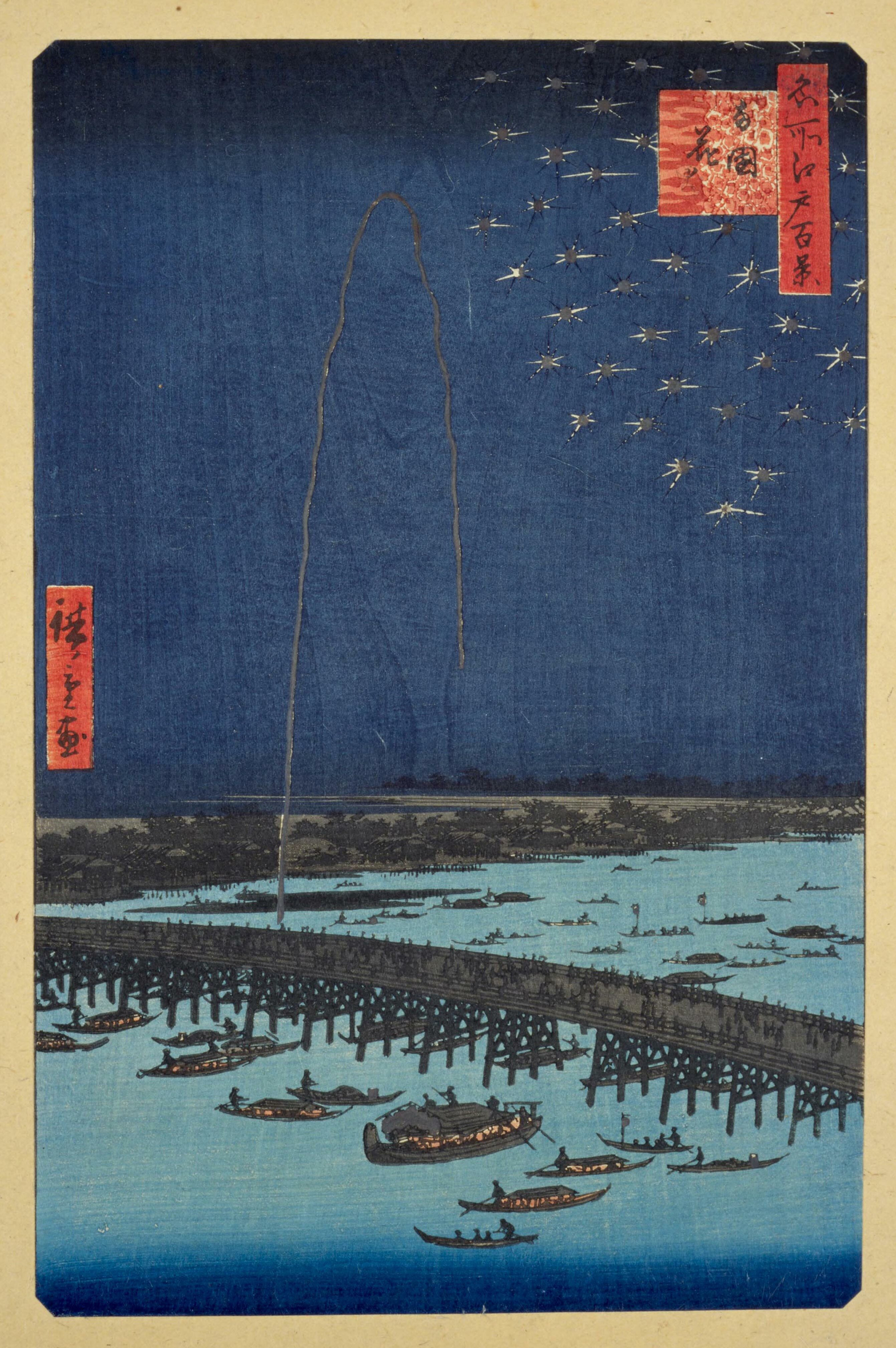

The Woodblock Prints of Utagawa Hiroshige

I bumped into my old Physics teacher over the holidays, and found that he still has a website about Japanese Ukiyo-e woodblock prints. He’s the reason I started liking Japanese art — and the reason I created my first website, aged 17. Thanks, John!

Source: The Woodblock Prints of Utagawa Hiroshige

Image: Fireworks at Ryōgoku

The rise of entrepreneurial heroism

This essay, which mixes philosophical reflections, a new word, and popular culture, is like catnip for me. In it, W. David Marx argues that we’ve essentially redefined what a “genius” means.

Instead of the lone artist starving in the garret, geniuses these days are those like Taylor Swift who fuse art and business for (huge) commercial success. As Marx argues in his conclusion “the designation of certain people as geniuses has long-term consequences” as it affects future behaviour. Without people doing things differently then, as he quotes John D. Graham as saying, “all the cultural activities of humanity would soon degenerate into clichés.”

Russian Formalist Viktor Shklovsky built his theory of art around the idea of ostranie — artworks that “defamiliarize” the familiar. In his book The Prison-House of Language, Frederic Jameson explains that ostranie-based art is “a way of restoring conscious experience, of breaking through deadening and mechanical habits of conduct.” And even when art fails to achieve full mind-shifts, its strangeness can at least be a source of new stimuli. Avant-garde artists in the 20th century believed art should always be a curveball; a fastball is not art.

[…]

In this, Swift offers us something new: She’s an anti-Kantian genius. Her work is always “direct” and “relatable” — no curveballs, no ostranie. Her constant deployment of existing conventions is not an artistic failure, but a brilliant artistic statement of audience expectation management. Burt lauds Swift for her “deep attachment to the verse-chorus-verse-chorus-bridge conventions of modern songwriting.” This is true: Swift is quite dedicated to specific patterns from recent hits. Many, many musical artists have used the I V vi IV chord progression: Better than Ezra’s “Good,” Justin Bieber’s “Baby,” and Rebecca Black’s “Friday.” Maybe some of these artists even wrote one additional song repeating the same chord pattern. Swift, in her dedication, has actively chosen to deploy the same recognizable chord sequence 21 times. (And she’s used the alternatively famous IV I V vi and vi IV I V progressions at least 9 times each.)

[…]

Swift’s genius status also demonstrates how little tension remains between art and business. In my new book Blank Space, I discuss the rise of what I call entrepreneurial heroism: “the glorification of business savvy as equivalent to artistic genius.” Avant-garde artists never made billions, because ostranie is a bad strategy for securing extraordinary profit or maximizing shareholder value. But when genius is simply “clever deployment of the thing everyone already wants,” there is no longer an inherent conflict with business logic.

Source: Culture: An Owner’s Manual

Image: Rosa Rafael

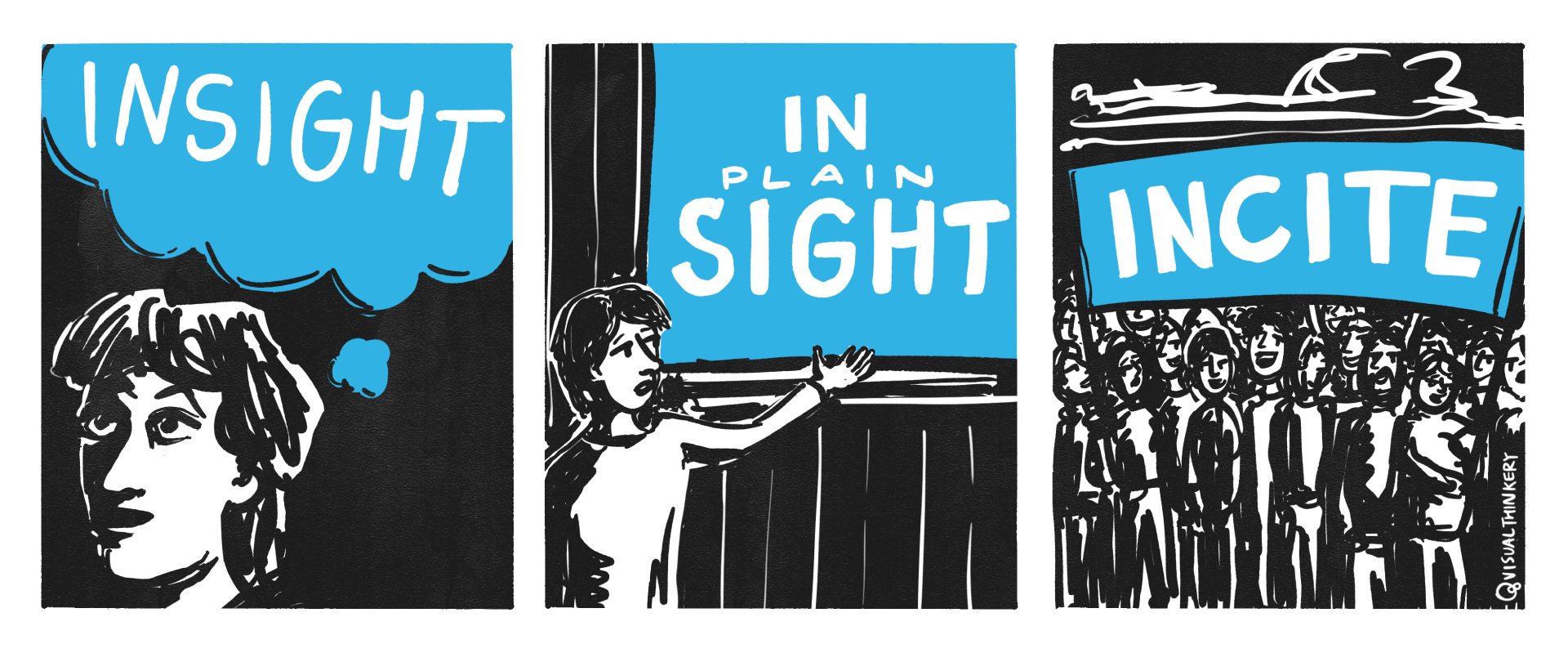

For every snarky comment, there are 10x as many people admiring your work

I’ve talked many times about “increasing your serendipity surface” and you can hear me discussing the idea on this podcast episode from 2024. In this post, Aaron Francis breaks things down into ‘doing the work’, ‘hitting the publish button’, and ‘capturing the luck.’

It’s a useful post, although I don’t think Francis talks enough about the network/community aspect and being part of something bigger than yourself. It’s not all about the personal brand! That’s why I prefer the term ‘serendipity’ to ‘luck’: I’m more interested in the connections than the career advancement opportunities, although they often go hand-in-hand.

No matter how hard you work, it still takes a little bit of luck for something to hit. That can be discouraging, since luck feels like a force outside our control. But the good news is that we can increase our chances of encountering good luck. That may sound like magic, but it’s not supernatural. The trick is to increase the number of opportunities we have for good fortune to find us. The simple act of publishing your work is one of the best ways to invite a little more luck into your life.

[…]

How can we increase the odds of finding luck? By being a person who works in public. By doing work and being public about it, you build a reputation for yourself. You build a track record. You build a public body of work that speaks on your behalf better than any resume ever could.

[…]

Sharing things you’re learning or making is not prideful. People are drawn to other people in motion. People want to follow along, people want to learn things, people want to be a part of your journey. It’s not bragging to say, “I’ve made a thing and I think it’s cool!” Bringing people along is a good thing for everyone. By publishing your work you’re helping people learn. You’re inspiring others to create.

[…]

Publishing is a skill, it’s something you can learn. You’ll need to build your publishing skill just like you built every other skill you have.

Don’t be afraid to publish along the way. You don’t have to wait until you’re done to drop a perfect, finished artifact from the sky (in fact, you may use that as an excuse to never publish). People like stories, so use that to your benefit. Share the wins, the losses, and the thought processes. Bring us along! If you haven’t been in the habit of sharing your work, it’s going to feel weird when you start. That’s normal! Keep going, you get used to it.

[…]

The formula may be simple, but I’ll admit it’s not always easy. It’s scary to put yourself out there. It’s hard to open yourself up to criticism. People online can be mean. But for every snarky comment, there are ten times as many people quietly following along and admiring not only your work, but your bravery to put it out publicly. And at some point, one of those people quietly following along will reach out with a life-changing opportunity and you’ll think, “Wow, that was lucky.”

Source: The ReadME Project

Image: CC BY-NC-ND Visual Thinkery

The same tools that are keeping some people connected to reality are blurring the lines of what is real for others

I haven’t included the anecdotes cited by Charlie Warzel in his article for The Atlantic but they’re worth a read. It’s not just kids who spend a lot of time on their devices, but increasingly older people too.

As ever, people are quick to rush to moral judgement, and I’m sure there are plenty of problematic cases of people prioritising scrolling over socialising. However, life is different post-pandemic, and sometimes we judge others in ways we wouldn’t want them to judge us.

Screen-time panics typically position children as being without agency, completely at the mercy of evil tech companies that adults must intervene to defend against. But a version of the problem exists on the opposite side of the age spectrum, too: instead of a phone-based childhood, a phone-based retirement.

[…]

Older people really are spending more time online, according to various research, and their usage has been moving in that direction for years. In 2019, the Pew Research Center found that people 60 and older “now spend more than half of their daily leisure time, four hours and 16 minutes, in front of screens,” many watching online videos. A lot of this seems to be happening on YouTube: This year, Nielsen reported that adults 65 and up now watch YouTube on their TVs nearly twice as much as they did two years ago. A recent survey of Americans over 50 revealed that “the average respondent spends a collective 22 hours per week in front of some type of screen.” And one 2,000-person survey of adults aged 59 to 77 showed that 40 percent of respondents felt “anxious or uncomfortable without access” to their device.

[…]

The thing to remember is that not all screen use is equal, especially among older people. Some research suggests that spending time on devices may be linked to better cognitive function for people over 50. Word games, information sleuthing, instructional videos, and even just chatting with friends can provide positive stimuli. Vahia suggests that online habits that might be concerning for young or middle-aged people ought to be considered differently for older generations. “High technology use in teenagers and adolescents is often associated with worse mental health and is a predictor of sort of more isolation and loneliness, even depression,” he told me. “Whereas in older adults, engaging in technology seems to be protecting them from isolation and loneliness.”

[…]

This is a muddled mess. The same tools that are keeping some people connected to reality are blurring the lines of what is real for others. But rather than rush to judgment, younger people should use their concern to open up a conversation—to put down the phones and talk.

Source: The Atlantic

Image: Frankie Cordoba

The world is rarely as neat as any scenario

The TL;DR of this lengthy post by Tim O’Reilly and Mike Loukides is that, as ever, AI is likely to land somewhere between “something we’ve seen before” (i.e. normal technology) and “something completely different” (i.e. the singularity).

In other words, it is likely to be a gamechanger, but probably not in the way currently envisaged. One example is that AI’s energy demands may help us transition to renewables more quickly. It could also revolutionise some industries quickly while taking decades to filter into others. The world is a complicated place.

At O’Reilly, we don’t believe in predicting the future. But we do believe you can see signs of the future in the present. Every day, news items land, and if you read them with a kind of soft focus, they slowly add up. Trends are vectors with both a magnitude and a direction, and by watching a series of data points light up those vectors, you can see possible futures taking shape.

[…]

For AI in 2026 and beyond, we see two fundamentally different scenarios that have been competing for attention. Nearly every debate about AI, whether about jobs, about investment, about regulation, or about the shape of the economy to come, is really an argument about which of these scenarios is correct.

Scenario one: AGI is an economic singularity. AI boosters are already backing away from predictions of imminent superintelligent AI leading to a complete break with all human history, but they still envision a fast takeoff of systems capable enough to perform most cognitive work that humans do today. Not perfectly, perhaps, and not in every domain immediately, but well enough, and improving fast enough, that the economic and social consequences will be transformative within this decade. We might call this the economic singularity (to distinguish it from the more complete singularity envisioned by thinkers from John von Neumann, I. J. Good, and Vernor Vinge to Ray Kurzweil).

In this possible future, we aren’t experiencing an ordinary technology cycle. We are experiencing the start of a civilization-level discontinuity. The nature of work changes fundamentally. The question is not which jobs AI will take but which jobs it won’t. Capital’s share of economic output rises dramatically; labor’s share falls. The companies and countries that master this technology first will gain advantages that compound rapidly.

[…]

Scenario two: AI is a normal technology. In this scenario, articulated most clearly by Arvind Narayanan and Sayash Kapoor of Princeton, AI is a powerful and important technology but nonetheless subject to all the normal dynamics of adoption, integration, and diminishing returns. Even if we develop true AGI, adoption will still be a slow process. Like previous waves of automation, it will transform some industries, augment many workers, displace some, but most importantly, take decades to fully diffuse through the economy.

In this world, AI faces the same barriers that every enterprise technology faces: integration costs, organizational resistance, regulatory friction, security concerns, training requirements, and the stubborn complexity of real-world workflows. Impressive demos don’t translate smoothly into deployed systems. The ROI is real but incremental. The hype cycle does what hype cycles do: Expectations crash before realistic adoption begins.

[…]

Every transformative infrastructure build-out begins with a bubble. The railroads of the 1840s, the electrical grid of the 1900s, the fiber-optic networks of the 1990s all involved speculative excess, but all left behind infrastructure that powered decades of subsequent growth. One question is whether AI infrastructure is like the dot-com bubble (which left behind useful fiber and data centers) or the housing bubble (which left behind empty subdivisions and a financial crisis).

The real question when faced with a bubble is: What will be the source of value in what is left? It most likely won’t be in the AI chips, which have a short useful life. It may not even be in the data centers themselves. It may be in a new approach to programming that unlocks entirely new classes of applications. But one pretty good bet is that there will be enduring value in the energy infrastructure build-out. Given the Trump administration’s war on renewable energy, the market demand for energy in the AI build-out may be its saving grace. A future of abundant, cheap energy rather than the current fight for access that drives up prices for consumers could be a very nice outcome.

[…]

The most likely outcome, even restricted to these two hypothetical scenarios, is something in between. AI may achieve something like AGI for coding, text, and video while remaining a normal technology for embodied tasks and complex reasoning. It may transform some industries rapidly while others resist for decades. The world is rarely as neat as any scenario.

Source: O’Reilly Radar

Image: Nahrizul Kadri

Choose your own inspirational adventure

I’d never thought of it like this, but essentially there are four genres of motivational advice. They all contradict each other, showing the context-dependency of such things.

Source: Are.na
