Octopus people

There are two types of people in life: those who make binary statements, and those who don’t.

But seriously, the older I get the more it feels like there are some people I’m drawn to who share certain qualities. A lot of those qualities are described in this post by Dave Kang outlining his Octopus Manifesto. The “octopus” part comes from having different “tentacles” focused on different things.

I’ve been living the Octopus Way for over a year now, and my thinking around this has evolved somewhat. When I first began this journey post-sabbatical, an octopus was just a more fun way to describe a generalist, polymath, or multi-whatever person.

But lately I’ve been wanting to draw the circle a little tighter, as I think Octopus People are actually a smaller subset of this group. One of the fun things about making up your own identity metaphor is you get to define the term. So in this edition I present my updated, tighter definition of an Octopus Person. I’m interested in finding my unique tribe of people, and I hope clarifying and narrowing my definition will help me specifically find more people who are in the same boat as me.

Without quoting paragraphs and paragraphs, here’s the first sentence of each of the numbered points on the manifesto:

- We walk our own life path.

- We are creative.

- We have unlimited curiosity.

- We tend to fly solo.

- We are octopreneurs.

- We like meandering.

- We are resourceful.

- We think different.

Source: Dave Kang’s Octopus Life

Image: Julia Kadel

A complex system, contrary to what people believe, does not require complicated systems and regulations and intricate policies. The simpler, the better. Complications lead to multiplicative chains of unanticipated effects.

(Nassim Nicholas Taleb, Antifragile)

Building your sense of agency by granting yourself permission to do the things you are already allowed to do

I no doubt shared this when I first read it, but I had reason recently to re-find this excellent post from Milan Cvitkovic containing a list of “things you’re allowed to do.”

Brewing in my mind is a longer post over at my main blog about what it means to have agency, and how to develop it in yourself and others. It’s not a fixed state, but something which fluctuates over time. It needs feeding, sometimes by giving yourself permission to just do things.

This is a list of things you’re allowed to do that you thought you weren’t, or didn’t even know you could.

I haven’t tried everything on this list, mainly due to cost. But you’d be surprised how cheap most of the things on this list are (especially the free ones).

Note that you can replace “hire” or “buy” with “barter for” or “find a DIY guide to” nearly everywhere below. E.g. you can clean the bathroom in exchange for your housemate doing a couple hours’ research for you.

Source: milan.cvitkovic.net

Image: Are.na

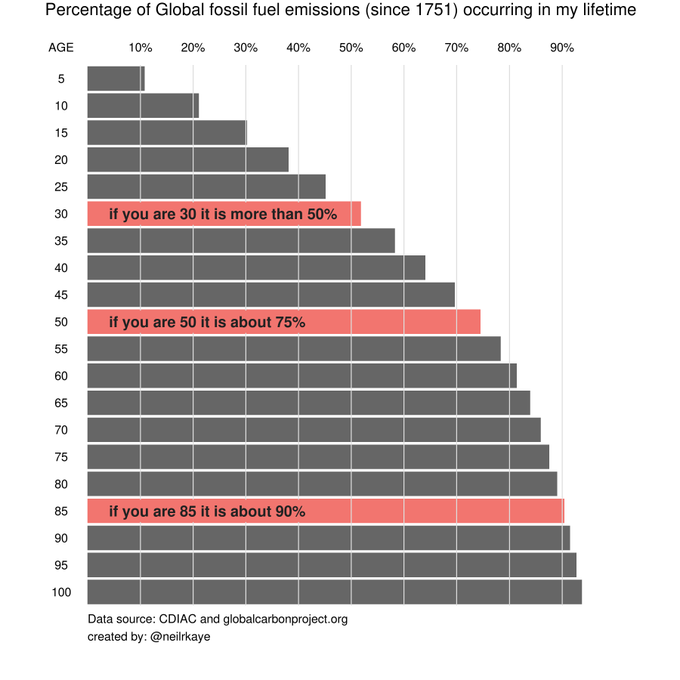

We are, effectively, being fracked to death.

I like experimenting with AI tools, but as I mentioned in a recent post about the Claude Constitution, I’m not a huge fan of the governance behind them. I also get that for those in the creative arts, and especially for those for whom AI has arrived midway through their career, the whole “innovation” thing feels like Armageddon.

This post from Andrew Sempere, “artist, designer, developer and internet old,” starts off with a heartfelt note about how he is desperately looking for work, which lends an appropriately melancholy vibe to what follows. Having really struggled this time last year, both in terms of work and life in general, I really feel for him.

It’s worth reading in its entirety, but here’s an extended excerpt, because he’s not wrong. But what can we salvage from the ruins? What can we create together?

To the extent that any of these AIs “think” at all, it’s because the models have strip-mined the last two decades of internet conversation. The conversation that we used to have in public. All of the blog posts, commentary, free software and detailed debates, RFCs and white papers. Free documentation and APIs and Stack Overflow posts. And now, as far as we can tell, this collective generative activity has almost completely ceased to be.

We have stopped talking to each other on the open channels.

We don’t “need” to anymore.

We definitely don’t want to anymore.

The current moment is so incredibly extractive, it has converted every ounce of human care, kindness and creativity into a “model,” which we then burn as much fossil fuel as possible to convert into a subscription service. We are being asked to pay for a chopped-and-screwed memory of the time we used to live in a semi-functional society.

We are, effectively, being fracked to death. And this isn’t an impending collapse, it’s already happened, we’re just living out the consequences right now.

It’s not the internet that’s dead, it’s the entire tech industry and it wasn’t an accident, it was a murder to try and cash in on the life insurance money. We’re not into late-stage capitalism, we’re fully in The Jackpot, and I fear nothing will ever get any better than it was about two years ago, when we ingested the entire program into itself and then just… gave up… trading all of our living friends for their ghosts.

It seems increasingly likely that we’re locked in a death-cult-groundhog-day timeloop now, forever, remixing only hit-parade records from 2023 again and again and again and again and again until we’re convinced they’re new.

I almost miss the era when there was a shitty startup every week.

[…]

I have memories. Vivid ones, of living in a different country, a different city, a different planet. I look at screenshots and photographs of life from as little as 18 months ago and it becomes difficult to believe that future ever existed. I don’t even feel right in my body, nothing seems to work, everything previously solid seems damaged, unstable, treacherous and unreliable. Things have glitched, gone sideways, completely wrong timeline. I’m told I’m wrong, that things are fine, that this is normal. We’ve always been this way.

So this might be just old-man shit, but I think the word I’m looking for is: bereft.

Source & image: Feral Research

Sometimes the work is rest

My one week of rest to give my autonomic system a break turned into two as, predictably, I caught whatever lurgy my daughter brought home from school. Two weeks away from exercise provided a bit of a reset, and I felt much better.

It reminds me of this excellent podcast episode.

Source: Tumblr

A bit more than a to-do list

I’m pretty sure I’ve come across anytype before, but I was reminded of it after asking for suggestions in the Freelancers Get Sh*t Done Slack. I’ve been using Google Tasks, which are nicely integrated with Google Calendar, for the last few years.

While I can still do that for my co-op to-dos, I need a different solution for my consultancy business, which I’ve switched to Proton. Lots of people use pen and paper, but I need the ability to include clickable links, etc.

While anytype is way more than just a tasks app, I do like the way it’s end-to-end encrypted, European, and the kind of Notion alternative I might actually use. I shall be experimenting…

Source: anytype

Because I learned a second thing at the end of my two days of vertigo: That my idea was terrible.

One of our recurring biases is assuming that our last experience of something, or somewhere, still reflects how it is today. For example, places I haven’t visited in over a decade, since before the pandemic, are almost certainly quite different now. The same applies to software and digital tools, which tend to evolve far faster than we expect.

If you haven’t been playing with AI tools (especially things like Cursor and Claude Code), then you really don’t know what you’re missing out on.

This post was shared with me by Tom Watson, who I did some noodling with on Friday in-person (I know, I know). I like the honest reflections in this post, and it meshes with my own experience building things with the help of a Little Robot Friend.

Within a few minutes of installing Cursor and setting up a handful of developmental studs, I had built a working prototype. Within 24 hours, I not only had a polished app; I was already at the limit of my initial idea, no longer just manufacturing what had been in my head, but trying to come up with new things to manifest. And within 48 hours, I was ruined. The comfortable physics that I thought governed Silicon Valley—that stuff takes time to build; that products need to be designed before they can be created; that computers cannot assume intent or interpolate their way through incomplete ideas—broke, utterly. It all worked too well, too fast. I was staggered, drunk on the Kool-Aid and high on the pills, unwell and off-brand. I knew that anyone can now build vibe-coded toys; I did not know that people with a basic familiarity with code could go much, much further.

Though it’s hard to benchmark how far I got in two days, this is my best guess: The app is roughly equivalent to what a designer and a couple professional engineers could build in a month or two. Granted, I didn’t build any of the scaffolding that a real company would—proper signup pages, hardened security policies, administrative features, “tests”—but the product expresses its core functionality as completely as any prototype that we showed Mode’s early investors and first customers. In 2013, it took us eight people, nine months, and hundreds of thousands of dollars to build something we could sell, and that was seen as reasonably efficient. Today, that feels possible to do with one person in two days.

Well, with one more caveat. Because I learned a second thing at the end of my two days of vertigo: That my idea was terrible.

The entire conceit didn’t work. My long-loved thesis, when rendered on a screen, was catastrophically bad. I did not want to start a business around my app. I did not want to take notes in my app. I did not want to use my app. I wanted to start over. Great chefs can come from anywhere, but not everyone can be a great chef.

Equally interesting is this bit, which talks about what happens when people don’t have to accept the “solutions” created by people who don’t experience their problems:

In other words, a lot of today’s technology is the levered ideas of technologists. It is a book store, run by an engineer from a hedge fund; it is computerized cash registers, from a social media founder and Oracle employees; it is fitness classes, built by a Bain consultant and an MIT grad; it is a note-taking app, built by someone who knows enough Typescript to build a note-taking app. But if these products succeed, it’s often more because of the technology than the idea of the technologist. It’s not that the idea was bad; it’s that the idea was not the transformational advantage. A fine CAD program beats a drafting table. A fine banking app beats driving to a branch. Even my app beats hand-written note cards. And because people who are technologists first, and architects or bankers or writers second, are the only people who can lever their ideas with technology, their ideas win.

Moreover, this isn’t just some accidental selection bias; this is the whole point of Silicon Valley. Flagship incubators like Y Combinator are built on the thesis that a smart kid with a computer and summer internship at Goldman Sachs can outwit all of American Express. That’s not because the kid understands the needs of payment processors better than people at American Express, or has better ideas than they do; it’s because the kid can build their idea.

But what if anyone can? What if lots of people at American Express can build stuff? What if someone who’s been an architect for twenty years can make the design software they’ve always wanted? What if a veteran investment banker can write a program that automatically generates pitch books? What if a real writer makes a note-taking app? What if software is the levered ideas of experts?

Source: benn.substack

Image: Kevin Ku

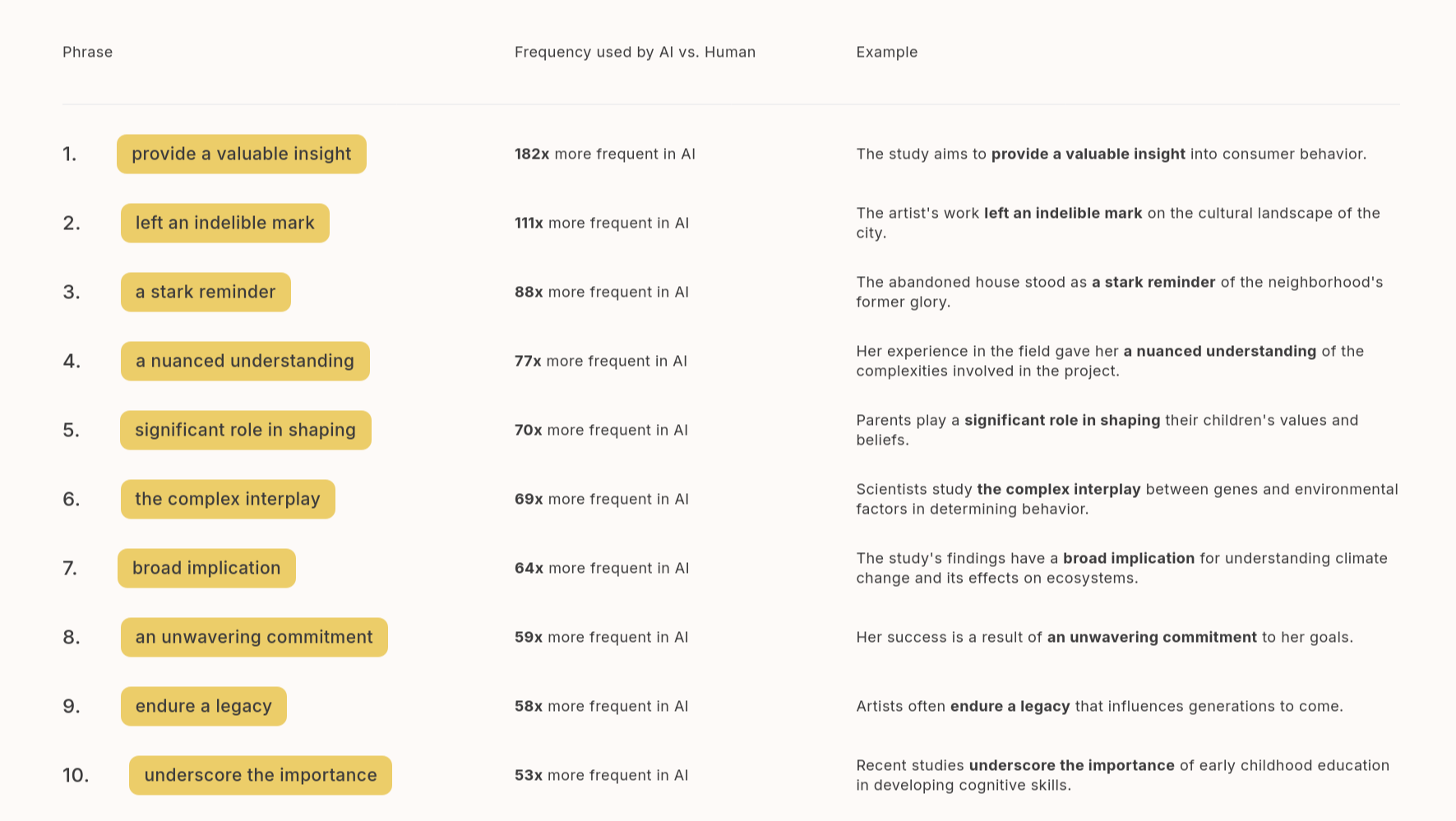

Words/phrases used more in AI-generated text

Whether or not you use LLMs as part of your workflow, you don’t want to be accused of “sounding like AI”. This guide gives a list of words and phrases that are (much) more commonly used in the output from LLMs. I’ve added it to my document of AI words/phrases to avoid. You’re welcome.

These words and phrases are ranked based on the frequency they appear in AI documents, compared to human documents in our research of 3.3 million texts.
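GPTZero doesn’t spell out its exact scoring in this excerpt, but the general idea of “frequency in AI documents, compared to human documents” can be sketched as a ratio of relative word frequencies. This is a toy illustration under that assumption: `rank_by_frequency_ratio` is a hypothetical helper, the add-one smoothing is my choice, and the tokenisation (lowercase, split on whitespace) is deliberately naive.

```python
from collections import Counter


def rank_by_frequency_ratio(ai_docs, human_docs, smoothing=1.0):
    """Rank words by how much more often they appear in AI text than human text.

    Hypothetical sketch: relative frequency in the AI corpus divided by
    relative frequency in the human corpus. Add-one (Laplace) smoothing
    stops words missing from one corpus causing division by zero.
    """
    ai_counts = Counter(w for doc in ai_docs for w in doc.lower().split())
    human_counts = Counter(w for doc in human_docs for w in doc.lower().split())
    ai_total = sum(ai_counts.values())
    human_total = sum(human_counts.values())
    vocab = set(ai_counts) | set(human_counts)
    v = len(vocab)

    def ratio(word):
        # Smoothed relative frequency of the word in each corpus.
        ai_rate = (ai_counts[word] + smoothing) / (ai_total + smoothing * v)
        human_rate = (human_counts[word] + smoothing) / (human_total + smoothing * v)
        return ai_rate / human_rate

    # Highest ratio first: the words most "overused" by the AI corpus.
    return sorted(vocab, key=ratio, reverse=True)


ranked = rank_by_frequency_ratio(
    ["let us delve into the tapestry", "we delve deeper"],
    ["we looked into the problem", "let us look deeper"],
)
print(ranked[:2])
```

A real study of 3.3 million texts would need proper tokenisation, phrase (n-gram) counting and some minimum-count threshold, but the ranking principle is the same.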

Source: GPTZero

National security assessment on global ecosystems

It’s always worth looking at what governments decide to publish when the public are busy looking the other way. Recently, Trump’s actions around Greenland have been in the news, and so the UK government thought it would be a good time to publish this.

It’s only 14 pages long and easily scannable, but TL;DR: “Significant disruption to international markets as a result of ecosystem degradation or collapse will put UK food security at risk.”

This assessment is an analysis of how global biodiversity loss and ecosystem collapse could affect UK national security.

It shows how environmental degradation can disrupt food, water, health and supply chains, and trigger wider geopolitical instability. It identifies 6 ecosystems of strategic importance for the UK and explores how their decline could drive cascading global impacts.

This assessment, which was developed by analysts and experts across HM Government, supports long-term resilience planning. Publishing the assessment highlights opportunities for innovation, green finance and global partnerships that can drive growth while safeguarding the ecosystems that underpin our collective security and prosperity.

Source: GOV.UK

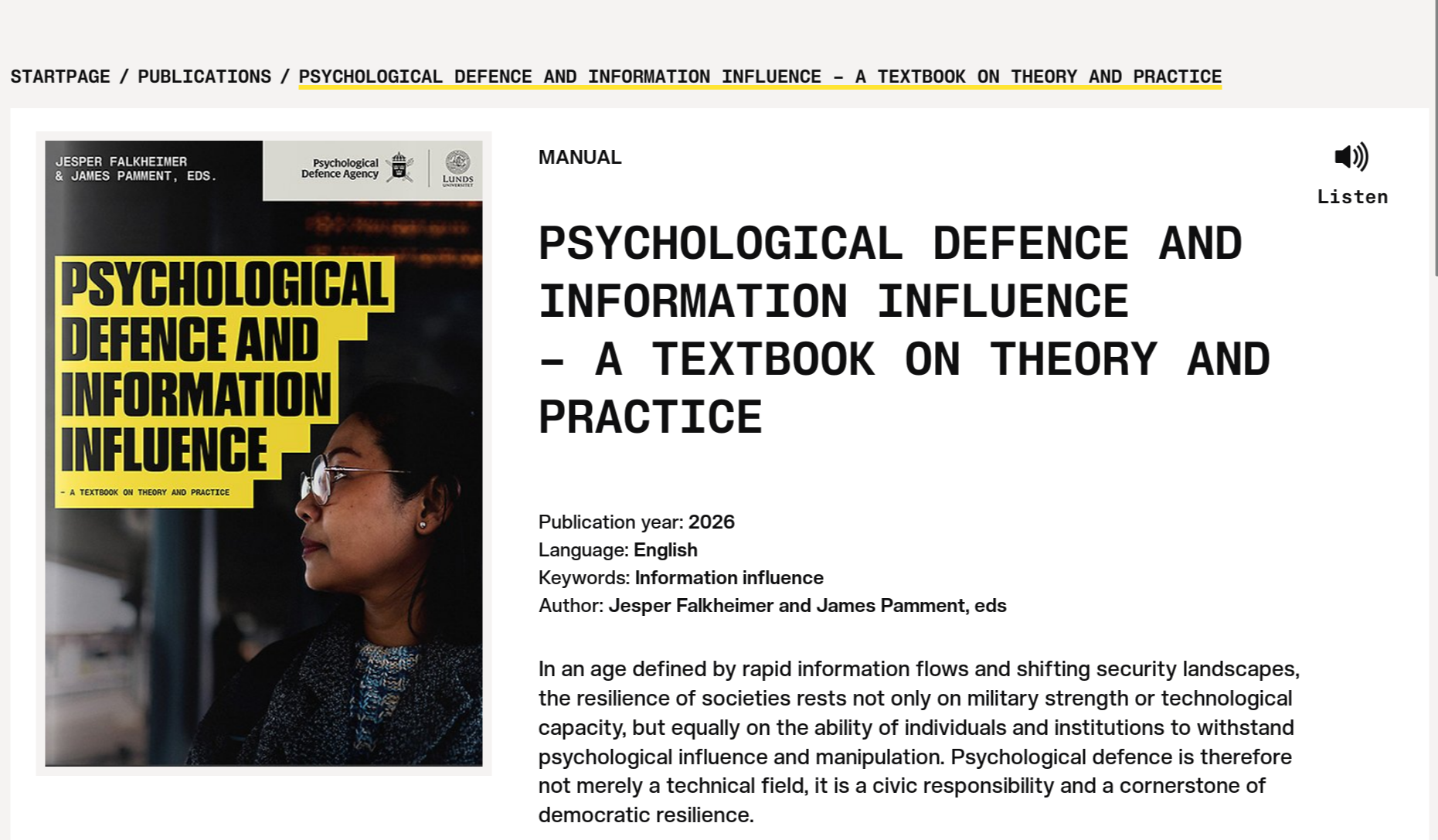

Psychological Defence and Information Influence

This looks interesting. It’s from Sweden’s Psychological Defence Agency, whose website has lots of other worthwhile material on it.

In an age defined by rapid information flows and shifting security landscapes, the resilience of societies rests not only on military strength or technological capacity, but equally on the ability of individuals and institutions to withstand psychological influence and manipulation. Psychological defence is therefore not merely a technical field, it is a civic responsibility and a cornerstone of democratic resilience.

This textbook is the first of its kind: a comprehensive overview of key issues related to psychological defence and information influence written by leading scholars on each topic. The textbook is an anthology with contributions reflecting the broad debate in Sweden and it provides knowledge, practical guidance, and reflection on how psychological defence can be understood and applied in different contexts. It explores the threats we face – such as disinformation and propaganda – and the tools available to counter them, ranging from critical thinking and communication strategies to institutional preparedness.

The overarching aim of this book is not only to raise awareness of psychological threats, but also to empower readers with the knowledge and confidence to respond effectively and responsibly. By fostering trust, openness, and critical engagement, psychological defence contributes to safeguarding the values of free and democratic societies.

Source: Psychological Defence Agency

What do we mean when we talk about pollution and toxicity in online spaces?

As someone who has done a lot of thinking about community spaces over the years, I like this investigation into what we mean when we use environmental analogies for online communities. It seems like it’s the start of a research project, and there’s a call for people to get in touch with the author.

The metaphor of online communities that “have become toxic” or that are “being polluted” in different ways is a common one. But what do we mean when we talk about pollution and toxicity in online spaces; and what can we learn from the environmental sciences and natural ecosystems to improve things with and for communities?

[…]

Websites solely based around machine generated content are proliferating, polluting both search engines and journalism, crowding our human-generated, high-quality journalism. The analogy of pollution that many of these communities and maintainers refer to seems like an apt one. It even predates the launch of generative AI systems that currently are the focus of this “digital pollution”: The related environmental concept of toxicity is a staple when discussing how people interact in online communities, references to which go back to at least the early 2000s. And more recently, people have argued that social media companies themselves should be viewed as potential polluters of society and how our information is being polluted.

[…]

[T]he goal of the “digital pollution” framing is not to call individual community participants or types of online cultures per se as toxic or polluted. Instead, it can serve to understand how online ecosystems can suffer, despite lots of well-intentioned and well-meaning interactions. Understanding these pollution dynamics is not just of academic interest, it might also help with modeling online interactions. Which in turn can help design interventions that have the potential to support moderators and improve online communities.

If we look at “pollution” more closely, in which ways do different factors in “commons pollution” mirror environmental pollution? Firstly, both environmental pollution and digital pollution can come in different shapes and forms. If we just think of water pollution, we have point source pollution, in which a single, identifiable source such as a factory discharges harmful materials into bodies of water. Online, we can find similar “point sources” in targeted misinformation campaigns, run by humans or bots.

Source: Citizens and Tech Lab

Image: Dan Meyers

Living with your incapacity

The one who learns to live with his incapacity has learned a great deal. This will lead us to the valuation of the smallest things, and to wise limitation, which the greater height demands… . The heroic in you is the fact that you are ruled by the thought that this or that is good, that this or that performance is indispensable, … this or that goal must be attained in headlong striving work, this or that pleasure should be ruthlessly repressed at all costs. Consequently you sin against incapacity. But incapacity exists. No one should deny it, find fault with it, or shout it down.

— Carl Jung

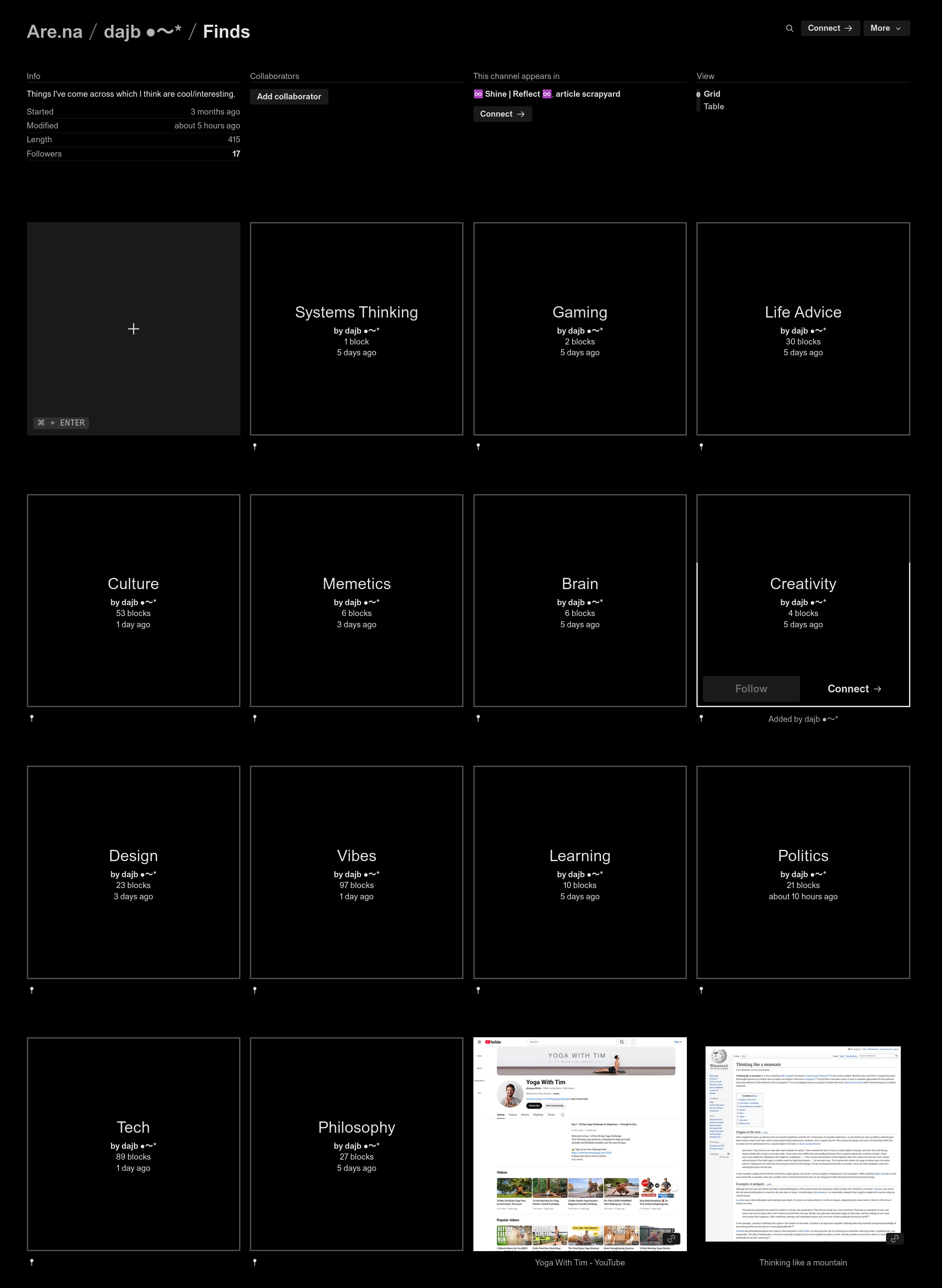

My Are.na channels are now more organised

I only post a small selection of the things I bookmark here on Thought Shrapnel. When I started using Are.na again, I did what I always do when I’m not sure how to organise things: I put everything in a single “Finds” channel.

Now that I’ve been using it for a few months, I decided it was high time I was a bit more organised. So I’ve defined some channels and included them with “Finds” for ease of finding.

Note that Are.na allows you to subscribe to any of these in the app, or via RSS by appending /feed/rss to the end of any channel URL.
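If you want to turn a list of channel URLs into feed URLs for your RSS reader, a tiny helper can do the appending for you. This is a minimal sketch assuming the pattern is simply the channel URL plus /feed/rss; the function name and the example channel URL are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def channel_rss_url(channel_url: str) -> str:
    """Build an RSS feed URL for an Are.na channel (hypothetical helper).

    Assumes the feed lives at <channel URL>/feed/rss.
    """
    parts = urlsplit(channel_url)
    # Strip any trailing slash so we don't produce ".../finds//feed/rss".
    path = parts.path.rstrip("/") + "/feed/rss"
    # Drop query string and fragment; they don't belong on the feed path.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


print(channel_rss_url("https://www.are.na/example-user/finds/"))
```

Parsing the URL rather than just concatenating strings means stray trailing slashes or tracking parameters don't end up mangling the feed address.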

Source: Are.na

Are they ever tricked by a voice that is false when they expected it to be a real, live human?

Oh good, it’s not just me who thinks about these things. Ambiguity is a fundamental part of how we interact with each other and with our devices. And it’s very rarely discussed in general, and certainly not part of the stories we read, watch, or listen to — except as a plot device.

These days, when I watch movies with voice interfaces or “AI” assistants in them, I find myself pretty surprised by how many fictional worlds seem to be full of people who never experience any ambiguity about whether they’re talking to a person or to software. Everyone in a sci-fi setting has usually fully internalized the rules about what is or isn’t “real” in their conversational world, and they usually all have a social script for how they’re “supposed” to treat AI voice assistants.

Characters in modern film and TV are almost never rude or cruel to voice assistants except in scenes where they’re being misunderstood by voice recognition. People in stories like these rarely ever get confused about whether something is a human or an AI unless that’s, like, the entire point of the story. But in real life, we’re constantly forced to interact with an unwanted voice UI, or a phone scammer voice that’s pretending to be real. I have found myself really missing moments like these in movies, where humans express any material awareness of the false voices they interact with. Who made their voice assistant? How do they feel about that company or person? Are they ever tricked by a voice that is false when they expected it to be a real, live human?

[…]

I still haven’t seen much media that reflects the way I actually feel about conversational interfaces in the real world - frustrated, tricked, manipulated, and inconvenienced. And I haven’t seen any media at all recently about the equally insidious trend of real human labor being marketed as if it is an autonomous system.

Source: Laura Michet’s Blog

Image: Jelena Kostic