National security assessment on global ecosystems

It’s always worth looking at what governments decide to publish when the public are busy looking the other way. Recently, Trump’s actions around Greenland have been in the news, and so the UK government thought it would be a good time to publish this.

It’s only 14 pages long and easily scannable, but TL;DR: “Significant disruption to international markets as a result of ecosystem degradation or collapse will put UK food security at risk.”

This assessment is an analysis of how global biodiversity loss and ecosystem collapse could affect UK national security.

It shows how environmental degradation can disrupt food, water, health and supply chains, and trigger wider geopolitical instability. It identifies 6 ecosystems of strategic importance for the UK and explores how their decline could drive cascading global impacts.

This assessment, which was developed by analysts and experts across HM Government, supports long-term resilience planning. Publishing the assessment highlights opportunities for innovation, green finance and global partnerships that can drive growth while safeguarding the ecosystems that underpin our collective security and prosperity.

Source: GOV.UK

Psychological Defence and Information Influence

This looks interesting. It’s from Sweden’s Psychological Defence Agency, whose website has plenty more worth exploring.

In an age defined by rapid information flows and shifting security landscapes, the resilience of societies rests not only on military strength or technological capacity, but equally on the ability of individuals and institutions to withstand psychological influence and manipulation. Psychological defence is therefore not merely a technical field, it is a civic responsibility and a cornerstone of democratic resilience.

This textbook is the first of its kind: a comprehensive overview of key issues related to psychological defence and information influence written by leading scholars on each topic. The textbook is an anthology with contributions reflecting the broad debate in Sweden and it provides knowledge, practical guidance, and reflection on how psychological defence can be understood and applied in different contexts. It explores the threats we face – such as disinformation and propaganda – and the tools available to counter them, ranging from critical thinking and communication strategies to institutional preparedness.

The overarching aim of this book is not only to raise awareness of psychological threats, but also to empower readers with the knowledge and confidence to respond effectively and responsibly. By fostering trust, openness, and critical engagement, psychological defence contributes to safeguarding the values of free and democratic societies.

Source: Psychological Defence Agency

What do we mean when we talk about pollution and toxicity in online spaces?

As someone who has done a lot of thinking about community spaces over the years, I like this investigation into what we mean when we use environmental analogies for online communities. It seems like it’s the start of a research project, and there’s a call for people to get in touch with the author.

The metaphor of online communities that “have become toxic” or that are “being polluted” in different ways is a common one. But what do we mean when we talk about pollution and toxicity in online spaces; and what can we learn from the environmental sciences and natural ecosystems to improve things with and for communities?

[…]

Websites solely based around machine generated content are proliferating, polluting both search engines and journalism, crowding out human-generated, high-quality journalism. The analogy of pollution that many of these communities and maintainers refer to seems like an apt one. It even predates the launch of generative AI systems that currently are the focus of this “digital pollution”: The related environmental concept of toxicity is a staple when discussing how people interact in online communities, references to which go back to at least the early 2000s. And more recently, people have argued that social media companies themselves should be viewed as potential polluters of society and how our information is being polluted.

[…]

[T]he goal of the “digital pollution” framing is not to call individual community participants or types of online cultures per se as toxic or polluted. Instead, it can serve to understand how online ecosystems can suffer, despite lots of well-intentioned and well-meaning interactions. Understanding these pollution dynamics is not just of academic interest, it might also help with modeling online interactions. Which in turn can help design interventions that have the potential to support moderators and improve online communities.

If we look at “pollution” more closely, in which ways do different factors in “commons pollution” mirror environmental pollution? Firstly, both environmental pollution and digital pollution can come in different shapes and forms. If we just think of water pollution, we have point source pollution, in which a single, identifiable source such as a factory discharges harmful materials into bodies of water. Online, we can find similar “point sources” in targeted misinformation campaigns, run by humans or bots.

Source: Citizens and Tech Lab

Image: Dan Meyers

Living with your incapacity

The one who learns to live with his incapacity has learned a great deal. This will lead us to the valuation of the smallest things, and to wise limitation, which the greater height demands… . The heroic in you is the fact that you are ruled by the thought that this or that is good, that this or that performance is indispensable, … this or that goal must be attained in headlong striving work, this or that pleasure should be ruthlessly repressed at all costs. Consequently you sin against incapacity. But incapacity exists. No one should deny it, find fault with it, or shout it down.

— Carl Jung

Organising my Are.na finds

I only post a small selection of the things I bookmark here on Thought Shrapnel. When I started using Are.na again, I did what I always do when I’m not sure how to organise things: I put everything in a single “Finds” channel.

Now that I’ve been using it for a few months, I decided it was high time I was a bit more organised. So I’ve defined some channels and grouped them with “Finds” to make things easier to find.

Note that Are.na allows you to subscribe to any of these in the app, or via RSS by appending /feed/rss to the end of any channel’s URL.

Source: Are.na

Are they ever tricked by a voice that is false when they expected it to be a real, live human?

Oh good, it’s not just me who thinks about these things. Ambiguity is a fundamental part of how we interact with each other and with our devices. Yet it’s rarely discussed, and it’s certainly not part of the stories we read, watch, or listen to, except as a plot device.

These days, when I watch movies with voice interfaces or “AI” assistants in them, I find myself pretty surprised by how many fictional worlds seem to be full of people who never experience any ambiguity about whether they’re talking to a person or to software. Everyone in a sci-fi setting has usually fully internalized the rules about what is or isn’t “real” in their conversational world, and they usually all have a social script for how they’re “supposed” to treat AI voice assistants.

Characters in modern film and TV are almost never rude or cruel to voice assistants except in scenes where they’re being misunderstood by voice recognition. People in stories like these rarely ever get confused about whether something is a human or an AI unless that’s, like, the entire point of the story. But in real life, we’re constantly forced to interact with an unwanted voice UI, or a phone scammer voice that’s pretending to be real. I have found myself really missing moments like these in movies, where humans express any material awareness of the false voices they interact with. Who made their voice assistant? How do they feel about that company or person? Are they ever tricked by a voice that is false when they expected it to be a real, live human?

[…]

I still haven’t seen much media that reflects the way I actually feel about conversational interfaces in the real world - frustrated, tricked, manipulated, and inconvenienced. And I haven’t seen any media at all recently about the equally insidious trend of real human labor being marketed as if it is an autonomous system.

Source: Laura Michet’s Blog

Image: Jelena Kostic

Privacy by design means what it says on the tin

This was shared with me by Tom Watson yesterday, and we discussed it briefly as part of our now-regular Friday ‘noodling’ sessions. Now, fair enough, one would not expect that turning off ChatGPT’s data consent option would delete files on your own computer.

But then, not having backups is, at the very least, cavalier when your livelihood depends on your outputs. So it’s a reminder not only that LLMs are simultaneously very powerful and ‘stupid’, but also that, as at every other point in the history of digital devices, you should have backups.

None of us are perfect. This week, for example, after setting a ‘duress’ password on my GrapheneOS-powered smartphone, I accidentally triggered it and all of my data was instantly wiped. Did I blame GrapheneOS? No, I was actually thankful that it did what it said it would do. I blamed myself.

While I lost some of my chat history in Signal, it was a reminder that Signal has an encrypted cloud backup option. So I turned that on, and didn’t write an article blaming everyone except myself.

This was not a case of losing random notes or idle chats. Among my discussions with ChatGPT were project folders containing multiple conversations that I had used to develop grant applications, prepare teaching materials, refine publication drafts and design exam analyses. This was intellectual scaffolding that had been built up over a two-year period.

We are increasingly being encouraged to integrate generative AI into research and teaching. Individuals use it for writing, planning and teaching; universities are experimenting with embedding it into curricula. However, my case reveals a fundamental weakness: these tools were not developed with academic standards of reliability and accountability in mind.

If a single click can irrevocably delete years of work, ChatGPT cannot, in my opinion and on the basis of my experience, be considered completely safe for professional use. As a paying subscriber (€20 per month, or US$23), I assumed basic protective measures would be in place, including a warning about irreversible deletion, a recovery option, albeit time-limited, and backups or redundancy.

OpenAI, in its responses to me, referred to ‘privacy by design’ — which means that everything is deleted without a trace when users deactivate data sharing. The company was clear: once deleted, chats cannot be recovered, and there is no redundancy or backup that would allow such a thing (see ‘No going back’). Ultimately, OpenAI fulfilled what they saw as a commitment to my privacy as a user by deleting my information the second I asked them to.

Source: Nature Briefing

Image: Ujesh Krishnan

The correct response to Dachau was not better training for the guards

This is a must-read from Andrea Pitzer. As she points out, the window of opportunity to do something about what’s happening in the US is closing.

I’ve looked at mass civilian detention around the world. I’ve visited the facilities where people were held. I’ve talked to the people involved—those detained and tortured, those who supported camps, and those who stood idly by. It’s critical to recognize that each of the societies that has had camps underwent a lengthy process. This process is often easier to see happening in your own country if you first look at an example in another one.

My goal today is to warn you that the U.S. has already been seized by the same camp dynamic. It’s not that I’m trying to tell you that bad things are coming, and you have to look out for them. What I’m saying is that the camps have already taken root and are on a fast-track to get exponentially worse. We’re already deep inside the process.

Yet there is power in that knowledge, because in some big ways, we can know what will happen next. We have models for how other societies have moved out of our current perilous state. And we have a ton of tactics we can use to fight back against the expanding harm directed at all of us.

I’ll add right up front that nobody sane now thinks the answer to abuses at Dachau was to give the guards more training.

[…]

[I]f we count the Biden administration as simply a pause on the larger Trump authoritarian agenda in several ways, the U.S. is currently approaching the end of that three-to-five year window. We may already be living in a concentration-camp regime, but it hasn’t yet hardened into the kind of vast system that becomes the controlling factor in the country’s political future.

Still, we’re on the verge of entrenching a massive system, which is a very bad place to be. It’s my opinion that we have a limited window in which to act. What happens this year will be critical for significantly dismantling the existence of and any future capacity for building the extrajudicial camp network the government is constructing today.

Again, we need to do more than stop the construction of additional facilities, more than just get ICE agents to behave more politely. We need to dismantle the current system and remove the possibility for it to exist again. In my opinion, that is what “Abolish ICE” should mean.

[…]

You can’t reform a concentration camp regime. You have to dismantle it and replace it. We have a thousand ways to do it. And most U.S. citizens—particularly white ones—have the freedom to act, for now, with far less risk than the many people currently targeted.

Source: Degenerate Art

Image: Bradley Andrews

They have no idea what’s happening now.

I was talking with Laura about how full-time jobs are pretty much over as a construct. There aren’t as many as there used to be, particularly for knowledge workers, and there are plenty of people (like us!) who wouldn’t want one in any case.

This time last year I was laughing at the prediction that AI would be able to replace developers. Now I’m vibe coding actually useful software from scratch. AGI is kinda already here. What does that mean in practice? Probably that the world as we know it is slowly going to disappear.

Most people met AI in late 2022 or something, poked ChatGPT once like it was a digital fortune cookie, got a mediocre haiku about their cat, and decided: ah yes, cute toy, overhyped, wake me when it’s Skynet. Then they went back to their inbox rituals.

They have no idea what’s happening now.

They haven’t seen the latest models that quietly chew through documents, write code, design websites, summarize legal contracts, and generate decent strategy decks faster than a middle manager can clear their throat.

Claude just released a “coworker”, which sometime next year will become your new colleague, and then after that year, your replacement.

[…]

We can automate away huge chunks of the drudgery that used to be biologically unavoidable.

And we’re still out here proudly defending the 40-hour week like it’s some sacred law of physics. Still tying healthcare, housing, and dignity to whether you can convince someone that your job should exist for another quarter.

[…]

So yes, we need a transition, and it will be messy.

We will need new rhythms for days and weeks that aren’t defined by clocking in.

We will need new institutions of community—not offices, but workshops, labs, studios, clubs, care centers, gardens, research guilds, whatever—places where humans gather to do things that matter without a boss breathing down their neck for quarterly results.

We will need new ways to recognize status: not “job title and salary,” but contribution, curiosity, care, creativity.

We will need economic architecture: universal basic income, or even better, universal basic services (housing, healthcare, education, mobility) that are not held hostage by employers.

And we’ll need therapy. A lot of it.

Source: The Pavement

Image: Janet Turra & Digit

What’s strange is how little of that generosity we extend to each other

Last week, I discussed how our interactions with LLMs can provide some insights into the ways we treat other humans.

I’d recommend reading this article, which is based on The Four Agreements: A Practical Guide to Personal Freedom by Don Miguel Ruiz, as it shows how applying some of the ways we show patience and understanding with AI systems might help us in our interactions in general.

I’ve been thinking about how quickly we’ve adapted to working with AI. We all understand the deal. If the output is bad, it’s probably on us. The prompt was vague. The context was missing. We didn’t give it enough constraints.

So we revise. We clarify. We try again.

No frustration. No judgment. Just iteration.

What’s strange is how little of that generosity we extend to each other. Somewhere along the way, we learned to treat machines as systems that need better inputs—but we still treat humans as if they should just know. And when they don’t, we judge competence, take it personally, make assumptions, or shut down.

Source: UXtopian

Image: Alan Warburton

Time appears in this 3D sort of calendar pattern

It was 18 years ago that I discovered that I’m a bit weird. Like 10-20% of the population, I have a form of synaesthesia, which is usually understood as a “mixing of the senses.” I do get that a bit, but I’m a less extreme example of the “spatial-sequence” synaesthesia discussed in this article.

I should imagine the specific details are unique to each synaesthete – which is why my daughter and I debate what “colour” different days of the week and school subjects are. However, I do recognise the experience of seeing time in three dimensions. Just as with aphantasia, it’s very surprising to discover that other people’s inner lives are vastly different from our own.

It was only in my 60s that I discovered there was a name for this phenomenon – not just the way time appears in this 3D sort of calendar pattern, but the colours seen when I think of certain words. Two decades previously, I’d mentioned to a friend that Tuesdays were yellow and she’d looked at me in the same strange, befuddled way that family members always had when told about the calendar in my head. Out of embarrassment, it was never discussed further. I was clearly very odd.

[…]

While thinking about the moment in time I’m at now, I see the day of the week and the hours of that day drawn up in a grid pattern. I am physically in that diagram in my head – and there’s a photographic element to it. If there’s a concert coming up in the calendar, in my mind, a picture of the concert venue is superimposed on to the 7pm to 10pm time slot on that particular day.

Source: The Guardian

Image: Annie Spratt

Somewhere I'd like to spend some time

I saw this, and not only did it feel like somewhere I’d like to spend some time, but it also reminded me of the cover of Bad Bunny’s Debí Tirar Más Fotos, which was one of my favourite albums of 2025.

Source: Are.na

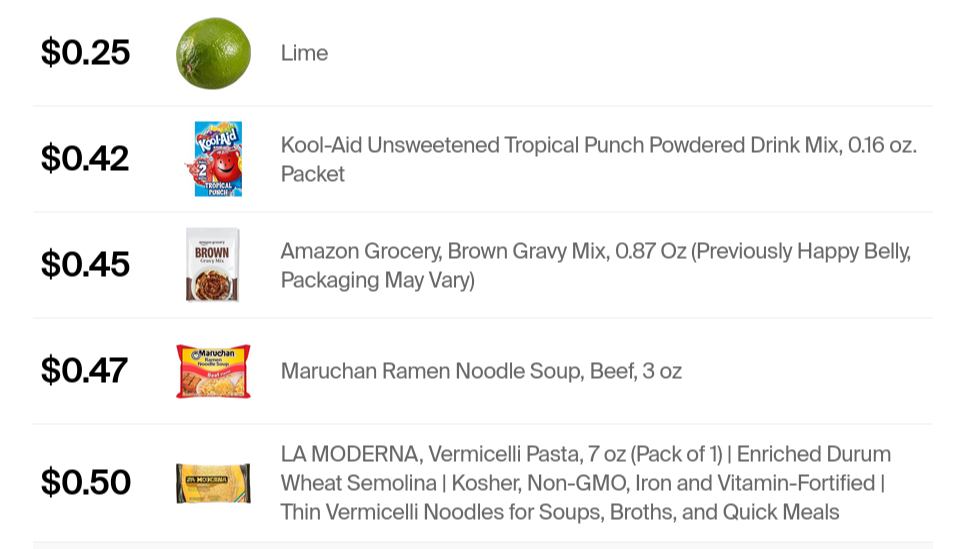

Postal Arbitrage

I sent a parcel from Brexit Britain to my friend and colleague Laura Hilliger in Germany. The “2-3 day” DHL service cost as much as the contents and took from 18th December to 14th January to arrive. I could have just ordered something from Amazon.de for her with free postage and it would have arrived next day.

This article takes things a step further: why send letters and postcards to friends and family when you can order actual products from Amazon to be delivered along with a gift note?

As of 2025, a stamp for a letter costs $0.78 in the United States. Amazon Prime sells items for less than that… with free shipping! Why send a postcard when you can send actual stuff?

I found all items under $0.78 with free Prime shipping — screws, cans, pasta, whatever. Add a free gift note. It arrives in 1 or 2 days. Done.

You’re not only saving money. It’s about sending something real. Your friend gets a random can of tomato sauce with your birthday note attached. They laugh. They remember you. They might even use it!

Source: Riley Walz

The Cost of American Exceptionalism

We all know that what’s going on in the US is pretty terrible. But there remains an underlying assumption that the way Americans organise their society is in some way better, or more valuable, than how things are done in Europe and other OECD nations.

Instead of taking American exceptionalism as our example in the UK, we should be looking to re-integrate with our European neighbours.

America’s problems are solved problems.

Universal healthcare is not some utopian fantasy. It is Tuesday in Toronto. Affordable higher education is not an impossible dream. It is Wednesday in Berlin. Sensible gun regulation is not a violation of natural law. It is Thursday in London. Paid parental leave is not radical. It is Friday in Tallinn, and Monday in Tokyo, and every day in between.

Source: On Data and Democracy

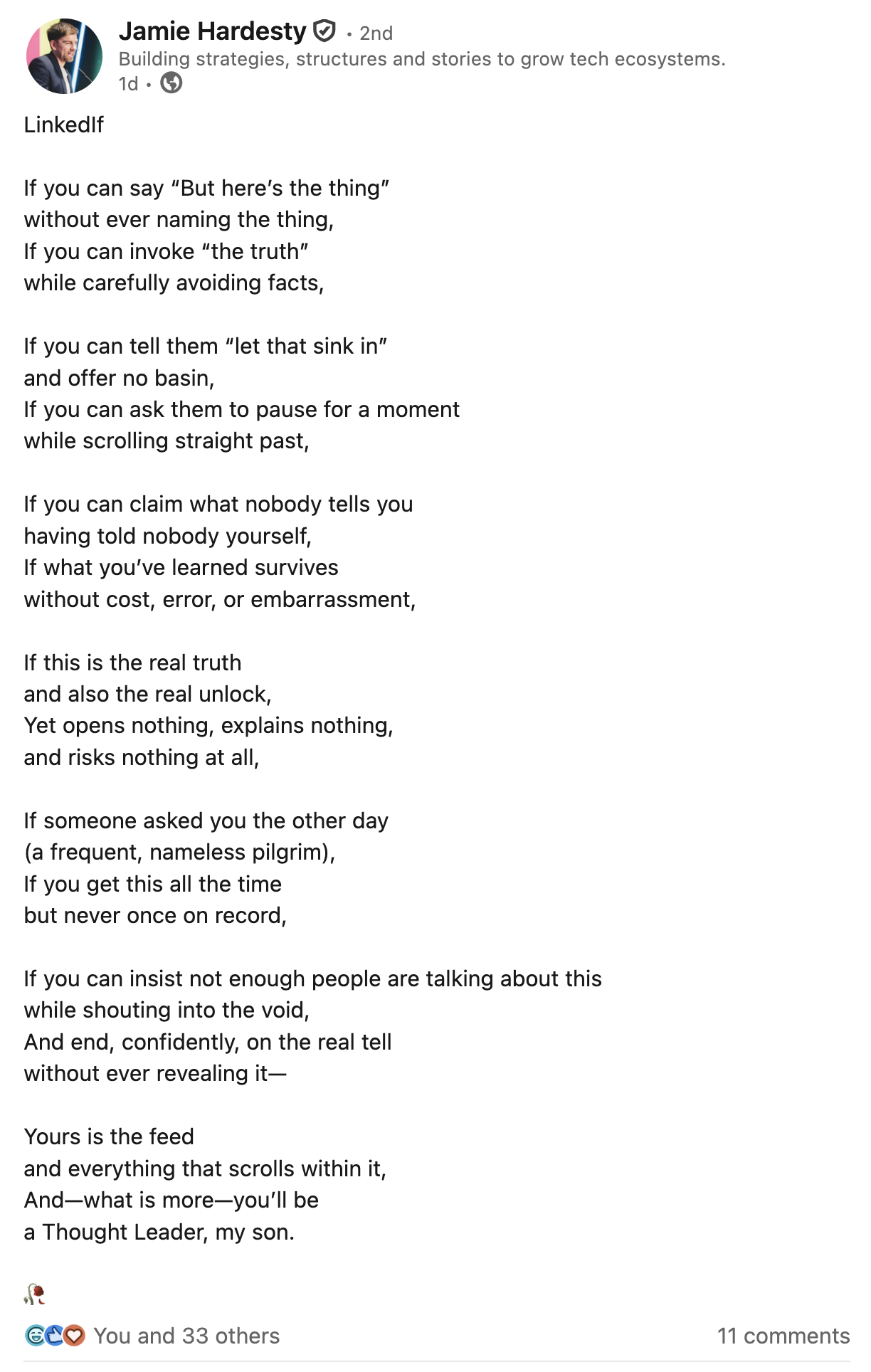

LinkedIf

When I was a boy, I had a poster of Rudyard Kipling’s poem “If” on my wall. As a result, I’ve always had a soft spot for it, even though Kipling is a controversial figure.

So I found this parody entitled “LinkedIf” by Jamie Hardesty hilarious. So, so good.

Source: LinkedIn