2024

Summer digital detox

During my run yesterday morning, I listened to a great podcast episode about doing different things during the summer months. Now, as I wait to pick up my daughter from school in the driving rain, it might not feel like summer here in the UK, but the advice is nonetheless spot-on.

In particular, I’ve taken the advice to do a bit of a digital detox and slow down. So I’ve logged out of my Mastodon, Bluesky, and LinkedIn accounts, and will be back… mañana.

The summer months have a different flavour and feel to the other months of the year; there’s something different about our energy, motivation and willpower. And, if we can harness those differences, we have a golden opportunity to make meaningful changes that can have a transformative impact on our health, happiness and relationships and teach us things about ourselves that we previously did not know.

Source: Feel Better Live More

Image: Leone Venter

Informatics of domination

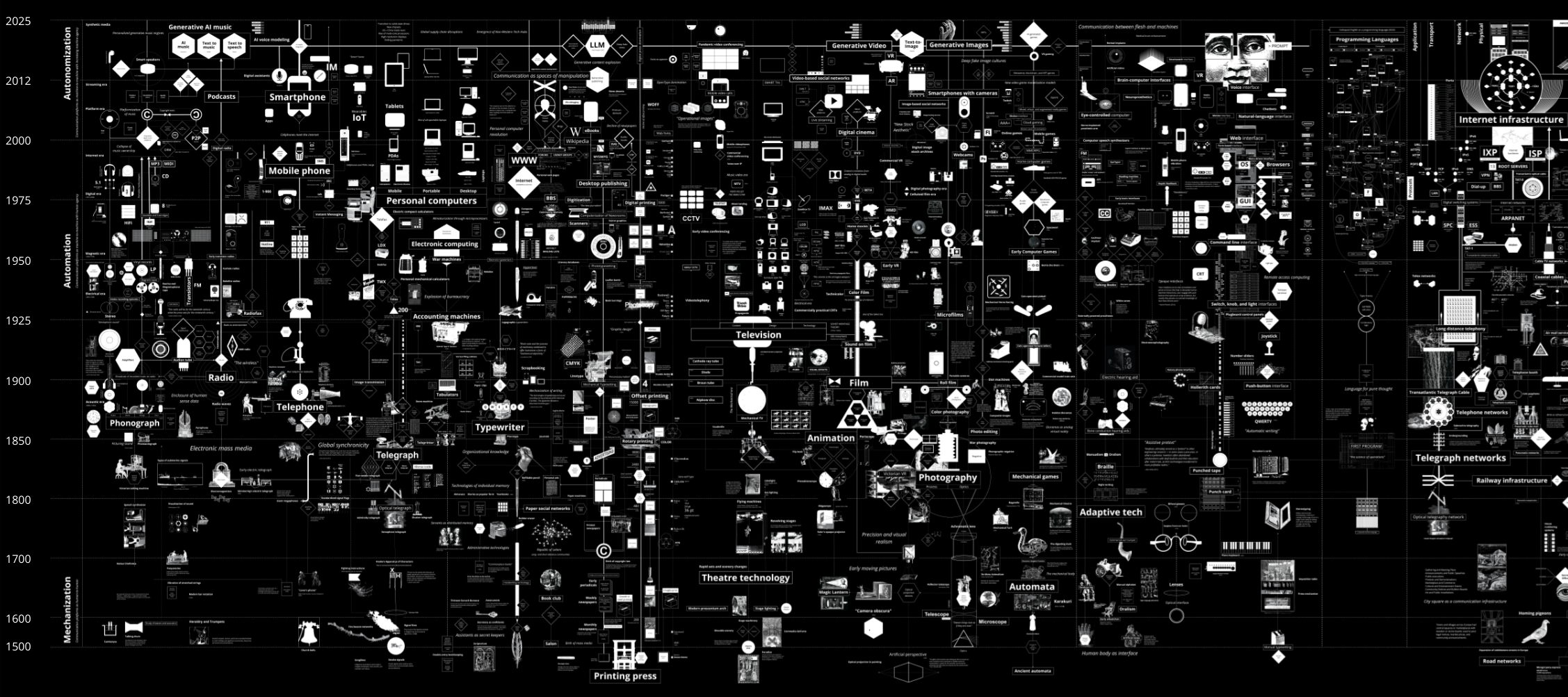

I’ve had this incredible interactive map, created by Kate Crawford and Vladan Joler, bookmarked for a while now. I’m never sure what to do with so much information in one place that isn’t primarily text-based.

I’m sharing it while still exploring it myself, with the hope that others will be able to find a use for it rather than be overwhelmed!

Calculating Empires is a large-scale research visualization exploring how technical and social structures co-evolved over five centuries. The aim is to view the contemporary period in a longer trajectory of ideas, devices, infrastructures, and systems of power. It traces technological patterns of colonialism, militarization, automation, and enclosure since 1500 to show how these forces still subjugate and how they might be unwound. By tracking these imperial pathways, Calculating Empires offers a means of seeing our technological present in a deeper historical context. And by investigating how past empires have calculated, we can see how they created the conditions of empire today.

[…]

Calculating Empires takes Donna Haraway’s provocation literally that we need to map the “informatics of domination.” The technologies of today are the latest manifestations of a long line of entangled systems of knowledge and control. This is the purpose of our visual genealogy: to show the complex interplay of systems of power, information, and circumstance across terrain and time, in order to imagine how things could be otherwise.

This work can never be complete: it is necessarily partial, subjective, and drawn from our own positionality. But that openness is part of the project. You are invited to read, reflect, and consider your own history in the recurring stories of calculation and empire. As the overwhelming now continues to unfold, Calculating Empires offers the possibility of looking back, in order to consider how different futures could be envisioned and realized.

Source: Calculating Empires: A Genealogy of Technology and Power Since 1500

If you're not a part of the solution, there's good money to be made in prolonging the problem

There’s a lot of money sloshing around at the top of society, being channeled into different schemes and offshore bank accounts. To enable this, there are a lot of bullshit jobs, including PR agencies spewing out credulous content.

Joan Westenberg was one of these people until, one day, she decided not to be. As she quotes Upton Sinclair as saying, “It is difficult to get a man to understand something when his salary depends upon his not understanding it.”

One morning, I sat down at my desk to craft yet another press release touting yet another “game-changing” startup that had raised - yet another - $25 million. And I realized I couldn’t remember the last time I’d written something I believed in. The words that used to flow felt like trying to squeeze ancient toothpaste from an empty tube.

That was the day I cracked.

It wasn’t about the individual startups or the overhyped products. It was the whole damn ecosystem—if we can call it that. The inflated valuations, cult-like frat house “culture,” and the relentless, mindless pursuit of growth that comfortably glossed over the human cost of “disruption.”

Somewhere along the way, I’d allowed my writing—the thing that used to give me purpose—to be co-opted by the bullshit industrial complex. I’d convinced myself that I was part of something bigger, something world-changing. But deep down, in the quiet moments between pitch meetings and product launches, I knew better.

Source: Joan Westenberg

Look out for surplus fingers

As I always say about misinformation and disinformation: people believe what they want to believe. So you don’t actually need very sophisticated ‘deepfakes’ for people to reshare fake content.

That being said, this article in The Guardian does a decent job of showing some ways of spotting deepfake content, along with some examples.

Look out for surplus fingers, compare mannerisms with real recordings and apply good old-fashioned common sense and scepticism, experts advise

In a crucial election year for the world, with the UK, US and France among the countries going to the polls, disinformation is swirling around social media.

There is much concern about deepfakes, or artificial intelligence-generated images or audio of leading political figures designed to mislead voters, and whether they will affect results.

They have not been a huge feature of the UK election so far, but there has been a steady supply of examples from around the world, including in the US where a presidential election looms.

Here are the visual elements to look out for.

Source: The Guardian

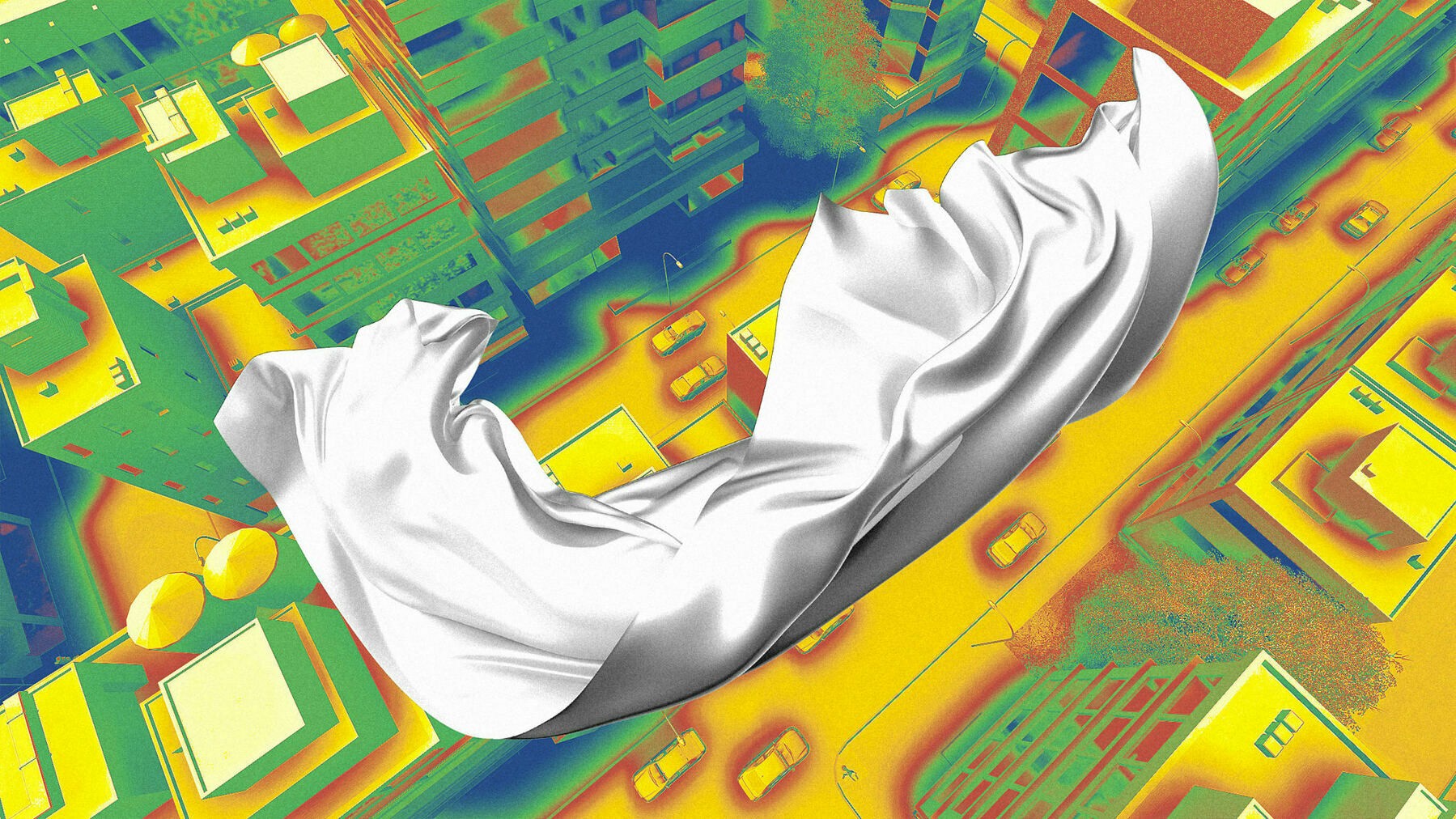

New materials for a super-heated world

As I mentioned, I’m reading Lifehouse by Adam Greenfield at the moment, which starts with some stark information about global heating. We need lots of workarounds to combat this, and one new material looks particularly promising.

This new textile could provide at least a little relief. It uses a process called radiative cooling, which describes how objects cool down by radiating thermal energy into their surroundings. Radiative cooling textiles do already exist, but most just reflect the sun’s heat. That “works very well if you’re in an open field,” says Po-Chun Hsu, a molecular engineering professor at the University of Chicago, whose team recently published a paper on their new material in the journal Science. But not in a city.

What those other fabrics don’t do is reflect the ambient heat coming from the street below or a nearby building. The heat coming directly from the sun’s rays and the heat emitted from a sun-baked street aren’t the same; they have different wavelengths. That means a material has to have two different “optical properties” to reflect both.

To do that, the researchers created a three-layer textile. The top layer is made of polymethylpentene or PMP, a type of plastic commonly used for packaging; the researchers had to figure out how to spin it into a fiber. The second is a sheet of silver nanowires, which acts like a mirror to reflect infrared radiation. Together, these block both the solar radiation and the ambient radiation reflected off of surfaces. The third layer can be any conventional fabric, like wool or cotton. Though there are multiple layers, the main thickness comes from the conventional fabric; the top layer is about 1/100th of a human hair.

In outdoor tests in Arizona, the textile stayed 4.1 degrees Fahrenheit (2.3 degrees Celsius) cooler than “broadband emitter” fabrics used for outdoor sports, and 16 F (8.9 C) cooler than regular silk, a breathable fabric often used for dresses and shirts.

Along with clothing, the researchers say this cooling textile could be used on buildings, in cars, or even for food storage and shipping in order to lessen the need for refrigeration, which has a significant climate impact of its own. Next, Hsu’s team is collaborating with other teams to see how the textile could have a health benefit for those in extreme heat conditions.

Source: Fast Company

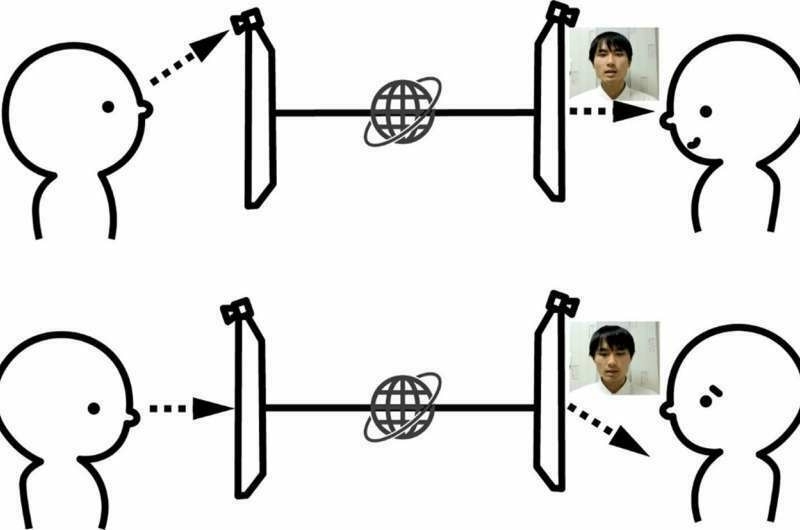

Eye-contact has a significant impact on interpersonal evaluation, and online job interviews are no exception

Maintaining “eye contact” with someone on a video conference call is a bit weird, because it necessitates looking directly into the camera. It’s important, though, otherwise it feels like the other person isn’t looking at you. And that impacts relationships - and, it seems, interpersonal evaluations.

The results indicate interviewers evaluate candidates more positively when their gaze is directed at the camera (i.e., CAM stimulus) compared to when the candidates look at the screen (SKW stimulus). The skewed-gaze stimulus received worse evaluation scores than voice-only presentation (VO stimulus).

Throughout an online interview, it is challenging to maintain “genuine” eye contact—making direct and meaningful visual connection with another person, but gazing into the camera can accomplish a similar feeling online as direct eye contact does in person.

While the evaluators overall preferred interviewees who maintained eye contact with the camera, an unconscious gender bias appeared. Female evaluators judged those with skewed downward gazes more harshly than male evaluators, and the difference in the evaluation of the CAM and SKW stimuli for female interviewees was larger than the male interviewees.

This gender bias within the study could be prevalent under non-experimental conditions. Making both interviewers and interviewees aware of this potentially systematic gender bias could help curtail this issue.

Source: Phys.org

Here is a book as a toolbox to build actual, hard-tacks answers to the crisis of the Long Emergency

I’ve been very much looking forward to reading Lifehouse: Taking Care of Ourselves in a World on Fire by Adam Greenfield, so I was delighted to discover today that, despite having a release date of 9th July, I could already download the ePUB!

Adam featured on an episode during the last season of our podcast, The Tao of WAO, and was generous with his time. Go and listen to that to discover what the book’s original title was, and also pre-order the book!

I have a particular suspicion of the kind of book that spends 10 chapters telling the reader the many problems that face the contemporary world, and then follows with a final chapter that offers something - socialism, say - as the simple solution to all our woes. I call this the ‘11 Chapter problem’, and warn every author to avoid this trap. It is often easy to diagnose the problem; it is far harder to think clearly about what we are meant to do about it. More often than not the reader is already well aware of the problems: the reason they pick up a book is to find solutions. And this is why I am so excited about Adam Greenfield’s Lifehouse: Taking Care of Ourselves in a World on Fire. Here is a book as a toolbox to build actual, hard-tacks answers to the crisis of the Long Emergency.

[…]

It starts as a building - a church, a library, a school gym - that is the Lifehouse. This is a place for everyone to go to in an emergency - a flood, fire, or hurricane. It will have a kitchen, beds, clothing storage. But it will also be its own power source - with generators or renewable energy from a wind turbine, or solar panels on the roof. It will also be able to produce its own food with vertical farming technology installed. It will be a tool library that allows for repair and restoration, even outside the emergency - with 3-D printing technology. It will also have a skills library so that the community knows who is a doctor, nurse, teacher or transport.

[…]

But sustaining that effort for the long term - the long emergency itself - is hard. Often communities fail to plan far enough ahead. They split and betray each other. They face insurmountable opposition who wish to take away their autonomy. The Lifehouse is designed with this in mind too. It is not an afterthought, but a deeply considered means in which to think about the future.

Source: Verso Book Club: Lifehouse

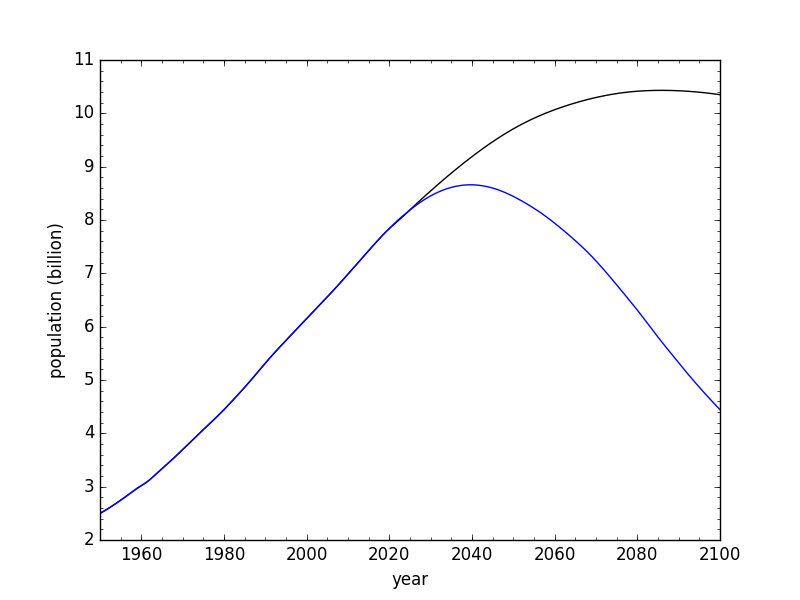

A smaller human population will immensely facilitate other transformations we need

Chart: Population projections from the U.N. (black) and Tom Murphy (blue)

When it’s put as starkly as this, it’s interesting to think about a post-peak human population as something that might happen within my lifetime. The article cited below was linked to from this one by Tom Murphy of UC San Diego, who created the chart I’ve used to accompany this post.

I’m no expert, but Murphy’s reasoning seems sound, and I’d assume that the existing right-wing ‘natalism’ is likely to go mainstream within this decade. Interesting times.

Governments worldwide are in a race to see which one can encourage the most women to have the most babies. Hungary is slashing income tax for women with four or more children. Russia is offering women with 10 or more children a “Mother-Heroine” award. Greece, Italy, and South Korea are bribing women with attractive baby bonuses. China has instituted a three-child policy. Iran has outlawed free contraceptives and vasectomies. Japan has joined forces with the fertility industry to infiltrate schools to promote early childbearing. A leading UK demographer has proposed taxing the childless. Religious myths are preventing African men from getting vasectomies. A eugenics-inspired Natal conference just took place in the U.S., a nation leading the way in taking away reproductive rights.

[…]

The alarmism surrounding declining fertility rates is unfounded; it is a positive trend that represents greater reproductive choice, and one that we should accelerate. A smaller human population will immensely facilitate other transformations we need: mitigating climate change, conserving and rewilding ecosystems, making agriculture sustainable, and making communities more resilient and able to integrate more climate and war refugees.

Source: CounterPunch

The Promise and Pitfalls of Decentralised Social Networks

This paper, ‘Decentralized Social Networks and the Future of Free Speech Online’, explores the potential of decentralised social networks like Mastodon and Bluesky to enhance free speech by shifting control from central authorities to individual users. The author, Ted Huang, examines how decentralisation can promote the free speech values of knowledge, democracy, and autonomy, while also acknowledging the inherent challenges and trade-offs in practical implementation.

Huang highlights that decentralised networks face significant challenges in knowledge verification, effective moderation, and avoiding recentralisation. He notes that the ideal of decentralisation often conflicts with practical needs, which necessitates some centralised mechanisms for such things as content moderation and cross-community communication. So, Huang argues, to truly empower users, we need inclusive design processes and ongoing policy discussions.

The decentralized social network has been widely viewed as a cure to its centralized counterpart, which is owned by corporate monopolies, funded by surveillance capitalism, and moderated according to rules made by the few (Gehl 2018, 2-3). The tremendous and unchecked power of those giant platforms was seen as a major threat to people’s rights and freedoms online. The newly emergent decentralized social networks, through infrastructural redesign, create a power-sharing scheme with the end users, so that it is the users themselves, rather than a corporate body, that determine how the communities shall be governed. Such an approach has been hailed as a promising way of curbing the monopolies and empowering the users. It was expected to bring more freedom of speech to individuals, and the vision it underscores – openness rather than walled-gardens, bottom-up rather than top-down – represents the future of the Internet (Ricknell 2020, 115).

[…]

The discussion of the decentralization project is trending, but it is too limited because there lacks systematic and critical review on the project’s normative implications. In particular, the current debate is mostly restricted to the technical circle, without sufficient input and participation from other fields such as policy, law and ethics. So far, researchers on the decentralized social networks mainly focus on their technical difficulties and features, rather than its social implications (Marx & Cheong 2023, 2). Lawmakers and regulators in the world have paid little attention yet to regulating this new technical paradigm (Friedl & Morgan 2024, 8). For decentralized networks to serve as the desirable future of online communications, we need to know why this is so and how it can be achieved. Will decentralized networks better facilitate the free speech online than the centralized platforms? How to design the new space to make it really fit with our value commitments? All the utopian and dystopian analyses of the decentralized future are only possibilities: what matters is the choices we make about how these technologies are designed and used (Cohnh & Mir 2022). Value commitments must be carefully examined and considered in the design process.

Source: arXiv

Image: Omar Flores

If we don’t change course, most people in the U.S. will have some flavor of Long COVID of one sort or another

For the past few years, I’ve been on the list of ‘vulnerable’ people who get a free booster Covid vaccine due to my asthma. That’s no longer the case, but Covid is still around, and mutating.

This interview with someone who, admittedly, runs a Covid testing company, has made me think that perhaps I need to pay for a private vaccine because I really don’t want Long Covid. Even the venerable Venkatesh Rao has written about the cognitive impact that he suspects Covid has had on him.

Dr. Phillip Alvelda, a former program manager in DARPA’s Biological Technologies Office that pioneered the synthetic biology industry and the development of mRNA vaccine technology, is the founder of Medio Labs, a COVID diagnostic testing company. He has stepped forward as a strong critic of government COVID management, accusing health agencies of inadequacy and even deception. Alvelda is pushing for accountability and immediate action to tackle Long COVID and fend off future pandemics with stronger public health strategies.

[…]

PA: There are all kinds of weird things going on that could be related to COVID’s cognitive effects. I’ll give you an example. We’ve noticed since the start of the pandemic that accidents are increasing. A report published by TRIP, a transportation research nonprofit, found that traffic fatalities in California increased by 22% from 2019 to 2022. They also found the likelihood of being killed in a traffic crash increased by 28% over that period. Other data, like studies from the National Highway Traffic Safety Administration, came to similar conclusions, reporting that traffic fatalities hit a 16-year high across the country in 2021. The TRIP report also looked at traffic fatalities on a national level and found that traffic fatalities increased by 19%.

[…]

Damage from COVID could be affecting people who are flying our planes, too. We’ve had pilots that had to quit because they couldn’t control the airplanes anymore. We know that medical events among U.S. military pilots were shown to have risen over 1,700% from 2019 to 2022, which the Pentagon attributes to the virus.

[…]

PA: What does this look like if we continue on the way we are doing right now? What is the worst-case scenario? Well, I think there are two important eventualities. So we’re what, four years in? Most people have had COVID three and a half times on average already. After another four years of the same pattern, if we don’t change course, most people in the U.S. will have some flavor of Long COVID of one sort or another.

An inferior, or at least grossly limited version of intelligence

Audrey Watters cites the work of Zoë Schlanger who asks what we mean by ‘intelligence’. This is important, of course, because we’re often prepending the word ‘artificial’ to it, meaning that we’re foregrounding something and backgrounding something else. It’s a zeugma.

Really, it’s intelligence I’ve been thinking about lately, as I’ve been reading Zoë Schlanger’s new book The Light Eaters: How the Unseen World of Plant Intelligence Offers a New Understanding of Life on Earth.

[…]

What do we mean, Schlanger asks, by “intelligence”? What behaviors indicate that an organism, plant or otherwise, is “thinking”? How does one “think” without a nervous system, without a brain? Some of the reasons why we’ve answered these questions in such a way to deny plant intelligence can be traced, of course, to ancient Greece and to the Aristotelian insistence that thinking is the purview solely of Man. It’s a particular kind of thinking too that is privileged in this definition: rationality.

And it’s that form of “thinking,” of “intelligence” that is privileged in the discussions about artificial intelligence. It’s actually an inferior, or at least grossly limited version of intelligence, if it’s intelligence at all — the idea that entities, animal or machine, are programmed, coded. It’s so incredibly limiting.

Source: Second Breakfast

Image: Shane Rounce

F L A M I N G O N E

Miles Astray is a photographer who recently won the People’s Vote and a Jury Award in the artificial intelligence category of 1839 Awards. The photo is of a flamingo whose head is apparently missing.

The twist: the photo is as real as the simple belly scratch the bird is busy with.

With AI-generated content remodelling the digital landscape rapidly while sparking an ever-fiercer debate about its implications for the future of content and the creators behind it – from creatives like artists, journalists, and graphic designers to employees in all sorts of industries – I entered this actual photo into the AI category of 1839 Awards to prove that human-made content has not lost its relevance, that Mother Nature and her human interpreters can still beat the machine, and that creativity and emotion are more than just a string of digits.

After seeing recent instances of AI-generated imagery outshining actual photos in competitions, it occurred to me that I could twist this story inside down and upside out the way only a human could and would, by submitting a real photo into an AI competition. My work F L A M I N G O N E was the perfect candidate because it’s a surreal and almost unimaginable shot, and yet completely natural. It is the first real photo to win an AI award.

Source: Miles Astray

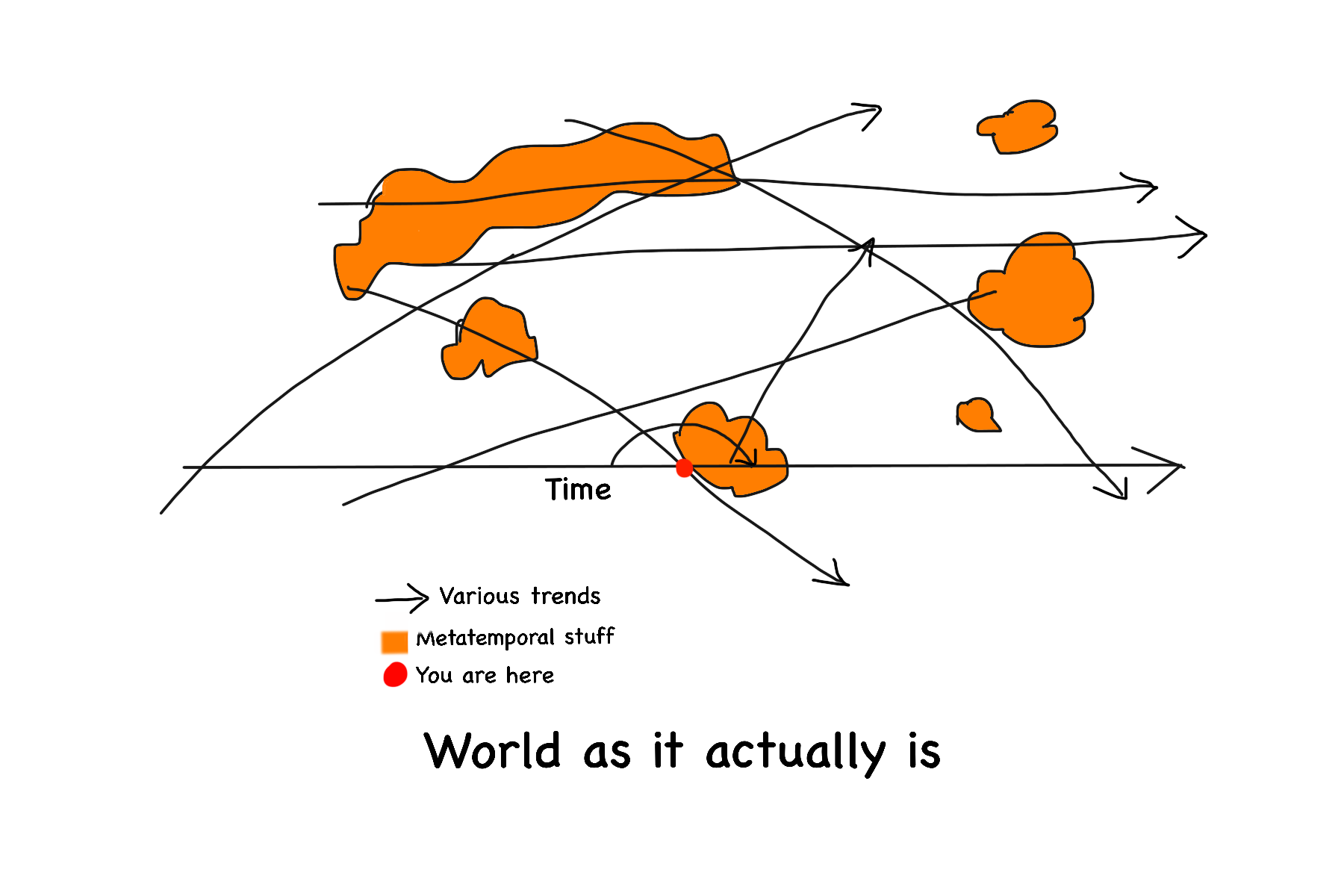

The writer’s equivalent of what in computer architecture is called speculative execution

As ever with Venkatesh Rao’s posts, there’s a lot going on with this one. Ostensibly, it’s about the third anniversary of the most recent iteration of his newsletter, but along the way he discusses the current state of the world. It’s a post worth reading for the latter reason, but I’m focused here on the role of writing and publishing online, which I do quite a lot.

Rao tries to fit his posts into one of five narrative scaffoldings which cover different time spans. Everything else falls by the wayside. I do the opposite: just publishing everything so I’ve got a URL for each of my thoughts, and can weave them together on demand later. I think Cory Doctorow is a bit more like that, too (although more organised and a much better writer than me!).

As a writer, you cannot both react to the world and participate in writing it into existence — the bit role in “inventing the future” writers get to play — at the same time. My own approach to resolving this tension has been to use narratives at multiple time scales as scaffolding for sense-making. Events that conform (but not necessarily confirm) to one or more of my narratives leave me with room to develop my ab initio creative projects. Events that either do not conform to my narratives or simply fall outside of them entirely tend to derail or drain my creative momentum. It is the writer’s equivalent of what in computer architecture is called speculative execution. If you’re right enough, often enough, as a writer, you can have your cake and eat it too — react to the world, and say what you want to say at the same time.

[…]

Writing seemed like a more culturally significant, personally satisfying, aesthetically appropriate, and existentially penetrating thing to be doing in 2014 than it does now in 2024. I think we live in times when writing has less of a role to play in inventing the future, for a variety of reasons. You have to work harder at it, for less reward, in a smaller role. Fortunately for my sanity, writing is not the only thing I do with my life.

[…]

Maybe we’re just at the end of a long arc of 25 years or so, when writing online was exceptionally culturally significant and happened to line up with my most productive writing years, and the other shoe has dropped on the story of “blogging.”

Source: Ribbonfarm Studio

It's impossible to 'hang out' on the internet, because it is not a place

I spend a lot of time online, but do I ‘hang out’ there? I certainly hang out with people playing video games, but that’s online rather than on the internet. Drew Austin argues that because of the amount of money and algorithms on the internet, it’s impossible to hang out there.

I’m not sure. It depends on your definition of ‘hanging out’ and it also depends whether you’re just focusing on mainstream services, or whether you’re including the Fediverse and niche things such as School of the Possible. The latter, held every Friday by Dave Gray, absolutely is ‘hanging out’, but whether Zoom calls with breakout rooms count as the internet depends on semantics, I guess.

Is “hanging out” on the internet truly possible? I will argue: no it’s not. We’re bombarded with constant thinkpieces about various social crises—young people are sad and lonely; culture is empty or flat or simply too fragmented to incubate any shared meaning; algorithms determine too much of what we see. Some of these essays even note our failure to hang out. The internet is almost always an implicit or explicit villain in such writing but it’s increasingly tedious to keep blaming it for our cultural woes.

Perhaps we could frame the problem differently: The internet doesn’t have to demand our presence the way it currently does. It shouldn’t be something we have to look at all time. If it wasn’t, maybe we’d finally be free to hang out.

[…]

How many hours have been stolen from us? With TV, we at least understood ourselves to be passive observers of the screen, but the interactive nature of the internet fostered the illusion that message boards, Discord servers, and Twitter feeds are digital “places” where we can in fact hang out. If nothing else, this is a trick that gets us to stick around longer. A better analogy for online interaction, however, is sitting down to write a letter to a friend—something no one ever mistook for face-to-face interaction—with the letters going back and forth so rapidly that they start to resemble a real-time conversation, like a pixelated image. Despite all the spatial metaphors in which its interfaces have been dressed up, the internet is not a place.

Source: Kneeling Bus

Image: Wesley Tingey

'Wet streets cause rain' stories

First things first, the George Orwell quotation below is spurious, as the author of this article, David Cain, points out at the end of it. The point is that it sounds plausible, so we take it on trust. It confirms our worldview.

We live in a web of belief, as W.V. Quine put it, meaning that we easily accept things that confirm our core beliefs. And then, with beliefs that are more peripheral, we pick them up and put them down at no great cost. Finding out that the capital of Burkina Faso is Ouagadougou and not Bobo-Dioulasso makes no practical difference to my life. It would make a huge difference to the residents of either city, however.

I don’t like misinformation, and I think we’re in quite a dangerous time in terms of how it might affect democratic elections. However, it has always been so. Gossip, rumour, and straight-up lies have swayed human history. The thing is that, just as we are able to refute poor journalism and false statements on social networks about issues we know a lot about, so we need to be a bit skeptical about things outside of our immediate knowledge.

After all, as Cain quotes Michael Crichton as saying, there are plenty of ‘wet streets cause rain’ stories out there, getting causality exactly backwards — intentionally or otherwise.

Consider the possibility that most of the information being passed around, on whatever topic, is bad information, even where there’s no intentional deception. As George Orwell said, “The most fundamental mistake of man is that he thinks he knows what’s going on. Nobody knows what’s going on.”

Technology may have made this state of affairs inevitable. Today, the vast majority of a person’s worldview is assembled from second-hand sources, not from their own experience. Second-hand knowledge, from “reliable” sources or not, usually functions as hearsay – if it seems true, it is immediately incorporated into one’s worldview, usually without any attempt to substantiate it. Most of what you “know” is just something you heard somewhere.

[…]

It makes perfect sense, if you think about it, that reporting is so reliably unreliable. Why do we expect reporters to learn about a suddenly newsworthy situation, gather information about it under deadline, then confidently explain the subject to the rest of the nation after having known about it for all of a week? People form their entire worldviews out of this stuff.

[…]

People do know things though. We have airplanes and phones and spaceships. Clearly somebody knows something. Human beings can be reliable sources of knowledge, but only about small slivers of the whole of what’s going on. They know things because they deal with their sliver every day, and they’re personally invested in how well they know their sliver, which gives them constant feedback on the quality of their beliefs.

Source: Raptitude

Dividers tell the story of how they’ve renovated their houses, becoming architects along the way. Continuers tell the story of an august property that will remain itself regardless of what gets built.

This long article in The New Yorker is based around the author wondering whether the fun he’s had playing with his four-year-old will be remembered by his son when he grows up.

Wondering whether you are the same person at the start and end of your life was a central theme of a ‘Mind, Brain, and Personal Identity’ course I did as part of my Philosophy degree around 22 years ago. I still think about it. On the one hand is the Ship of Theseus argument, where you can one-by-one replace all of the planks of a ship, but it’s still the same ship. If you believe it’s the same ship, and believe that you’re the same person as when you were younger, then the author of this article would call you a ‘Continuer’.

On the other hand, you might think that there are important differences between the person you are now and the person you were when you were younger. If, for example, the general can’t remember ‘going over the top’ as a young man, despite still having the medal to prove it, is he the same person? If you don’t think so, then perhaps you are a ‘Divider’.

I don’t consider it so clear-cut. We tell stories about ourselves and others, and these shape how we think. For example, going to therapy five years ago helped me ‘remove the mask’ and reconsider who I am. That involved reframing some of the experiences in my life and realising that I am this kind of person rather than that kind of person.

It’s absolutely fine to have seasons in your life. In fact, I’m pretty sure there’s some ancient wisdom to that effect?

Are we the same people at four that we will be at twenty-four, forty-four, or seventy-four? Or will we change substantially through time? Is the fix already in, or will our stories have surprising twists and turns? Some people feel that they’ve altered profoundly through the years, and to them the past seems like a foreign country, characterized by peculiar customs, values, and tastes. (Those boyfriends! That music! Those outfits!) But others have a strong sense of connection with their younger selves, and for them the past remains a home. My mother-in-law, who lives not far from her parents’ house in the same town where she grew up, insists that she is the same as she’s always been, and recalls with fresh indignation her sixth birthday, when she was promised a pony but didn’t get one. Her brother holds the opposite view: he looks back on several distinct epochs in his life, each with its own set of attitudes, circumstances, and friends. “I’ve walked through many doorways,” he’s told me. I feel this way, too, although most people who know me well say that I’ve been the same person forever.

[…]

The philosopher Galen Strawson believes that some people are simply more “episodic” than others; they’re fine living day to day, without regard to the broader plot arc. “I’m somewhere down towards the episodic end of this spectrum,” Strawson writes in an essay called “The Sense of the Self.” “I have no sense of my life as a narrative with form, and little interest in my own past.”

[…]

John Stuart Mill once wrote that a young person is like “a tree, which requires to grow and develop itself on all sides, according to the tendency of the inward forces which make it a living thing.” The image suggests a generalized spreading out and reaching up, which is bound to be affected by soil and climate, and might be aided by a little judicious pruning here and there.

Source: The New Yorker

Can’t access it? Try Pocket or Archive Buttons

Source: 2024 Drone Photo Awards Nominees

The iPhone effect, if it was ever real in the first place, is certainly not real now.

It’s announcement time at Apple’s WWDC. Apart from the attempt to rebrand AI as “Apple Intelligence”, I haven’t seen many people get very excited about it. MKBHD has an overview if you want to get into the details. I just use macOS without iCloud because everything works and my Mac Studio is super-fast.

Ryan Broderick has a word for Apple fanboys, who seem to think that everything Apple touches turns to gold. The Vision Pro doesn’t seem to have brought VR into the mainstream and, after the more innovative Steve Jobs era, the company seems happier to be a luxury brand that plays it relatively safe.

If you press Apple fanboys about their weird revisionist history, they usually pivot to the argument that while iOS’s marketshare has essentially remained flat for a decade, their competitors copy what they do and that trickles down into popular culture from there. Which I’m not even sure is true either. Android had mobile payments three years before Apple, had a smartwatch a year before, a smart speaker a year before, and launched a tablet around the same time as the iPad. We could go on and on here.

And, I should say, I don’t actually think Apple sees themselves as the great innovator their Gen X blogger diehards do. In the 2010s, they shifted comfortably from a visionary tastemaker, at least aesthetically, into something closer to an airport lounge or a country club for consumer technology. They’ll eventually have a version of the new thing you’ve heard about, once they can rebrand it as something uniquely theirs. It’s not VR, it’s “spatial computing,” it’s not AI, it’s “Apple Intelligence”. But they’re not going to shake the boat. They make efficiently-bundled software that’s easy to use (excluding iPadOS) and works well across their nice-looking and easy-to-use devices (excluding the iPad). Which is why Apple Intelligence is not going to be the revolution the AI industry has been hoping for. The same way the Vision Pro wasn’t. The iPhone effect, if it was ever real in the first place, is certainly not real now.

Source: Garbage Day

The latest Hardcore History just dropped

I could listen to Dan Carlin read the phone book all day, so the announcement that his latest multi-part (and multi-hour!) series for the Hardcore History podcast has started is great news!

So, after almost two decades of teasing it, we finally begin the Alexander the Great saga.

I have no idea how many parts it will turn out to be, but we are calling the series “Mania for Subjugation” and you can get the first installment HERE. (of course you can also auto-download it through your regular podcast app).

[…]

And what a story it is! My go-to example in any discussion about how truth is better than fiction. It is such a good tale and so mind blowing that more than 2,300 years after it happened our 21st century people still eagerly consume books, movies, television shows and podcasts about it. Alexander is one of the great apex predators of history, and he has become a metaphor for all sorts of Aesop fables-like morals-to-the-story about how power can corrupt and how too much ambition can be a poison.

Source: Look Behind You!