2024

1. Let people feel their feels.

2. Check your own emotions.

3. Talk to children as if they are people.

4. Don’t give advice. Not really.

5. Don’t relate.

6. Ask questions.

- Bound customer (today): Here, humans set the rules, and machines follow, executing purchases for specific items. This is seen in today’s smart devices and services like automated printer ink subscriptions.

- Adaptable customer (by 2026): Machines will co-lead with humans, making optimized decisions from a set of choices. This will be reflected in smart home systems that can choose energy providers.

- Autonomous customer (by 2036): The machine will take the lead, inferring needs and making purchases based on a complex understanding of rules, content, and preferences, such as AI personal assistants managing daily tasks.

Post-Holocene preferable future habitats

If someone asks me “what kind of future would you like to live in?” I’m going to just point them to this. It’s the work of Pascal Wicht, a systems thinker and strategic designer who specialises in tackling complex and ill-defined problems.

The dangers and problems with generative AI are many and well-documented. What I love about it is that all of a sudden we can quickly create things that we point to for inspiration and alternative futures. In this case, Wicht is experimenting with the Midjourney v6 Alpha, and there are many more images here.

Future Visualisations for Preferable Futures, using the MidJourney’s Generative Adversarial Networks.

I am in my third week of long Covid again. I can spend one or two hours per day on AI images and doing some writing. These images are part of what kept me motivated while mostly stuck in bed.

In this ongoing series, I continue to use the power of AI to explore a compelling question: What does a future look like where we successfully slow down and avert the looming abominations of collapse and extinction?

Source: Whispers & Giants

Image CC BY-NC Pascal Wicht

Being a good listener also means being a good talker

What an absolutely fantastic read this is. I’d encourage everyone to read it in its entirety, especially if you’re a parent. The list of things that the author, Molly Brodak, suggests we try out is:

I find #5 difficult, have gotten better at #4, and think that #3 is really, super important. I used to hate being talked to ‘differently’ as a child (compared to adults), and have noticed how much kids appreciated being talked to without being patronised.

I’m a child of a therapist. What that means is that I was expertly listened-to most of my life. And then, wow, I met the rest of the world.

It’s a good thing for our survival. It’s what makes this whole civilization thing possible, these linked minds. So why are so many people still so bad at listening?

One reason is this myth: that the good listener just listens. This egregious misunderstanding actually leads to a lot of bad listening, and I’ll tell you why: because a good listener is actually someone who is good at talking.

Source: Tomb Log

Image: DALL-E 3

AI agents as customers

I don’t often visit Medium other than when I’m writing a post for the WAO blog. When I’m there, it’s unlikely that any of the ‘recommended’ articles grab my attention. But this one did.

Although it seems ‘odd’, when you come to think of it, the notion of businesses selling to machines as well as humans makes complete sense. It won’t be long until, for better or worse, many of us will have AI agents who act on our behalf. They will not only help us with routine tasks and give advice, but also make purchases for us.

Obviously, the entity behind this blog post, a “next-generation professional services company”, has an interest in this becoming a reality. But it seems plausible.

Below is a timeline that encapsulates this progression, providing a roadmap for navigating the impending shifts in the landscape of consumer behavior:

Source: Slalom Business

Image: DALL-E 3

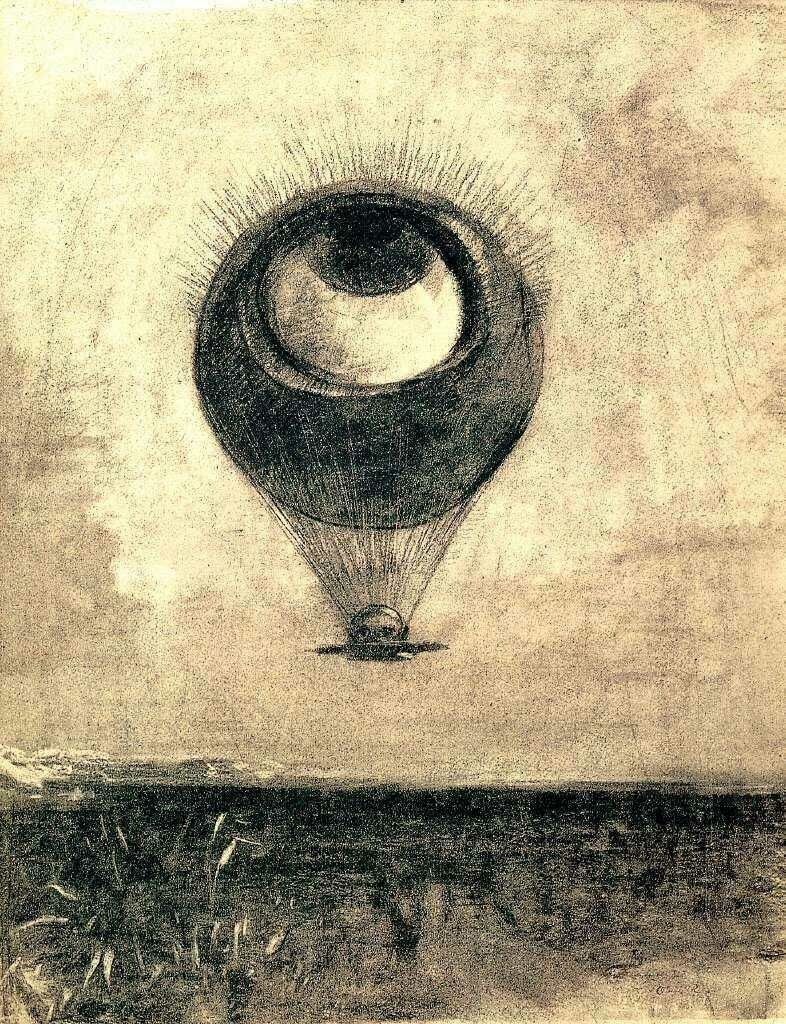

Ultravioleta

I’m not sure of the backstory to this drawing (‘Ultravioleta’) by Jon Juarez, but I don’t really care. It looks great, and so I’ve bought a print of it from their shop. They seem, from what I can tell, to have initially withdrawn from social media after companies such as OpenAI and Midjourney started using artists' work for their training data, but are now coming back.

Fediverse: @harriorrihar@mas.to

Shop: Lama

Language is probably less than you think it is

This is a great post by Jennifer Moore, whose main point is about using AI for software development, but who along the way provides three paragraphs which get to the nub of why tools such as ChatGPT seem somewhat magical.

As Moore points out, large language models aren’t aware. They model things based on statistical probability. To my mind, it’s not so different from when my daughter was doing phonics and learning to recognise the construction of words and the probability of how words new to her would be spelled.
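To make "modelling based on statistical probability" a bit more concrete, here is a toy sketch of my own, not anything from Moore's post. It counts which word follows which in a tiny made-up corpus and then samples from those counts. Real large language models use neural networks with billions of parameters rather than a lookup table, so treat the function names and the corpus as illustrative assumptions only.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count how often each token is followed by each other token."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts


def next_token_probabilities(counts, token):
    """Turn the raw follow-on counts for one token into probabilities."""
    followers = counts.get(token, Counter())
    total = sum(followers.values())
    return {word: n / total for word, n in followers.items()}


def generate(counts, start, length, seed=0):
    """Sample up to `length` tokens, each chosen by how often it followed the last."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        followers = counts.get(output[-1])
        if not followers:
            break  # nothing ever followed this token in the corpus
        words = list(followers)
        weights = [followers[w] for w in words]
        output.append(rng.choices(words, weights=weights)[0])
    return " ".join(output)


# A made-up corpus, purely for illustration.
corpus = "it is raining cats and dogs . it is raining again . cats and dogs sleep ."
model = train_bigrams(corpus)

print(next_token_probabilities(model, "raining"))
print(generate(model, "it", 6))
```

Nothing in the table "knows" what rain or cats are. It only records how the tokens were used, which is the distinction Moore draws in the passage quoted below.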

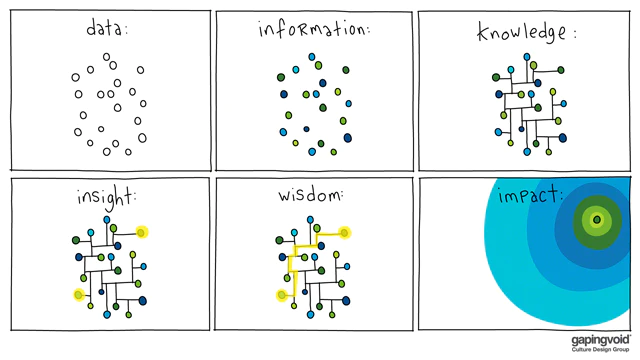

ChatGPT and the like are powered by large language models. Linguistics is certainly an interesting field, and we can learn a lot about ourselves and each other by studying it. But language itself is probably less than you think it is. Language is not comprehension, for example. It’s not feeling, or intent, or awareness. It’s just a system for communication. Our common lived experiences give us lots of examples that anything which can respond to and produce common language in a sensible-enough way must be intelligent. But that’s because only other people have ever been able to do that before. It’s actually an incredible leap to assume, based on nothing else, that a machine which does the same thing is also intelligent. It’s much more reasonable to question whether the link we assume exists between language and intelligence actually exists. Certainly, we should wonder if the two are as tightly coupled as we thought.

That coupling seems even more improbable when you consider what a language model does, and—more importantly—doesn’t consist of. A language model is a statistical model of probability relationships between linguistic tokens. It’s not quite this simple, but those tokens can be thought of as words. They might also be multi-word constructs, like names or idioms. You might find “raining cats and dogs” in a large language model, for instance. But you also might not. The model might reproduce that idiom based on probability factors instead. The relationships between these tokens span a large number of parameters. In fact, that’s much of what’s being referenced when we call a model large. Those parameters represent grammar rules, stylistic patterns, and literally millions of other things.

What those parameters don’t represent is anything like knowledge or understanding. That’s just not what LLMs do. The model doesn’t know what those tokens mean. I want to say it only knows how they’re used, but even that is overstating the case, because it doesn’t know things. It models how those tokens are used. When the model works on a token like “Jennifer”, there are parameters and classifications that capture what we would recognize as things like the fact that it’s a name, it has a degree of formality, it’s feminine coded, it’s common, and so on. But the model doesn’t know, or understand, or comprehend anything about that data any more than a spreadsheet containing the same information would understand it.

Source: Jennifer++

Image: gapingvoid

Humans and AI-generated news

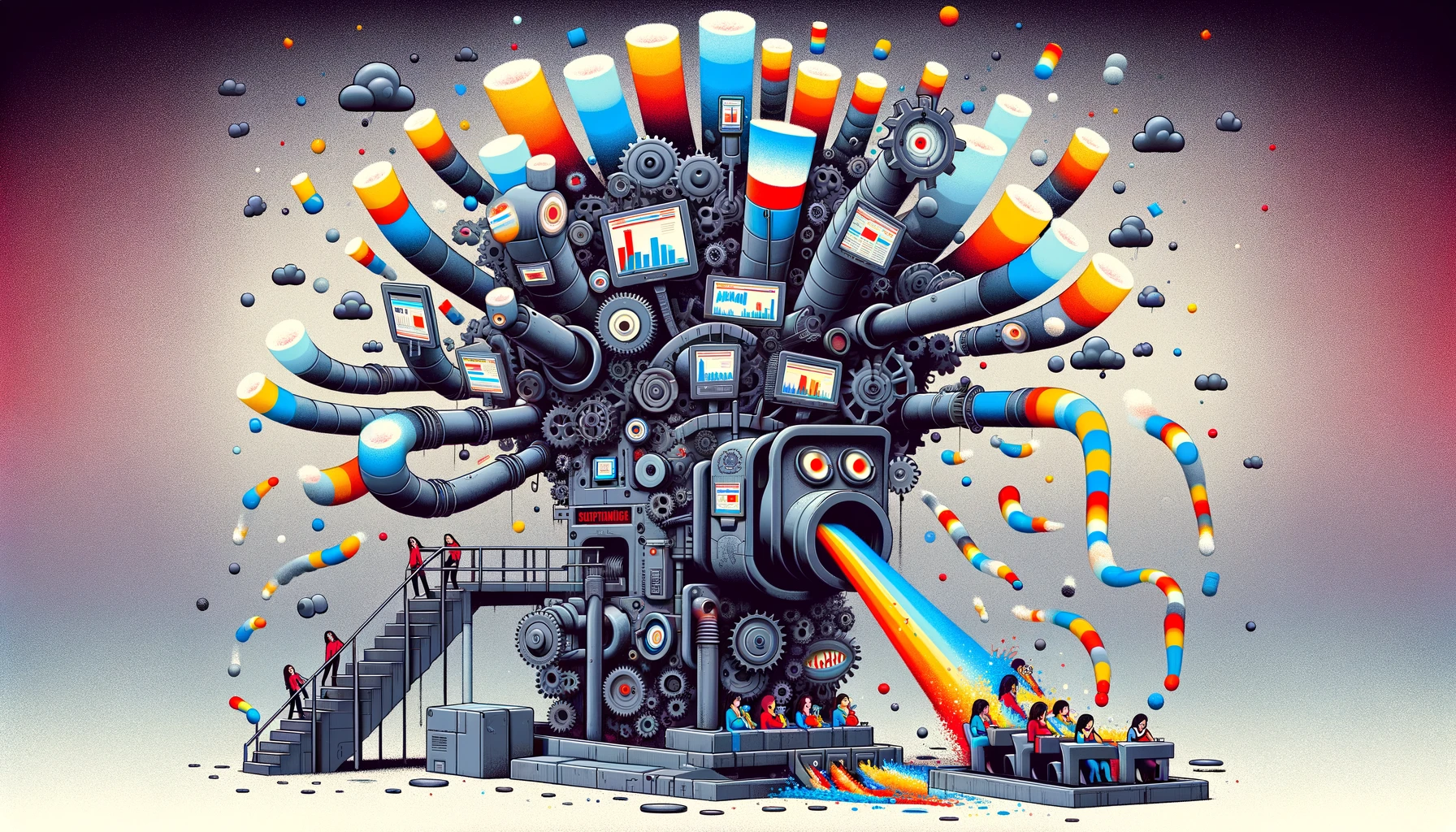

The endgame of news, as far as Big Tech is concerned, is, I guess, just-in-time created content for ‘users’ (specified in terms of ad categories) who then react in particular ways. That could be purchasing a thing, but it also could be outrage, meaning more time on site, more engagement, and more shareholder value.

Like Ryan Broderick, I have some faith that humans will get sick of AI-generated content, just as they got sick of videos and list posts. But I also have this niggling doubt: the tendency is to see AI only through the lens of tools such as ChatGPT. That’s not what the AI of the future is likely to resemble, at all.

Adweek broke a story this week that Google will begin paying publications to use an unreleased generative-AI tool to produce content. The details are scarce, but it seems like Google is largely approaching small publishers and paying them an annual “five-figure sum”. Good lord, that’s low.

Adweek also notes that the publishers don’t have to publicly acknowledge they’re using AI-generated copy and the, presumably, larger news organizations the AI is scraping from won’t be notified. As tech critic Brian Merchant succinctly put it, “The nightmare begins — Google is incentivizing the production of AI-generated slop.”

Google told Engadget that the program is not meant to “replace the essential role journalists have in reporting, creating, and fact-checking their articles,” but it’s also impossible to imagine how it won’t, at the very least, create a layer of garbage above or below human-produced information surfaced by Google. Engadget also, astutely, compared it to Facebook pushing publishers towards live video in the mid-2010s.

[…]

Companies like Google or OpenAI don’t have to even offer any traffic to entice publishers to start using generative-AI. They can offer them glorified gift cards and the promise of an executive’s dream newsroom: one without any journalists in it. But the AI news wire concept won’t really work because nothing ever works. For very long, at least. The only thing harder to please than journalists are readers. And I have endless faith in an online audience’s ability to lose interest. They got sick of lists, they got sick of Facebook-powered human interest social news stories, they got sick of tweet roundups, and, soon, they will get sick of “news” entirely once AI finally strips it of all its novelty and personality. And when the next pivot happens — and it will — I, for one, am betting humans figure out how to adapt faster than machines.

Source: Garbage Day

Image: DALL-E 3

Elegant media consumption

Jay Springett shares some media consumption figures. It blows my mind how much time people spend consuming media rather than making stuff.

I was hanging out with a friend the other week and we were talking about our ‘little hobbies’ as we called them. All the things that we’re interested in. Our niches that we nerd out about which aren’t the sort of thing that we can talk to people about at any great length.

We got to wondering about how we spend our time, and what other people spend their time doing. We had a big conversation with our other friends at the table with us about what they do with their time. Their answers weren’t all that far away from these stats I’ve just Googled:

Did you know in the UK in January 2024, adults watched an average of 2 hours 31 minutes a day of linear TV?

Meanwhile, a Pinterest user spends about 14 minutes on the platform daily, BUT “83% of weekly Pinterest users report making a purchase based on content they encountered”

The average podcast listener spends an hour a day listening to podcasts.

Using a different metric, an average audiobook enjoyer spends an average of 2 hours 19 minutes every day.

In Q3 of 2023 the average amount of time spent on social media a day was 2 hours and 23 minutes? 1 in 3 internet minutes spent online can be attributed to social media platforms.

I like this combined more holistic statistic.

According to a study on media consumption in the United Kingdom, the average time spent consuming traditional media is consistently decreasing while people spend more time using digital media. In 2023, it is estimated that people in the United Kingdom will spend four hours and one minute using traditional media, while the average daily time spent with digital media is predicted to reach six hours and nine minutes.

Source: thejaymo

Image: DALL-E 3

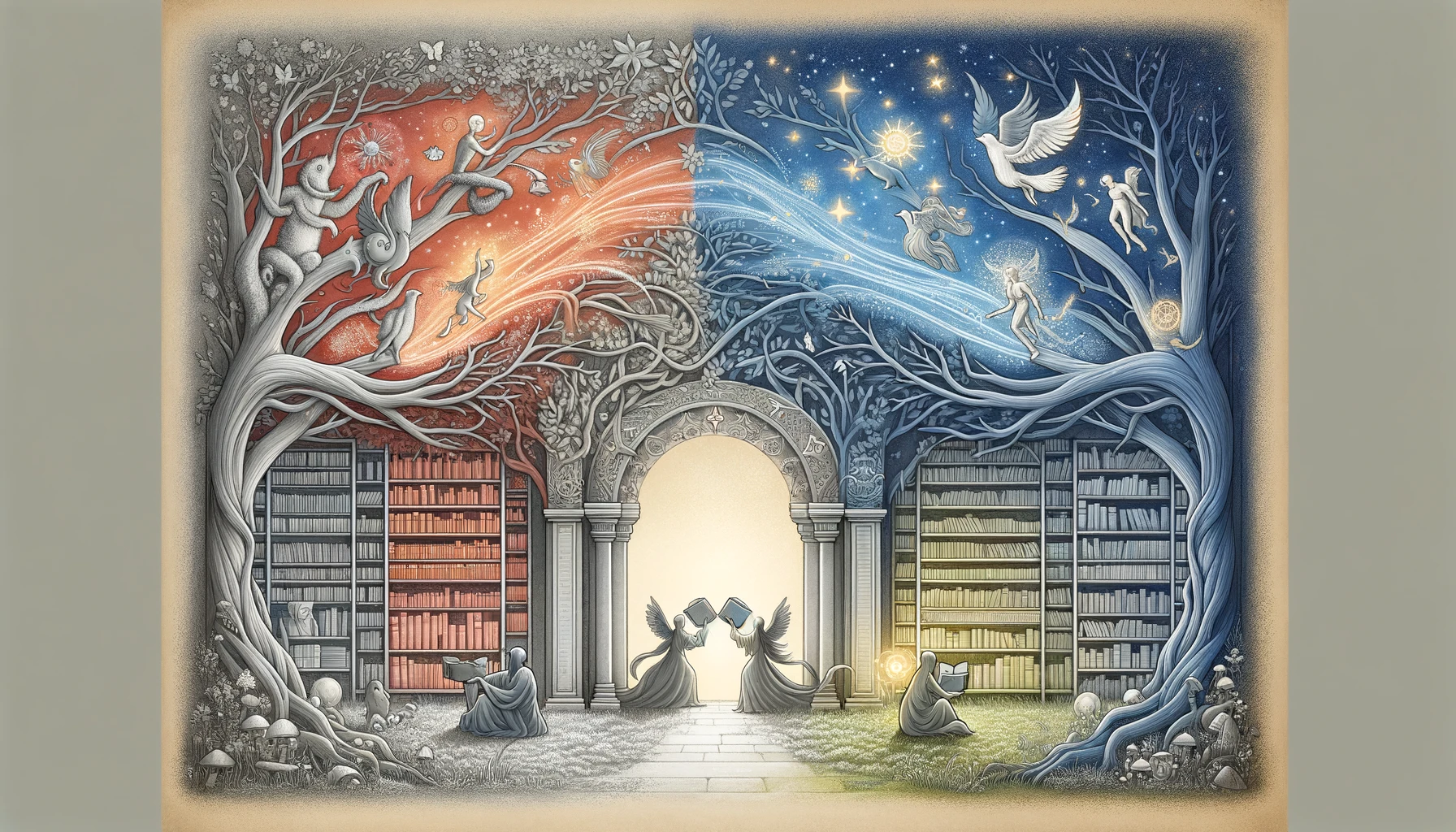

Philosophy and folklore

I love this piece in Aeon from Abigail Tulenko, who argues that folklore and philosophy share a common purpose in challenging us to think deeply about life’s big questions. Her essay is essentially a critique of academic philosophy’s exclusivity and she calls for a broader, more inclusive approach that embraces… folklore.

Tulenko suggests that folktales, with all of their richness and diversity, offer fresh perspectives and can invigorate philosophical discussions by incorporating a wider range of experiences and ideas. By integrating folklore into philosophical inquiry, she suggests, there is the potential to democratise the field, making it more accessible and engaging while also helping to break down academic barriers and encourage interdisciplinary collaboration.

I’m all for it. Although it’s problematic to talk about Russian novels and culture at the moment, there are some tales from that country which are deeply philosophical in nature. I’d also include things like Dostoevsky’s Crime and Punishment as a story from which philosophers can glean insights.

The Hungarian folktale Pretty Maid Ibronka terrified and tantalised me as a child. In the story, the young Ibronka must tie herself to the devil with string in order to discover important truths. These days, as a PhD student in philosophy, I sometimes worry I’ve done the same. I still believe in philosophy’s capacity to seek truth, but I’m conscious that I’ve tethered myself to an academic heritage plagued by formidable demons.

[…]

I propose that one avenue forward is to travel backward into childhood – to stories like Ibronka’s. Folklore is an overlooked repository of philosophical thinking from voices outside the traditional canon. As such, it provides a model for new approaches that are directly responsive to the problems facing academic philosophy today. If, like Ibronka, we find ourselves tied to the devil, one way to disentangle ourselves may be to spin a tale.

Folklore originated and developed orally. It has long flourished beyond the elite, largely male, literate classes. Anyone with a story to tell and a friend, child or grandchild to listen, can originate a folktale. At the risk of stating the obvious, the ‘folk’ are the heart of folklore. Women, in particular, have historically been folklore’s primary originators and preservers. In From the Beast to the Blonde (1995), the historian Marina Warner writes that ‘the predominant pattern reveals older women of a lower status handing on the material to younger people’.

[…]

To answer that question [folklore may be inclusive, but is it philosophy?], one would need at least a loose definition of philosophy. This is daunting to provide but, if pressed, I’d turn to Aristotle, whose Metaphysics offers a hint: ‘it is owing to their wonder that men both now begin, and at first began, to philosophise.’ In my view, philosophy is a mode of wondrous engagement, a practice that can be exercised in academic papers, in theological texts, in stories, in prayer, in dinner-table conversations, in silent reflection, and in action. It is this sense of wonder that draws us to penetrate beyond face-value appearances and look at reality anew.

[…] Beyond ethics, folklore touches all the branches of philosophy. With regard to its metaphysical import, Buddhist folklore provides a striking example. When dharma – roughly, the ultimate nature of reality – ‘is internalised, it is most naturally taught in the form of folk stories: the jataka tales in classical Buddhism, the koans in Zen,’ writes the Zen teacher Robert Aitken Roshi. The philosophers Jing Huang and Jonardon Ganeri offer a fascinating philosophical analysis of a Buddhist folktale seemingly dating back to the 3rd century BCE, which they’ve translated as ‘Is This Me?’ They argue that the tale constructs a similar metaphysical dilemma to Plutarch’s ‘ship of Theseus’ thought-experiment, prompting us to question the nature of personal identity.

Source: Aeon

Image: DALL-E 3

3 issues with global mapping of micro-credentials

If you’ll excuse me for a brief rant, I have three, nested, issues with this ‘global mapping initiative’ from Credential Engine’s Credential Transparency Initiative. The first is situating micro-credentials as “innovative, stackable credentials that incrementally document what a person knows and can do”. No, micro-credentials, with or without the hyphen, are a higher education re-invention of Open Badges, and often conflate the container (i.e. the course) with the method of assessment (i.e. the credential).

Second, the whole point of digital credentials such as Open Badges is to enable the recognition of a much wider range of things than formal education usually provides, not to double-down on the existing gatekeepers. This was the point of the Keep Badges Weird community, which has morphed into Open Recognition is for Everybody (ORE).

Third, although I recognise the value of approaches such as the Bologna Process, initiatives which map different schemas against one another inevitably flatten and homogenise localised understandings and ways of doing things. It’s the educational equivalent of Starbucks colonising cities around the world.

So I reject the idea at the heart of this, which seems to do little other than prop up higher education institutions which refuse to think outside of the very narrow corner into which they have painted themselves by capitulating to neoliberalism. Credentials aren’t “less portable” because there is no single standardised definition. That’s a non sequitur. If you want a better approach to all this, which might be less ‘efficient’ for institutions, but which is more valuable for individuals, check out Using Open Recognition to Map Real-World Skills and Attributes.

Because micro-credentials have different definitions in different places and contexts, they are less portable, because it’s harder to interpret and apply them consistently, accurately, and efficiently.

The Global Micro-Credential Schema Mapping project helps to address this issue by taking different schemas and frameworks for defining micro-credentials and lining them up against each other so that they can be compared. Schema mapping involves crosswalking the defined terms that are used in data structures. The micro-credential mapping does not involve any personally identifiable information about people or the individual credentials that are issued to them– the mapping is done across metadata structures. This project has been initially scoped to include schema terms defining the micro-credential owner or offeror, issuer, assertion, and claim.

Source: Credential Engine

Image: DALL-E 3

Perhaps stop caring about what other people think (of you)

In this post, Mark Manson, author of _The Subtle Art of Not Giving a F*ck_, outlines ‘5 Life-Changing Levels of Not Giving a Fuck’. It’s not for those with an aversion to profanity but, having read his book, what I like about Manson’s work is that he’s essentially applying some of the lessons of Stoic philosophy to modern life. An alternative might be Derren Brown’s book Happy: Why more or less everything is absolutely fine.

Both books are a reaction to the self-help industry, which doesn’t really deal with the root cause of suffering in the world. As the first lines of Epictetus' Enchiridion note: “Some things are in our control and others not. Things in our control are opinion, pursuit, desire, aversion, and, in a word, whatever are our own actions. Things not in our control are body, property, reputation, command, and, in one word, whatever are not our own actions.”

Manson’s post is a riff on this, outlining five ‘levels’ of, essentially, getting over yourself. There’s a video, if you prefer, but I’m just going to pull out a couple of parts from the post which I think are actually most life-changing if you can internalise them. At the end of the day, unless you’re in a coercive relationship of some description, the only person who can stop you doing something is… yourself.

The big breakthrough for most people comes when they finally drop the performance and embrace authenticity in their relationships. When they realize no matter how well they perform, they’re eventually gonna be rejected by someone, they might as well get rejected for who they already are.

When you start approaching relationships with authenticity, by being unapologetic about who you are and living with the results, you realize you don’t have to wait around for people to choose you, you can also choose them.

[…]

Look, you and everyone you know are gonna die one day. So what the fuck are you waiting for? That goal you have, that dream you keep to yourself, that person you wanna meet. What are you letting stop you? Go do it.

Source: Mark Manson

Image: DALL-E 3

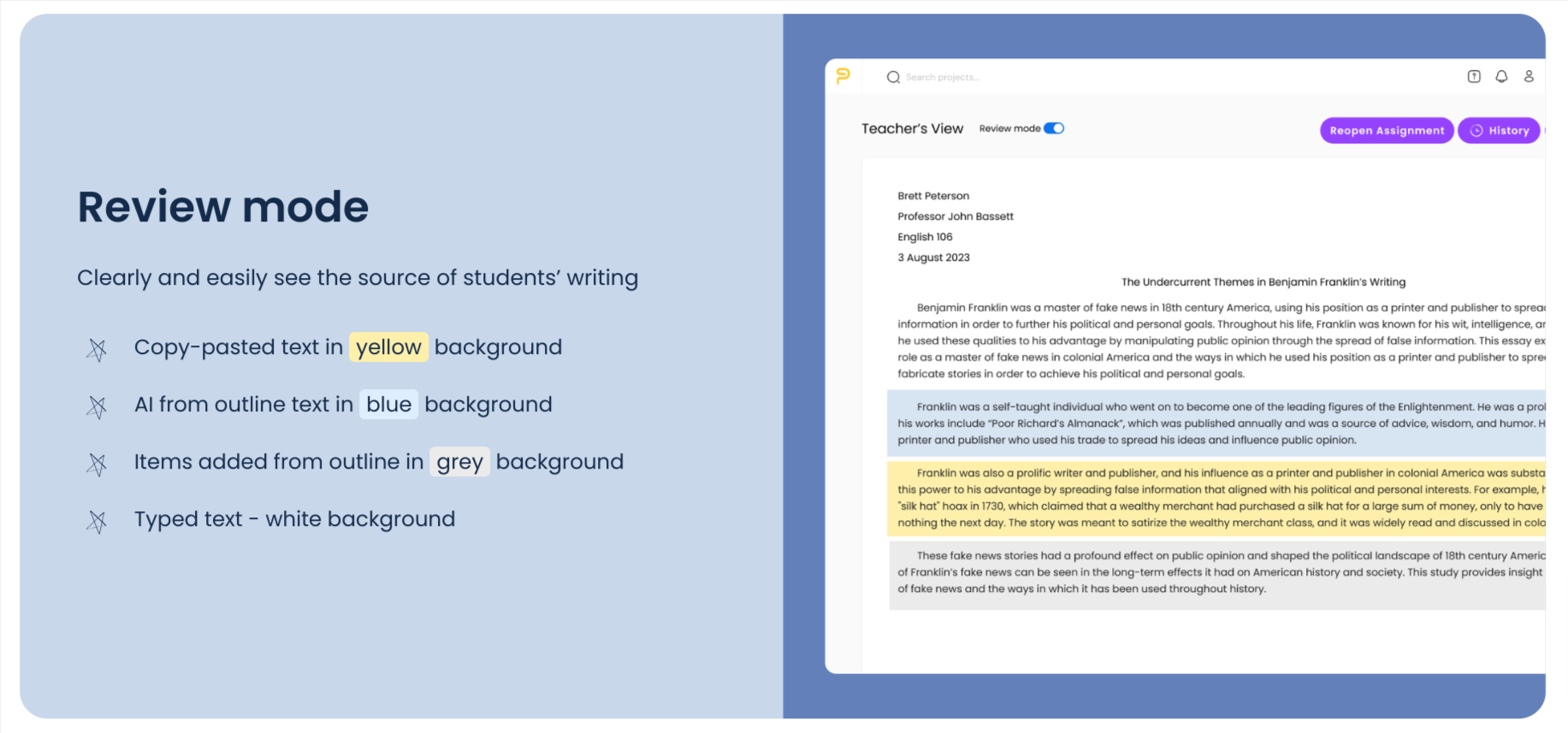

Educators should demand better than 'semi-proctored writing environments'

My longer rant about the whole formal education system of which this is a symptom will have to wait for another day, but this (via [Stephen Downes](https://www.downes.ca/cgi-bin/page.cgi?post=76308)) makes me despair a little. Noting that "it is essentially impossible for one machine to determine if a piece of writing was produced by another machine" one company has decided to create a "semi-proctored writing tool" to "protect academic integrity".

Generative AI is disruptive, for sure. But as I mentioned in my recent appearance on the Artificiality podcast, it’s disruptive to a way of doing assessment that makes things easier for educators. Writing essays to prove that you understand something is an approach which was invented a long time ago. We can do much better, including using technology to provide much more individualised feedback, and allowing students to use technology to apply what they learn much more closely to their own practice.

Update: check out the AI Pedagogy project from metaLAB at Harvard

PowerNotes built Composer in response to feedback from educators who wanted a word processor that could protect academic integrity as AI is being integrated into existing Microsoft and Google products. It is essentially impossible for one machine to determine if a piece of writing was produced by another machine, so PowerNotes takes a different approach by making it easier to use AI ethically. For example, because AI is integrated into and research content is stored in PowerNotes, copying and pasting information from another source should be very limited and will be flagged by Composer.

If a teacher or manager does suspect the inappropriate use of AI, PowerNotes+ and Composer help shift the conversation from accusation to evidence by providing a clear trail of every action a writer has taken and where things came from. Putting clear parameters on the AI-plagiarism conversation keeps the focus on the process of developing an idea into a completed paper or presentation.

Source: eSchool News

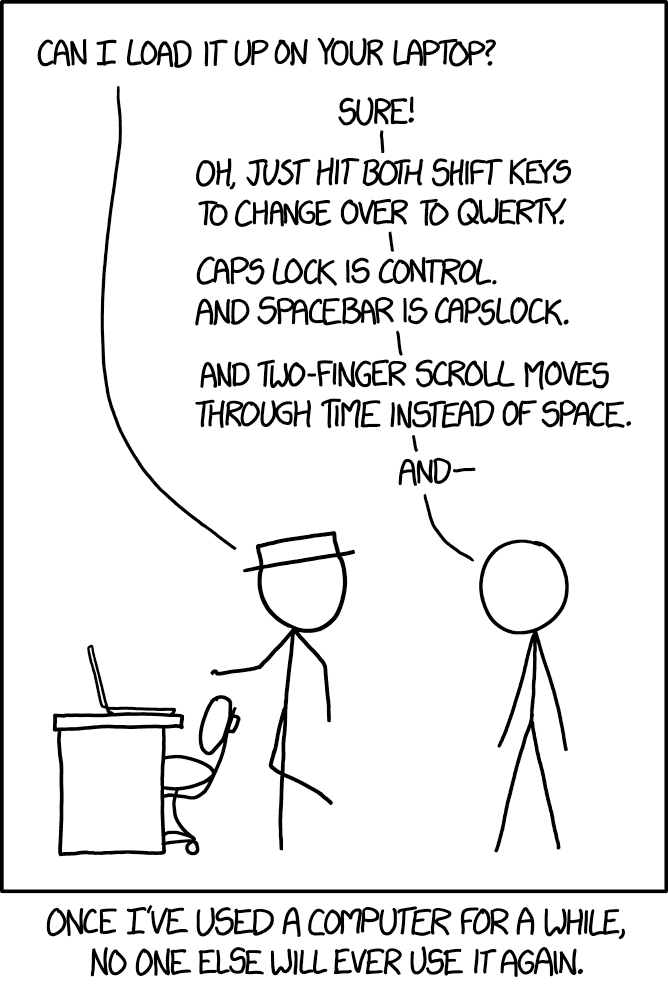

The perils of over-customising your setup

Until about a decade ago, I used to heavily customise my digital working environment. I’d have custom keyboard shortcuts, and automations, and all kinds of things. What I learned was that a) these things take time to maintain, and b) using computers other than your own becomes that much harder. I think the turning point was reading Clay Shirky say “current optimization is long-term anachronism”.

So, these days, I run macOS on my desktop with pretty much the out-of-the-box configuration. My laptop runs ChromeOS Flex. I think if I went back to Linux, I’d probably go for something like Fedora Silverblue which is an immutable system like ChromeOS. In other words, the system files are read-only which makes for an extremely stable system.

One other point which might not work for everyone, but works for me. It’s been seven years since I ditched my cloud-based password manager for a deterministic one. Although my passwords don’t auto-fill, it’s easy for me to access them anywhere, on any device. And they’re not stored anywhere meaning there’s no single point of failure.
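For anyone wondering how passwords can be available everywhere without being stored anywhere, the general idea is that each password is re-derived on demand from a master secret plus details of the site. The sketch below is a minimal illustration of that idea using Python's standard library. It is not the algorithm used by the tool I rely on or by any particular product, and the function name, parameters, separator, and iteration count are all assumptions of mine, so please don't use it for real accounts.

```python
import base64
import hashlib


def derive_password(master_secret, site, username, counter=1, length=20):
    """Re-derive a site password from its inputs; nothing is written to disk.

    Hypothetical illustration only: the '|' separator, the iteration count
    and the output encoding are arbitrary choices, not a published scheme.
    """
    # The site details act as the salt, so every site gets a different password.
    salt = f"{site}|{username}|{counter}".encode("utf-8")
    key = hashlib.pbkdf2_hmac(
        "sha256",
        master_secret.encode("utf-8"),
        salt,
        200_000,  # deliberately slow, to make guessing the master secret costly
        dklen=32,
    )
    # Encode the derived bytes as text and trim to the requested length.
    return base64.urlsafe_b64encode(key).decode("ascii")[:length]


first = derive_password("correct horse battery staple", "example.com", "alice")
again = derive_password("correct horse battery staple", "example.com", "alice")
print(first == again)  # same inputs, same password, on any device
```

The trade-off in a design like this is that changing one password means changing an input (here, bumping `counter`), and the master secret becomes the thing you must never lose or leak.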

Source: xkcd

Systems thinking and the FENCED mnemonic

I’m currently studying towards my first module of a planned MSc in Systems Thinking through the Open University. I’ve written a fair number of posts on my personal blog.

It can be difficult to explain to other people what systems thinking is actually about in a succinct way, so I appreciated this post (via Andrew Curry) which not only provides a handy definition, but also a mnemonic for going about doing it.

An important thing which is missing from this is the introspection required to first reflect upon one’s tradition of understanding and to deconstruct it. But helping people to understand that systems thinking isn’t a ‘technique’ is also a difficult thing to do.

A system is the interaction of relationships, interactions, and resources in a defined context. Systems are not merely the sum of their parts; they are the product of the interactions among these parts. Importantly, social systems are not isolated entities; they are interconnected and subjectively constructed, defined by the boundaries we establish to understand and influence them.

Systems thinking, then, is an approach to solving problems in complex systems that looks at the interconnectedness of things to achieve a particular goal.

[…]

Systems thinking is helpful when addressing complex, dynamic, and generative social challenges. This approach is necessary when there is no definitive statement of the problem because the problem manifests differently depending on where one is situated in that system, which implies there is no objectively right answer, and the process of solving the issue involves diverse stakeholders with different roles. Systems thinking enables us to dig deeper into the root causes of these problems, making it more effective for social change initiatives.

Given the importance of defining and drawing the boundaries of the systems of our intervention, the acrostic “FENCED” captures the six systems transformational principles of how to apply systems thinking in driving social change.

F - frame the challenge as a shared endeavour

E - establish a diverse convening group

N - nudge inner and outer work

C - centre an appreciation of complexity

E - embrace conflict and connection, chaos and order

D - develop innovative solutions that can be tested and scaled.

Source: Reos Partners

Image: DALL-E 3

The war on the URL

A typically jargon-filled but nevertheless insightful post by Venkatesh Rao. This one discusses the ‘war’ on the URL, something that Rao quite rightly points out is a “vulnerability of the commons to outsiders problem” rather than a “tragedy of the commons” problem.

Literacy around URLs is extremely low, especially given the amount of tracking spam appended on the end these days. Although browser extensions and some browsers themselves can strip this, it’s actually worth knowing what has been added. By distrusting all URLs, and forcing people into an app-per-platform experience, we degrade the web, increase surveillance, and make it ever-harder to create the software commons.
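If you want to see what has been appended to a link, the query string is easy to pick apart yourself. Here is a small sketch using Python's standard `urllib.parse` module that separates the readable part of a URL from its tracking parameters and reports both. The list of parameter names is a short, illustrative selection of common ones rather than anything exhaustive, and the helper names are my own.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Illustrative, non-exhaustive examples of common tracking parameters.
TRACKING_PREFIXES = ("utm_",)
TRACKING_NAMES = {"fbclid", "gclid", "mc_eid"}


def is_tracking(name):
    """Return True if a query parameter name looks like a tracking parameter."""
    name = name.lower()
    return name in TRACKING_NAMES or name.startswith(TRACKING_PREFIXES)


def split_tracking(url):
    """Return (clean_url, removed), where removed lists the tracking pairs."""
    parts = urlsplit(url)
    pairs = parse_qsl(parts.query, keep_blank_values=True)
    kept = [(k, v) for k, v in pairs if not is_tracking(k)]
    removed = [(k, v) for k, v in pairs if is_tracking(k)]
    clean = urlunsplit(parts._replace(query=urlencode(kept)))
    return clean, removed


clean, removed = split_tracking(
    "https://example.com/post?id=42&utm_source=newsletter&fbclid=abc123"
)
print(clean)    # the link you would actually want to share
print(removed)  # what was added for tracking
```

Returning the removed parameters, rather than silently discarding them, is the point here: you get to see what was riding along with the link.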

The disingenuous philosophy in support of this war is the idea that URLs are somehow dangerous and ugly glimpses of a naked, bare-metal protocol that innocent users must be paternalistically protected from by benevolent and beautiful products. The truth is, when you hide or compromise the naked hyperlink, you expropriate power and agency from a thriving commons. Sure, aging grandpas may have some trouble with the concept but that’s true of everything, including the friendliest geriatric experiences (GXes). My grandfather handled phone numbers and zip codes fine. URLs aren’t much more demanding and vastly more empowering to be able to manipulate directly as a user. Similarly, accessibility considerations are a disingenuous excuse for a war on hyperlinks.

A useful way to think about this is the interaction of the Hypertext Experience (HX) with Josh Stark’s notion of a Trust Experience (TX), which needs to be extended beyond the high-financial-stakes blockchain context he focuses on, to low-stakes everyday browsing. We all agree that the TX of the web has broken and it’s now a Dark Forest. The median random link click now takes you to danger, not serendipitous discovery. This is not entirely the fault of platform corps. We all contributed. And there really is a world of scammers, trolls, phishers, spammers, spies, stalkers, and thieves out there. I’m not proposing to civilize the Dark Forest so we don’t need to protect ourselves from it. I merely don’t want the protection solution to be worse than the problem. Or worse, end up in a “you now have two problems” situation where the HX is degraded with no security benefits, or even degraded security.

[…]

There is also the retreat from pURLs (pretty URLs) to ugly URLs (uURLs) with enormous strings of gobbledygook attached to readable domain-name-stemmed base URLs, mostly meant for tracking, not HX enhancement (in fact uURLs are a dark HX pattern/feature if you’re Google or Twitter). Even when you can figure out how to copy and paste links (in 10 easy steps!), you’re forced to edit them for both aesthetics and character-length reasons. And this is of course even harder on mobile, which suits app-enclosure patterns just fine. In this arms race for control of the HX, we users have resorted to cutting and pasting text itself, creating patterns of useless redundancy, transcription errors, and canonicity loss (when transclusion is now a technically tractable canonicity-preserving alternative). Or worse, screenshots (and idiotic screenshot essays that need OCR or AI help to interact with) that horribly degrade accessibility and create the added overhead of creating alt text (which will no doubt add even more AI for a problem that shouldn’t exist to begin with).

There is a general pattern here: Just like comparable privately owned products and services, public commons and protocols of course have their flaws and limitations, and need innovation and stewardship to improve and evolve. But if you’re fundamentally hostile to the very existence of commons goods and services, the slightest flaw becomes an attack surface and justification to destroy the whole thing. It’s not a tragedy of the commons problem created by participants in it; it’s a vulnerability of the commons to outsiders problem. A technical warfare problem rather than a socio-political problem.

Source: Ribbonfarm

Image: DALL-E 3

Educators in an AI-generated world

Helen Beetham comments on OpenAI’s Sora AI video generating engine in relation to education. She makes three fantastic points: first, that pivoting an assessment to a different medium doesn’t make for a different assignment; second, that ‘spot how the AI generated video is incorrect’ is a cute end-of-term quiz, not the syllabus; third, that auto-grading assignments which are auto-generated is a waste of everyone’s time.

Something for educators to ponder, for sure.

(My thesis supervisor, Steve Higgins, used to talk about technologies that ‘increase the teacher bubble’ such as interactive whiteboards. I think part of the problem with AI is that it bursts the assessment bubble.)

Only five minutes ago, educators were being urged to get around student use of synthetic text by setting more ‘innovative’ assignments, such as videos and presentations. Some of us pointed out that this would work for about five minutes, and here we are. The medium is not the assignment. The assignment is the work of its production. This is already enshrined in many practices of university assessment, such as authentic assessment (a resource from Heriot Watt University), assessment for learning (a handy table from Queen Mary’s UL) and assessing the process of writing (often from teaching English as a second language, e.g. this summary from the British Council). The generative AI surge has prompted a further shift towards these methods: I’ve found some great resources recently at the University of Melbourne and the University of Monash.

But all these approaches require investment in teachers. Attending to students as meaning-making people, negotiating authentic assessments, giving feedback on process, and welcoming diversity: these are very difficult to ‘scale’. And in all but a few universities, funding per student is diminishing. So instead there is standardisation, and data-based methods to support standardisation, and this has turned assessment into a process that can easily be gamed. If the pressures on students to auto-produce assignments are matched by pressures on staff to auto-detect and auto-grade them, we might as well just have student generative technologies talk directly to institutional ones, and open a channel from student bank accounts directly into the accounts of big tech while universities extract a percentage for accreditation.

Source: imperfect offerings

Image: DALL-E 3

Random advice from Ryan

I know this is just another one of Ryan Holiday’s somewhat-rambling list posts, but there’s still some good advice in it. Here’s a couple of anecdotes and pieces of advice that resonated with me:

There is a story about the manager of Iron Maiden, one of the greatest metal bands of all time. At a dinner honoring the band, a young agent comes up to him and says how much he admires his skillful work in the music business. The manager looks at him and says, “HA! You think I am in the music business? No. I’m in the Iron fucking Maiden business.” The idea being that you want to be in the business of YOU. Not of your respective industry. Not of the critics. Not of the fads and trends and what everyone else is doing.

If you never hear no from clients, if the other side in a negotiation has never balked to something you’ve asked for, then you are not pricing yourself high enough, you are not being aggressive enough.

A friend of mine just left a very important job that a lot of people would kill for. When he left I said, “If you can’t walk away, then you don’t have the job…the job has you.”

Source: Ryan Holiday

Image: Faro marina (February 2024) by me

The line between “just enough” and “too much” can fluctuate

When I was younger, I wanted to be a minimalist. I thought that famous photo of Steve Jobs sitting on the floor surrounded only by a very few possessions was something to which I should aspire.

As I’ve grown older, and especially since starting a family, I’ve realised that there are stories in our possessions. That’s not a reason to live in clutter, but as I’ve moved house recently, I’ve come to notice that I’ve held on to things that have no practical value, but which make me feel more like a fully-rounded human being.

This essay suggests that, for everyday, regular people, the stuff that is given to us and the things that evoke memories are the equivalent of having our names “carved into buildings or attached to scholarships”.

Cramming our spaces with painful tokens from the past can seem wrong. But according to Natalia Skritskaya, a clinical psychologist and research scientist at Columbia University’s Center for Prolonged Grief, holding on to objects that carry mixed feelings is natural. “We’re complex creatures,” she told me. When I reflect on the most memorable periods of my life, they’re not completely devoid of sadness; sorrow and disappointment often linger close by joy and belonging, giving the latter their weight. I want my home to reflect this nuance. Of course, in some cases, clinging to old belongings can keep someone from processing a loss, Skritskaya said. But avoiding all sad associations isn’t the solution either. Not only is clearing our spaces of all signs of grief impossible to sustain, but if every room is scrubbed of all suffering, it will also be scrubbed of its depth.

Deciding what to keep and what to lose is an ongoing, intuitive process that never feels quite finished or certain. The line between “just enough” and “too much” can fluctuate, even if I’m the one drawing it. A slight shift in my mood can transform a cherished heirloom into an obtrusive nuisance in a second. Never is this feeling stronger than when I’m frantically searching for my keys, or some important piece of mail. Such moments make me feel that my life is disordered, that I lack control over my surroundings (because many of my things were given to me, rather than intentionally chosen). Yet still more stuff finds its way into our limited space as our child receives toys and we acquire more gear. I do part with some of my stash semi-regularly. Even so, I’m sure that more remains than any professional organizer would recommend.

Source: The Atlantic

Image: DALL-E 3

We tell ourselves stories in order to live

M.E. Rothwell publishes Cosmographia which hits the sweet spot for me, and for many, being focused on “history, myth, and the arts”. He often publishes old maps, as well as telling stories about faraway places.

In a new series which he calls Venus' Notebook, Rothwell is juxtaposing imagery and quotations. This particular coupling jumped out at me, and so I wanted to pass it on. The quotation is from Joan Didion, and the image is The Eye, Like a Strange Balloon, Mounts toward Infinity by Odilon Redon (1882).

We tell ourselves stories in order to live.

Source: Cosmographia

What kind of online world are we manifesting with AI search?

Withering words from the consistently-excellent auteur of internet culture, Ryan Broderick. I’m a fan of the Arc browser, but I fear they’ve got to a point, like many companies, where they’re stuffing in AI features just for the sake of it.

As Broderick wonders, the creeping inclusion of AI in products isn’t like web3 (or even VR) as it can be introduced in a way that leads to “an inescapable layer of hallucinating AI in between us and everyone else online”. It’s hard not to be concerned.

The Browser Company’s new app lets you ask semantic questions to a chatbot, which then summarizes live internet results in a simulation of a conversation. Which is great, in theory, as long as you don’t have any concerns about whether what it’s saying is accurate, don’t care where that information is coming from or who wrote it, and don’t think through the long-term feasibility of a product like this even a little bit.

But the base logic of something like Arc’s AI search doesn’t even really make sense. As Engadget recently asked in their excellent teardown of Arc’s AI search pivot, “Who makes money when AI reads the internet for us?” But let’s take a step even further here. Why even bother making new websites if no one’s going to see them? At least with the Web3 hype cycle, there were vague platitudes about ownership and financial freedom for content creators. To even entertain the idea of building AI-powered search engines means, in some sense, that you are comfortable with eventually being the reason those creators no longer exist. It is an undeniably apocalyptic project, but not just for the web as we know it, but also your own product. Unless you plan on subsidizing an entire internet’s worth of constantly new content with the revenue from your AI chatbot, the information it’s spitting out will get worse as people stop contributing to the network.

And making matters worse, if you’re hoping to prevent the eventual death of search, there won’t be a before and after moment where suddenly AI replaces our existing search engines. We’ve already seen how AI development works. It slowly optimizes itself in drips and drops, subtly worming its way into our various widgets and windows. Which means it’s likely we’re already living in the world of AI search and we just don’t fully grasp how pervasive it is yet.

Which means it’s not about saving the web we had, but trying to steer our AI future in the direction we want. Unless, like the Web3 bust, we’re about to watch this entire industry go over a cliff this year. Possible, but unlikely.

The only hope here is that consumers just don’t like these products. And even then, we have to hope that the companies rolling them out even care if we like them or not. Of course, once there’s an inescapable layer of hallucinating AI in between us and everyone else online, you have to wonder if anyone will even notice.

Source: Garbage Day

Image: DALL-E 3

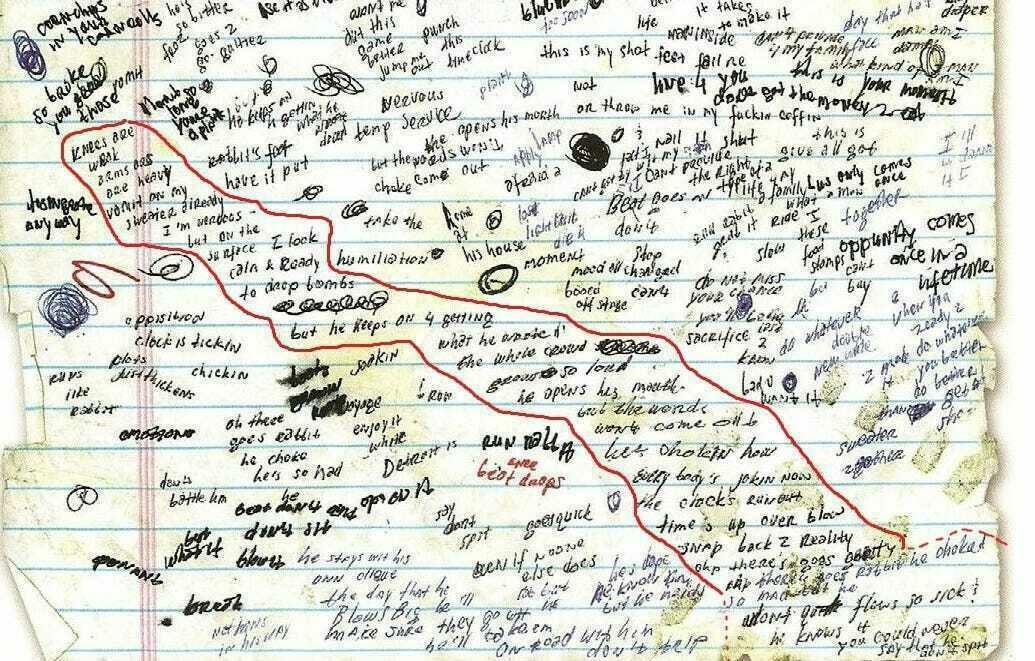

Vomit on my sweater already / mom’s spaghetti

If you’re not into rap or hip hop you may not fully understand the genius of Eminem’s rhyme schemes. If that’s the case, I suggest watching this video before going any further:

The article I actually want to share discusses Eminem’s loose-leaf notes (which he calls “stacking ammo”) and his approach to writing rhyme schemes:

Eminem claims he has a “rhyming disease.” He explains, “In my head everything rhymes.” But he won’t remember his rhymes if he doesn’t write them down. And he’ll use any available surface to record them. Mostly, he scrawls his rhymes in tightly bound lists on loose leaf, yellow legal pads, and hotel notepads.

[…]

Anyone who thinks notes ought to be neat and tidy should look at Eminem’s lyric sheets. He saves rhymes from the page’s chaos by circling those he thinks he might use, as he does here with lines that appear in “The Real Slim Shady.”

Source: Noted