2023

Generative AI, misinformation, and content authenticity

As a philosopher, historian, and educator by training, and a technologist by profession, this initiative really hits my sweet spot. The image below shows how, even before AI and digital technologies, altering the public record through manipulating photographs was possible.

Now, of course, spreading misinformation and disinformation is so much easier, especially on social networks. This series of posts from the Content Authenticity Initiative outlines ways in which the technology they are developing can be used to prove whether or not an image has been altered.

Of course, unless verification is built into social networks, this is only likely to be useful to journalists and in a court of law. After all, people tend to reshare whatever chimes with their worldview.

Although it varies in form and creation, generative AI content (a.k.a. deepfakes) refers to images, audio, or video that has been automatically synthesized by an AI-based system. Deepfakes are the latest in a long line of techniques used to manipulate reality — from Stalin's darkroom to Photoshop to classic computer-generated renderings. However, their introduction poses new opportunities and risks now that everyone has access to what was historically the purview of a small number of sophisticated organizations.

Source: From the darkroom to generative AI | Content Authenticity Initiative

Even in these early days of the AI revolution, we are seeing stunning advances in generative AI. The technology can create a realistic photo from a simple text prompt, clone a person’s voice from a few minutes of an audio recording, and insert a person into a video to make them appear to be doing whatever the creator desires. We are also seeing real harms from this content in the form of non-consensual sexual imagery, small- to large-scale fraud, and disinformation campaigns.

Building on our earlier research in digital media forensics techniques, over the past few years my research group and I have turned our attention to this new breed of digital fakery. All our authentication techniques work in the absence of digital watermarks or signatures. Instead, they model the path of light through the entire image-creation process and quantify physical, geometric, and statistical regularities in images that are disrupted by the creation of a fake.

On the need to measure productivity

I’ve long said that no-one really knows what knowledge work looks like. It’s easy to see whether or not someone is digging a hole in the ground, but it’s much more difficult to see whether the work that someone is doing on a computer is ‘productive’.

This is, I think, partly because ‘productivity’ is something that is best thought about for things that can be systematised and made routine. A lot of knowledge work is fundamentally creative, and so quantitative metrics are meaningless. Who cares if you’ve made a million pull requests if they’re all to change a single character?

This article discusses the complexities of assessing productivity in various fields and the issues with current interviewing processes, and suggests that future evaluations may become more closely tied to tangible accomplishments than to arbitrary metrics. That’s presupposing, of course, that hierarchical evaluations are even necessary.

[E]very potential metric we devise appears woefully inadequate in assessing this holistic outcome. Whether it's pull requests, lines of code, user stories, story points, or ship dates, it seems that every metric can be manipulated or gamed. Ship dates may be advanced, but quality suffers; story points morph in size depending on the project, and lines of code can be bulked up with a test suite. Even pull requests can be sliced and diced to skew the numbers. It's a frustrating conundrum.

Source: Why is it so hard to measure productivity? | fractional.work

For more fuzzy fields, like product management or marketing or design, it becomes even more hand-wavey. Some fields tend to depend on getting other roles to execute better, but you can’t go rewind history and try things with a different PM to see if things would have been better. Same with design.

[…]

If you give the most productive employee more work, presumably they’d be justified in asking for higher compensation? After all, they are driving greater outcomes for you. Would you be comfortable paying it?

For example, would you pay a 3x more productive designer 3x the fully loaded cost of the average designer? If 10x engineers truly exist, why do pay scales intra company not cover a 10x spectrum?

[…]

My suspicion is that, like in other fields where performance matters and is financially rewarded, there will be a surge in our capacity to measure and evaluate real-life work performance. Compensation will become more closely tied to tangible accomplishments rather than arbitrary levels or seniority. Interviews will transition to be more real-world scenarios, perhaps within the customer’s actual codebase, addressing a genuine problem the customer faces—possibly even compensating the interviewee for their time.

Image: Kelly Sikkema

The declining relevance of Google search

I can’t remember the last time I searched Google. It’s been around six years since I started using DuckDuckGo as my main search engine. Which is weird, because people use ‘google’ for searching the web as they do ‘hoover’ for vacuum cleaning.

This article explores Google’s history and its impact on SEO and content creation. It’s written by Ryan Broderick, author of Garbage Day, a newsletter to which I subscribe. He charts the rise of alternative platforms like Meta’s Facebook and Instagram, as well as TikTok, and suggests that Google’s era of influence may be waning.

There is a growing chorus of complaints that Google is not as accurate, as competent, as dedicated to search as it once was. The rise of massive closed algorithmic social networks like Meta’s Facebook and Instagram began eating the web in the 2010s. More recently, there’s been a shift to entertainment-based video feeds like TikTok — which is now being used as a primary search engine by a new generation of internet users.

Source: How Google made the world go viral | The Verge

For two decades, Google Search was the largely invisible force that determined the ebb and flow of online content. Now, for the first time since Google’s launch, a world without it at the center actually seems possible. We’re clearly at the end of one era and at the threshold of another. But to understand where we’re headed, we have to look back at how it all started.

[…]

Twenty-five years ago, at the dawn of a different internet age, another search engine began to struggle with similar issues. It was considered the top of the heap, praised for its sophisticated technology, and then suddenly faced an existential threat. A young company created a new way of finding content.

Instead of trying to make its core product better, fixing the issues its users had, the company, instead, became more of a portal, weighted down by bloated services that worked less and less well. The company’s CEO admitted in 2002 that it “tried to become a portal too late in the game, and lost focus” and told Wired at the time that it was going to try and double back and focus on search again. But it never regained the lead.

That company was AltaVista.

An end to rabbit hole radicalization?

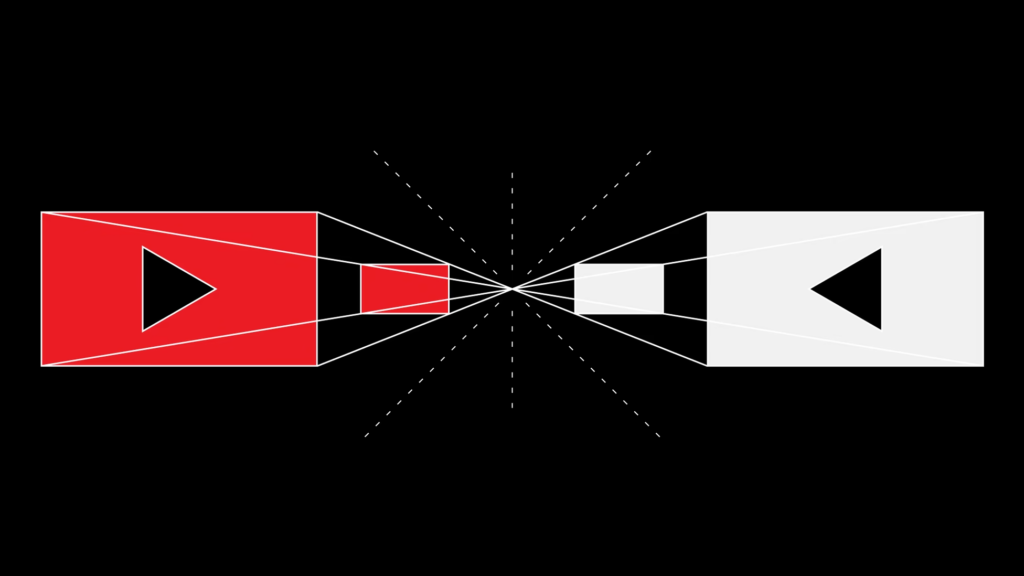

A new peer-reviewed study suggests that YouTube’s efforts to stop people being radicalized through its recommendation algorithm have been effective. The study monitored 1,181 people’s YouTube activity and found that only 6% watched extremist videos, with most of these deliberately subscribing to extremist channels.

Interestingly, though, the study cannot account for user behaviour prior to YouTube’s 2019 algorithm changes, which means we can only wonder about how influential the platform was in terms of radicalization up to and including pretty significant elections.

Around the time of the 2016 election, YouTube became known as a home to the rising alt-right and to massively popular conspiracy theorists. The Google-owned site had more than 1 billion users and was playing host to charismatic personalities who had developed intimate relationships with their audiences, potentially making it a powerful vector for political influence. At the time, Alex Jones’s channel, Infowars, had more than 2 million subscribers. And YouTube’s recommendation algorithm, which accounted for the majority of what people watched on the platform, looked to be pulling people deeper and deeper into dangerous delusions.

Source: The World Will Never Know the Truth About YouTube’s Rabbit Holes | The Atlantic

The process of “falling down the rabbit hole” was memorably illustrated by personal accounts of people who had ended up on strange paths into the dark heart of the platform, where they were intrigued and then convinced by extremist rhetoric—an interest in critiques of feminism could lead to men’s rights and then white supremacy and then calls for violence. Most troubling is that a person who was not necessarily looking for extreme content could end up watching it because the algorithm noticed a whisper of something in their previous choices. It could exacerbate a person’s worst impulses and take them to a place they wouldn’t have chosen, but would have trouble getting out of.

[…]

The… research is… important, in part because it proposes a specific, technical definition of ‘rabbit hole’. The term has been used in different ways in common speech and even in academic research. Nyhan’s team defined a “rabbit hole event” as one in which a person follows a recommendation to get to a more extreme type of video than they were previously watching. They can’t have been subscribing to the channel they end up on, or to similarly extreme channels, before the recommendation pushed them. This mechanism wasn’t common in their findings at all. They saw it act on only 1 percent of participants, accounting for only 0.002 percent of all views of extremist-channel videos.

Nyhan was careful not to say that this paper represents a total exoneration of YouTube. The platform hasn’t stopped letting its subscription feature drive traffic to extremists. It also continues to allow users to publish extremist videos. And learning that only a tiny percentage of users stumble across extremist content isn’t the same as learning that no one does; a tiny percentage of a gargantuan user base still represents a large number of people.
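The definition of a “rabbit hole event” quoted above is precise enough to sketch as a small predicate. This is only an illustration of the three conditions as the article describes them, not the study’s actual method: the `View` type, the integer `extremity` scale, and the way “similarly extreme” is interpreted are all my own assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class View:
    """One watched video. All fields are hypothetical, for illustration only."""
    channel: str
    extremity: int            # assumed ordinal scale: higher means more extreme
    via_recommendation: bool  # True if the viewer arrived by following a recommendation


def is_rabbit_hole_event(previous: View, current: View,
                         subscriptions: dict[str, int]) -> bool:
    """Apply the three conditions from the quoted definition.

    `subscriptions` maps each channel the viewer already subscribed to
    onto its (assumed) extremity level.
    """
    followed_recommendation = current.via_recommendation
    more_extreme = current.extremity > previous.extremity
    # Assumption: "similarly extreme" means at least as extreme as the new channel.
    already_subscribed = any(
        channel == current.channel or level >= current.extremity
        for channel, level in subscriptions.items()
    )
    return followed_recommendation and more_extreme and not already_subscribed
```

Under this reading, someone who already subscribes to an equally extreme channel is not counted, which fits the study’s finding that most extremist viewing came from deliberate subscription rather than from recommendations.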

Crypto is the biggest Ponzi scheme of all time

Ben McKenzie, an actor turned anti-crypto activist, argues in his new book Easy Money that while cryptocurrencies highlight legitimate flaws in the financial system, they are essentially a Ponzi scheme.

He criticises the “Hollywoodisation” of crypto and the lack of regulatory oversight, warning that the tech utopianism surrounding crypto and now AI could leave many losers in its wake. It’s funny how people seamlessly move from one grift to the next without ever being properly called out on it.

The secret behind most conspiracy-driven movements is that there is often a glimmer of truth at the centre of their beliefs. Anti-vaxxers, for instance, can point to the past behaviour of large pharmaceutical companies as evidence that the medical establishment can’t be trusted. This glimmer is what’s used to ensnare you, says Ben McKenzie, the actor and cryptocurrency critic.

Source: “The biggest Ponzi of all time”: why Ben McKenzie became a crypto critic | New Statesman

[…]

After a friend urged him to buy Bitcoin, McKenzie – a former economics student with a degree from the University of Virginia – took a 24-part online course on cryptocurrencies, taught by the current US Securities and Exchange Commission chair Gary Gensler. He came away thinking the entire cryptocurrency thing was a scam. Worse, it was a scam with a lot of momentum behind it. “Advocates will tell you there is no ‘Bitcoin marketing department’,” McKenzie said. “But of course, if Bitcoin and crypto doesn’t have a product, if there is no actual tangible asset behind it, then in fact, Bitcoin and crypto is only marketing. It’s only a story.”

[…]

During the peak of 2021, some of the most recognisable people in the world – including Matt Damon, Reese Witherspoon and Kim Kardashian – began promoting cryptocurrencies and non-fungible tokens (NFTs).

McKenzie told me this is part of a more aggressive “hustle culture” in which people use their social contacts to promote products. Multi-level marketing (or MLM) and pyramid-selling schemes have existed for at least a century, but they have been transformed by technology. “In the 1950s, if you wanted to sell someone Mary Kay Cosmetics or Tupperware, you would need to invite them over to your house, cook them dinner, spend three hours trying to convince them [to buy products].” Now, he said, “the MLM can be done through TikTok and Instagram.”

Though McKenzie is openly critical of the celebrities who have pushed these products, he reserves ultimate blame for the sluggish regulators that allowed it to happen. “I think in many cases the celebrities didn’t really understand what they were selling. Which is not to absolve them of a moral, ethical [or] potentially even legal responsibility for their actions. But they don’t need to be bad people – they just see easy money, right?”

B Lane

There’s a lot going on in this short post. It reminded me of a saying of Steve Jobs: “A players attract A players. B players attract C players.”

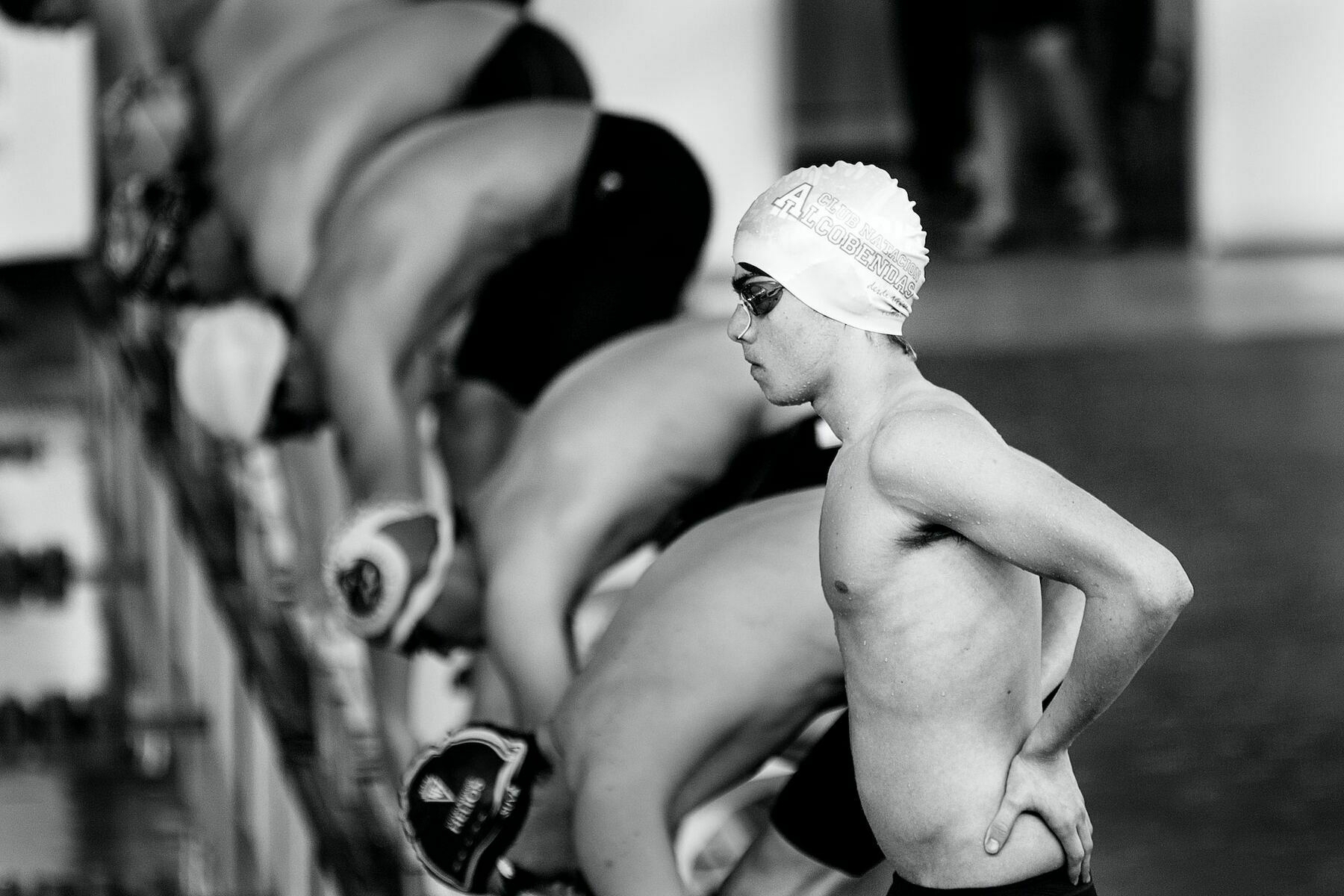

Now there’s something in that, in terms of the mentality that people bring to working hard and playing hard. But this post is talking about the way that people treat other people.

I’ve definitely noticed in my life, from my own studies to my kids’ sports teams, the tyranny of the “not quite top-level” mindset. It’s almost like you have to get over yourself to get to the “top”. What that is and whether it’s worth pursuing is another question entirely.

I noticed that when I swam next to the B lane swimmers, they were not nearly as kind and friendly as the C lane swimmers had been when they were my next-lane neighbours. The A lane swimmers were extremely nice, and were generous with encouragement, praise and tips. This wasn’t a hard and fast rule, but I started to notice a pattern: A, C, and D lane swimmers tended to be nice, friendly, and helpful to pretty much everyone; B lane swimmers tended to be nice to A lane and other B lane swimmers but not so much to C and D.

Source: The B Lane Swimmer | Holly Witteman

When I stopped doing tris and moved back to field sports, I started to notice the same thing. The very top athletes were nice to everyone and so were the middle and bottom of the pack. The not quite top players, though, were less friendly. They played more political games, and acted out their threatened feelings of being not quite good enough by being snobbish to those below them. (In retrospect, I worry I did some of this, too, especially when I was playing on a top team but was not a top player. I definitely felt a need to prove myself.)

I have since noted the same phenomenon in nearly every domain, including academia. The truly great researchers are generous and friendly; so are many of the middle of the roaders. Those who have something to prove, though, and who feel like they aren’t quite managing to do it, show definite aspects of being B lane swimmers.

Image: Quino AI

Money does not solve disasters like this

The Burning Man Festival started in 1986 as a small event on a beach. It was originally an event for hippies, bohemians, and those who lived outside of mainstream culture. It’s an art event.

As with most things like this, it became cool, and so people with money started going. Now, less than 40 years later, it’s dominated by the Silicon Valley elite, celebrities, and grifters.

While one person has died this year due to extreme weather events, which is a tragedy, I can’t help but feel some schadenfreude at rich people being stuck in a situation they can’t buy their way out of.

Tens of thousands of “burners” at the Burning Man festival have been told to stay in the camps, conserve food and water and are being blocked from leaving Nevada’s Black Rock desert after a slow-moving rainstorm turned the event into a mud bath.

Source: Burning Man festival-goers trapped in desert as rain turns site to mud | The Guardian

[…]

As of noon Saturday, Nevada’s Bureau of Land Management declared the entrance to Burning Man shut down for good. “Rain over the last 24 hours has created a situation that required a full stop of vehicle movement on the playa. More rain is expected over the next few days and conditions are not expected to improve enough to allow vehicles to enter the playa,” read a BLM statement.

[…]

The festival this year was already taking place under unusual circumstances with the desert floor flooded by the remnants of Hurricane Hilary as the event was being set up.

Tara Saylor, an attendee from Ojai, California, faced the threat of the hurricane as well as a 5.1-magnitude earthquake that shook her city before she left, reported the Los Angeles Times. Saylor told the newspaper she’s seen the founders of two different companies at Burning Man this year, but added, “it doesn’t matter how much money you have, nobody can do anything about it. There’s no planes, there’s no buses.”

“Money does not solve disasters like this.”

The Atlantis of the North Sea

A couple of years ago, I started subscribing to Northern Earth magazine on the recommendation of Warren Ellis. It’s quirky and brilliant.

The most recent issue contains a reference to Rungholt, which I then looked up on Wikipedia. It was destroyed in the 14th century by a storm surge. Until excavations this year, people weren’t entirely sure it ever existed, but it turns out it was a flourishing port town.

Rungholt was a settlement in North Frisia, in what was then the Danish Duchy of Schleswig. The area today lies in Germany. Rungholt reportedly sank beneath the waves of the North Sea when a storm tide (known as Grote Mandrenke or Den Store Manddrukning) hit the coast on 15 or 16 January 1362.

Source: Rungholt | Wikipedia

[…]

In June 2023, the German Research Foundation announced that researchers had found the probable location of Rungholt under mudflats in the Wadden Sea and had already mapped 10 square kilometers of the area.

[…]

Today it is widely accepted that Rungholt existed and was not just a local legend. Documents support this, although they mostly date from much later times (16th century). Archaeologists think Rungholt was an important town and port. It might have contained up to 500 houses, with about 3,000 people. Findings indicate trade in agricultural products and possibly amber. Supposed relics of the town have been found in the Wadden Sea, but shifting sediments make it hard to preserve them.

Reconstructing Tenochtitlan

This is an absolutely incredible piece of work, showing the complexity and sophistication of the Aztec empire. My favourite part is the slider that allows you to see how much of Mexico City is based upon the structure of Tenochtitlan.

The year is 1518. Mexico-Tenochtitlan, once an unassuming settlement in the middle of Lake Texcoco, now a bustling metropolis. It is the capital of an empire ruling over, and receiving tribute from, more than 5 million people. Tenochtitlan is home to 200.000 farmers, artisans, merchants, soldiers, priests and aristocrats. At this time, it is one of the largest cities in the world.

Source: A Portrait of Tenochtitlan

Today, we call this city Ciudad de Mexico - Mexico City.

Not much is left of the old Aztec - or Mexica - capital Tenochtitlan. What did this city, raised from the lake bed by hand, look like? Using historical and archeological sources, and the expertise of many, I have tried to faithfully bring this iconic city to life.

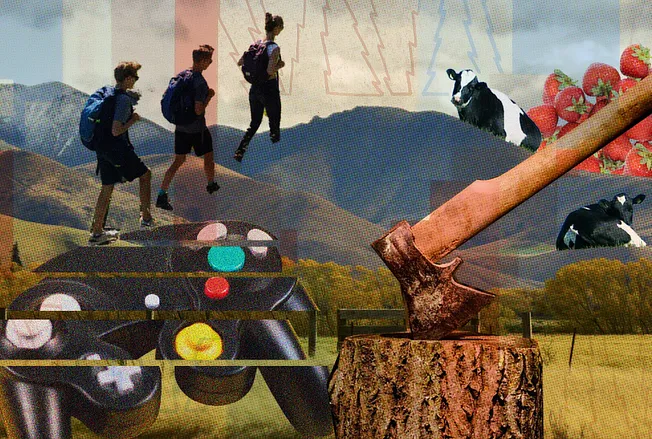

Taking screenagers to the forest

As a parent of a 16-year-old boy and a 12-year-old girl, I found this article fascinating. Written by Caleb Silverberg, now 17 years of age, it describes his decision to break free from his screen addiction and enrol in Midland, an experiential boarding school located in a forest where technology is forbidden.

Trading his smartphone for an ax, he found liberation and genuine human connection through chores like chopping firewood, living off the land, and engaging in face-to-face conversations. Silverberg advocates for a “Technology Shabbat,” a periodic break from screens, as a solution for his generation’s screen-related issues like ADHD and depression.

At 15 years old, I looked in the mirror and saw a shell of myself. My face was pale. My eyes were hollow. I needed a radical change.

Source: Why I Traded My Smartphone for an Ax | The Free Press

I vaguely remembered one of my older sister’s friends describing her unique high school, Midland, an experiential boarding school located in the Los Padres National Forest. The school was founded in 1932 under the belief of “Needs Not Wants.” In the forest, cell phones and video games are forbidden, and replaced with a job to keep the place running: washing dishes, cleaning bathrooms, or sanitizing the mess hall. Students depend on one another.

[…]

September 2, 2021, was my first day at Midland, when I traded my smartphone for an ax.

At Midland, students must chop firewood to generate hot water for their showers and heat for their cabins and classrooms. If no one chops the wood or makes the fire, there’s a cold shower, a freezing bed, or a chilly classroom. No punishment by a teacher or adult. Just the disappointment of your peers. Your friends.

[…]

Before Midland, whenever I sat on the couch, engrossed in TikTok or Instagram, my parents would caution me: “Caleb, your brain is going to melt if you keep staring at that screen!” I dismissed their concerns at first. But eventually, I experienced life without an electronic device glued to my hand and learned they were right all along.

[…]

I have been privileged to attend Midland. But anyone can benefit from its lessons. To my generation, I would like to offer a 5,000-year-old solution to our twenty-first-century dilemma. Shabbat is the weekly sabbath in Judaic custom where individuals take 24 hours to rest and relax. This weekly reset allows our bodies and minds to recharge.

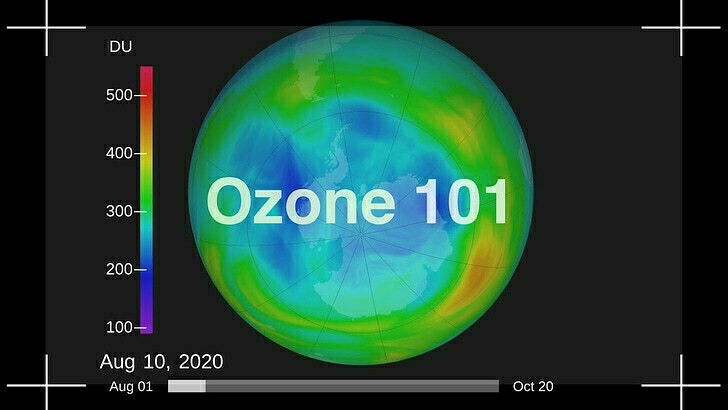

What we can learn about the climate emergency from the world's response to ozone depletion in the 1980s

This article by Andrew Dessler discusses the near-miss catastrophe of ozone depletion. Anyone alive at the time can probably remember how the world came together to address the issue by phasing out chlorofluorocarbons (CFCs) through the Montreal Protocol in 1987.

Dessler draws parallels with the current climate crisis, arguing that global policy collaboration based on scientific research can solve pressing environmental issues. Along the way, he also debunks claims that transitioning to renewable energy would be economically catastrophic.

In the early 1970s, scientists theorized that certain man-made chemicals, known as chlorofluorocarbons or CFCs, had the potential to reduce the amount of ozone in our atmosphere — this became known as ozone depletion. Given the crucial role of ozone in maintaining a livable environment, this caused great concern.

Source: Ozone depletion: The bullet that missed | Andrew Dessler

Even before evidence of actual ozone depletion was observed, countries began to take action. For example, the U.S. banned many non-essential uses of the chemicals, such as propellants in aerosol spray cans. This reflected a different view at the time that government should protect its citizens rather than protect the profits of corporations.

By the mid-1980s, the world was busy negotiating the phase-out of the primary ozone-depleting CFCs when the Antarctic ozone hole (AOH) was discovered. The AOH is an annual event: over Antarctica, the majority of the ozone is destroyed during Spring. The ozone builds back up as Spring ends and, by Summer, things are basically back to normal.

[…]

The ‘reference’ future is our world, the ‘world avoided’ is the world that would have existed had we not phased out CFCs. By the 2060s, the world would have lost two-thirds of its ozone. This, in turn, would have greatly increased the dangerous ultraviolet radiation reaching the surface. This plot shows the UV dose at noon under clear skies in July in mid-latitudes.

Today’s value of 10 is ‘high risk’ for UV exposure, which is why public health professionals tell you to wear sunscreen when you go out. The world avoided has a UV index of 30 — three times what is considered high risk and high enough to give you a perceptible sunburn in 5 minutes.

Disaster capitalism, climate change, and agriculture

Many readers will be aware of the extreme weather conditions in Vermont, USA. These have led to a disastrous year for agriculture and financial struggles for local farmers.

The article delves into the broader implications of these challenges, framing them within the context of ‘disaster capitalism,’ where the degradation of farming and natural resources is exploited for profit, exacerbating systemic issues and inequalities. We’re at the thin end of the wedge with this stuff.

Vermont has suffered a miserable growing season and many Vermonters lost a great deal to the flood. Some lost their home and everything in it. But only two people lost their lives, and very few lost their jobs. This is astonishing, given the number of businesses that were shuttered for most of the last eight weeks. Some are still not open. Yes, we may have lost quite a lot, but our losses are marginal compared to the many dozens of people killed in the Maui fire and the billions of dollars of devastation in flooding elsewhere in this country. Similarly, farm losses across the country are measured not in tens of millions, but in billions. Net income from US farming is expected to drop by $30.5 billion, an 18.2% loss over 2022, which was itself not a good year. I’m sure Vermont is in that estimate, but we only add a bit to the decimal places of that number. And these few horrific numbers barely scrape the surface of loss in the US, with even greater horrors mounting everywhere else in the world. (Can Canada even measure the damages sustained in this year’s fires…)

Source: The future earth is already here | resilience

These numbers show that we are in the age of the disaster capitalism described by Naomi Klein after she witnessed the response to Katrina. There has always been more income in the breaking of human lives than in maintaining them. In truth, the 20th century surge in disposable products and planned obsolescence was nothing but extracting profits from breakdown. Similarly, our economy was strongest as it pulled itself out of the devastation of World War II. As long as there are resources and cheap labor somewhere, somebody will profit over our loss. What is different now is that nearly resources and cheap labor are being funneled into this economics of disaster with little left to sustain actual lives.

[…]

We are in disaster capitalism and have been for a long while. I believe that capitalism has always been intrinsically tied to disaster and destruction, whether natural or engineered, but not many people share my views — because my views are from the edge spaces, the bottom and sides of this system. I sit outside and can see things that those dependent on this capitalist system for privilege and wealth do not, or can not. Upton Sinclair’s quip about the inverse relationship between a man’s paycheck and his ability to comprehend any given issue is the duct tape that holds capitalism together in these increasingly disastrous times — increasingly disastrous because of capitalism, whether the result of inadvertent externality or planned waste and breakdown. This system can only survive if enough people refuse to see that its basic function is destruction. Though of course it can also only survive if there are cheap resources and labor to mine for disaster remediation, and we are now entering the stage of late capitalism that has no more cheap things to turn into waste. Capital is feeding on itself, struggling to bring in revenues that can cover its increased costs — costs like fires and floods, scarce resources and a debilitated workforce wracked by disaster. The system needs all the propaganda it can muster to keep telling itself that it is alive and well, keeping those men with dependent paychecks blind to its demise.

Eating the rich is optional, taxing them is mandatory

The article in Insider discusses the findings of the 2022 World Inequality Report, which highlights extreme levels of wealth and income inequality globally. The report was coordinated by leading economists and debunks the trickle-down economic theory.

The report found that the bottom half of the global population owns just 2% of total wealth, while the top 10% holds 76%. It also notes that billionaires now hold a 3% share of global wealth, up from 1% in 1995. As everyone knows, inequality is a result of political choices and the only way to fix it is through progressive wealth taxes and perhaps even reparations.
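To get a feel for what those shares mean per person, here is a quick back-of-the-envelope calculation. It uses only the two figures quoted above; the 22% for the middle 40% is my own subtraction rather than a number taken from the report, and the variable and function names are mine.

```python
# Population share (%) and wealth share (%) as quoted above.
bottom_pop, bottom_wealth = 50, 2
top_pop, top_wealth = 10, 76

# Everyone in between: derived by subtraction, not quoted from the report.
middle_pop = 100 - bottom_pop - top_pop           # 40
middle_wealth = 100 - bottom_wealth - top_wealth  # 22


def relative_wealth(wealth_share: float, pop_share: float) -> float:
    """Average wealth of a group as a multiple of the global average."""
    return wealth_share / pop_share


top_vs_bottom = (relative_wealth(top_wealth, top_pop)
                 / relative_wealth(bottom_wealth, bottom_pop))

print(f"Middle {middle_pop}% of people hold {middle_wealth}% of wealth")
print(f"Average top-10% wealth is about {top_vs_bottom:.0f}x the bottom-half average")
```

In other words, on these figures the average person in the top 10% holds roughly 190 times the wealth of the average person in the bottom half.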

The data serves as a complete rebuke of the trickle-down economic theory, which posits that cutting taxes on the rich will "trickle down" to those below, with the cuts eventually benefiting everyone. In America, trickle-down was exemplified by President Ronald Reagan's tax slashes. It's a theory that persists today, even though most research has shown that 50 years of tax cuts benefits the wealthy and worsens inequality.

Source: Huge 20-Year Study Shows Trickle-Down Is a Myth, Inequality Rampant | Insider

The researchers are some of the leading minds on inequality in the entire field of economics. Chancel is the co-director of the World Inequality Lab, while Saez and Zucman have literally written a book on the rich dodging taxes and helped create wealth tax proposals for senators like Elizabeth Warren and Bernie Sanders.

[…]

Billionaire gains are a well-documented trend: The left-leaning Institute for Policy Studies and Americans for Tax Fairness found that Americans added $2.1 trillion to their wealth during the pandemic, a 70% increase.

Image: Mathieu Stern

How does doing what I need make time for everything else?

I can’t remember whether someone said it to me or I read it somewhere, but the idea that we should manage our energy rather than our time has made a big difference to my life. Having control over when and how you work is a huge privilege, and enables you to be the best version of you.

People often smile or laugh when I talk about the SOFA philosophy, but giving yourself the freedom to start creative pursuits and not finish them is actually massively liberating, mood-boosting, and energy-giving.

The point being that you don’t need to ‘make time’ to do things. You just need to prioritise stuff that energises you.

I often find myself listening as someone talks about being out of time. Even the most progressive and thoughtful organizations regularly cultivate situations where the amount of work outstrips the capacity of the people in place to do it. Combine that with our appalling lack of support for caretakers, the administrative burden of accessing your healthcare, the often thankless tasks of keeping house and home, and it’s no wonder that even the people most trained in solving tricky problems run into a hard wall with this one.

Source: Energy makes time | everything changes

[…]

We all know that time can be stretchy or compressed—we’ve experienced hours that plodded along interminably and those that whisked by in a few breaths. We’ve had days in which we got so much done we surprised ourselves and days where we got into a staring contest with the to-do list and the to-do list didn’t blink. And we’ve also had days that left us puddled on the floor and days that left us pumped up, practically leaping out of our chairs. What differentiates these experiences isn’t the number of hours in the day but the energy we get from the work. Energy makes time.

Here’s a concrete example, and perhaps a familiar one: someone is so busy with work and caretaking that they don’t make time for their art. At the end of the day they’re too tired to write or paint or make music or whathaveyou. So they don’t. Days, then weeks go by. They are more and more tired. They are getting less and less done. They take a mental health day and catch up on sleep but the exhaustion persists. Their overwhelm grows larger, becomes intolerable. The usual tactics don’t work.

Then one day they say fuck it all. They eat leftover pasta over the sink, drop mom off at her mahjongg game, and go sit in the park to draw. They draw for hours, until the sun goes down and they’re squinting under the street lights. And, lo and behold, the next day they plow through all those lingering to-dos. They see clearly that half of them were unnecessary when before they all seemed critical. They recognize a few others as things better handed off to their peers. They suddenly find time for attending to that one project they’d been procrastinating on for weeks. They sleep better. Their skin looks great. (Okay I might be exaggerating on that last one, but only mildly.)

It turns out, not doing their art was costing them time, was draining it away, little by little, like a slow but steady leak. They had assumed, wrongly, that there wasn’t enough time in the day to do their art, because they assumed (because we’re conditioned to assume) that every thing we do costs time. But that math doesn’t take energy into account, doesn’t grok that doing things that energize you gives you time back. By doing their art, a whole lot of time suddenly returned. Their art didn’t need more time; their time needed their art.

[…]

The question to ask with all those things isn’t, “how do I make time for this?” The answer to that question always disappoints, because that view of time has it forever speeding away from you. The better question is, how does doing what I need make time for everything else?

Image: Aron Visuals

Note taking tools and processes

Casey Newton delves into the limitations of current note-taking apps like Obsidian, arguing that they are designed more for storing information than for sparking insights or improving thinking. He suggests that while AI has the potential to revolutionise these platforms by making them more interactive and insightful, the real challenge lies in our ability to focus and think deeply — something that software alone cannot automate.

This is partly why I write Thought Shrapnel. Not only does it force me to actually read things I’ve bookmarked, but it also helps me make sense of them, and I often make links to my work and other things I’ve read.

Note-taking, after all, does not take place in a vacuum. It takes place on your computer, next to email, and Slack, and Discord, and iMessage, and the text-based social network of your choosing. In the era of alt-tabbing between these and other apps, our ability to build knowledge and draw connections is permanently challenged by what might be our ultimately futile efforts to multitask.

Source: Why note-taking apps don’t make us smarter | Platformer

[…]

In short: it is probably a mistake, in the end, to ask software to improve our thinking. Even if you can rescue your attention from the acid bath of the internet; even if you can gather the most interesting data and observations into the app of your choosing; even if you revisit that data from time to time — this will not be enough. It might not even be worth trying.

The reason, sadly, is that thinking takes place in your brain. And thinking is an active pursuit — one that often happens when you are spending long stretches of time staring into space, then writing a bit, and then staring into space a bit more. It’s here that the connections are made and the insights are formed. And it is a process that stubbornly resists automation.

Which is not to say that software can’t help. Andy Matuschak, a researcher whose spectacular website offers a feast of thinking about notes and note-taking, observes that note-taking apps emphasize displaying and manipulating notes, but never making sense between them. Before I totally resign myself to the idea that a note-taking app can’t solve my problems, I will admit that on some fundamental level no one has really tried.

Poverty is expensive. Cash helps homeless people.

Real-world studies such as this are important for busting myths about homeless people spending money recklessly compared to the rest of us.

The widely held stereotype that people experiencing homelessness would be more likely to spend extra cash on drugs, alcohol and “temptation goods” has been upended by a study that found a majority used a $7,500 payment mostly on rent, food, housing, transit and clothes.

Source: Canada study debunks stereotypes of homeless people’s spending habits | The Guardian

The biases punctured by the study highlight the difficulties in developing policies to reduce homelessness, say the Canadian researchers behind it. They said the unconditional cash appeared to reduce homelessness, giving added weight to calls for a guaranteed basic income that would help adults cover essential living expenses.

[…]

They found the cash recipients each spent an average of 99 fewer days homeless than the control group, increased their savings more and also “cost” society less by spending less time in shelters.

[…]

Researchers ensured the cash was in a lump sum “to enable maximum purchasing freedom and choice” as opposed to small, consistent transfers.

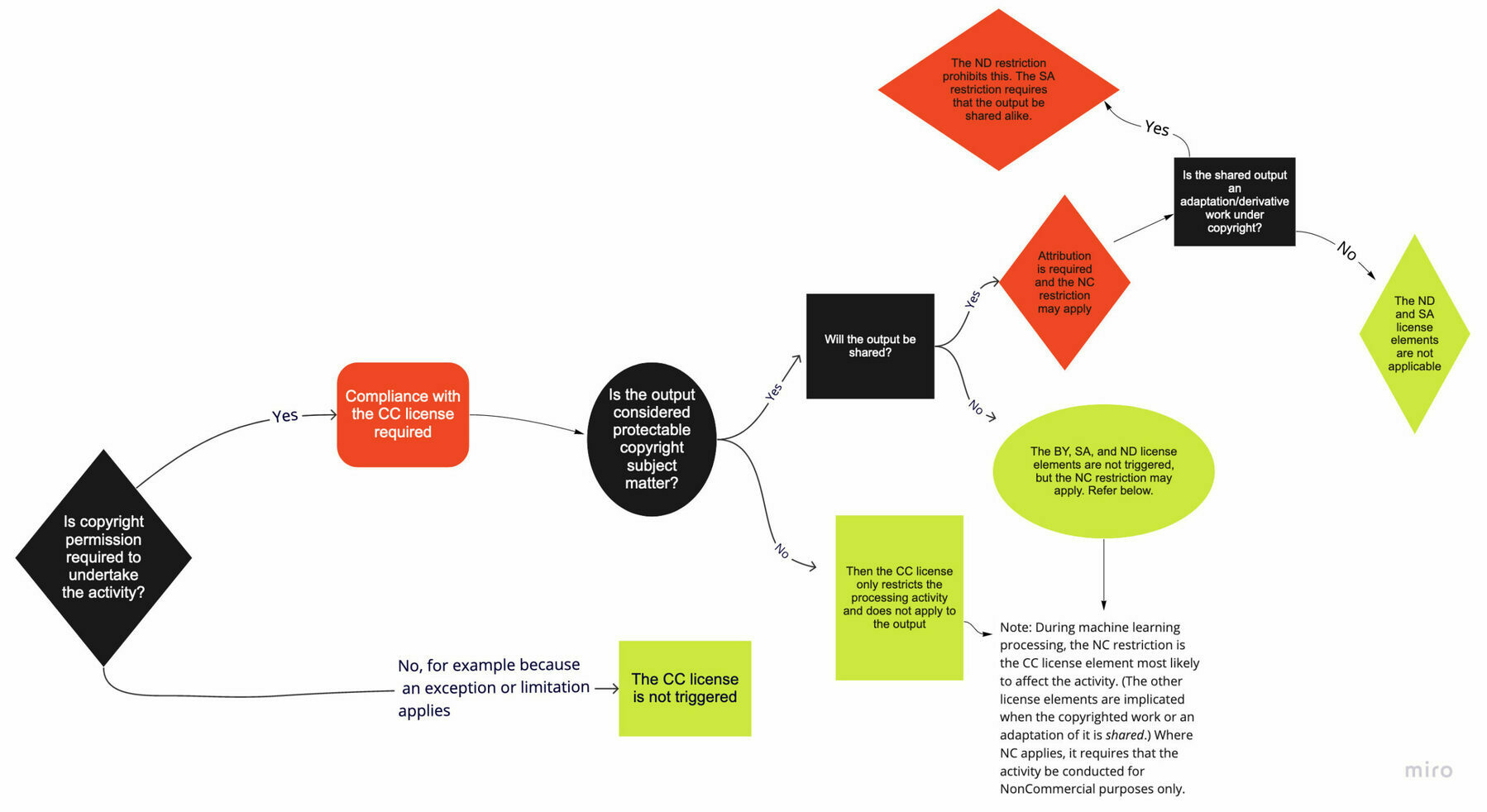

Can you use CC licenses to restrict how people use copyrighted works in AI training?

The TL;DR seems to be that copyright isn’t going to prevent people from data mining content to train AI models. However, there are protections around privacy that might come into play.

This is among the most common questions that we receive. While the answer depends on the exact circumstances, we want to clear up some misconceptions about how CC licenses function and what they do and do not cover.

Source: Understanding CC Licenses and Generative AI | Creative Commons

You can use CC licenses to grant permission for reuse in any situation that requires permission under copyright. However, the licenses do not supersede existing limitations and exceptions; in other words, as a licensor, you cannot use the licenses to prohibit a use if it is otherwise permitted by limitations and exceptions to copyright.

This is directly relevant to AI, given that the use of copyrighted works to train AI may be protected under existing exceptions and limitations to copyright. For instance, we believe there are strong arguments that, in most cases, using copyrighted works to train generative AI models would be fair use in the United States, and such training can be protected by the text and data mining exception in the EU. However, whether these limitations apply may depend on the particular use case.

It's all about the DMs

I think it’s fascinating that this article uses a zeugma to explain what’s happened to places that we’ve called home online. In other words, we’ve moved from *social* media to social *media*, with the emphasis now on the content and performance rather than the sharing.

The fatigue average people feel when it comes to posting on Instagram has pushed more users toward private posting and closed groups. Features like Close Friends (a private list of people who have access to your content) and the rise of group chats give people a safer place to share memes, gossip with friends, and even meet new people. It's less pressure — they won't mind if I didn't blur out the pimple on my forehead — but this side of Instagram hardly fulfills the original free-flowing promise of social media.

Source: Social media is dead | Insider

[…]

Despite the efforts of big incumbents and buzzy new apps, the old ways of posting are gone, and people don’t want to go back. Even Adam Mosseri, the head of Instagram, admitted that users have moved on to direct messages, closed communities, and group chats. Regularly posting content is now largely confined to content creators and influencers, while non-creators are moving toward sharing bits of their lives behind private accounts.

As more people have been confronted with the consequences of constant sharing, social media has become less social and more media — a constellation of entertainment platforms where users consume content but rarely, if ever, create their own. Influencers, marketers, average users, and even social-media executives agree: Social media, as we once knew it, is dead.

[…]

And if Instagram was the bellwether for the rise and fall of the “social” social-media era, it is also a harbinger of this new era. “If you look at how teens spend their time on Instagram, they spend more time in DMs than they do in stories, and they spend more time in stories than they do in feed,” Mosseri said during the “20VC” interview. Given this changing behavior, Mosseri said the platform has shifted its resources to messaging tools. “Actually, at one point a couple years ago, I think I put the entire stories team on messaging,” he said.

A philosophy of travel

There’s a book by philosopher Alain de Botton called The Art of Travel. In it, he cites Seneca as bemoaning the fact that when you travel you take yourself, with all of your anxieties, frustrations, and insecurities, with you. In other words, you might escape your home, but you don’t escape yourself.

This article critically examines the concept of travel, questioning its oft-claimed benefits of ‘enlightenment’ and ‘personal growth’. It cites various thinkers who have critiqued travel (including one of my favourites, Fernando Pessoa) suggesting that it can actually distance us from genuine human connection and meaningful experiences.

It’s hard not to agree with the conclusion that the allure of travel may lie in its ability to temporarily distract us from the existential dread of mortality. Perhaps we need more Marcus Aurelius in our lives: he extolled, as one of the benefits of philosophy, being able to find calm no matter where you are.

“A tourist is a temporarily leisured person who voluntarily visits a place away from home for the purpose of experiencing a change.” This definition is taken from the opening of “Hosts and Guests,” the classic academic volume on the anthropology of tourism. The last phrase is crucial: touristic travel exists for the sake of change. But what, exactly, gets changed? Here is a telling observation from the concluding chapter of the same book: “Tourists are less likely to borrow from their hosts than their hosts are from them, thus precipitating a chain of change in the host community.” We go to experience a change, but end up inflicting change on others.

Source: The Case Against Travel | The New Yorker

For example, a decade ago, when I was in Abu Dhabi, I went on a guided tour of a falcon hospital. I took a photo with a falcon on my arm. I have no interest in falconry or falcons, and a generalized dislike of encounters with nonhuman animals. But the falcon hospital was one of the answers to the question, “What does one do in Abu Dhabi?” So I went. I suspect that everything about the falcon hospital, from its layout to its mission statement, is and will continue to be shaped by the visits of people like me—we unchanged changers, we tourists. (On the wall of the foyer, I recall seeing a series of “excellence in tourism” awards. Keep in mind that this is an animal hospital.)

Why might it be bad for a place to be shaped by the people who travel there, voluntarily, for the purpose of experiencing a change? The answer is that such people not only do not know what they are doing but are not even trying to learn. Consider me. It would be one thing to have such a deep passion for falconry that one is willing to fly to Abu Dhabi to pursue it, and it would be another thing to approach the visit in an aspirational spirit, with the hope of developing my life in a new direction. I was in neither position. I entered the hospital knowing that my post-Abu Dhabi life would contain exactly as much falconry as my pre-Abu Dhabi life—which is to say, zero falconry. If you are going to see something you neither value nor aspire to value, you are not doing much of anything besides locomoting.

[…]

The single most important fact about tourism is this: we already know what we will be like when we return. A vacation is not like immigrating to a foreign country, or matriculating at a university, or starting a new job, or falling in love. We embark on those pursuits with the trepidation of one who enters a tunnel not knowing who she will be when she walks out. The traveller departs confident that she will come back with the same basic interests, political beliefs, and living arrangements. Travel is a boomerang. It drops you right where you started.

[…]

Travel is fun, so it is not mysterious that we like it. What is mysterious is why we imbue it with a vast significance, an aura of virtue. If a vacation is merely the pursuit of unchanging change, an embrace of nothing, why insist on its meaning?

Using semesters for goal-setting

This article suggests using the academic calendar as a framework for setting and achieving personal goals, breaking life into “semesters” to focus on mini-goals that contribute to larger ambitions. It argues that this approach can aid in time management, motivation, and skill development, offering a structured yet flexible way to make meaningful progress in various aspects of life.

As someone who spent a long time in formal education, was a teacher, and then worked in Higher Education, I find it difficult to get out of the habit of thinking in academic years and breaking my work into ‘terms’. Perhaps I should be leaning into it?

While it’s important to set goals, the roadmap for how to attain them can be murky. Instead of embarking without a plan toward broad ambitions, there’s value in incremental objectives in service of a larger aim. Take a page from the educational system and divide the future into “semesters” — traditionally 15 to 17 weeks long at American colleges — in which to implement minigoals to help get you where you want to go. Use the traditional academic year as a guide to help you stay on track, says Rachel Wu, an associate professor of psychology at the University of California Riverside. Many community classes and educational opportunities are offered roughly on a quarter or semester basis. “At the very least, it will help people, maybe, feel young again. I think that’s a huge benefit,” Wu says. “They can think back to that point in their life when they had that kind of organization and that might be something that works for them.” (You don’t need to follow a traditional academic structure by any means, but having a firm start and end date within a few months’ span in which to focus on certain skills or activities can help keep you motivated.)

Source: Semesters for adults: How the academic school year can help with goal-setting, time management, and motivation | Vox

[…]

Modeling your life after academic years allows you to adequately mark your process. It’s difficult to determine improvement with daily or even weekly goals, Fishbach says. But with a quarterly or biannual milestone, you’re more easily able to track your progress; you can more clearly look back on what you’ve learned after a 20-week intro to coding class as opposed to after a few days of instruction. The end of a semester allows for these report cards. “It just helps you feel that you’re growing as a person,” Fishbach says. “You’re not the person you were three months ago.”

[…]

A self-imposed semester system also lends itself to increased motivation due, in part, to the fresh start effect, where people are more driven to pursue goals after a “fresh start” like a new year or semester. (Fully embrace the back-to-school energy and buy some new school supplies, Wu says, “and then learn something.”) With goals that have an endpoint, called an all-or-nothing goal, Fishbach says, motivation increases as you approach the deadline. Having a distinct cutoff to your personal semester can help you stay driven knowing there’s an end in sight.