- People seem not to see that their opinion of the world is also a confession of character

- We have it in our power to begin the world over again

- There is no creature whose inward being is so strong that it is not greatly determined by what lies outside it

- The old is dying and the new cannot be born

- Are we on the road to civilisation collapse? (BBC Future) — "Collapse is often quick and greatness provides no immunity. The Roman Empire covered 4.4 million sq km (1.9 million sq miles) in 390. Five years later, it had plummeted to 2 million sq km (770,000 sq miles). By 476, the empire’s reach was zero."

- Fish farming could be the center of a future food system (Fast Company) — "Aquaculture has been shown to have 10% of the greenhouse gas emissions of beef when it’s done well, and 50% of the feed usage per unit of production as beef"

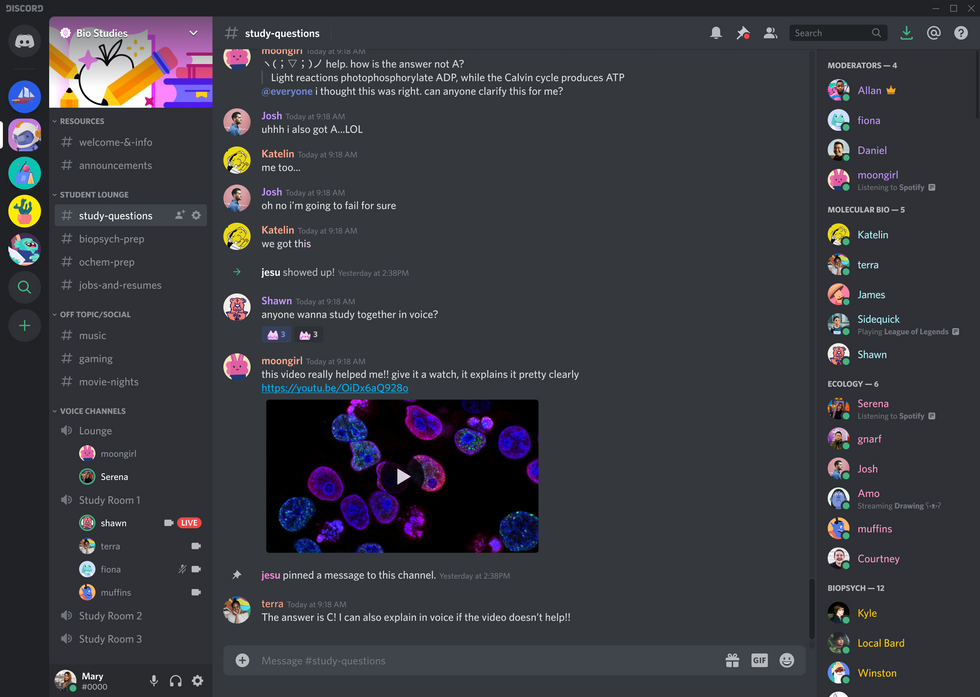

- The global internet is disintegrating. What comes next? (BBC FutureNow) — "A separate internet for some, Facebook-mediated sovereignty for others: whether the information borders are drawn up by individual countries, coalitions, or global internet platforms, one thing is clear – the open internet that its early creators dreamed of is already gone."

- Your peers will exert downward pressure on the number of holidays you actually take.

- If there's no set holiday entitlement, when you leave the company doesn't have to pay for unused holiday days.

- Passionate

- Dispassionate

- Compassionate

Killer robots are already here

Great.

Kargu is a “loitering” drone that uses machine learning-based object classification to select and engage targets, according to STM, and also has swarming capabilities to allow 20 drones to work together.

“The lethal autonomous weapons systems were programmed to attack targets without requiring data connectivity between the operator and the munition: in effect, a true ‘fire, forget and find’ capability,” the experts wrote in the report.

Source: Military drones may have attacked humans for first time without being instructed to, UN report says | The Independent

You can never get rid of what is part of you, even when you throw it away

🤖 Why the Dancing Robots Are a Really, Really Big Problem — "No, robots don’t dance: they carry out the very precise movements that their — exceedingly clever — programmers design to move in a way that humans will perceive as dancing. It is a simulacrum, a trompe l’oeil, a conjurer’s trick. And it works not because of something inherent in the machinery, but because of something inherent in ours: our ever-present capacity for finding the familiar. It looks like human dancing, except it’s an utterly meaningless act, stripped of any social, cultural, historical, or religious context, and carried out as a humblebrag show of technological might."

💭 Why Do We Dream? A New Theory on How It Protects Our Brains — "We suggest that the brain preserves the territory of the visual cortex by keeping it active at night. In our “defensive activation theory,” dream sleep exists to keep neurons in the visual cortex active, thereby combating a takeover by the neighboring senses."

✅ A simple 2 x 2 for choices — "It might be simple, but it’s not always easy. Success doesn’t always mean money, it just means that you got what you were hoping for. And while every project fits into one of the four quadrants, there’s no right answer for any given person or any given moment."

📅 Four-day week means 'I don't waste holidays on chores' — "The four-day working week with no reduction in pay is good for the economy, good for workers and good for the environment. It's an idea whose time has come."

💡 100 Tips For A Better Life — "It is cheap for people to talk about their values, goals, rules, and lifestyle. When people’s actions contradict their talk, pay attention!"

As scarce as truth is, the supply has always been in excess of demand

💬 Welcome to the Next Level of Bullshit

📚 The Best Self-Help Books of the 21st Century

💊 A radical prescription to make work fit for the future

👣 This desolate English path has killed more than 100 people

Quotation-as-title by Josh Billings. Image from top linked post.

To be in process of change is not an evil, any more than to be the product of change is a good

🌐 Unlimited Information Is Transforming Society

📱 Your Smartphone Can Tell If You’re Drunk-Walking

🚸 Britain's obsession with school uniform reinforces social divisions

🤖 Robot Teachers, Racist Algorithms, and Disaster Pedagogy

Quotation-as-title by Marcus Aurelius. Image from top linked post.

Arguing that you don't care about the right to privacy because you have nothing to hide is no different than saying you don't care about free speech because you have nothing to say

Post-pandemic surveillance culture

Today's title comes from Edward Snowden, and is a pithy overview of the 'nothing to hide' argument that I guess I've struggled to answer over the years. I'm usually so shocked that an intelligent person would say something to that effect, that I'm not sure how to reply.

When you say, ‘I have nothing to hide,’ you’re saying, ‘I don’t care about this right.’ You’re saying, ‘I don’t have this right, because I’ve got to the point where I have to justify it.’ The way rights work is, the government has to justify its intrusion into your rights.

Edward Snowden

This, then, is the fifth article in my ongoing blogchain about post-pandemic society, which already includes:

It does not surprise me that those with either a loose grip on how the world works, or those who need to believe that someone, somewhere has 'a plan', believe in conspiracy theories around the pandemic.

What is true, and what can easily be mistaken for 'planning' is the preparedness of those with a strong ideology to double-down on it during a crisis. People and organisations reveal their true colours under stress. What was previously a long game now becomes a short-term priority.

For example, this week, the US Senate "voted to give law enforcement agencies access to web browsing data without a warrant", reports VICE. What's interesting, and concerning to me, is that Big Tech and governments are acting like they've already won the war on harvesting our online life, and now they're after our offline life, too.

I have huge reservations about the speed at which Covid-19 apps for contact tracing are being launched when, ultimately, they're likely to be largely ineffective.

We already know how to do contact tracing well and to train people how to do it. But, of course, it costs money and is an investment in people instead of technology, and privacy instead of surveillance.

There are plenty of articles out there on the difference between the types of contact tracing apps that are being developed, and this BBC News article has a useful diagram showing the differences between the two.

TL;DR: there is no way that kind of app is going on my phone. I can't imagine anyone I know who understands tech even a little bit installing it either.

Whatever the mechanics of how it goes about doing it happen to be, the whole point of a contact tracing app is to alert you and the authorities when you have been in contact with someone with the virus. Depending on the wider context, that may or may not be useful to you and society.

However, such apps are more widely applicable. One of the important things to do with any technology is to think about the effects it could have. What other effects could an app like this have, especially if it's baked into the operating systems of devices used by 99% of smartphone users worldwide?

The above diagram is Marshall McLuhan's tetrad of media effects, which is a useful frame for thinking about the impact of technology on society.

Big Tech and governments have our online social graphs, a global map of how everyone relates to everyone else in digital spaces. Now they're going after our offline social graphs too.

Exhibit A

The general reaction to this seemed to be one of eye-rolling and expressing some kind of Chinese exceptionalism when this was reported back in January.

Exhibit B

Today, this Boston Dynamics robot is trotting around parks in Singapore reminding everyone about social distancing. What are these robots doing in five years' time?

Exhibit C

Drones in different countries are disinfecting the streets. What's their role by 2030?

I think it's drones that concern me most of all. Places like Baltimore were already planning overhead surveillance pre-pandemic, and our current situation has only accelerated and exacerbated that trend.

In that case, it's US Predator drones that have previously been used to monitor and bomb places in the Middle East that are being deployed on the civilian population. These drones operate from a great height, unlike the kind of consumer drones that anyone can buy.

However, as was reported last year, we're on the cusp of photovoltaic drones that can fly for days at a time:

This breakthrough has big implications for technologies that currently rely on heavy batteries for power. Thermophotovoltaics are an ultralight alternative power source that could allow drones and other unmanned aerial vehicles to operate continuously for days. It could also be used to power deep space probes for centuries and eventually an entire house with a generator the size of an envelope.

Linda Vu (TechXplore)

Not only will the government be able to fly thousands of low-cost drones to monitor the population, but they can buy technology, like this example from DefendTex, to take down other drones.

That is, of course, if civilian drones continue to be allowed, especially given the 'security risk' of Chinese-made drones flying around.

It's interesting times for those who keep a watchful eye on their civil liberties and government invasion of privacy. Bear that in mind when tech bros tell you not to fear robots because they're dumb. The people behind them aren't, and they have an agenda.

Header image via Pixabay

Technology is the name we give to stuff that doesn't work properly yet

So said my namesake Douglas Adams. In fact, he said lots of wise things about technology, most of them too long to serve as a title.

I'm in a weird place, emotionally, at the moment, but sometimes this can be a good thing. Being taken out of your usual 'autopilot' can be a useful way to see things differently. So I'm going to take this opportunity to share three things that, to be honest, make me a bit concerned about the next few years...

Attempts to put microphones everywhere

In an article for Slate, Shannon Palus ranks all of Amazon's new products by 'creepiness'. The Echo Frames are, in her words:

A microphone that stays on your person all day and doesn’t look like anything resembling a microphone, nor follows any established social codes for wearable microphones? How is anyone around you supposed to have any idea that you are wearing a microphone?

Shannon Palus

When we're not talking about weapons of mass destruction, it's not the tech that concerns me, but the context in which the tech is used. As Palus points out, how are you going to be able to have a 'quiet word' with anyone wearing glasses ever again?

It's not just Amazon, of course. Google and Facebook are at it, too.

Full-body deepfakes

With the exception, perhaps, of populist politicians, I don't think we're ready for a post-truth society. Check out the video above, which shows Chinese technology that allows for 'full body deepfakes'.

The video is embedded, along with a couple of others in an article for Fast Company by DJ Pangburn, who also notes that AI is learning human body movements from videos. Not only will you be able to prank your friends by showing them a convincing video of your ability to do 100 pull-ups, but the fake news it engenders will mean we can't trust anything any more.

Neuromarketing

If you clicked on the 'super-secret link' in Sunday's newsletter, you will have come across STEALING UR FEELINGS which is nothing short of incredible. As powerful as it is in showing you the kind of data that organisations have on us, it's the tip of the iceberg.

Kaveh Waddell, in an article for Axios, explains that brains are the last frontier for privacy:

"The sort of future we're looking ahead toward is a world where our neural data — which we don't even have access to — could be used" against us, says Tim Brown, a researcher at the University of Washington Center for Neurotechnology.

Kaveh Waddell

This would lead to 'neuromarketing', with advertisers knowing what triggers and influences you better than you know yourself. Also, it will no doubt be used for discriminatory purposes and, because it's coming directly from your brainwaves, short of literally wearing a tinfoil hat, there's nothing much you can do.

So there we are. Am I being too fearful here?

One can see only what one has already seen

Fernando Pessoa with today's quotation-as-title. He's best known for The Book of Disquiet which he called "a factless autobiography". It's... odd. Here's a sample:

Whether or not they exist, we're slaves to the gods.

Fernando Pessoa

I've been reading a lot of Seneca recently, who famously said:

Life is divided into three periods, past, present and future. Of these, the present is short, the future is doubtful, the past is certain.

Seneca

The trouble is, we try and predict the future in order to control the future. Some people have a good track record in this, partly because they are involved in shaping things in the present. Other people have a vested interest in trying to get the world to bend to their ideology.

In an article for WIRED, Joi Ito, Director of the MIT Media Lab, writes about 'extended intelligence' being the future rather than AI:

The notion of singularity – which includes the idea that AI will supercede humans with its exponential growth, making everything we humans have done and will do insignificant – is a religion created mostly by people who have designed and successfully deployed computation to solve problems previously considered impossibly complex for machines.

Joi Ito

It's a useful counter-balance to those banging the AI drum and talking about the coming jobs apocalypse.

After talking about 'S curves' and adaptive systems, Ito explains that:

Instead of thinking about machine intelligence in terms of humans vs machines, we should consider the system that integrates humans and machines – not artificial intelligence but extended intelligence. Instead of trying to control or design or even understand systems, it is more important to design systems that participate as responsible, aware and robust elements of even more complex systems.

Joi Ito

I haven't had a chance to read it yet, but I'm looking forward to seeing some of the ideas put forward in The Weight of Light: a collection of solar futures (which is free to download in multiple formats). We need to stop listening solely to rich white guys proclaiming the Silicon Valley narrative of 'disruption'. There are many other, much more collaborative and egalitarian, ways of thinking about and designing for the future.

This collection was inspired by a simple question: what would a world powered entirely by solar energy look like? In part, this question is about the materiality of solar energy—about where people will choose to put all the solar panels needed to power the global economy. It’s also about how people will rearrange their lives, values, relationships, markets, and politics around photovoltaic technologies. The political theorist and historian Timothy Mitchell argues that our current societies are carbon democracies, societies wrapped around the technologies, systems, and logics of oil. What will it be like, instead, to live in the photon societies of the future?

The Weight of Light: a collection of solar futures

We create the future, it doesn't just happen to us. My concern is that we don't recognise the signs that we're in the last days. Someone shared this quotation from the philosopher Kierkegaard recently, and I think it describes where we're at pretty well:

A fire broke out backstage in a theatre. The clown came out to warn the public; they thought it was a joke and applauded. He repeated it; the acclaim was even greater. I think that's just how the world will come to an end: to general applause from wits who believe it's a joke.

Søren Kierkegaard

Let's hope we collectively wake up before it's too late.

Also check out:

The robot economy and social-emotional skills

Ben Williamson writes:

The steady shift of the knowledge economy into a robot economy, characterized by machine learning, artificial intelligence, automation and data analytics, is now bringing about changes in the ways that many influential organizations conceptualize education moving towards the 2020s. Although this is not an epochal or decisive shift in economic conditions, but rather a slow metamorphosis involving machine intelligence in the production of capital, it is bringing about fresh concerns with rethinking the purposes and aims of education as global competition is increasingly linked to robot capital rather than human capital alone.

A plethora of reports and pronouncements by 'thought-leaders' and think tanks warn us about a medium-term future where jobs are 'under threat'. This has a concomitant impact on education:

The first is that education needs to de-emphasize rote skills of the kind that are easy for computers to replace and stress instead more digital upskilling, coding and computer science. The second is that humans must be educated to do things that computerization cannot replace, particularly by upgrading their ‘social-emotional skills’.

A few years ago, I remember asking someone who ran different types of coding bootcamps which would be the best approach for me. Somewhat conspiratorially, he told me that I didn't need to learn to code, I just needed to learn how to manage those who do the coding. As robots and AI become more sophisticated and can write their own programs, I suspect this 'management' will include non-human actors.

Of all of the things I’ve had to learn for and during my (so-called) career, the hardest has been gaining the social-emotional skills to work remotely. This isn’t an easy thing to unpack, especially when we’re all encouraged to have a ‘mission’ in life and to be emotionally invested in our work.

Williamson notes:

The OECD’s Andreas Schleicher is especially explicit about the perceived strategic importance of cultivating social-emotional skills to work with artificial intelligence, writing that ‘the kinds of things that are easy to teach have become easy to digitise and automate. The future is about pairing the artificial intelligence of computers with the cognitive, social and emotional skills, and values of human beings’.

I’m less bothered about Schleicher’s link between social-emotional skills and the robot economy. I reckon that, no matter what time period you live in, there are knowledge and skills you need to be successful when interacting with other human beings.

Moreover, he casts this in clearly economic terms, noting that ‘humans are in danger of losing their economic value, as biological and computer engineering make many forms of human activity redundant and decouple intelligence from consciousness’. As such, human emotional intelligence is seen as complementary to computerized artificial intelligence, as both possess complementary economic value. Indeed, by pairing human and machine intelligence, economic potential would be maximized.

[…]

The keywords of the knowledge economy have been replaced by the keywords of the robot economy. Even if robotization does not pose an immediate threat to the future jobs and labour market prospects of students today, education systems are being pressured to change in anticipation of this economic transformation.

That being said, there are ways of interacting with machines that are important to learn to get ahead. I stand by what I said in 2013 about the importance of including computational thinking in school curricula. To me, education is about producing healthy, engaged citizens. They need to understand the world around them, be (digitally) confident in it, and have the conceptual tools to be able to problem-solve.

Source: Code Acts in Education

What can dreams of a communist robot utopia teach us about human nature?

This article in Aeon by Victor Petrov posits that, in the post-industrial age, we no longer see human beings as primarily manual workers, but as thinkers using digital screens to get stuff done. What does that do to our self-image?

The communist parties of eastern Europe grappled with this new question, too. The utopian social order they were promising from Berlin to Vladivostok rested on the claim that proletarian societies would use technology to its full potential, in the service of all working people. Bourgeois information society would alienate workers even more from their own labour, turning them into playthings of the ruling classes; but a socialist information society would free Man from drudgery, unleash his creative powers, and enable him to ‘hunt in the morning … and criticise after dinner’, as Karl Marx put it in 1845. However, socialist society and its intellectuals foresaw many of the anxieties that are still with us today. What would a man do in a world of no labour, and where thinking was done by machines?

Bulgaria was a communist country that, after the Second World War, went from producing cigarettes to being one of the world's largest producers of computers. This had a knock-on effect on what people wrote about in the country.

The Bulgarian reader was increasingly treated to debates about what humanity would be in this new age. Some, such as the philosopher Mityu Yankov, argued that what set Man apart from the animals was his ability to change and shape nature. For thousands of years, he had done this through physical means and his own brawn. But the Industrial Revolution had started a change of Man’s own nature, which was culminating with the Information Revolution – humanity now was becoming not a worker but a ‘governor’, a master of nature, and the means of production were not machines or muscles, but the human brain.

Lyuben Dilov, a popular sci-fi author, focused on "the boundaries between man and machine, brain and computer". His books were full of societies obsessed with technology.

Added to this, there is technological anxiety, too – what is it to be a man when there are so many machines? Thus, Dilov invents a Fourth Law of Robotics, to supplement Asimov’s famous three, which states that ‘the robot must, in all circumstances, legitimate itself as a robot’. This was a reaction by science to the roboticists’ wish to give their creations ever more human qualities and appearance, making them subordinate to their function – often copying animal or insect forms. Zenon muses on human interactions with robots that start from a young age, giving the child power over the machine from the outset. This undermines our trust in the very machines on which we depend. Humans need a distinction from the robots, they need to know that they are always in power and couldn’t be lied to. For Dilov, the anxiety was about the limits of humanity, at least in its current stage – fearful, humans could not yet treat anything else, including their machines, as equals.

This all seems very pertinent at a time when deepfakes make us question what is real online. We're perhaps less worried about a Blade Runner-style dystopia and more concerned about digital 'reality' but, nevertheless, questions about what it means to be human persist.

Bulgarian robots were both to be feared and they were the future. Socialism promised to end meaningless labour but reproduced many of the anxieties that are still with us today in our ever-automating world. What can Man do that a machine cannot do is something we still haven’t solved. But, like Kesarovski, perhaps we need not fear this new world so much, nor give up our reservations for the promise of a better, easier world.

Source: Aeon

The spectrum of work autonomy

Some companies have (and advertise as a huge perk) their ‘unlimited vacation’ policy. That, of course, sounds amazing. Except, of course, that there’s a reason why companies are so benevolent.

I can think of at least two:

- Your peers will exert downward pressure on the number of holidays you actually take.

- If there's no set holiday entitlement, when you leave the company doesn't have to pay for unused holiday days.

And that, increasingly, is the dividing line in modern workplaces: trust versus the lack of it; autonomy versus micro-management; being treated like a human being or programmed like a machine. Human jobs give the people who do them chances to exercise their own judgment, even if it’s only deciding what radio station to have on in the background, or set their own pace. Machine jobs offer at best a petty, box-ticking mentality with no scope for individual discretion, and at worst the ever-present threat of being tracked, timed and stalked by technology – a practice reaching its nadir among gig economy platforms controlling a resentful army of supposedly self-employed workers.

Never mind robots coming to steal our jobs; that's just a symptom of a wider trend of neoliberal, late-stage capitalism:

There have always been crummy jobs, and badly paid ones. Not everyone gets to follow their dream or discover a vocation – and for some people, work will only ever be a means of paying the rent. But the saving grace of crummy jobs was often that there was at least some leeway for goofing around; for taking a fag break, gossiping with your equally bored workmates, or chatting a bit longer than necessary to lonely customers.

The 'contract' with employers these days goes way beyond the piece of paper you sign that states such mundanities as how much you will be paid or how much holiday you get. It's about trust, as Hinsliff comments:

The mark of human jobs is an increasing understanding that you don’t have to know where your employees are and what they’re doing every second of the day to ensure they do it; that people can be just as productive, say, working from home, or switching their hours around so that they are working in the evening. Machine jobs offer all the insecurity of working for yourself without any of the freedom.

Embedded in this are huge diversity issues. I purposely chose a photo of a young white guy to go with the post, as they're disproportionately likely to do well from this 'trust-based' workplace approach. People of colour, women, and those with disabilities are more likely to suffer from implicit bias and other forms of discrimination.

The debate about whether robots will soon be coming for everyone’s jobs is real. But it shouldn’t blind us to the risk right under our noses: not so much of people being automated out of jobs, as automated while still in them.

I consume a lot of what I post to Thought Shrapnel online, but I originally read this one in the dead-tree version of The Guardian. Interestingly, in the same issue there was a letter from a doctor by the name of Jonathan Shapiro, who wrote that he divides his colleagues into three different types:

- Passionate

- Dispassionate

- Compassionate

What we need to be focusing on in education is preparing young people to be compassionate human beings, not cogs in the capitalist machine.

Source: The Guardian

Robo-advisors are coming for your job (and that's OK)

Algorithms and artificial intelligence are an increasingly normal part of our everyday lives, notes this article, so the next step is in the workplace:

Each one of us is becoming increasingly more comfortable being advised by robots for everything from what movie to watch to where to put our retirement. Given the groundwork that has been laid for artificial intelligence in companies, it’s only a matter of time before the $60 billion consulting industry in the U.S. is going to be disrupted by robotic advisors.

I remember years ago being told that by 2020 it would be normal to have an algorithm on your team. It sounded fanciful at the time, but now we just take it for granted:

Robo-advisors have the potential to deliver a broader array of advice and there may be a range of specialized tools in particular decision domains. These robo-advisors may be used to automate certain aspects of risk management and provide decisions that are ethical and compliant with regulation. In data-intensive fields like marketing and supply chain management, the results and decisions that robotic algorithms provide is likely to be more accurate than those made by human intuition.

I'm kind of looking forward to this becoming a reality, to be honest. 'Let machines do what machines are good at, and humans do what humans are good at' would be my mantra.

Source: Harvard Business Review

Is it pointless to ban autonomous killing machines?

The authors do have a point:

Suppose the UN were to implement a preventive ban on the further development of all autonomous weapons technology. Further suppose – quite optimistically, already – that all armies around the world were to respect the ban, and abort their autonomous-weapons research programmes. Even with both of these assumptions in place, we would still have to worry about autonomous weapons. A self-driving car can be easily re-programmed into an autonomous weapons system: instead of instructing it to swerve when it sees a pedestrian, just teach it to run over the pedestrian.

Source: Aeon