- We Have No Reason to Believe 5G Is Safe (Scientific American) — "The latest cellular technology, 5G, will employ millimeter waves for the first time in addition to microwaves that have been in use for older cellular technologies, 2G through 4G. Given limited reach, 5G will require cell antennas every 100 to 200 meters, exposing many people to millimeter wave radiation... [which are] absorbed within a few millimeters of human skin and in the surface layers of the cornea. Short-term exposure can have adverse physiological effects in the peripheral nervous system, the immune system and the cardiovascular system."

- Situated degree pathways (The Ed Techie) — "[T]he Trukese navigator “begins with an objective rather than a plan. He sets off toward the objective and responds to conditions as they arise in an ad hoc fashion. He utilizes information provided by the wind, the waves, the tide and current, the fauna, the stars, the clouds, the sound of the water on the side of the boat, and he steers accordingly.” This is in contrast to the European navigator who plots a course “and he carries out his voyage by relating his every move to that plan. His effort throughout his voyage is directed to remaining ‘on course’."

- on rms / necessary but not sufficient (p1k3) — "To the extent that free software was about wanting the freedom to hack and freely exchange the fruits of your hacking, this hasn’t gone so badly. It could be better, but I remember the 1990s pretty well and I can tell you that much of the stuff trivially at my disposal now would have blown my tiny mind back then. Sometimes I kind of snap to awareness in the middle of installing some package or including some library in a software project and this rush of gratitude comes over me."

- Screen time is good for you—maybe (MIT Technology Review) — "Przybylski admitted there are some drawbacks to his team’s study: demographic effects, like socioeconomics, are tied to psychological well-being, and he said his team is working to differentiate those effects—along with the self-selection bias introduced when kids and their caregivers report their own screen use. He also said he was working to figure out whether a certain type of screen use was more beneficial than others."

- This Map Lets You Plug in Your Address to See How It’s Changed Over the Past 750 Million Years (Smithsonian Magazine) — "Users can input a specific address or more generalized region, such as a state or country, and then choose a date ranging from zero to 750 million years ago. Currently, the map offers 26 timeline options, traveling back from the present to the Cryogenian Period at intervals of 15 to 150 million years."

- Understanding extinction — humanity has destroyed half the life on Earth (CBC) — "One of the most significant ways we've reduced the biomass on the planet is by altering the kind of life our planet supports. One huge decrease and shift was due to the deforestation that's occurred with our increasing reliance on agriculture. Forests represent more living material than fields of wheat or soybeans."

- Honks vs. Quacks: A Long Chat With the Developers of 'Untitled Goose Game' (Vice) — "[L]ike all creative work, this game was made through a series of political decisions. Even if this doesn’t explicitly manifest in the text of the game, there are a bunch of ambient traces of our politics evident throughout it: this is why there are no cops in the game, and why there’s no crown on the postbox."

- What is the Zeroth World, and how can we use it? (Bryan Alexander) — "[T]he idea of a zeroth world is also a critique. The first world idea is inherently self-congratulatory. In response, zeroth sets the first in some shade, causing us to see its flaws and limitations. Like postmodern to modern, or Internet2 to the rest of the internet, it’s a way of helping us move past the status quo."

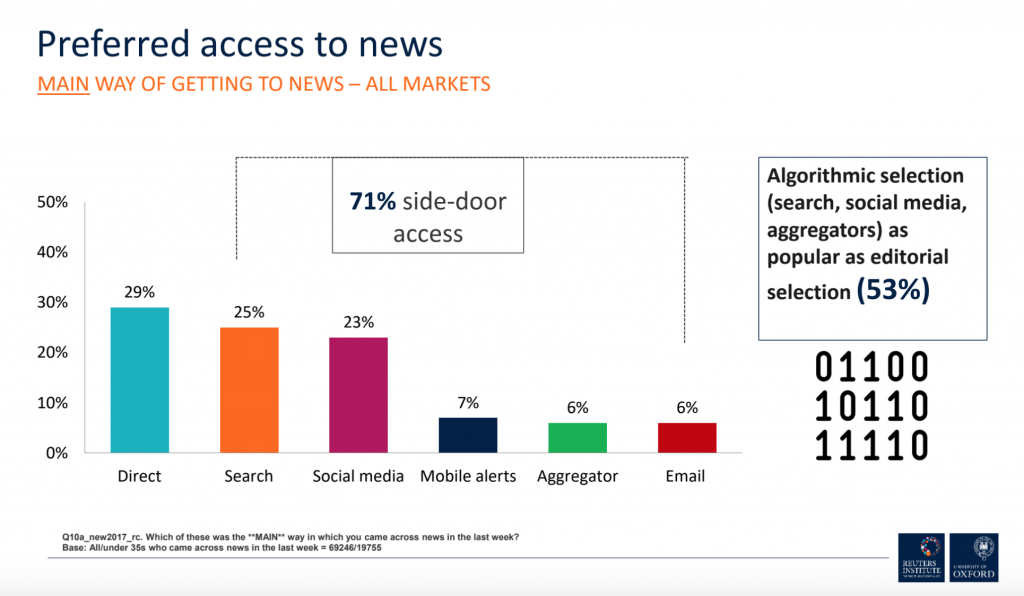

- It’s not the claim, it’s the frame (Hapgood) — "[A] news-reading strategy where one has to check every fact of a source because the source itself cannot be trusted is neither efficient nor effective. Disinformation is not usually distributed as an entire page of lies.... Even where people fabricate issues, they usually place the lies in a bed of truth."

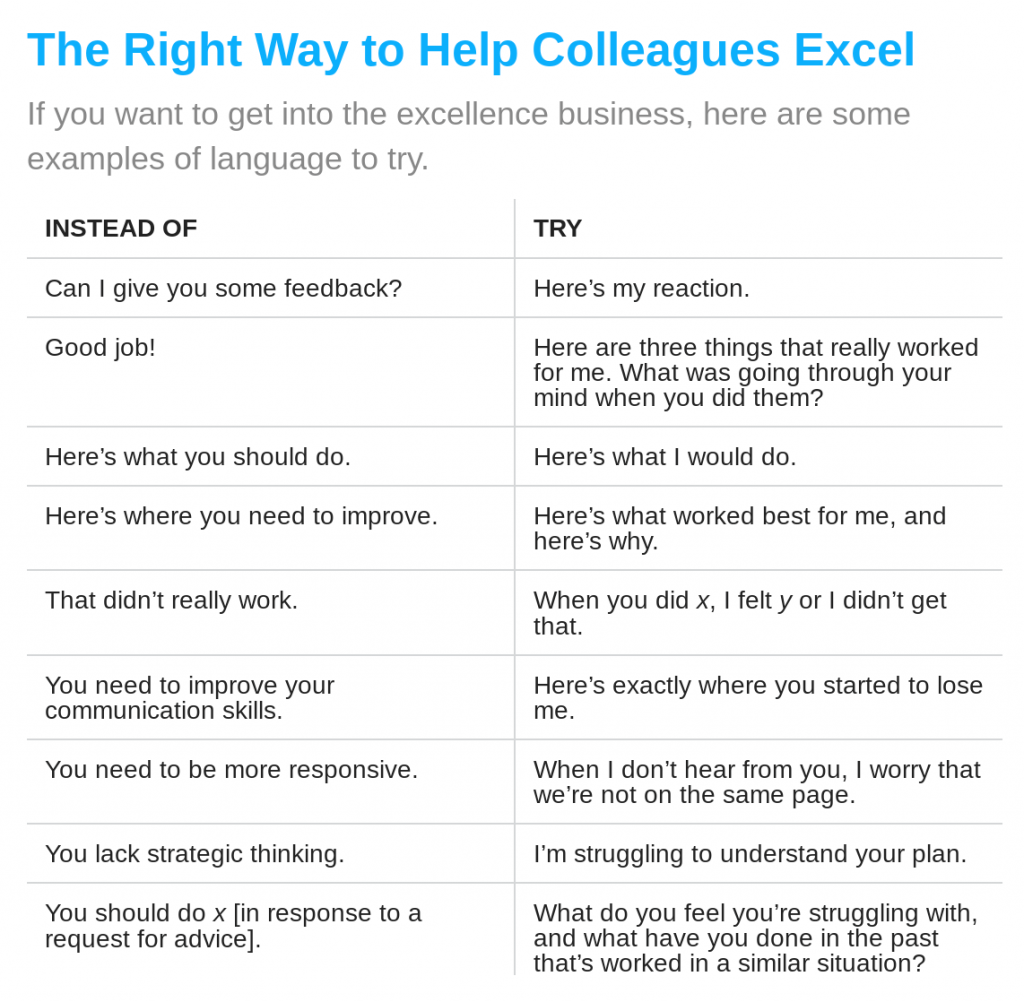

- Other people are more aware than you are of your weaknesses

- You lack certain abilities you need to acquire, so your colleagues should teach them to you

- Great performance is universal, analyzable, and describable, and, once defined, it can be transferred from one person to another, regardless of who each individual is

- Iris Murdoch, The Art of Fiction No. 117 (The Paris Review) — "I would abominate the idea of putting real people into a novel, not only because I think it’s morally questionable, but also because I think it would be terribly dull."

- How an 18th-Century Philosopher Helped Solve My Midlife Crisis (The Atlantic) — "I had found my salvation in the sheer endless curiosity of the human mind—and the sheer endless variety of human experience."

- A brief history of almost everything in five minutes (Aeon) — According to [the artist], the piece ‘is intended for both introspection and self-reflection, as a mirror to ourselves, our own mind and how we make sense of what we see; and also as a window into the mind of the machine, as it tries to make sense of its observations and memories’.

- Teens 'not damaged by screen time', study finds (BBC Technology) — "The analysis is robust and suggests an overall population effect too small to warrant consideration as a public health problem. They also question the widely held belief that screens before bedtime are especially bad for mental health."

- Human Contact Is Now a Luxury Good (The New York Times) — "The rich have grown afraid of screens. They want their children to play with blocks, and tech-free private schools are booming. Humans are more expensive, and rich people are willing and able to pay for them. Conspicuous human interaction — living without a phone for a day, quitting social networks and not answering email — has become a status symbol."

- NHS sleep programme ‘life changing’ for 800 Sheffield children each year (The Guardian) — "Families struggling with children’s seriously disrupted sleep have seen major improvements by deploying consistent bedtimes, banning sugary drinks in the evening and removing toys and electronics from bedrooms."

- AI generates non-stop stream of death metal (Engadget) — "The result isn't entirely natural, if simply because it's not limited by the constraints of the human body. There are no real pauses. However, it certainly sounds the part — you'll find plenty of hyper-fast drums, guitar thrashing and guttural growling."

- How AI Will Turn Us All Into Filmmakers (WIRED) — "AI-assisted editing won’t make Oscar-worthy auteurs out of us. But amateur visual storytelling will probably explode in complexity."

- Experts Weigh in on Merits of AI in Education (THE Journal) — "AI systems are perfect for analyzing students’ progress, providing more practice where needed and moving on to new material when students are ready," she stated. "This allows time with instructors to focus on more complex learning, including 21st-century skills."

Information cannot be transmitted faster than the [vacuum] speed of light

It’s been a while since I studied Physics, so I confess to not exactly understanding what’s going on here. However, if it speeds up my internet connection at some point in the future, it’s all good.

"Our experiment shows that the generally held misconception that nothing can move faster than the speed of light, is wrong. Einstein's Theory of Relativity still stands, however, because it is still correct to say that information cannot be transmitted faster than the vacuum speed of light," said Dr. Lijun Wang. "We will continue to study the nature of light and hopefully it will provide us with a better insight about the natural world and further stimulate new thinking towards peaceful applications that will benefit all humanity."

Source: Laser pulse travels 300 times faster than light

Friday facilitations

This week, je presente...

Image of hugelkultur bed via Sid

We never look at just one thing; we are always looking at the relation between things and ourselves

Today's title comes from John Berger's Ways of Seeing, which is an incredible book. Soon after the above quotation, he continues,

The eye of the other combines with our own eye to make it fully credible that we are part of the visible world.

John Berger

That period of time when you come to be you is really interesting. As an adolescent, and before films like The Matrix, I can remember thinking that the world literally revolved around me; that other people were testing me in some way. I hope that's kind of normal, and I'd add somewhat hastily that I grew out of that way of thinking a long time ago. Obviously.

All of this is a roundabout way of saying that we cannot know the 'inner lives' of other people, or in fact that they have them. Writing in The Guardian, psychologist Oliver Burkeman notes that we sail through life assuming that we experience everything similarly, when that's not true at all:

A new study on a technical-sounding topic – “genetic variation across the human olfactory receptor repertoire” – is a reminder that we smell the world differently... Researchers found that a single genetic mutation accounts for many of those differences: the way beetroot smells (and tastes) like disgustingly dirty soil to some people, or how others can’t detect the smokiness of whisky, or smell lily of the valley in perfumes.

Oliver Burkeman

I know that my wife sees colours differently to me, as purple is one of her favourite colours. Neither of us is colour-blind, but some things she calls 'purple' are in no way 'purple' to me.

So when it comes to giving one another feedback, where should we even begin? How can we know the intentions or the thought processes behind someone's actions? In an article for Harvard Business Review, Marcus Buckingham and Ashley Goodall explain that the way we think about feedback rests on three theories:

All of these, the authors claim, are false:

What the research has revealed is that we’re all color-blind when it comes to abstract attributes, such as strategic thinking, potential, and political savvy. Our inability to rate others on them is predictable and explainable—it is systematic. We cannot remove the error by adding more data inputs and averaging them out, and doing that actually makes the error bigger.

Buckingham & Goodall

What I liked was their actionable advice about how to help colleagues thrive, captured in this table:

Finally, as an educator and parent, I've noticed that human learning doesn't follow a linear trajectory. Anything but, in fact. Yet we talk and interact as though it does. That's why I found Good Things By Their Nature Are Fragile by Jason Kottke so interesting, quoting a 2005 post from Michael Barrish. I'm going to quote the same section as Kottke:

In 1988 Laura and I created a three-stage model of what we called “living process.” We called the three stages Good Thing, Rut, and Transition. As we saw it, Good Thing becomes Rut, Rut becomes Transition, and Transition becomes Good Thing. It’s a continuous circuit.

A Good Thing never leads directly to a Transition, in large part because it has no reason to. A Good Thing wants to remain a Good Thing, and this is precisely why it becomes a Rut. Ruts, on the other hand, want desperately to change into something else.

Transitions can be indistinguishable from Ruts. The only important difference is that new events can occur during Transitions, whereas Ruts, by definition, consist of the same thing happening over and over.

Michael Barrish

In life, sometimes we don't even know what stage we're in, never mind other people. So let's cut one another some slack, dispel the three myths about feedback listed above, and allow people to be different to us in diverse and glorious ways.

Also check out:

Header image: webcomicname.com

Cutting the Gordian knot of 'screen time'

Let's start this with an admission: my wife and I limit our children's time on their tablets, and they're only allowed on our games console at weekends. Nevertheless, I still maintain that wielding 'screen time' as a blunt instrument does more harm than good.

There's a lot of hand-wringing on this subject, especially around social skills and interaction. Take a recent article in The Guardian, for example, where Peter Fonagy, who is a professor of Contemporary Psychoanalysis and Developmental Science at UCL, comments:

“My impression is that young people have less face-to-face contact with older people than they once used to. The socialising agent for a young person is another young person, and that’s not what the brain is designed for.

“It is designed for a young person to be socialised and supported in their development by an older person. Families have fewer meals together as people spend more time with friends on the internet. The digital is not so much the problem – it’s what the digital pushes out.”

I don't disagree that we all need a balance here, but where's the evidence? On balance, I spend more time with my children than my father spent with my sister and me, yet my wife, two children and I probably have fewer mealtimes sat down at a table together than I did with my parents and sister. Different isn't always worse, and in our case it's often due to their sporting commitments.

So I'd agree with Jordan Shapiro, who writes that the World Health Organisation's guidelines on screen time for kids aren't particularly useful. He quotes several sources that dismiss the WHO's recommendations:

Andrew Przybylski, the Director of Research at the Oxford Internet Institute, University of Oxford, said: “The authors are overly optimistic when they conclude screen time and physical activity can be swapped on a 1:1 basis.” He added that, “the advice overly focuses on quantity of screen time and fails to consider the content and context of use. Both the American Academy of Pediatricians and the Royal College of Paediatrics and Child Health now emphasize that not all screen time is created equal.”

That being said, parents still need some guidance. As I've said before, my generation of parents are the first ones having to deal with all of this, so where do we turn for advice?

An article by Roja Heydarpour suggests three strategies, including one from Mimi Ito, for whom I have the utmost respect because of her work around Connected Learning:

“Just because [kids] may meet an unsavory person in the park, we don’t ban them from outdoor spaces,” said Mimi Ito, director of the Connected Learning Lab at University of California-Irvine, at the 10th annual Women in the World Summit on Thursday. After years of research, the mother of two college-age children said she thinks parents need to understand how important digital spaces are to children and adjust accordingly.

Taking away access to these spaces, she said, is taking away what kids perceive as a human right. Gaming is like the proverbial water cooler for many boys, she said. And for many girls, social media can bring access to friends and stave off social isolation. “We all have to learn how to regulate our media consumption,” Ito said. “The longer you delay kids being able to use those muscles, the longer you delay kids learning how to regulate.”

I feel a bit bad reading that, as we've recently banned my son from the game Fortnite, which we felt was taking over his life a little too much. It's not forever, though, and he does have to find that balance between it having a place in his life and literally talking about it all of the freaking time.

One authoritative voice in the area is my friend and sometime collaborator Ian O'Byrne, who, together with Kristen Hawley Turner, has created screentime.me, which features a blog, podcast, and up-to-date research on the subject. Well worth checking out!

Also check out:

The benefits of Artificial Intelligence

As an historian, I’m surprisingly bad at recalling facts and dates. However, I’d argue that the study of history is actually about the relationship between those facts and dates — which, let’s face it, so long as you’re in the right ballpark, you can always look up.

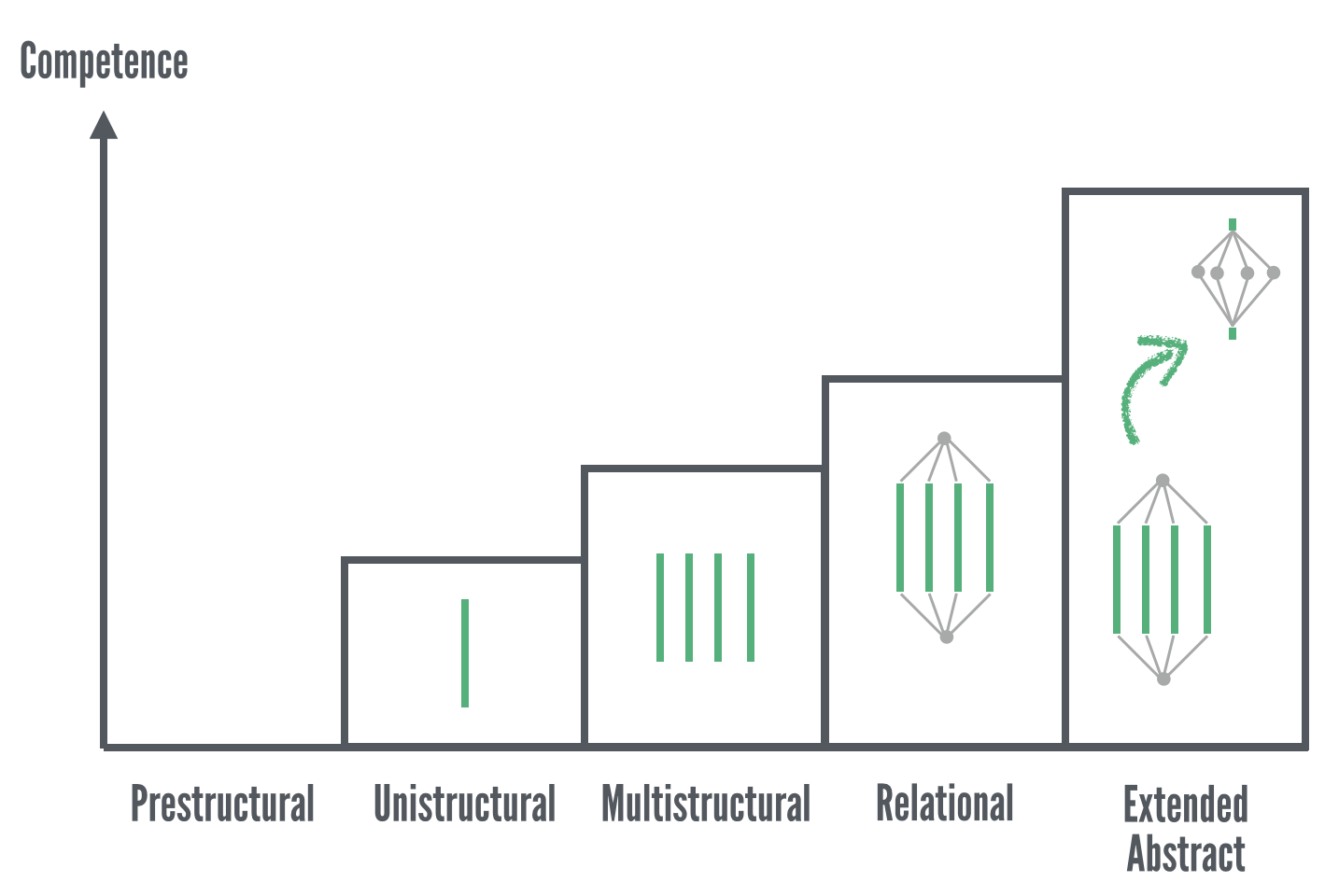

Understanding the relationship between things, I’d argue, is a demonstration of higher-order competence. This is described well by the SOLO Taxonomy, which I featured in my ebook on digital literacies:

This is important, as it helps to explain two related concepts around which people often get confused: ‘artificial intelligence’ and ‘machine learning’. If you look at the diagram above, you can see that the ‘Extended Abstract’ of the SOLO taxonomy also includes the ‘Relational’ part. Similarly, the field of ‘artificial intelligence’ includes ‘machine learning’.

There are some examples of each in this WIRED article, but for the purposes of this post let’s just leave it there. Some of what I want to talk about here involves machine learning and some artificial intelligence. It’s all interesting and affects the future of tech in education and society.

If you’re a gamer, you’ll already be familiar with some of the benefits of AI. No longer are ‘CPU players’ dumb; they actually play a lot like human players. That means that, with no unfair advantages programmed in by the designers of the game, the AI can work out strategies to defeat opponents. The recent example of OpenAI Five beating the best players at a game called Dota 2, and then internet teams finding vulnerabilities in the system, is a fascinating battle of human versus machine:

“Beating OpenAI Five is a testament to human tenacity and skill. The human teams have been working together to get those wins. The way people win is to take advantage of every single weakness in Five—some coming from the few parts of Five that are scripted rather than learned—gradually build up resources, and most importantly, never engage Five in a fair fight,” OpenAI co-founder Greg Brockman told Motherboard.

Deepfakes are created via "a technique for human image synthesis based on artificial intelligence... that can depict a person or persons saying things or performing actions that never occurred in reality". There's plenty of porn, of course, but also politically-motivated videos claiming that people said things they never did.

There are benefits here, too, though. Recent AI research shows how it will soon be possible to replace any game character with one created from your own videos. In other words, you will be able to be in the game!

It only took a few short videos of each activity -- fencing, dancing and tennis -- to train the system. It was able to filter out other people and compensate for different camera angles. The research resembles Adobe's "content-aware fill" that also uses AI to remove elements from video, like tourists or garbage cans. Other companies, like NVIDIA, have also built AI that can transform real-life video into virtual landscapes suitable for games.

It's easy to be scared of all of this, fearful that it's going to ravage our democratic institutions and cause a meltdown of civilisation. But, actually, the best way to ensure that it's not used for those purposes is to try and understand it. To play with it. To experiment.

Algorithms have already been appointed to the boards of some companies and, if you think about it, there are plenty of job roles where automated testing is entirely normal. I’m looking forward to a world where AI makes our lives a whole lot easier and friction-free.

Also check out:

When we eat matters

As I get older, I’m more aware that some things I do are strongly affected by the world around me. For example, since finding out that the intensity of light you experience during the day is correlated with the amount of sleep you get, I don’t feel so bad about ‘sleeping in’ during the summer months.

So it shouldn’t be surprising that this article in The New York Times suggests that there’s a good and a bad time to eat:

A growing body of research suggests that our bodies function optimally when we align our eating patterns with our circadian rhythms, the innate 24-hour cycles that tell our bodies when to wake up, when to eat and when to fall asleep. Studies show that chronically disrupting this rhythm — by eating late meals or nibbling on midnight snacks, for example — could be a recipe for weight gain and metabolic trouble.

A more promising approach is what some call 'intermittent fasting', where you restrict your calorie intake to eight hours of the day and consume nothing but water for the other 16.

This approach, known as early time-restricted feeding, stems from the idea that human metabolism follows a daily rhythm, with our hormones, enzymes and digestive systems primed for food intake in the morning and afternoon. Many people, however, snack and graze from roughly the time they wake up until shortly before they go to bed. Dr. Panda has found in his research that the average person eats over a 15-hour or longer period each day, starting with something like milk and coffee shortly after rising and ending with a glass of wine, a late night meal or a handful of chips, nuts or some other snack shortly before bed. That pattern of eating, he says, conflicts with our biological rhythms.

So when should we eat? As early as possible in the day, it would seem:

Most of the evidence in humans suggests that consuming the bulk of your food earlier in the day is better for your health, said Dr. Courtney Peterson, an assistant professor in the department of nutrition sciences at the University of Alabama at Birmingham. Dozens of studies demonstrate that blood sugar control is best in the morning and at its worst in the evening. We burn more calories and digest food more efficiently in the morning as well.

That's not great news for me. After a protein smoothie in the morning and eggs for lunch, I end up eating most of my calories in the evening. I'm going to have to rethink my regime...

Source: The New York Times

Audio Adversarial speech-to-text

I don’t usually go in for detailed technical papers on stuff that’s not directly relevant to what I’m working on, but I made an exception for this. Here’s the abstract:

We construct targeted audio adversarial examples on automatic speech recognition. Given any audio waveform, we can produce another that is over 99.9% similar, but transcribes as any phrase we choose (at a rate of up to 50 characters per second). We apply our white-box iterative optimization-based attack to Mozilla’s implementation DeepSpeech end-to-end, and show it has a 100% success rate. The feasibility of this attack introduce a new domain to study adversarial examples.

In other words, the researchers managed to fool a neural network devoted to speech recognition into transcribing a phrase different to that which was uttered.

So how does it work?

By starting with an arbitrary waveform instead of speech (such as music), we can embed speech into audio that should not be recognized as speech; and by choosing silence as the target, we can hide audio from a speech-to-text system.

The authors state that merely changing words so that something different occurs is a standard adversarial attack. But a targeted adversarial attack is different:

Not only are we able to construct adversarial examples converting a person saying one phrase to that of them saying a different phrase, we are also able to begin with arbitrary non-speech audio sample and make that recognize as any target phrase.

This kind of stuff is possible due to open source projects, in particular Mozilla Common Voice. Great stuff.
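To get a feel for what a "white-box iterative optimization-based attack" means, here is a minimal sketch in Python. It is emphatically not the paper's method and has nothing to do with DeepSpeech: the "recogniser" is a made-up linear model with random weights, the four output classes stand in for phrases, and the names `targeted_attack`, `eps` and `step` are my own assumptions. The only idea it shares with the abstract is the loop: look inside the model, compute which way to nudge each audio sample so the chosen target becomes more likely, take a small step, keep the total change within a small bound, and repeat.

```python
import numpy as np

def softmax(z):
    # Numerically stable softmax over a 1-D array of logits.
    e = np.exp(z - z.max())
    return e / e.sum()

def targeted_attack(W, x, target, eps=0.02, step=0.002, max_iters=40):
    """Iterative signed-gradient attack on a toy linear model (logits = W @ x).

    Hypothetical illustration only: eps bounds the change to any one sample,
    step is the size of each nudge.
    """
    delta = np.zeros_like(x)
    onehot = np.zeros(W.shape[0])
    onehot[target] = 1.0
    for _ in range(max_iters):
        logits = W @ (x + delta)
        if logits.argmax() == target:
            break  # the model now "hears" the target class
        # Gradient of the cross-entropy loss (towards the target) w.r.t. the input.
        grad = W.T @ (softmax(logits) - onehot)
        # Step against the gradient, then clip so the change stays within eps.
        delta = np.clip(delta - step * np.sign(grad), -eps, eps)
    return x + delta

rng = np.random.default_rng(0)
n_samples, n_classes = 64000, 4                # four seconds at 16 kHz, four toy "phrases"
t = np.arange(n_samples) / 16000.0
x = 0.5 * np.sin(2 * np.pi * 440.0 * t)        # the original audio: a plain 440 Hz tone
W = rng.standard_normal((n_classes, n_samples))  # white box: the attacker can read W

original = int((W @ x).argmax())
target = (original + 1) % n_classes            # pick any class other than the original
x_adv = targeted_attack(W, x, target)
adversarial = int((W @ x_adv).argmax())
max_change = float(np.abs(x_adv - x).max())

print("original class:", original)
print("target class:", target)
print("class after attack:", adversarial)
print("largest change to any sample:", max_change)
```

The clipping step is what keeps the new waveform close to the original. In this toy version no sample moves by more than 0.02, against a tone with a peak of 0.5. The real attack works against a far more complicated network and measures similarity differently, so treat this as an illustration of the loop rather than a reproduction of the result.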

Source: arXiv