About that MIT paper on LLMs for essay writing...

I suppose I should say something about this MIT research on the use of LLMs for essay writing. I can guarantee you that most people who are using this paper to justify the position that “the use of LLMs is a bad thing” haven’t even read the proper abstract, never mind the full paper. There’s a lot of “news” about it, which mostly links to this press release.

So let’s actually look at it properly, shall we? We’ll start with part of the actual abstract from the academic paper:

We assigned participants to three groups: LLM group, Search Engine group, Brain-only group, where each participant used a designated tool (or no tool in the latter) to write an essay. We conducted 3 sessions with the same group assignment for each participant. In the 4th session we asked LLM group participants to use no tools (we refer to them as LLM-to-Brain), and the Brain-only group participants were asked to use LLM (Brain-to-LLM). We recruited a total of 54 participants for Sessions 1, 2, 3, and 18 participants among them completed session 4.

The figure of 54 participants is a red herring, as the claims being made in this paper are based on the number of people who completed the fourth session: a total of 18 participants. Nine first used no tool and then used an LLM (“Brain-to-LLM”), and nine first used an LLM and then no tool (“LLM-to-Brain”).

There’s lots of neuroscience in this paper which I’m not in a position to comment on. What I am in a position to comment on is the research design, the claims being made, and the language used to express them. The first thing I’d say is that the press release’s title, Your Brain on ChatGPT, is purposely channeling the This Is Your Brain On Drugs commercial, which aired in the US in the 1980s. I’m not sure that’s a very neutral framing.

Second, any time I see an uncritical reference to “Cognitive Load Theory” in an academic paper, it’s a huge red flag for me. As Alfie Kohn points out, it’s usually a way of justifying direct instruction. In other words, centring the teacher instead of the learner.

Third, the paper is poorly organised and written. For example, if one of my GCSE students back in the day had written the following, I’d have underlined it in red and written “VAGUE” next to it:

Overall, the debate between search engines and LLMs is quite polarized and the new wave of LLMs is about to undoubtedly shape how people learn.

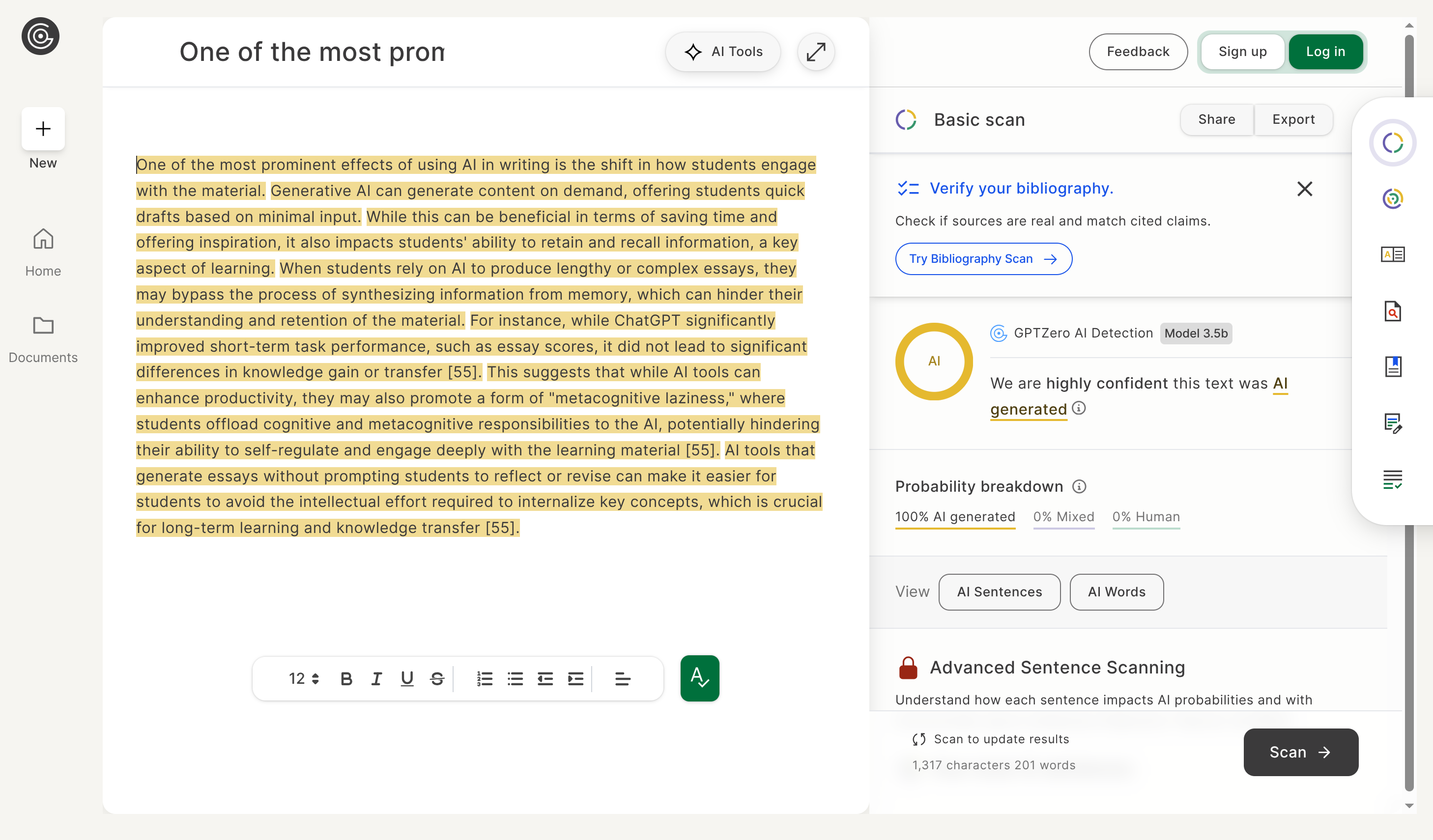

One of the funniest things about the paper, though, is that the authors undoubtedly used AI to write sections of it. For example, here’s a random paragraph (p.19)

So using LLMs is bad for essay-writing, but good for writing academic articles? Please.

I could continue. For example, the research design was terrible (a random collection of people with different levels of educational experience and qualifications), session 4 was optional, and the participants had 20 minutes to write an essay, amongst other things. I mean, if someone gave you the following, pointed you at an LLM and gave you 20 minutes, what would you do?

Many people believe that loyalty whether to an individual, an organization, or a nation means unconditional and unquestioning support no matter what. To these people, the withdrawal of support is by definition a betrayal of loyalty. But doesn’t true loyalty sometimes require us to be critical of those we are loyal to? If we see that they are doing something that we believe is wrong, doesn’t true loyalty require us to speak up, even if we must be critical?

It’s not like they were being prompted to turn in an actual paper. Unlike the authors of this poor excuse for one.

Anyway, life is short and this paper is terrible. I’ll continue to use LLMs in my everyday work, and have zero issues with students using them to complete badly-designed assessment tasks. Final note: academics using LLMs (sometimes to write part of their papers!) while chiding students for doing so is abject hypocrisy.

Source: arXiv

Images: Growtika / Screenshot from GPTzero

Update: I just saw, via a link from Stephen Downes, a TIME Magazine article about this paper which says it hasn’t been peer reviewed. I missed that fact, and while the process isn’t infallible it explains a lot…