The first fully-open LLM to outperform GPT3.5-Turbo and GPT-4o mini

Thanks to Patrick Tanguay, who commented on one of my LinkedIn updates and pointed me towards this post by Simon Willison.

Patrick was commenting in response to my post about ‘openness’ in relation to (generative) AI. Simon has tried out an LLM which claims to have the full stack freely available for inspection and reuse.

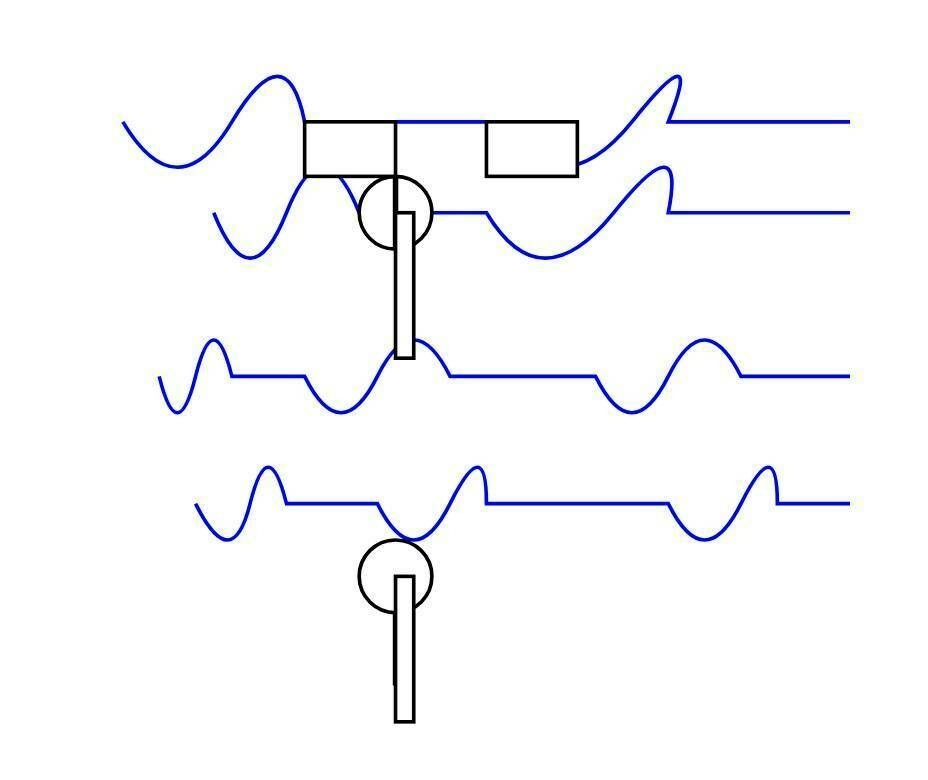

One of the ways he tests these models is by getting them to draw a picture of a pelican riding a bicycle. The image accompanying this post is what OLMo 2 created (“refreshingly abstract”!). To be fair, Google’s Gemma 3 model didn’t do a great job either.

It’s made me think about what an appropriate test suite would be for me personally (i.e. subjectively) and what would be appropriate in general (i.e. objectively). There’s Humanity’s Last Exam, but that’s based on exam-style knowledge, which isn’t always super-practical.

mlx-community/OLMo-2-0325-32B-Instruct-4bit: OLMo 2 32B claims to be “the first fully-open model (all data, code, weights, and details are freely available) to outperform GPT3.5-Turbo and GPT-4o mini”.

Source: Simon Willison’s Weblog