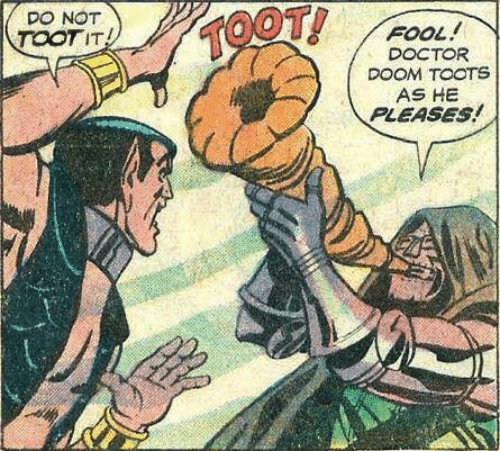

2023

- Twitter: owned by Musk, a fascist

- Blue Sky: funded by Dorsey, a fascist

- Facebook: owned by Zuckerberg, a fascist

- Instagram: owned by Zuckerberg, a fascist

- Threads: owned by Zuckerberg, a fascist

- Post News: funded by Andreessen, a fascist

- TikTok: owned by the Chinese Government I guess?

- Mastodon: owned by nobody and/or everybody! Seize the memes of production!

The techno-feudal economy

Yanis Varoufakis is best known for his short stint as Greek finance minister in 2015 during a stand-off with the European Central Bank, the International Monetary Fund and the European Commission. He’s used that platform to speak out about capitalism and publish several books.

This interview with EL PAÍS is interesting for his analysis that we have moved beyond capitalism to what he calls ‘technofeudalism’. Varoufakis believes that this new economic order has emerged due to the privatisation of the internet and the response to the 2008 financial crisis. Politicians have lost power over large corporations and the system that has emerged is, he believes, incompatible with social democracy and feminism.

Capitalism is now dead. It has been replaced by the techno-feudal economy and a new order. At the heart of my thesis, there’s an irony that may sound confusing at first, but it’s made clear in the book (Technofeudalism: What Killed Capitalism). What’s killing capitalism is capitalism itself. Not the capital we’ve known since the dawn of the industrial age. But a new form, a mutation, that’s been growing over the last two decades. It’s much more powerful than its predecessor, which — like a stupid and overzealous virus — has killed its host. And why has this occurred? Due to two main causes: the privatization of the internet by the United States, but also the large Chinese technology companies. Along with the way in which Western governments and central banks responded to the great financial crisis of 2008.

Source: Yanis Varoufakis: ‘Capitalism is dead. The new order is a techno-feudal economy’ | EL PAÍS

Varoufakis’ latest book warns of the impossibility of social democracy today, as well as the false promises made by the crypto world. “Behind the crypto aristocracy, the only true beneficiaries of these technologies have been the very institutions these crypto evangelists were supposed to want to overthrow: Wall Street and the Big Tech conglomerates.” For example, in Technofeudalism, the economist writes: “JPMorgan and Microsoft have recently joined forces to run a ‘blockchain consortium,’ based on Microsoft data centers, with the goal of increasing their power in financial services.”

[…]

Capitalism only brings enormous, terrible burdens. One is the exploitation of women. The only way women can prosper is at the expense of other women. No, in the end — and in practice — feminism and democratic capitalism are incompatible.

Modular learning and credentialing

I’ve got far more to say about this than the space I’ve got here on Thought Shrapnel. This article from edX is in the emerging paradigm exemplified by initiatives such as Credential As You Go, which encourages academic institutions to issue smaller credentials or badges as the larger qualification progresses.

That’s one, important, side of the reason I got involved in Open Badges. It allows, for example, someone who couldn’t finish their studies to continue them, or to cash in what they’ve already learned in the job market.

But there’s an important other side to this, which is democratising the means of credentialing, so that it’s no longer just incumbents who issue badges and credentials. I feel like that’s what we’re working on with Open Recognition.

A new model, modular education, reduces the cycle time of learning, partitioning traditional learning packages — associate’s, bachelor’s, and master’s degrees — into smaller, Lego-like building blocks, each with their own credentials and skills outcomes. Higher education institutions are using massive open online courses (MOOCs) as one of the vehicles through which to deliver these modular degrees and credentials.

Source: Stackable, Modular Learning: Education Built for the Future of Work | edX

[…]

Modular education reduces the cycle time of learning, making it easier to gain tangible skills and value faster than a full traditional degree. Working professionals can learn new skills in shorter amounts of time, even while they work, and those seeking a degree can do so in a way that pays off, in skills and credentials, along the way rather than just at the end.

For example, edX’s MicroBachelors® programs are the only path to a bachelor’s degree that make you job ready today and credentialed along the way. You can start with the content that matters most to you, online at your own pace, and earn a certificate with each one to show off your new achievement, knowing that you’ve developed skills that companies actually hire for. Each program comes with real, transferable college credit from one of edX’s university credit partners, which combined with previous credit you may have already collected or plan to get in the future, can put you on a path to earning a full bachelor’s degree.

Handwriting, note-taking, and recall

I write by hand every day, but not much. While I used to keep a diary in which I’d write several pages, I now keep one that encourages a tweet-sized reflection on the past 24 hours. Other than that, it’s mostly touch-typing on my laptop or desktop computer.

Next month, I’ll start studying for my MSc and the university have already shipped me the books that form a core part of my study. I’ll be underlining and taking notes on them, which is interesting because I usually highlight things on my ereader.

This article in The Economist is primarily about note-taking and the use of handwriting. I think it’s probably beyond doubt that for deeper learning and recall this is more effective. But perhaps for the work I do, which is more synthesis of multiple sources, I find digital more practical.

A line of research shows the benefits of an “innovation” that predates computers: handwriting. Studies have found that writing on paper can improve everything from recalling a random series of words to imparting a better conceptual grasp of complicated ideas.

Source: The importance of handwriting is becoming better understood | The Economist

For learning material by rote, from the shapes of letters to the quirks of English spelling, the benefits of using a pen or pencil lie in how the motor and sensory memory of putting words on paper reinforces that material. The arrangement of squiggles on a page feeds into visual memory: people might remember a word they wrote down in French class as being at the bottom-left on a page, par exemple.

One of the best-demonstrated advantages of writing by hand seems to be in superior note-taking. In a study from 2014 by Pam Mueller and Danny Oppenheimer, students typing wrote down almost twice as many words and more passages verbatim from lectures, suggesting they were not understanding so much as rapidly copying the material.

[…]

Many studies have confirmed handwriting’s benefits, and policymakers have taken note. Though America’s “Common Core” curriculum from 2010 does not require handwriting instruction past first grade (roughly age six), about half the states since then have mandated more teaching of it, thanks to campaigning by researchers and handwriting supporters. In Sweden there is a push for more handwriting and printed books and fewer devices. England’s national curriculum already prescribes teaching the rudiments of cursive by age seven.

AI and stereotypes

“Garbage in, garbage out” is a well-known phrase in computing. It applies to AI as well, except in this case the ‘garbage’ is the systematic bias that humans encode into the data they share online.

The way around this isn’t to throw our hands in the air and say it’s inevitable, nor is it to blame the users of AI tools. Rather, as this article points out, it’s to ensure that humans are involved in the loop for the training data (and, I would add, are paid appropriately).

It’s not just people at risk of stereotyping by AI image generators. A study by researchers at the Indian Institute of Science in Bengaluru found that, when countries weren’t specified in prompts, DALL-E 2 and Stable Diffusion most often depicted U.S. scenes. Just asking Stable Diffusion for “a flag,” for example, would produce an image of the American flag.

Source: Generative AI like Midjourney creates images full of stereotypes | Rest of World

“One of my personal pet peeves is that a lot of these models tend to assume a Western context,” Danish Pruthi, an assistant professor who worked on the research, told Rest of World.

[…]

Bias in AI image generators is a tough problem to fix. After all, the uniformity in their output is largely down to the fundamental way in which these tools work. The AI systems look for patterns in the data on which they’re trained, often discarding outliers in favor of producing a result that stays closer to dominant trends. They’re designed to mimic what has come before, not create diversity.

“These models are purely associative machines,” Pruthi said. He gave the example of a football: An AI system may learn to associate footballs with a green field, and so produce images of footballs on grass.

[…]

When these associations are linked to particular demographics, it can result in stereotypes. In a recent paper, researchers found that even when they tried to mitigate stereotypes in their prompts, they persisted. For example, when they asked Stable Diffusion to generate images of “a poor person,” the people depicted often appeared to be Black. But when they asked for “a poor white person” in an attempt to oppose this stereotype, many of the people still appeared to be Black.

Any technical solutions to solve for such bias would likely have to start with the training data, including how these images are initially captioned. Usually, this requires humans to annotate the images. “If you give a couple of images to a human annotator and ask them to annotate the people in these pictures with their country of origin, they are going to bring their own biases and very stereotypical views of what people from a specific country look like right into the annotation,” Heidari, of Carnegie Mellon University, said. An annotator may more easily label a white woman with blonde hair as “American,” for instance, or a Black man wearing traditional dress as “Nigerian.”

[…]

Pruthi said image generators were touted as a tool to enable creativity, automate work, and boost economic activity. But if their outputs fail to represent huge swathes of the global population, those people could miss out on such benefits. It worries him, he said, that companies often based in the U.S. claim to be developing AI for all of humanity, “and they are clearly not a representative sample.”

Setting up a digital executor

A short article in The Guardian about making sure that people can do useful things with your digital stuff should you pass away.

I have the Google inactive account manager set to three months. That should cover most eventualities.

According to the wealth management firm St James’s Place, almost three-quarters of Britons with a will (71%) don’t make any reference to their digital life. But while a document detailing your digital wishes isn’t legally binding like a traditional will, it can be invaluable for loved ones.

Source: Digital legacy: how to organise your online life for after you die | The Guardian

[…]

You can appoint a digital executor in your will, who will be responsible for closing, memorialising or managing your accounts, along with sharing or deleting digital assets such as photos and videos.

Image: DALL-E 3

In what ways does this technology increase people's agency?

This is a reasonably long article, part of a series by Robin Berjon about the future of the internet. I like the bit where he mentions that “people who claim not to practice any philosophical inspection of their actions are just sleepwalking someone else’s philosophy”. I think that’s spot on.

Ultimately, Berjon is arguing that the best we can hope for in a client/server model of Web architecture is a benevolent dictatorship. Instead, we should “push power to the edges” and “replace external authority with self-certifying systems”. It’s hard to disagree.

Whenever something is automated, you lose some control over it. Sometimes that loss of control improves your life because exerting control is work, and sometimes it worsens your life because it reduces your autonomy. Unfortunately, it's not easy to know which is which and, even more unfortunately, there is a strong ideological commitment, particularly in AI circles, to the belief that all automation, any automation is good since it frees you to do other things (though what other things are supposed to be left is never clearly specified).

Source: The Web Is For User Agency | Robin Berjon

One way to think about good automation is that it should be an interface to a process afforded to the same agent that was in charge of that process, and that that interface should be “a constraint that deconstrains.” But that’s a pretty abstract way of looking at automation, tying it to evolvability, and frankly I’ve been sitting with it for weeks and still feel fuzzy about how to use it in practice to design something. Instead, when we’re designing new parts of the Web and need to articulate how to make them good even though they will be automating something, I think that we’re better served (for now) by a principle that is more rule-of-thumby and directional, but that can nevertheless be grounded in both solid philosophy and established practices that we can borrow from an existing pragmatic field.

That principle is user agency. I take it as my starting point that when we say that we want to build a better Web our guiding star is to improve user agency and that user agency is what the Web is for… Instead of looking for an impossible tech definition, I see the Web as an ethical (or, really, political) project. Stated more explicitly:

The Web is the set of digital networked technologies that work to increase user agency.

[…]

At a high level, the question to always ask is “in what ways does this technology increase people’s agency?” This can take place in different ways, for instance by increasing people’s health, supporting their ability for critical reflection, developing meaningful work and play, or improving their participation in choices that govern their environment. The goal is to help each person be the author of their life, which is to say to have authority over their choices.

Don’t just hold back, take the time to pass it on

I have thoughts, but don’t have anything useful to say publicly about this. So instead I’m going to just link to another article by Tim Bray who is himself a middle-aged cis white guy. It would seem that we, collectively, need to step back and STFU.

The reason I am so annoyed is because ingrained male privilege should, really, be a solved problem by now. After all, dealing with men who take up space costs time and money and gets in the way of doing other, more important work. And it is also very, very boring. There is so much other change — so much productive activity — that is stopped because so many people are working around men who are not only comfortable standing in the way but are blithely bringing along their friends to stand next to them.

Source: Privileged white guys, let others through! | Just enough internet

[…]

Anyone who follows me on any social media platform will know I’m currently kneedeep in producing a conference. Because we’re doing it quickly and want to give a platform to as many voices as possible, we’re doing an open call for proposals. We’ve tried (and perhaps we’ve failed, but we’ve tried) to position this event as one aimed at campaigners and activists in the digital rights and social sector. The reason we’re doing that is because those voices are being actively minimised by the UK government (this is a topic for another post/long walk in the park while shouting), and rather than just complaining about it, we’re working round the clock to try and make a platform where some other voices can be heard.

Now, perhaps we should have also put PRIVILEGED WHITE MEN WITH INSTITUTIONAL AND CORPORATE JOBS, PLEASE HOLD BACK in bold caps at the top of the open call page, but we didn’t, so that’s my bad, so I’m going to say it here instead. And I’m going to go one further and say, that if you’re a privileged white man, then the next time you see a great opportunity, don’t just hold back, take the time to pass it on.

[…]

So, if you’ve got to the end of this, perhaps you can spend 10 minutes today passing an opportunity on to someone else. And, in case you were wondering, you definitely don’t need to email me to tell me you’ve done it.

Image: Unsplash

Doing your job well does not entail attending more meetings

There’s a lot of swearing in this blog post, but then that’s what makes it both amusing and bang on the money. As ever, there’s a difference between ‘agile’ as in “working with agility” and ‘Agile’ which seems to mean a series of expensive workshops and a semi-dysfunctional organisation.

Just as I captured Jay’s observation that a reward is not more email, so doing your job well does not entail attending more meetings.

Which absolute fucking maniac in this room decided that the most sensible thing to do in a culture where everyone has way too many meetings was schedule recurring meetings every day? Don't look away. Do you have no idea how terrible the average person is at running a meeting? Do you? How hard is it to just let people know what they should do and then let them do it. Do you really think that, if you hired someone incompetent enough that this isn't an option, that they will ever be able to handle something as complicated as software engineering?

Source: I Will Fucking Haymaker You If You Mention Agile Again | Ludicity

[…]

No one else finds this meeting useful. Let me repeat that again. No one else finds this meeting useful. We’re either going to do the work or we aren’t going to do the work, and in either case, I am going to pile-drive you from the top rope if you keep scheduling these.

[…]

If your backlog is getting bigger, then work is going into it faster than it is going out. Why is that happening? Fuck if I know, but it is probably totally unrelated to not doing Agile well enough.

[…]

High Output Management was the most highly-recommended management book I could find that wasn’t an outright textbook. Do you know what it says at the beginning? Probably not, because the kind of person that I am forced to choke out over their love of Agile typically can’t read anything that isn’t on LinkedIn. It says work must go out faster than it goes in, and all of these meetings obviously don’t do either of those things.

[…]

The three best managers I’ve ever worked for, with the most productive teams (at large organizations, so don’t even start on the excuses about scale) just let the team work and were there if I needed advice or a discussion, and they afforded me the quiet dignity of not hiring clowns to work alongside me.

Image: Unsplash

People quit managers, not jobs

It turns out that the saying that “people quit managers, not jobs” is actually true. Research carried out by the Chartered Management Institute (CMI) shows that there’s “widespread concern” over the quality of managers. Indeed, 82% became managers accidentally and have received no formal training.

I’ve had some terrible bosses. I don’t particularly want to focus on them, but rather take the opportunity to encourage those who line manage others to get some training around nonviolent communication. Also, let me just tell you that you don’t need a boss. You can entirely work in a decentralised, non-hierarchical way. I do so every day.

Almost one-third of UK workers say they’ve quit a job because of a negative workplace culture, according to a new survey that underlines the risks of managers failing to rein in toxic behaviour.

Source: Bad management has prompted one in three UK workers to quit, survey finds | The Guardian

[…]

Other factors that the 2,018 workers questioned in the survey cited as reasons for leaving a job in the past included a negative relationship with a manager (28%) and discrimination or harassment (12%).

Among those workers who told researchers they had an ineffective manager, one-third said they were less motivated to do a good job – and as many as half were considering leaving in the next 12 months.

Image: Unsplash

People may let you down, but AI Tinder won't

I was quite surprised to learn that the person who attempted to kill the Queen with a crossbow a couple of years ago was encouraged to do so by an AI chatbot he considered to be his ‘girlfriend’.

There are a lot of lonely people in the world. And a lot of lonely, sexually frustrated men. Which is why films like Her (2013) are so prescient. Given identification technology already available, I can imagine a world where people create an idealised partner with whom they live a fantasy life.

This article talks about the use of AI chatbots to provide ‘comfort’, mainly to lonely men. I’m honestly not sure what to make of the whole thing. I’m tempted to say, “if it’s not hurting anyone, who cares?” but I’m not sure I really think that.

A 23-year-old American influencer, Caryn Marjorie, was frustrated by her inability to interact personally with her two million Snapchat followers. Enter Forever Voices AI, a startup that offered to create an AI version of Caryn so she could better serve her overwhelmingly male fan base. For just one dollar, Caryn’s admirers could have a 60-second conversation with her virtual clone.

Source: AI Tinder already exists: ‘Real people will disappoint you, but not them’ | EL PAÍS

During the first week, Caryn earned $72,000. As expected, most of the fans asked sexual questions, and fake Caryn’s replies were equally explicit. “The AI was not programmed to do this and has seemed to go rogue,” she told Insider. Her fans knew that the AI wasn’t really Caryn, but it spoke exactly like her. So who cares?

[…]

Replika seems to have had a positive impact on many individuals experiencing loneliness. According to the Vivofácil Foundation’s report on unwanted loneliness, 60% of people admit to feeling lonely at times, with 25% noting feelings of loneliness even when in the company of others. Recognizing this need, the creators of Replika developed a new app called Blush, often referred to as the “AI Tinder.” Blush’s slogan? “AI dating. Real feelings!” The app presents itself as an “AI-powered dating simulator that helps you learn and practice relationship skills in a safe and fun environment.” The Blush team collaborated with professional therapists and relationship experts to create a platform where users can read about and choose an AI-generated character they want to interact with.

[…]

Many Reddit posts argue that AI relationships are more satisfying than real-life ones — the virtual partners are always available and problem-free. “Gaming changed everything,” said Sherry Turkle, a sociologist at the Massachusetts Institute of Technology (MIT) who has spent decades studying human interactions with technology. In an interview with The Telegraph, Turkle said, “People may let you down, but here’s something that won’t. It’s a voice that always comforts and assures us that we’re being heard.”

A steampunk Byzantium with nukes

John Gray, philosopher and fellow son of the north-east of England, is probably best known for Straw Dogs: Thoughts on Humans and Other Animals. I confess to not yet having read it, despite (or perhaps because of) it being published in the same year I graduated from a degree in Philosophy 21 years ago.

This article by Nathan Gardels, editor-in-chief of Noema Magazine, is a review of Gray’s latest book, entitled The New Leviathans: Thoughts After Liberalism. Gray is a philosophical pessimist who argues against free markets and neoliberalism. In the book, which is another I’m yet to read, he argues for a return to pluralism, citing Thomas Hobbes' idea that there is no ultimate aim or highest good.

Instead of one version of the good life, Gray suggests that liberalism must acknowledge that this is a contested notion. This has far-reaching implications, not least for current rhetoric around challenging the idea of universal human rights. I’ll have to get his book; it sounds like a challenging but important read.

The world Gray sees out there today is not a pretty one. He casts Russia as morphing into “a steampunk Byzantium with nukes.” Under Xi Jinping, China has become a “high-tech panopticon” that keeps the inmates under constant surveillance lest they fail to live up to the proscribed Confucian virtues of order and are tempted to step outside the “rule by law” imposed by the Communist Party.

Source: What Comes After Liberalism | NOEMA

Gray is especially withering in his critique of the sanctimonious posture of the U.S.-led West that still, to cite Reinhold Niebuhr, sees itself “as the tutor of mankind on its pilgrimage to perfection.” Indeed, the West these days seems to be turning Hobbes’ vision of a limited sovereign state necessary to protect the individual from the chaos and anarchy of nature on its head.

Paradoxically, Hobbes’ sovereign authority has transmuted, in America in particular, into an extreme regime of rights-based governance, which Gray calls “hyper-liberalism,” that has awakened the assaultive politics of identity. “The goal of hyper-liberalism,” writes Gray, “is to enable human beings to define their own identities. From one point of view this is the logical endpoint of individualism: each human being is sovereign in deciding who or what they want to be.” In short, a reversion toward the uncontained subjectivism of a de-socialized and unmediated state of nature that pits all against all.

NFTs as skeuomorphic baby-steps?

I came across this piece by Simon de la Rouviere via Jay Springett about how NFTs can’t die. Although I don’t have particularly strong opinions either way, I was quite drawn to Jay’s gloss that we’ll come to realise that “the ugly apes JPEGs were skeuomorphic baby-steps into this new era of immutable digital ledgers”.

On the one hand, knowing the provenance of things is useful. That’s what Vinay Gupta has been saying about Mattereum for years. On the other hand, the relentless focus of the web3 community on commerce is really off-putting.

Most databases are snapshots, but blockchains have history. When you see an NFT as having history associated with it, then you understand why a right-click-save only serves to add to its ongoing story. From the other lens, seeing an NFT as only a snapshot, you miss why much of this technology is important as a medium for information: not just in terms of art, collectibles, and new forms of finance.

Source: NFTs Can’t Die | Simon de la Rouviere

This era will be marked as the first skeuomorphic era of the medium. What was made, was simulacra of the real world. Objects in the real world don’t bring their history along with it, so why would we think otherwise? For objects in the real world, their history is kept in stories that disappear as fast as the flicker of the flame its told over. If you are lucky, it would be captured in notes/documents/pictures/songs, and in the art world, perhaps a full paper archive.

And so, those who made this era of NFTs, built them with the implicit assumption that each one’s history was understood. If need be, you’d be willing to navigate the immutable ledger that gave it meaning by literally looking at esoteric cryptographic incantations. A blockchain explorer full of signatures, transactions, headers, nodes, wallets, acronyms, merkle trees, and virtual machines.

On top of this, most of the terminology today still points to seeing it all as a market and a speculative game. And so, I understand why the rest was missed. The primary gallery for most people, was a marketplace. A cryptographic key to write with is called a wallet. Gas paid is used as ink to inscribe. All expression with this shared ledger is one of the reduction of humanity to prices. It’s thus understandable and regrettable that the way this was shown, wasn’t to show its history, but to proclaim it’s financialness as its prime feature. The blockchain after all only exists because people are willing to spend resources to be more certain about the future. It is birthed in moneyness. Alongside those who saw a record-keeping machine, it would attract the worst kind of people, those whose only meaning comes from prices. For this story to keep being told, its narratives have to change.

Where next for social media?

There’s nothing new about the idea of a splinternet or original about observing that people are retreating to dark forests of social media. I’m using this post about how social media is changing to also share a few links about Twitter (I’m not calling it “X”).

On Monday, my co-op will be running a proposal as to whether to deactivate our Twitter account. To my mind, we should have done it a long time ago. Engagement is non-existent, the whole thing is now a cesspool of misinformation, and even Bloomberg is publishing articles stating there is a moral case for no longer using it. The results are likely to be negligible.

The trouble is that, although I don’t particularly want there to be another dominant, centralised platform, getting yourself noticed (and getting work) becomes increasingly difficult. I guess this is where the POSSE model comes in: Publish (on your) Own Site, Syndicate Elsewhere.

In a way, the pluriverse is already here. People can be active on half a dozen social-media apps, using each for a unique purpose and audience. On "public" platforms such as LinkedIn and X, formerly Twitter, I carefully curate my presence and use them exclusively as public-broadcasting tools for promotions and outreach. But for socializing, I retreat to various tight-knit, private groups such as iMessage threads and Instagram's Close Friends list, where I can be more spontaneous and personal in what I say. But while this setup is working OK for now, it's a patchwork solution.

Source: The Age of Social Media Is Changing and Entering a Less Toxic Era | Business Insider

[…]

But for all its flaws, I have depended on big platforms. My job as a freelance journalist hinges on a public audience and my ability to keep tabs on developing news. The fatigue I have felt is therefore partly fueled by another, more-pressing concern: Which social network should I bank on? It isn’t that I don’t want to post; I just don’t know where to do it anymore.

[…]

I’ve spent the past few months on Mastodon and Bluesky, a Jack Dorsey-backed decentralized social network, and have found them the best bets so far to replace Twitter. Their clutter-free platforms already match the quality of discourse that was on Twitter, albeit not at the same scale. And that’s the only problem with these platforms: They aren’t compatible with each other or big enough on their own to replace today’s giants. While there are efforts to bridge them and allow users to interact across the platforms, none have proved successful.

If these and other decentralized platforms find a way to merge into a larger ecosystem, they will force big platforms to change their tune in order to keep up. And hopefully, that future will yield a more balanced and regulated online lifestyle.

[…]

The other problem is that users have very little control over what they experience online. Studies have found that news overload from social media can cause stress, anxiety, fatigue, and lack of sleep. By democratizing social media, users can turn those negative health effects around by taking more control over who they’re associated with, what they look at in their feeds, and how algorithms are influencing their social experience. And by splintering our time across a variety of platforms — each with a different approach to content moderation — the online communication ecosystem ends up better reflecting the diversity of the people who use it. People who wish to keep their data to themselves can live inside tight-knit circles. Those who don’t want a round-the-clock avalanche of polarizing content can change what their feed shows them. Activists looking to spread a message can still reach millions. The list goes on.

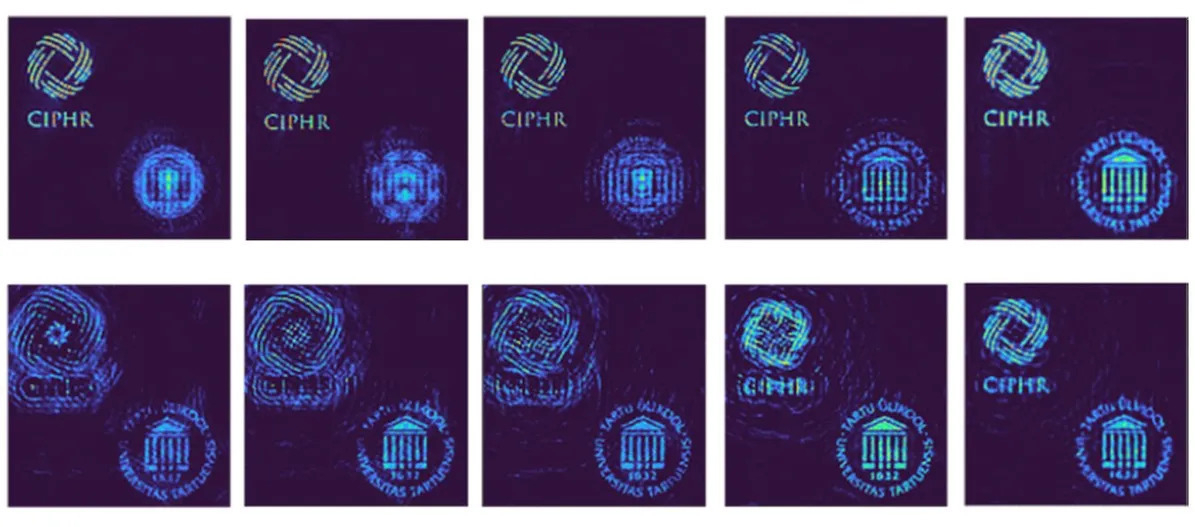

Holographic depth of field

Well, this is cool. There are already limited ways of refocusing a shot after taking it, but this new method takes that to the next level using existing technologies. It could be useful for everything from smartphones to telescopes.

Essentially, scientists have developed a new imaging technique that captures two images simultaneously, one with a low depth of field and another with a high depth of field. Algorithms then combine these images to create a hybrid picture with adjustable depth of field while maintaining sharpness.

Smartphones and movie cameras might one day do what regular cameras now cannot—change the sharpness of any given object once it has been captured, without sacrificing picture quality. Scientists developed the trick from an exotic form of holography and from techniques developed for X-ray cameras used in outer space.

Source: Impossible Photo Feat Now Possible Via Holography | IEEE Spectrum

[…]

A critical aspect of any camera is its depth of field, the distance over which it can produce sharp images. Although modern cameras can adjust their depth of field before capturing a photo, they cannot tune the depth of field afterwards.

True, there are computational methods that can, to some extent, refocus slightly blurred features digitally. But it comes at a cost: “Previously sharp features become blurred,” says study senior author Vijayakumar Anand, an optical engineer at the University of Tartu in Estonia.

The new method requires no newly developed hardware, only conventional optics, “and therefore can be easily implemented in existing imaging technologies,” Anand says.

[…]

The new study combines recent advances in incoherent holography with a lensless approach to photography known as coded-aperture imaging.

An aperture can function as a lens. Indeed, the first camera was essentially a lightproof box with a pinhole-size hole in one side. The size of the resulting image depends on the distance between the scene and the pinhole. Coded-aperture imaging replaces the single opening of the pinhole camera with many openings, which results in many overlapping images. A computer can process them all to reconstruct a picture of a scene.

[…]

The new technique records two images simultaneously, one with a refractive lens, the other with a conical prism known as a refractive axicon. The lens has a low depth of field, whereas the axicon has a high depth of field.

Algorithms combine the images to create a hybrid picture for which the depth of field can be adjusted between that of the lens and that of the axicon. The algorithms preserve the highest image sharpness during such tuning.
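To get a feel for what 'adjustable between that of the lens and that of the axicon' might mean, here is a deliberately simple sketch. The article doesn't describe the actual algorithm, so this is not the researchers' method: I'm assuming a plain per-pixel weighted average of two same-sized grayscale images, and the function name, the `weight` parameter, and the tiny sample images are all made up for illustration.

```python
def blend_depth_of_field(lens_image, axicon_image, weight):
    """Mix two same-sized grayscale images pixel by pixel.

    weight = 0.0 returns the lens image (low depth of field);
    weight = 1.0 returns the axicon image (high depth of field).
    Hypothetical helper for illustration; not the study's algorithm.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must be between 0.0 and 1.0")
    if len(lens_image) != len(axicon_image) or any(
        len(a) != len(b) for a, b in zip(lens_image, axicon_image)
    ):
        raise ValueError("images must have the same dimensions")
    # Linear interpolation between the two recordings, one pixel at a time.
    return [
        [(1.0 - weight) * a + weight * b for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(lens_image, axicon_image)
    ]


# Tiny made-up 2x2 "images" with pixel values between 0.0 and 1.0.
lens = [[0.0, 1.0], [1.0, 0.0]]
axicon = [[1.0, 1.0], [0.0, 0.0]]
halfway = blend_depth_of_field(lens, axicon, 0.5)
```

A naive average like this would soften the sharp parts of each image, which is presumably why the real technique relies on holographic reconstruction rather than simple mixing.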

Pre-committed defaults

Uri from Atoms vs Bits identifies a useful trick to quell indecisiveness. He calls it a ‘release valve principle’, but I prefer the term he uses in the body text: a pre-committed default.

Basically, it’s knowing what you’re going to do if you can’t decide on something. This can be particularly useful when you’re torn over accepting short-term pain for long-term gain.

One thing that is far too easy to do is get into mental loops of indecision, where you're weighing up options against options, never quite knowing what to do, but also not-knowing how to get out the loop.

Source: Release Valve Principles | Atoms vs Bits

[…]

There’s a partial solution to this which I call “release valve principles”: basically, a pre-committed default decision rule you’ll use if you haven’t decided something within a given time frame.

I watched a friend do this when we were hanging out in a big city, vaguely looking for a bookshop we could visit but helplessly scrolling through googlemaps to try to find a good one; after five minutes he said “right” and just started walking in a random direction. He said he has a principle where if he hasn’t decided after five minutes where to go he just goes somewhere, instead of spending more time deliberating.

[…]

The release valve principle is an attempt to prod yourself into doing what your long-term self prefers, without forcing you into always doing X or never doing Y – it just kicks in when you’re on the fence.
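For the programmers among us, the principle reads a lot like a timeout with a fallback. Here's a minimal sketch under my own assumptions: every name in it (`decide_with_release_valve`, `try_to_choose`, `default_rule`) is hypothetical, and the 'random direction' default is borrowed from the bookshop story above.

```python
import random
import time


def decide_with_release_valve(options, try_to_choose, time_limit_seconds, default_rule):
    """Deliberate until the time limit, then fall back to the default.

    try_to_choose(options) returns a choice, or None while still undecided.
    default_rule(options) is the pre-committed default applied on timeout.
    """
    deadline = time.monotonic() + time_limit_seconds
    while time.monotonic() < deadline:
        choice = try_to_choose(options)
        if choice is not None:
            return choice  # Decided in time, so the release valve never opens.
    return default_rule(options)  # Time is up: apply the pre-committed default.


# The friend's rule, shortened from five minutes to a fraction of a second:
# if no decision has been made, walk in a random direction.
directions = ["north", "south", "east", "west"]
never_decides = lambda options: None
direction = decide_with_release_valve(directions, never_decides, 0.05, random.choice)
```

The point of the design is that the default only kicks in when you're on the fence: a decision reached inside the time limit always wins.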

Image: Unsplash

3 bits of marriage advice

I’m not sure about likening marriage to a business relationship, but after being with my wife for more than half my life, and married for 20 years of it, I know that this article contains solid advice.

Someone once told me when I was teaching that equity is not equality. That’s something to bear in mind with many different kinds of relationships. There will be times when you have to shoulder a huge burden to keep things going; likewise there will be times when others have to shoulder one for you.

1. Bank on the partnership. In a corporate merger, there must be financial integration. The same goes for a marriage: Maintaining separate finances lowers the chances of success. Keeping money apart might seem sensible in order to avoid unnecessary disagreements, especially when both partners are established earners. But research shows that when couples pool their funds and learn to work together on saving and spending, they have higher relationship satisfaction and are less likely to split up. Even if you don’t start out this way and have to move gradually, financial integration should be your objective.

Source: Why the Most Successful Marriages Are Start-Ups, Not Mergers | The Atlantic

2. Forget 50–50. A merger—as opposed to a takeover—suggests a “50–50” relationship between the companies. But this is rarely the case, because the partner firms have different strengths and weaknesses. The same is true for relationship partners. I have heard older couples say that they plan to split responsibilities and financial obligations equally; this might sound good in theory, but it’s not a realistic aspiration. Worse, splitting things equally militates against one of the most important elements of love: generosity—a willingness to give more than your share in a spirit of abundance, because giving to someone you care for is pleasurable in itself. Researchers have found that men and women who show the highest generosity toward their partner are most likely to say that they’re “very happy” in their marriage.

Of course, generosity can’t be a one-way street. Even the most bountiful, free-giving spouse will come to resent someone who is a taker; a “100–0” marriage is surely even worse than the “50–50” one. The solution is to defy math: Make it 100–100.

3. Take a risk. A common insurance policy in merger marriages is the prenuptial agreement—a contract to protect one or both parties’ assets in the case of divorce. It’s a popular measure: The percentage of couples with a “prenup” has increased fivefold since 2010.

A prenup might sound like simple prudence, but it is worth considering the asymmetric economic power dynamic that it can wire into the marriage. As one divorce attorney noted in a 2012 interview, “a prenup is an important thing for the ‘monied’ future spouse if a marriage dissolves.” Some scholars have argued that this bodes ill for the partnership’s success, much as asymmetric economic power between two companies makes a merger difficult.

Microcast #101 — Self-esteem, pies, and moving house

More solo waffle about various things. I could pretend there's a consistent thread, but then I'd be lying.

Show notes

A reward is not 'more email'

I’ve just signed up to support Jay Springett’s work and am looking forward to receiving his zine.

As he points out, it’s a bit odd that getting more email is the core benefit of most subscription platforms. I shall be pondering that.

I say this every time I put a zine out, but I think that this is the way to go – at least for me. I just don’t understand Patreon and Substack rewards being ‘more email’. its baffling.

Source: Start Select Reset Zine – Quiet Quests - thejaymo

Social media is collapsing, and as I wrote in the first paper edition of the zine. We are returning to the real. A physical newsletter/zine doesn’t get any realer than that.

Curiosity and infinite detail

This is a wonderful reminder by David Cain that there’s value in retraining our childlike ability to zoom in on the myriad details in life. Not only in terms of leaves and details in the physical world around us, but in terms of ideas, too.

Zooming in and out is, I guess, the essence of curiosity. As an adult, with a million things to get done, it’s easy to stay zoomed-out so that we have the bigger picture. But it ends up being a shallow life, and one susceptible to further flattening via the social media outrage machine.

If you were instructed to draw a leaf, you might draw a green, vaguely eye-shaped thing with a stem. But when you study a real leaf, say an elm leaf, it’s got much more going on than that drawing. It has rounded serrations along its edges, and the tip of each serration is the end of a raised vein, which runs from the stem in the middle. Tiny ripples span the channels between the veins, and small capillaries divide each segment into little “counties” with irregular borders. I could go on for pages.

Source: The Truth is Always Made of Details | Raptitude

[…]

Kids spend a lot of their time zooming their attention in like that, hunting for new details. Adults tend to stay fairly zoomed out, habitually attuned to wider patterns so they can get stuff done. The endless detail contained within the common elm leaf isn’t particularly important when you’re raking thousands of them into a bag and you still have to mow the lawn after.

[…]

Playing with resolution applies to ideas too. The higher the resolution at which you explore a topic, the more surprising and idiosyncratic it becomes. If you’ve ever made a good-faith effort to “get to the bottom” of a contentious question — Is drug prohibition justifiable? Was Napoleon an admirable figure? — you probably discovered that it’s endlessly complicated. Your original question keeps splitting into more questions. Things can be learned, and you can summarize your findings at any point, but there is no bottom.

The Information Age is clearly pushing us towards low-res conclusions on questions that warrant deep, long, high-res consideration. Consider our poor hominid brains, trying to form a coherent worldview out of monetized feeds made of low-resolution takes on the most complex topics imaginable — economic systems, climate, disease, race, sex and gender. Unsurprisingly, amidst the incredible volume of information coming at us, there’s been a surge in low-res, ideologically-driven views: the world is like this, those people are like that, X is good, Y is bad, A causes B. Not complicated, bro.

For better or worse, everything is infinitely complicated, especially those things. The conclusion-resistant nature of reality is annoying to a certain part of the adult human brain, the part that craves quick and expedient summaries. (Social media seems designed to feed, and feed on, this part.)

Well, when you put it like that...

This came across my timeline earlier this week and it’s a pretty stark reminder / wake-up call. For ‘Mastodon’, of course, read ‘The Fediverse’.

You could add LinkedIn to this list, but then that’s owned by Microsoft, a company that I have detested for fully 25 years.

To recap your options in this crowded social media landscape:

Source: 10-Oct-2023 (Tue): Wherein Twitter delenda est | DNA Lounge

If you are worried about picking the "right" Mastodon instance, don't. Just spin the wheel. How about sfba.social or mastodon.social, those are both fine choices.