More like Grammarly than Hal 9000

I’m currently studying towards an MSc in Systems Thinking, and earlier this week I created a GPT to help me. I fed in all of the course materials, being careful to check the box saying that OpenAI couldn’t use them to improve their models.

It’s not perfect, but it’s really useful. Given the extra context, ChatGPT can not only help me understand key concepts on the course, but also help me relate them more closely to the course as a whole.

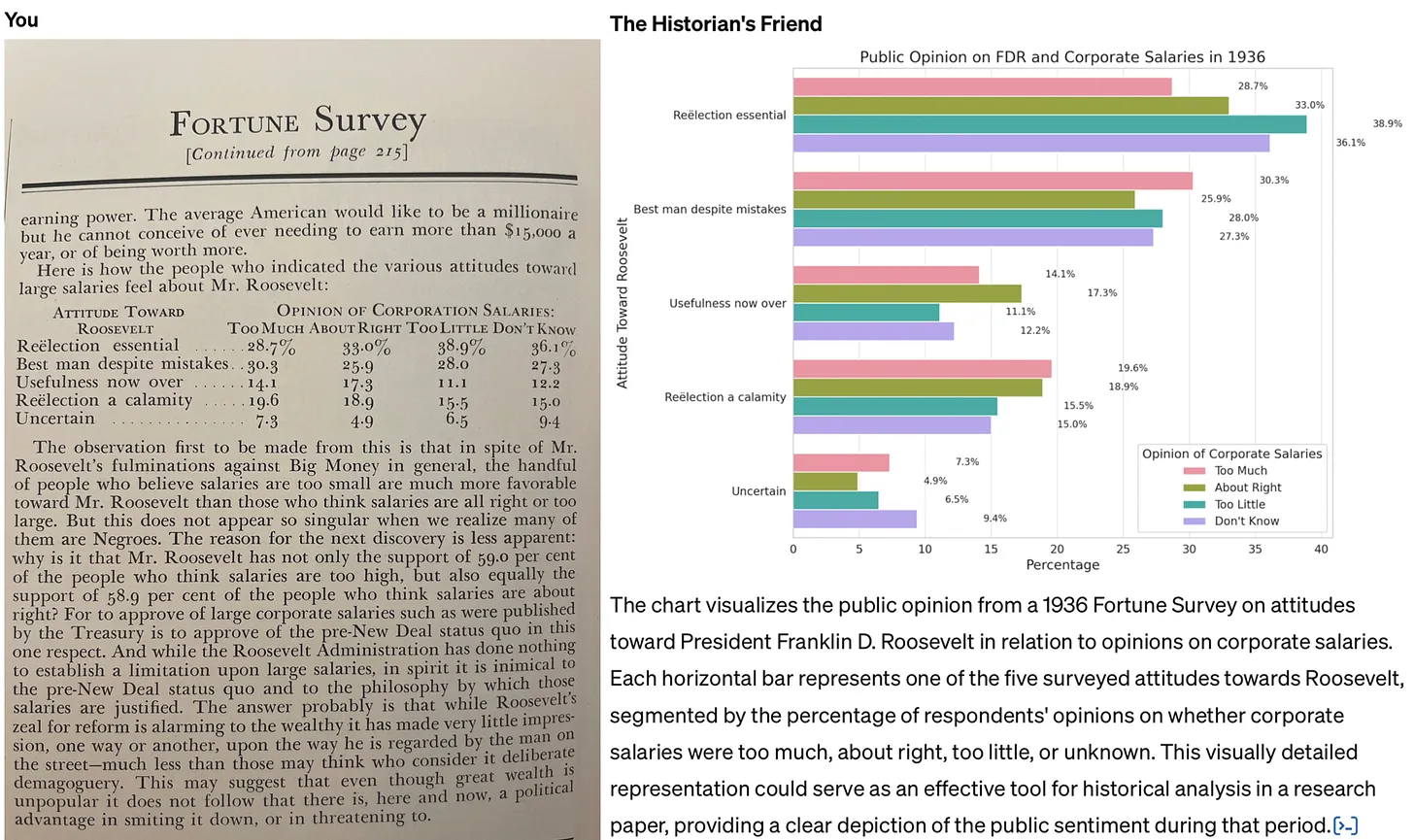

A tool like this would have been really useful on the MA in Modern History I took 20 years ago. Back then, I was in the archives reading reports and working with primary sources, such as minutes from the meetings of Victorians discussing educational policy. Being able to have an LLM do everything from explaining things in more detail, to guessing illegible words, to (as below) creating charts from data would have been super useful.

The key thing is to avoid following the path of least resistance when it comes to thinking about generative AI. I’m referring to the tendency to see it primarily as a tool used to cheat (whether by students generating essays for their classes, or professionals automating their grading, research, or writing). Not only is this use case of AI unethical: the work just isn’t very good. In a recent post to his Substack, John Warner experimented with creating a custom GPT that was asked to emulate his columns for the Chicago Tribune. He reached the same conclusion.

Source: How to use generative AI for historical research | Res Obscura

[…]

The job of historians and other professional researchers and writers, it seems to me, is not to assume the worst, but to work to demonstrate clear pathways for more constructive uses of these tools. For this reason, it’s also important to be clear about the limitations of AI — and to understand that these limits are, in many cases, actually a good thing, because they allow us to adapt to the coming changes incrementally. Warner faults his custom model for outputting a version of his newspaper column filled with cliché and schmaltz. But he never tests whether a custom GPT with more limited aspirations could help writers avoid such pitfalls in their own writing. This is change more on the level of Grammarly than Hal 9000.

In other words: we shouldn’t fault the AI for being unable to write in a way that imitates us perfectly. That’s a good thing! Instead, it can give us critiques, suggest alternative ideas, and help us with research assistant-like tasks. Again, it’s about augmenting, not replacing.