Population ethics

Will MacAskill is an Oxford philosopher. He’s an influential member of the Effective Altruism movement and has a view of the world he calls ‘longtermism’. I don’t know him, and I haven’t read his book, but I have done some ethics as part of my Philosophy degree.

As a parent, I find this review of his most recent book pretty shocking. I’m willing to consider most ideas, but utilitarianism is the kind of thing that is super-attractive to a first-year Philosophy student but which… you grow out of?

The review goes more into depth than I can here, but human beings are not cold, calculating machines. We’re emotional people. We’re parents. And all I can say is that, well, my worldview changed a lot after I became a father.

Oxford philosophers William MacAskill and Toby Ord, both affiliated with the university’s Future of Humanity Institute, coined the word “longtermism” five years ago. Their outlook draws on utilitarian thinking about morality. According to utilitarianism—a moral theory developed by Jeremy Bentham and John Stuart Mill in the nineteenth century—we are morally required to maximize expected aggregate well-being, adding points for every moment of happiness, subtracting points for suffering, and discounting for probability. When you do this, you find that tiny chances of extinction swamp the moral mathematics. If you could save a million lives today or shave 0.0001 percent off the probability of premature human extinction—a one in a million chance of saving at least 8 trillion lives—you should do the latter, allowing a million people to die.

Source: The New Moral Mathematics | Boston Review

Now, as many have noted since its origin, utilitarianism is a radically counterintuitive moral view. It tells us that we cannot give more weight to our own interests or the interests of those we love than the interests of perfect strangers. We must sacrifice everything for the greater good. Worse, it tells us that we should do so by any effective means: if we can shave 0.0001 percent off the probability of human extinction by killing a million people, we should—so long as there are no other adverse effects.
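For anyone who wants to see the "moral mathematics" spelled out, here is a minimal sketch of the expected-value arithmetic behind the review's example. The three figures come from the quoted passage. The function name `expected_lives_saved` is my own, and reducing "expected aggregate well-being" to a simple count of lives is a simplifying assumption, not something MacAskill or the reviewer states.

```python
from fractions import Fraction

# Figures taken from the quoted review.
LIVES_SAVED_TODAY = 1_000_000
FUTURE_LIVES_AT_STAKE = 8_000_000_000_000   # "at least 8 trillion lives"
RISK_REDUCTION = Fraction(1, 1_000_000)     # 0.0001 percent, i.e. one in a million


def expected_lives_saved(probability, lives):
    """Expected value: the probability of success times the lives at stake.

    Hypothetical helper for illustration; it treats every life as one unit.
    """
    return probability * lives


# Option A: save a million people for certain.
certain = expected_lives_saved(Fraction(1), LIVES_SAVED_TODAY)

# Option B: a one-in-a-million chance of saving 8 trillion future people.
longshot = expected_lives_saved(RISK_REDUCTION, FUTURE_LIVES_AT_STAKE)

print(certain)             # 1000000
print(longshot)            # 8000000
print(longshot / certain)  # 8
```

On this way of counting, the long shot comes out eight times "better" than saving a million people who are alive today. The sum itself is trivial. What the review disputes is whether future lives should be fed into it like this at all.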

[…]

MacAskill spends a lot of time and effort asking how to benefit future people. What I’ll come back to is the moral question whether they matter in the way he thinks they do, and why. As it turns out, MacAskill’s moral revolution rests on contentious, counterintuitive claims in “population ethics.”

[…]

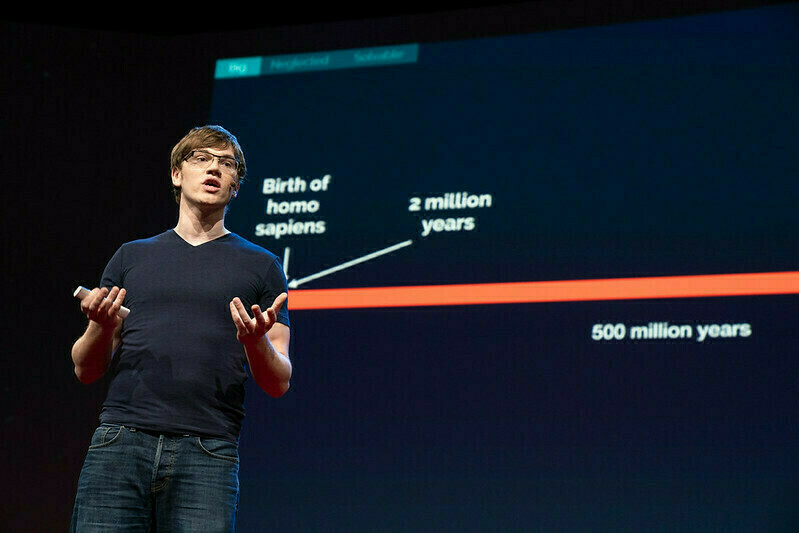

[W]hat is most alarming in his approach is how little he is alarmed. As of 2022, the ‘Bulletin of Atomic Scientists’ set the Doomsday Clock, which measures our proximity to doom, at 100 seconds to midnight, the closest it’s ever been. According to a study commissioned by MacAskill, however, even in the worst-case scenario—a nuclear war that kills 99 percent of us—society would likely survive. The future trillions would be safe. The same goes for climate change. MacAskill is upbeat about our chances of surviving seven degrees of warming or worse: “even with fifteen degrees of warming,” he contends, “the heat would not pass lethal limits for crops in most regions.”

This is shocking in two ways. First, because it conflicts with credible claims one reads elsewhere. The last time the temperature was six degrees higher than preindustrial levels was 251 million years ago, in the Permian-Triassic Extinction, the most devastating of the five great extinctions. Deserts reached almost to the Arctic and more than 90 percent of species were wiped out. According to environmental journalist Mark Lynas, who synthesized current research in ‘Our Final Warning: Six Degrees of Climate Emergency’ (2020), at six degrees of warming the oceans will become anoxic, killing most marine life, and they’ll begin to release methane hydrate, which is flammable at concentrations of five percent, creating a risk of roving firestorms. It’s not clear how we could survive this hell, let alone fifteen degrees.