Boundaries

I might pay for Noah Smith’s publication if it weren’t on Substack. While it’s a shame that I may never see the bit beyond the paywall of this article, there’s enough in the bit I can read to be thought-provoking.

He riffs off a Twitter thread by Mark Allan Bovair who points to 2015 when lots of people started to be extremely online. This changed society greatly because we started understanding the world through a political lens, both online and offline. (Although there isn’t really an ‘offline’ any more with smartphones in our pockets and wearables on our wrists.)

We like to think that our worldviews are based on facts, but they’re much more likely to be based on emotion. Given the increasingly-short social media-fueled news cycles, our tendency to favour images and video over text, and our willingness to share things that fit with our existing worldview, I think we’re in a lot of trouble, actually.

(I’d also point out in passing that the moral panic around teenagers and smartphones whipped up by commentators such as Jonathan Haidt says more about parents than it does about their kids.)

Those equating this to events or technology are missing the point. There was a shift around 2015 where the “online” world spilled over into the real world and the way we view/treat each other changed.

After 2015 things in everyday life started to go through the political lens. We started to bucket people and behaviors along the political spectrum, which was largely an online behavior pre-2015. We started judging everyone as left or right, or we walked on eggshells to avoid it.

Before that, you knew your neighbor was Republican or Democrat based on their lawn signs, but it had little bearing on your daily interactions or behaviors. And it only seemed to matter every four years for a few months. Now it’s constant and pervasive.

And pre-2015 we had phones and social media, but there was more of a boundary, and most people would “log off” most of the day. The dopamine addiction, heightened by the polarization, was much lower. Only fringe message board and twitter posters spent their days arguing online, now it’s everywhere, and there’s no real boundary.

Source: Noahpinion

Eudaimonic exercise

Audrey Watters discusses “the yips,” a term for “a form of dissociative freeze” which is bound up with trauma and can lead to performance anxiety and failure.

I have nowhere near the level of trauma that Audrey has had in her life, but I certainly recognise the use of exercise and competition (with others / with self) as a way of not addressing certain things. For example, I’ve had some kind of virus over the last few days which has made me feel weak. I was desperate to get back to the gym.

Even without the personal grief, trauma, and baggage we carry around as we age, the pandemic means that we have a lot of collective issues to process. Exercise seems benign because it doesn’t seem destructive like, for example, drug use. But anything can be an addictive behaviour — and, as Aristotle pointed out, eudaimonia does not sit at an extreme.

I can troubleshoot what went wrong at the gym on Wednesday. I can troubleshoot why I’m having trouble getting back to the pace and distance I was running before my “accident.” I have a whole list of physiological reasons why the barbell’s not moving, why my legs are moving. My age. My training. My knee. My glutes. My diet. My sleep.

But I’m starting to recognize – really recognize – some significant psychological reasons too. My trauma. My trauma. My trauma. Not just my fall, but all the trauma that I’ve experienced in the last few years, last few decades. I’ve funneled a lot of my hopes for “mental health” into the rhythms of exercise and movement, and it’s an incredibly fragile routine.

There are times when I know my body loves it. And there are times when my brain certainly does too. But there are other times, particularly when I get the yips (which, for the record don’t always look like failing at a deadlift; it can be something that happens all the time, like failing to lean forward as I run) that I’m starting to recognize now are bound up in fear and shame.

I don’t think any amount of “tracking” or “optimization” with gadgets is going to address this issue. For me or for others. Indeed, what if we’re just making things worse?

Source: Second Breakfast

Origami unicorn

Erin Kissane wrote a long essay about Threads and the Fediverse. It’s worth a read in its own right, but the thing that really stood out to me for some reason was a random-ish link to instructions for making an origami unicorn.

There is zero chance of me ever making this, but I’m passing it on in case you’re less bad at this kind of thing. For me, it’s not the folding that I find difficult, it’s the rotational 3D stuff. I even find it difficult putting the duvet cover on the right way round (much to my wife’s amusement/dismay).

This model was first designed in 2014, but this is an updated version with some “bug fixes” (legs are properly locked) and a color changed horn.

Source: Jo Nakashima

The best antidote for the tendency to caricature one’s opponent

Daniel Dennett is a philosopher who I enjoyed reading as an undergraduate studying towards a Philosophy degree. I don’t think I’ve read him since, although his book Intuition Pumps and Other Tools for Thinking is on my list of books I’d like to read.

Maria Popova has extracted four rules which Dennett cites in Intuition Pumps which originally come from game theorist Anatol Rapoport. Sounds like good advice to me, especially in this fractured, fragmented world.

How to compose a successful critical commentary:

You should attempt to re-express your target’s position so clearly, vividly, and fairly that your target says, “Thanks, I wish I’d thought of putting it that way.”

You should list any points of agreement (especially if they are not matters of general or widespread agreement).

You should mention anything you have learned from your target.

Only then are you permitted to say so much as a word of rebuttal or criticism.

Source: The Marginalian

Image: Daniel Dennett (via The New Yorker)

Endlessly clever

Ethan Marcotte takes a phrase used in passing by a friend and applies it to his own career. He makes a good point.

(I noticed that Marcotte’s logo resembles the Firefox imagery that was used while I was at Mozilla. I typed that organisation and his name into a search engine and serendipitously discovered With Great Tech Comes Great Responsibility, which I don’t think I’ve seen before?)

As tech workers, we’re expected to constantly adapt — to be, well, endlessly clever. We’re asked to learn the latest framework, the newest design tool, the latest research methodology. Our tools keep getting updated, processes become more complex, and the simple act of just doing work seems to get redefined overnight.

And crucially, we’re not the ones who get to redefine how we work. Most recently, our industry’s relentless investment in “artificial intelligence” means that every time a new Devin or Firefly or Sora announces itself, the rest of us have to ask how we’ll adapt this time.

Dunno. Maybe it’s time we step out of that negotiation cycle, and start deciding what we want our work to look like.

Source: Ethan Marcotte

Image: Daniele Franchi

When should you replace running shoes?

John Sutton knows more about this area than I do. Not only is he an ultramarathon runner but he works in the area of ‘carbon literacy’ and sustainability. I’m also sure that he’s correct that the claim that you need to replace your running shoes after a certain number of miles is driven by marketing departments.

Still, I’ve definitely experienced creeping lower-back pain when getting to around 650 miles in a pair of running shoes. Of course, now I’m wondering whether it’s all psychosomatic…

With age and high mileage, it is said that the midsole no longer provides the cushioning that you need to prevent injury. This is cited as the main reason that shoes need replacing on a regular basis. Again, looking at the Lightboost midsole on these shoes, I see no evidence of crushing or squashing and I certainly don’t think I can feel any difference to the foot strike than when they were new. Obviously, any change in perceived cushioning is likely to be imperceptibly gradual and I could only really confirm that the cushioning was no longer up to snuff by comparing them directly with a new pair. These shoes are at a premium price (£170) and as such, I would expect them to be made of premium materials and built to last. My visual inspection of them suggests that they are still in excellent condition.

On the face of it, I see no obvious reason why I should retire these Ultraboost Lights any time soon. However, that seems to go against industry recommendations. What if invisible midsole damage has been so gradual that I haven’t noticed it? Now that I’ve reached 500 miles, am I likely to injure myself through continued usage? As a triathlete, I know from years of bitter experience that I am far more likely to injure myself on a run than I am cycling or swimming. So, anything I can do to improve my chances of not getting injured would be a powerful incentive to act. Thus, if it could be proven scientifically that buying a new pair of trainers every 300 – 500 miles would lessen my chances of injury, then I would take that evidence very seriously indeed.

[…]

In a previous blog post I discussed the carbon footprint of a pair of running shoes (usually between 8kg and 16kg of CO2 per pair). In the great scheme of things, this is not a huge figure (until you scale up to the billions pairs of trainers sold each year and the realisation that virtually all of these are destined for landfill at end of life). My Ultraboosts have a significant content made from ocean plastic and recycled plastic which reduces their carbon footprint by 10% compared to the previous model made with non-recycled materials. 10% is better than nothing, and the use of some ocean plastic is much better than taking plastic bottles out of the recycling loop and spinning them into polyester. But, I can do a lot better than 10% by not swapping my shoes for a new pair until they are properly worn out. Simply by deciding to double the mileage and aiming for at least 1000 miles out of these shoes (hopefully more) I can at least halve the carbon footprint of my running shoe consumption.
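The arithmetic behind that last claim can be sketched in a few lines of Python. This is a rough illustration rather than anything from Sutton’s post: it assumes the whole footprint of a pair is fixed at manufacture and simply spread over the miles run in it, and the function name `footprint_per_mile` is made up for the example. The 8–16kg range, the 10% saving and the 500 and 1000 mile figures are the ones quoted above.

```python
import math


def footprint_per_mile(kg_co2_per_pair: float, miles_before_retiring: float) -> float:
    """Spread a pair's footprint evenly over the miles run in it.

    Assumption: the footprint is fixed per pair (manufacture and disposal)
    and does not change with how far the shoes are run.
    """
    return kg_co2_per_pair / miles_before_retiring


# Range quoted in the post: 8kg to 16kg of CO2 per pair.
for kg in (8.0, 16.0):
    retire_at_500 = footprint_per_mile(kg, 500)
    retire_at_1000 = footprint_per_mile(kg, 1000)
    # A 10% saving from recycled materials, still retired at 500 miles.
    recycled_at_500 = footprint_per_mile(kg * 0.9, 500)

    # Doubling the mileage halves the per-mile figure, whatever the pair costs.
    assert math.isclose(retire_at_1000, retire_at_500 / 2)
    print(
        f"{kg:.0f}kg pair: {retire_at_500 * 1000:.0f}g/mile at 500 miles, "
        f"{recycled_at_500 * 1000:.1f}g/mile with recycled content, "
        f"{retire_at_1000 * 1000:.0f}g/mile at 1000 miles"
    )
```

Under those assumptions, running the shoes for twice as long cuts the per-mile footprint by 50%, which is a much bigger saving than the 10% from recycled materials.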

Source: Irontwit

Human agency in a world of AI

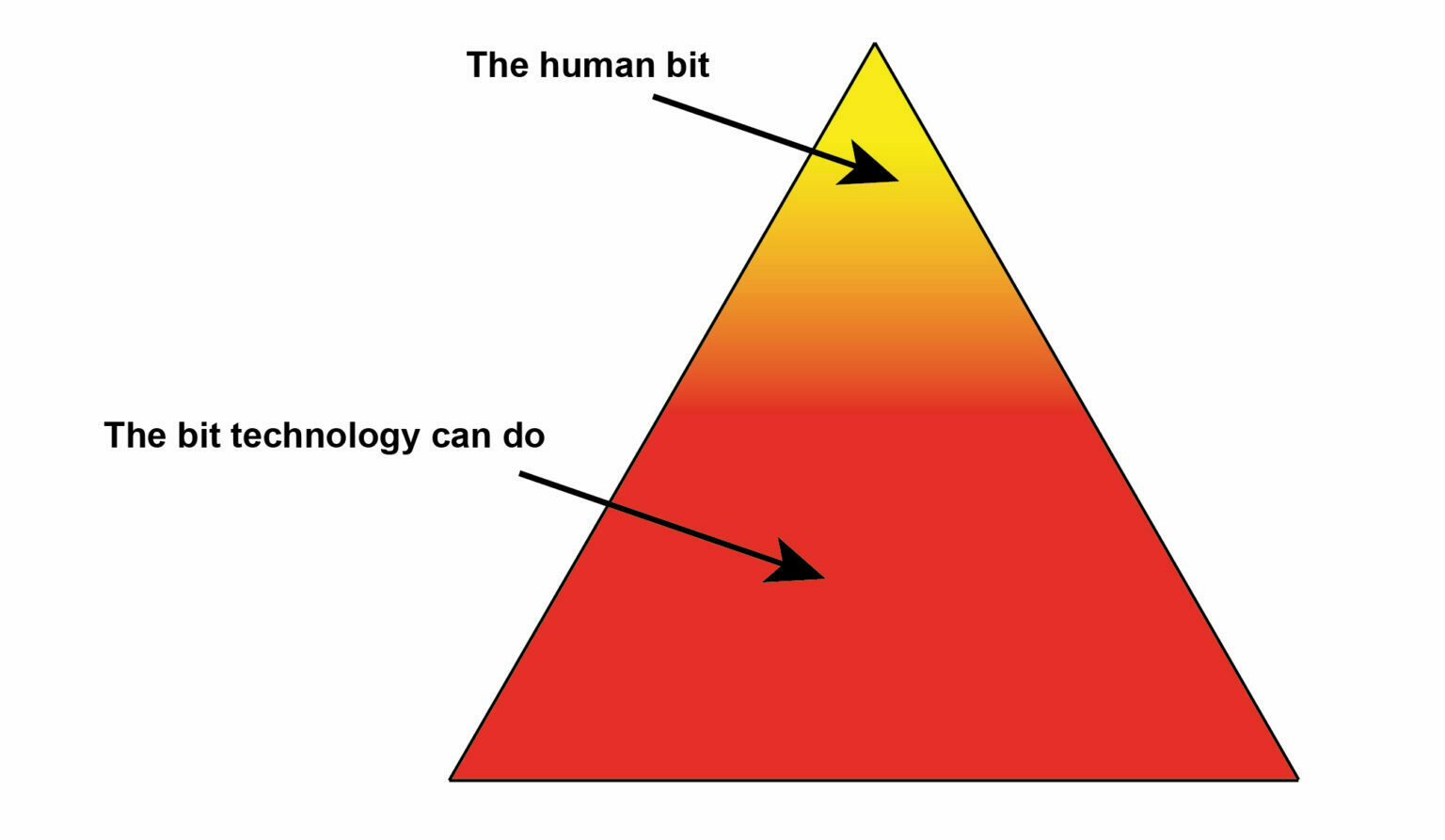

Dave White, Head of Digital Education and Academic Practice at the University of the Arts London, reflects on a recent conference he attended where the tone seemed to be somewhat ‘defensive’. Instead of cheerleading for tech, the opening video and keynote focused on human agency.

White notes that this may be heartening but it’s a narrative that’s overly-simplistic. The creative process involves technology of all different types and descriptions. It’s not just the case that humans “get inspired” and then just use technology to achieve their ends.

The downside of these triangles is that they imply ‘development’ is a kind of ladder. You climb your way to the top where the best stuff happens. Anyone who has ever undertaken a creative process will know that it involves repeatedly moving up and down that ladder or rather, it involves iterating research, experimentation, analysis, reflection and creating (making). Every iteration is an authentic part of the process, every rung of the ladder is repeatedly required, so when I say technology allows us to spend more time at the ‘top’ of these diagrams, I’m not suggesting that we should try and avoid the rest.

I’d argue that attempting to erase the rest of the process with technology is missing the point(y). However, a positive reading would be that, as opposed the zero-sum-gain notion, a well-informed incorporation of technology could make the pointy bit a bit bigger (or more pointy). The tech could support us to explore a constantly shifting and, I hope, expanding, notion of humanness. This idea is very much in tension with the Surveillance Capitalism, Silicon Valley, reading of our times. I’m not saying that the tech does support us to explore our humanity, I’m saying it could and what is involved in that ‘could’ is worth thinking about.

Source: David White

5 ways in which AI is discussed

Helen Beetham, whose work over at imperfect offerings I’ve mentioned many times here, has a guest post on the LSE Higher Education blog about AI in education.

She discusses five ways in which it’s often discussed: as a specific technology, as intelligence, as a collaborator, as a model of the world, and as the future of work. In my day-to-day routine, I tend to use it as a collaborator, because I have (what I hope to be) a reasonable mental model of the capacities and limitations of LLMs.

What’s particularly useful about this article is the meta-framing that more ‘productivity’ isn’t always to be valued. Sometimes what we want is for people to slow down and deliberate a bit more.

AI narratives arrive in an academic setting where productivity is already overvalued. What other values besides productivity and speed can be put forward in teaching and learning, particularly in assessment? We don’t ask students to produce assignments so that there can be more content in the world, but so we (and they) have evidence that they are developing their own mental world, in the context of disciplinary questions and practices.

Source: LSE Higher Education blog

14 years of Tory (mis)rule

I don’t even have words for how bad the last 14 years have been under the Tories. Thankfully, people who do have the words have written some of them down.

This piece in The New Yorker is very long, but even just reading some of it will help those outside the UK understand what is going on, and those inside it hang their heads in shame.

Some people insisted that the past decade and a half of British politics resists satisfying explanation. The only way to think about it is as a psychodrama enacted, for the most part, by a small group of middle-aged men who went to élite private schools, studied at the University of Oxford, and have been climbing and chucking one another off the ladder of British public life—the cursus honorum, as Johnson once called it—ever since.

[…]

These have been years of loss and waste. The U.K. has yet to recover from the financial crisis that began in 2008. According to one estimate, the average worker is now fourteen thousand pounds worse off per year than if earnings had continued to rise at pre-crisis rates—it is the worst period for wage growth since the Napoleonic Wars. “Nobody who’s alive and working in the British economy today has ever seen anything like this,” Torsten Bell, the chief executive of the Resolution Foundation, which published the analysis, told the BBC last year. “This is what failure looks like.”

[…]

“Austerity” is now a contested term. Plenty of Conservatives question whether it really happened. So it is worth being clear: between 2010 and 2019, British public spending fell from about forty-one per cent of G.D.P. to thirty-five per cent. The Office of Budget Responsibility, the equivalent of the American Congressional Budget Office, describes what came to be known as Plan A as “one of the biggest deficit reduction programmes seen in any advanced economy since World War II.” Governments across Europe pursued fiscal consolidation, but the British version was distinct for its emphasis on shrinking the state rather than raising taxes.

Like the choice of the word itself, austerity was politically calculated. Huge areas of public spending—on the N.H.S. and education—were nominally maintained. Pensions and international aid became more generous, to show that British compassion was not dead. But protecting some parts of the state meant sacrificing the rest: the courts, the prisons, police budgets, wildlife departments, rural buses, care for the elderly, youth programs, road maintenance, public health, the diplomatic corps.

In the accident theory of Brexit, leaving the E.U. has turned out to be a puncture rather than a catastrophe: a falloff in trade; a return of forgotten bureaucracy with our near neighbors; an exodus of financial jobs from London; a misalignment in the world. “There is a sort of problem for the British state, including Labour as well as all these Tory governments since 2016, which is that they are having to live a lie,” as Osborne, who voted Remain, said. “It’s a bit like tractor-production figures in the Soviet Union. You have to sort of pretend that this thing is working, and everyone in the system knows it isn’t.”

Source: The New Yorker

Identifying things that don't work

I always find something I agree with in posts like this. Here are some of those things in a list of “things that don’t work”:

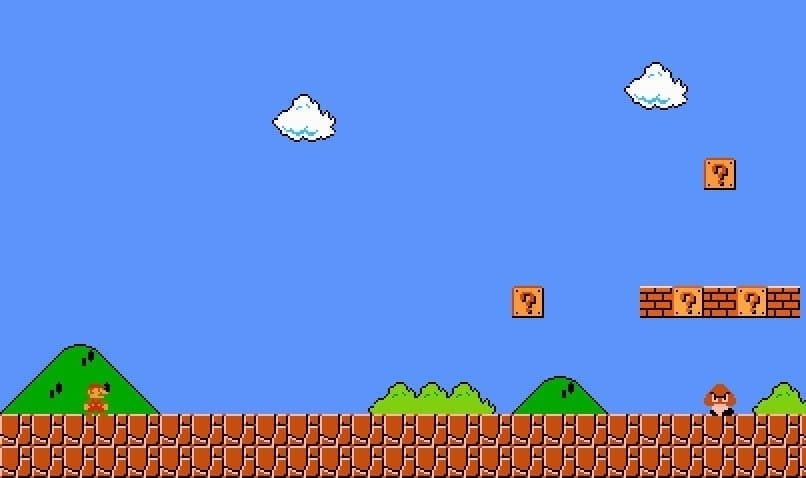

- Tearing your hair out because people don’t follow written instructions. You can fill your instructions with BOLD CAPS and rend your garments when this too fails. A more pleasant option is to craft supportive interfaces where people don’t need instructions. I’m convinced the best interface in history is the beginning of Super Mario Brothers. You just start.

[…]

- Doing unto others as you would have them do unto you. This is a beautiful idea, but often other people simply don’t have the same needs you do.

[…]

- Trying to figure it all out ahead of time. For hard problems, you can sit around trying to see around all corners and anticipate all possibilities. This can work—when Apollo 11 landed on the moon, everything worked the first time. But it’s really hard. If you can, it’s easier to build a prototype, learn from the flaws, and then build another one. (This, of course, contradicts the previous point.)

[…]

Things that work: Dogs, vegetables, index funds, jogging, sleep, lists, learning to cook, drinking less alcohol, surrounding yourself with people you trust and admire.

Source: Dynomight

The art of distraction

L.M. Sacasas has written a lengthy commentary on an essay by Ted Gioia, which is well worth reading in its entirety. The main thrust of Gioia’s essay is that we have substituted ‘dopamine culture’ for the arts and creative pursuits. Sacasas believes that this is too simplistic a framing.

I’m quoting the part where he uses Pascal to show Gioia, and anyone else who holds a similar point of view, that human beings have been forever thus. Except these days we live like kings of old, where we have the means to be distracted easily and at will. I think, in general, we’re far too bothered about how other people act, and not bothered enough about how we do.

It might be helpful to back up a few hundred years and consider a different telling of our compulsive relationship to distraction, and from there to ask some better questions of our current situation. Writing in the mid-seventeenth century, the French polymath Blaise Pascal wrote a series of strikingly relevant observations about distraction, or, as the translations typically put it, diversions. Frankly, these centuries-old observations do more, as I see it, to illuminate the nature of the problem we face than an appeal to dopamine and they do so because they do not reduce human behavior to neuro-chemical process, however helpful that knowledge may sometimes be.

Pascal argued, for example, that human beings will naturally seek distractions rather than confront their own thoughts in moments of solitude and quiet because those thoughts will eventually lead them to consider unpleasant matters such as their own mortality, the vanity of their endeavors, and the general frailty of the human condition. Even a king, Pascal notes, pursues distractions despite having all the earthly pleasures and honors one could aspire to in this life. “The king is surrounded by persons whose only thought is to divert the king, and to prevent his thinking of self,” Pascal writes. “For he is unhappy, king though he be, if he think of himself.”

We are all of us kings now surrounded by devices whose only purpose is to prevent us from thinking about ourselves.

Pascal even struck a familiar note by commenting directly on the young who do not see the vanity of the world because their lives “are all noise, diversions, and thoughts for the future.” “But take away their ~~devices~~ diversions,” Pascal observes, “and you will see them bored to extinction. Then they feel their nullity without recognizing it, for nothing could be more wretched than to be intolerably depressed as soon as one is reduced to introspection with no means of diversion.”

I don’t know, you tell me? I wouldn’t limit that description to the “young.” What do you feel when confronted with a sudden unexpected moment of silence and inactivity? Do you grow uneasy? Do you find it difficult to abide the stillness and quiet? Do your thoughts worry you? Solitude, as opposed to loneliness, can be understood as a practice or maybe even a skill. Have we been deskilled in the practice of solitude? Have we grown uncomfortable in our own company and has this amplified the preponderance of loneliness in contemporary society? Recall, for instance, how Hannah Arendt once distinguished solitude from loneliness: “I call this existential state [thinking as an internal conversation] in which I keep myself company ‘solitude’ to distinguish it from ‘loneliness,’ where I am also alone but now deserted not only by human company but also by the possible company of myself.”

It seems to me that these are all now familiar issues and tired questions. As observations about our situation, they now strike me as banal. We all know this, right? But perhaps for that reason we do well to recall them to mind from time to time. After all, Pascal would also tell us that the stakes are high, quite high. “The only thing which consoles us for our miseries is diversion, and yet this is the greatest of our miseries,” he writes. “For it is this which principally hinders us from reflecting upon ourselves, and which makes us insensibly ruin ourselves. Without this we should be in a state of weariness, and this weariness would spur us to seek a more solid means of escaping from it. But diversion amuses us, and leads us unconsciously to death.”

Source: The Convivial Society

The problem with private property societies

I still subscribe to a few authors’ publications on Substack, although I wish they’d leave the platform. One of these is Antonia Malchik’s On The Commons, whose posts often include a turn of phrase which really resonates with me.

This week, I’ve been listening to Ep.24 of Hardcore History: Addendum where the host, Dan Carlin, interviews Rick Rubin, the legendary music producer. Towards the end, Rubin turns the tables and asks Carlin a few questions. One of them is about what life was like before land ownership. Carlin, who usually hugely impresses me, seemed to suggest that humans have always owned land in one way or another, and that it’s only aberrations where it was collectively owned.

I’m not sure that’s true. I think Carlin would do well to read, for example, David Graeber and David Wengrow’s The Dawn of Everything: A New History of Humanity. Private ownership of everything is something that seems to be burned into the American psyche. But it doesn’t have to be this way. As Malchik points out in her post, private ownership within a capitalist economy is essentially why we can’t have nice things.

In their book The Prehistory of Private Property, authors Karl Widerquist and Grant S. McCall repeatedly go back to the main difference that they see in a private property society versus one where private ownership of, say, land, much less water and food, is unknown: freedom to leave. That is, if you want to walk away from your people, or your place, can you do so and still support yourself? Can you walk away and find or make food, shelter, and clothing? In non-private property societies, the freedom to walk away and still live just fine is the norm. In private property societies, it’s almost nonexistent. You have to work to make rent. Land-rent, you might call it. Someone else owns the land, and you have to pay to live on it.

The extent to which this reality runs counter to most of our existence, even if we’re just counting the few hundred thousand years that Homo sapiens have been here and not the millions of years of hominin evolution before that, is mind-bending. There have been territories and civilizations and controlling empires for thousands of years all over the world, but for most of our species’ existence, most humans had some kind of freedom to live on, with, and from land without needing to pay someone else for the privilege of existing. Until relatively recently.

We can’t all spend our time as we would wish not just because capitalism allows a few humans to hoard an increasing amount of money and power, but because the planet’s dominant societies force land to be privately owned, and make access to food and clean water something we have to pay for.

Source: On The Commons

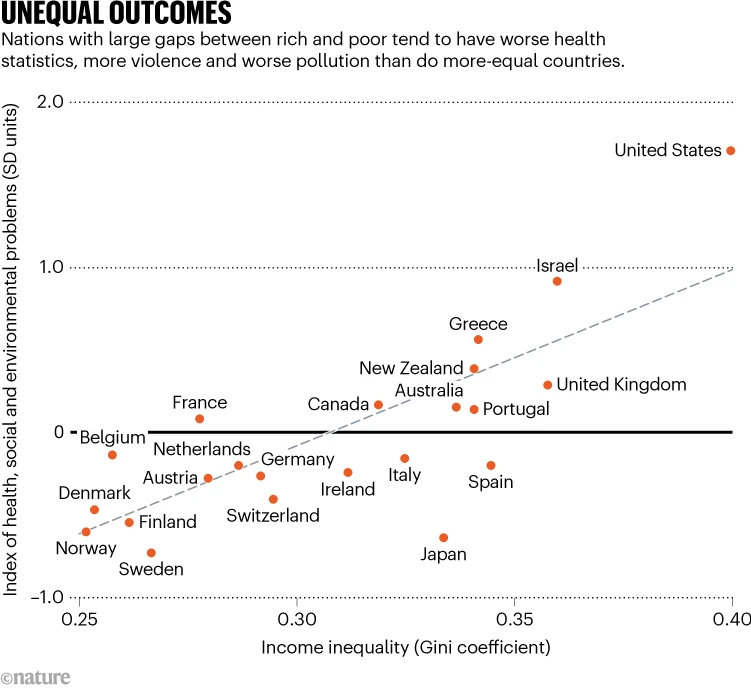

More equal societies perform better

It’s easy to say that you hate the Tories. The reason, of course, is that while they’re in government they institute policies and pass laws that make the country more unequal. This is problematic for everyone, not just those impoverished.

This well-referenced article is published in Nature. I’d also check out this video I saw posted to LinkedIn (but originally from TikTok), which argues that more capitalism isn’t better.

Even affluent people would enjoy a better quality of life if they lived in a country with a more equal distribution of wealth, similar to a Scandinavian nation. They might see improvements in their mental health and have a reduced chance of becoming victims of violence; their children might do better at school and be less likely to take dangerous drugs.

[…]

Many commentators have drawn attention to the environmental need to limit economic growth and instead prioritize sustainability and well-being. Here we argue that tackling inequality is the foremost task of that transformation. Greater equality will reduce unhealthy and excess consumption, and will increase the solidarity and cohesion that are needed to make societies more adaptable in the face of climate and other emergencies.

[…]

Other studies have also shown that more-equal societies are more cohesive, with higher levels of trust and participation in local groups. And, compared with less-equal rich countries, another 10–20% of the populations of more-equal countries think that environmental protection should be prioritized over economic growth. More-equal societies also perform better on the Global Peace Index (which ranks states on their levels of peacefulness), and provide more foreign aid. The UN target is for countries to spend 0.7% of their gross national income (GNI) on foreign aid; Sweden and Norway each give around 1% of their GNI, whereas the United Kingdom gives 0.5% and the United States only 0.2%.

Source: Nature

Toward the ad-free city?

I can’t stand adverts, whether they appear on the web (adblockers!), TV (sound off!) or billboards (ignore!). It feels like mind pollution to me.

I’m glad that Sheffield, a city I called home for three years while at university, has decided to do something about the most pernicious forms of advertising. It’s particularly interesting that they’ve done a cost/benefit analysis against the cost to “the NHS and other services”.

Adverts for a wide range of polluting products and brands, including airlines, airports, fossil fuel-powered cars (including hybrids) and fossil fuel companies, will not be permitted on council-owned advertising billboards under the new Sheffield City Council Advertising and Sponsorship Policy. The council’s social media, websites, publications and any sponsorship arrangements will also be subject to the restrictions.

[…]

This breaks new ground in the UK, with Sheffield going further than any other council to remove polluting promotions. Sheffield declared a climate emergency in 2019, alongside many other local councils. This step demonstrates a real commitment to reducing emissions, driving down air pollution, and encouraging a shift towards lower-carbon lifestyles.

[…]

By including specific criteria that prioritises small local businesses, the policy also aims to protect Sheffield’s local economy. After consultation with other councils and outdoor advertising companies, Sheffield’s Finance Committee concluded that the financial impact of the policy was likely to be low (approx. £14,000-£21,000) compared to the costs incurred via pressures on the NHS and other services.

Source: badvertising

The impact of the pandemic

This is a difficult read. Without even going into the breakdown in social relations and trust, it lays out the health and development impact of the pandemic for different age groups.

I can only thank my lucky stars that neither of our kids was born in 2020. It still had an effect on them, in different ways; thankfully, that doesn’t seem to have been in terms of health or development.

The article attempts to end on a positive note, which I’ve included here. But it’s difficult to see things getting much better soon unless a newly-elected Labour government manages to turn things around 180 degrees from the direction we’re headed under the Tories.

Across all age groups, the pandemic appears to have chipped away at health and the NHS treatment that people receive.

The challenge of reversing these trends can appear overwhelming and insurmountable, but recognising the scale of a problem can also, in time, galvanise a proportionate response.

“There are parallels with the Industrial Revolution, which was really bad for health inequalities,” said Steves. “But that was followed by a period of philanthropy, government leadership and infrastructure changes. The pandemic does have a legacy that’s important for health. So we need to also think about how this could be a major opportunity.”

Source: The Guardian

Microcast #104 — Questioning uncritical acceptance

A microcast to respond to a thread on the Fediverse about uncritical acceptance of new technologies.

Show notes

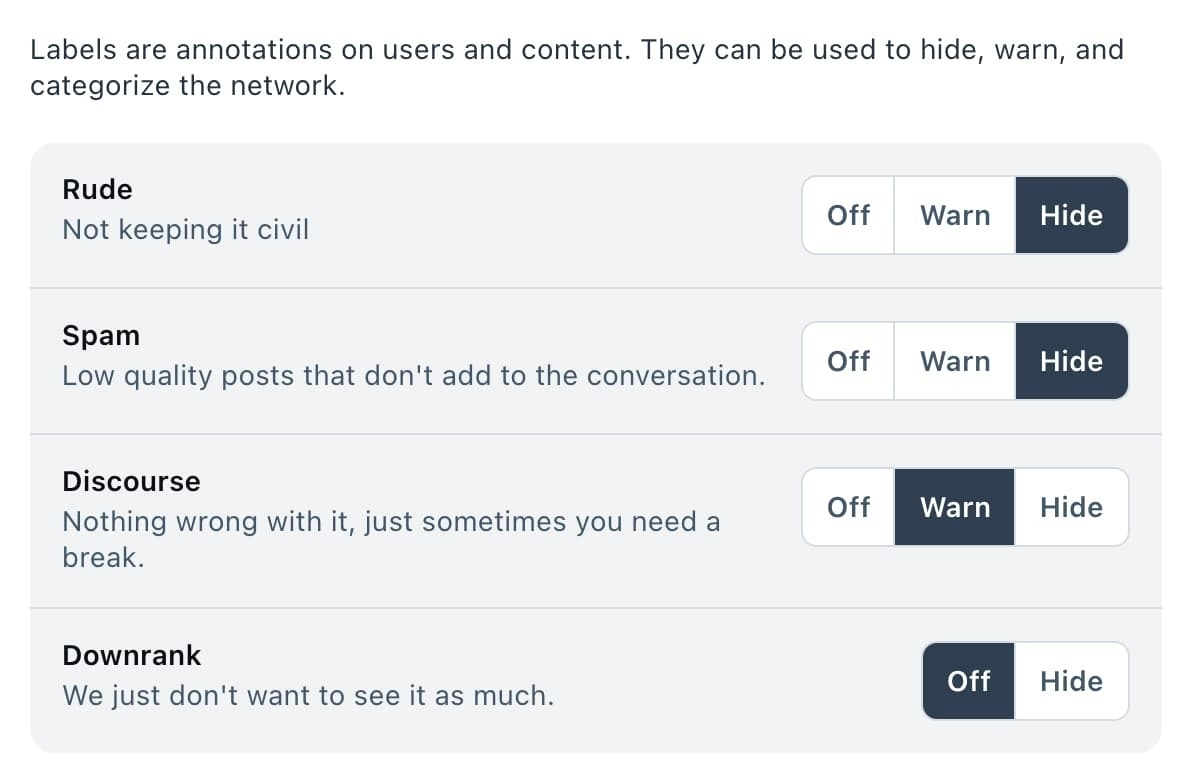

Bluesky's approach to decentralised moderation

Over the last 5-6 years I’ve had to think deeply about moderation in decentralised networks, first for MoodleNet and then for Bonfire. In that time, a new network has come along called Bluesky, seeded with money from Twitter (pre-Musk).

Bluesky (atproto) and Fediverse apps such as Mastodon, Pixelfed, and Bonfire (ActivityPub) use different protocols. There’s no reason why they can’t be bridged, but attempts to do so have met with some hostility. Moderation in ActivityPub-compatible networks relies on the server/instance that you’re on. There are advantages to this, but I guess the downside is that if you like the people but not the moderation policy, you’ve got to decide whether to stick or twist.

What Bluesky is doing is something similar to something Bonfire has proposed: allowing people to follow accounts that focus on moderation. This means that you can decide to, for example, dial down the profanity, or mark things as spam based on a definition you share with someone else.

Today, we’re excited to announce that we’re open-sourcing Ozone, our collaborative moderation tool. With Ozone, individuals and teams can work together to review and label content across the network. Later this week, we’re opening up the ability for you to run your own independent moderation services, seamlessly integrated into the Bluesky app. This means that you’ll be able to create and subscribe to additional moderation services on top of what Bluesky requires, giving you unprecedented control over your social media experience.

At Bluesky, we’re investing in safety from two angles. First, we’ve built our own moderation team dedicated to providing around-the-clock coverage to uphold our community guidelines. Additionally, we recognize that there is no one-size-fits-all approach to moderation — no single company can get online safety right for every country, culture, and community in the world. So we’ve also been building something bigger — an ecosystem of moderation and open-source safety tools that gives communities power to create their own spaces, with their own norms and preferences. Still, using Bluesky feels familiar and intuitive. It’s a straightforward app on the surface, but under the hood, we have enabled real innovation and competition in social media by building a new kind of open network.

[…]

Bluesky’s vision for moderation is a stackable ecosystem of services. Starting this week, you’ll have the power to install filters from independent moderation services, layering them like building blocks on top of the Bluesky app’s foundation. This allows you to create a customized experience tailored to your preferences.

Source: Bluesky blog

Barnacle ball

Some great photos in this year’s British Wildlife Photography Awards. I was going to share the black and white one of the mountains, but this one, the overall winner, is incredibly powerful. It’s also just a fantastically-composed image.

An incredible image of a football covered in goose barnacles is the winner of this year’s British Wildlife Photography Awards.

The picture was chosen from more than 14,000 entries by both amateur and professional photographers.

The photograph, which also won the Coast and Marine category, was taken by Ryan Stalker.

“Above the water is just a football. But below the waterline is a colony of creatures. The football was washed up in Dorset after making a huge ocean journey across the Atlantic,” says Stalker.

“More rubbish in the sea could increase the risk of more creatures making it to our shores and becoming invasive species.”

Source: BBC News

Anti-AI hyperbole

<img src="https://cdn.uploads.micro.blog/139275/2024/dalle-2024-03-20-18.21.59illustrate-a-scene-where-a-large-exaggerated-bubbl.webp" width="600" height="342" alt="A figure resembling Ed Zitron pops a large, shimmering 'AI Hype' bubble against a tech city skyline, with digital particles and a palette of light gray, dark gray, bright red, yellow, and blue.">

This post has been going around my networks recently, so I’ve finally got around to giving it a read. The first thing that’s worth pointing out is that the author, Ed Zitron, is CEO of a tech PR firm. So it’s no surprise that it’s written to pop the AI hype bubble.

I’m not unsympathetic to Zitron’s position, but when he talks about not knowing anyone using ChatGPT, I don’t think he’s telling the truth. I’m using GPT-4 every day at this point, and now supplementing it with Perplexity.ai and Claude 3. A combination of the three can be really useful for everything from speeding up idea generation to converting a bullet point list to a mindmap.

One thing I’ve found AI assistants to be incredibly powerful for is spotting things I might have missed and providing a different perspective. You can even put in a list of things and ask for recommendations based on it. This works for everything from music playlists through to business competitors.

Every time Sam Altman speaks he almost immediately veers into the world of fan fiction, talking about both the general things that “AI” could do and non-specifically where ChatGPT might or might not fit into that without ever describing a real-world use case. And he’s done so in exactly the same way for years, failing to describe any industrial or societal need for artificial intelligence beyond a vague promise of automation and “models” that will be able to do stuff that humans can, even though OpenAI’s models continually prove themselves unable to match even the dumbest human beings alive.

Altman wants to talk about the big, sexy stories of Average General Intelligences that can take human jobs because the reality of OpenAI — and generative AI by extension — is far more boring, limited and expensive than he’d like you to know.

[…]

I believe a large part of the artificial intelligence boom is hot air, pumped through a combination of executive bullshitting and a compliant media that will gladly write stories imagining what AI can do rather than focus on what it’s actually doing. Notorious boss-advocate Chip Cutter of the Wall Street Journal wrote a piece last week about how AI is being integrated in the office, spending most of the article discussing how companies “might” use tech before digressing that every company he spoke to was using these tools experimentally and that they kept making mistakes.

[…]

Generative AI’s core problems — its hallucinations, its massive energy and unprofitable compute demands — are not close to being solved. Having now read and listened to a great deal of Murati and Altman’s interviews, I can find few cases where they’re even asked about these problems, let alone ones where they provide a cogent answer.

And I believe it’s because there isn’t one.

Source: Where’s Your Ed At?

Microcast #103 — Microphones and Moving to Micro.blog

The first microcast of 2024 and also the first on micro.blog. This one discusses the reasons for the move, and how it went.