Abusing AI girlfriends

I don’t often share this kind of thing because I find it distressing. We shouldn’t be surprised, though, that the kind of people who physically, sexually, and emotionally abuse other human beings do so in virtual worlds, too.

Source: Men Are Creating AI Girlfriends and Then Verbally Abusing Them | Futurism

In general, chatbot abuse is disconcerting, both for the people who experience distress from it and the people who carry it out. It’s also an increasingly pertinent ethical dilemma as relationships between humans and bots become more widespread — after all, most people have used a virtual assistant at least once.

On the one hand, users who flex their darkest impulses on chatbots could have those worst behaviors reinforced, building unhealthy habits for relationships with actual humans. On the other hand, being able to talk to or take one’s anger out on an unfeeling digital entity could be cathartic.

But it’s worth noting that chatbot abuse often has a gendered component. Although not exclusively, it seems that it’s often men creating a digital girlfriend, only to then punish her with words and simulated aggression. These users’ violence, even when carried out on a cluster of code, reflect the reality of domestic violence against women.

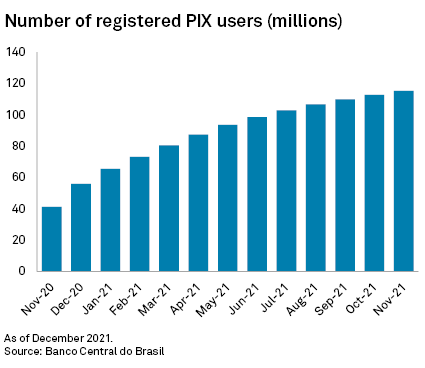

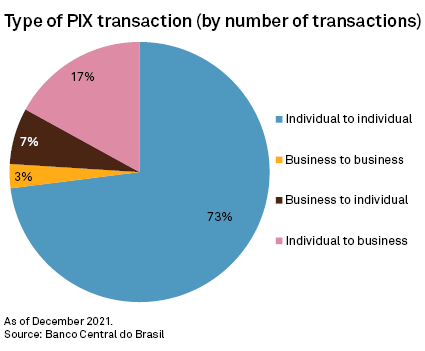

Pix and digital payments in Brazil

I came across this story via Benedict Evans' newsletter (it’s not the kind of thing I’d usually track). What I find interesting is that this is a hugely successful rollout of a digital payments system by a central bank, and it’s helping real people, including those in poverty.

Meanwhile, crypto tokens are held by crypto bros and middle-class white guys like myself trying to make a quick buck. Just goes to show that innovation doesn’t always come from where you expect.

Source: Pix breaks ground in Brazil, shakes up payments market | S&P Global Market Intelligence

Pix, rolled out by the Banco Central do Brasil in Nov. 2020, was built for efficiency and financial inclusion. It now has 107.5 million registered accounts, more than half of the country’s population. One year after implementation, more than half a trillion Brazilian reais were transacted through the low-cost payments system last month. According to central bank data, Pix payments volume is already equivalent to 80% of debit and credit card transactions.

[…]

“Except for very particular transactions, market penetration tends to 99% on all individual transfers,” [Julian Colombo, CEO of banking technology firm N5] added. However, the rollout has not been without hiccups, including kidnapping.

[…]

On a recent Sunday in Rio de Janeiro, a three-member samba band played for a crowded restaurant. At the end, they passed around the tambourine to collect money. One diner apologized, saying he did not have any cash on him. The drummer said, “No problem, I take Pix,” and proceeded to share his code — which can be an email, phone number or other easy-to-remember code — with the diner, who promptly transferred the money his way.

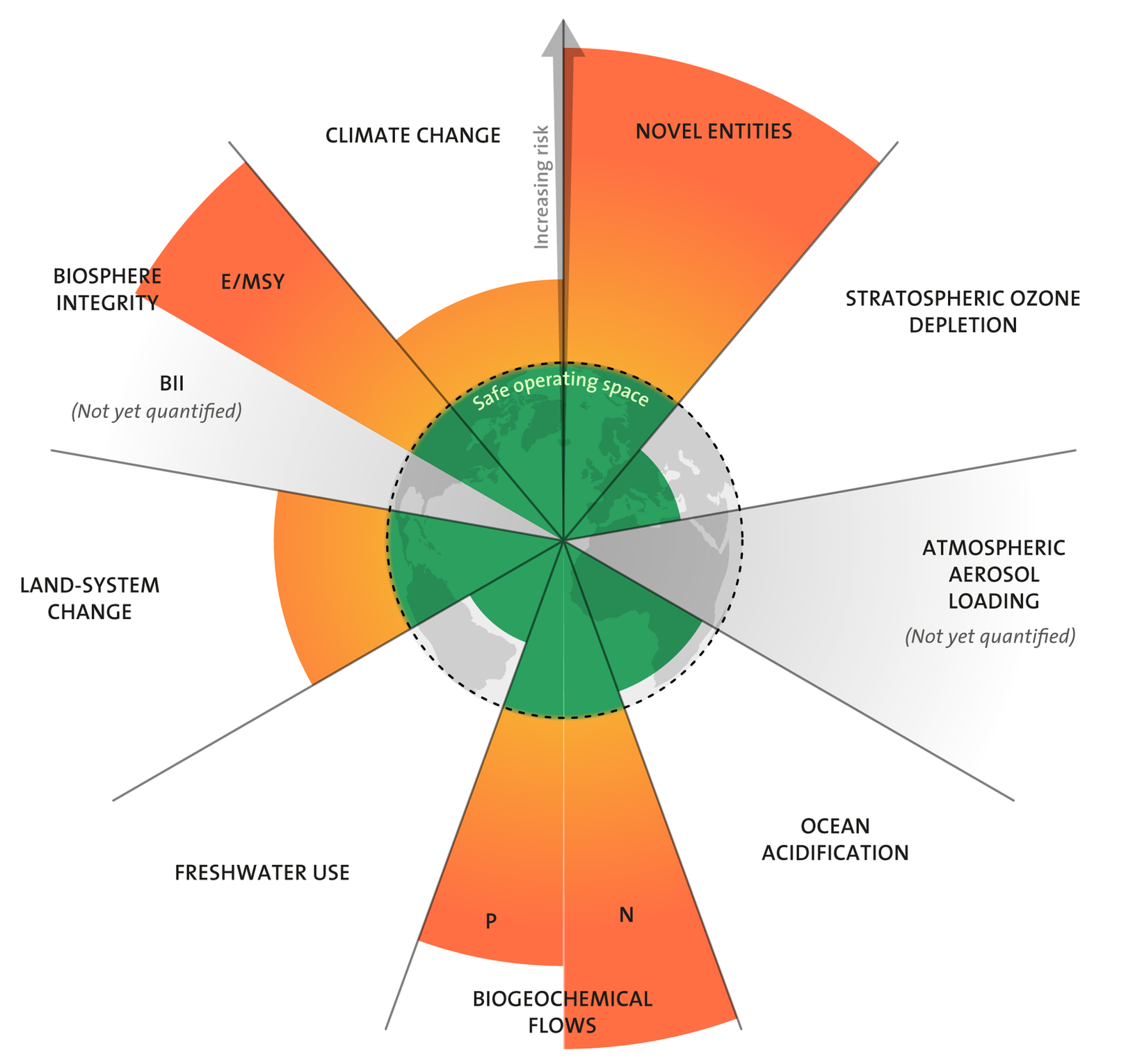

Nine planetary boundaries

This is a useful diagram to share, as it demonstrates that, while we might think we’re shafted with regard to climate change, that pales into insignificance compared to pollution from chemicals and plastics.

Source: Safe planetary boundary for pollutants, including plastics, exceeded, say researchers | Stockholm Resilience Centre

The researchers say there are many ways that chemicals and plastics have negative effects on planetary health, from mining, fracking and drilling to extract raw materials to production and waste management.

“Some of these pollutants can be found globally, from the Arctic to Antarctica, and can be extremely persistent. We have overwhelming evidence of negative impacts on Earth systems, including biodiversity and biogeochemical cycles,” says Carney Almroth.

Global production and consumption of novel entities is set to continue to grow. The total mass of plastics on the planet is now over twice the mass of all living mammals, and roughly 80% of all plastics ever produced remain in the environment.

Plastics contain over 10,000 other chemicals, so their environmental degradation creates new combinations of materials – and unprecedented environmental hazards. Production of plastics is set to increase and predictions indicate that the release of plastic pollution to the environment will rise too, despite huge efforts in many countries to reduce waste.

Optimism about the future

I don’t have a particularly strong interest in sci-fi, nor do I have access to all of this paywalled post. However, I don’t need either to share a couple of insights.

First, I agree that utopia and dystopia are two sides of the same coin, depending on your view of what constitutes a flourishing human life. You don’t need to look far in our current situation to see that in action.

Second, while I’d probably broadly agree with the three conditions for optimism the author lays out, you could technically argue against all of them.

Source: What makes an "optimistic" vision of the future? | Noahpinion

One possibility is that utopia and dystopia are just whatever the author decides to present as such. Take a war-torn unequal Malthusian future and add some soaring music and graphics of cities lighting up, and maybe audiences will see it as utopian. Or take a serene, pastel-colored post-scarcity hippie society and add a shirtless Sean Connery shouting that it’s all an illusion, and maybe it starts to seem like a creepy dystopia. (Of course, if this is what’s going on, there will be a tendency toward presenting any future as dystopian, since stories need external conflict; the world has to be “messed up” in some way in order for the protagonists to “fix” it.)

But in fact I submit that in order to be truly optimistic, a sci-fi world needs more than just a stirring theme song. It needs to present a future with several concrete features corresponding to the type of future people want to imagine actually living in. The “Wang Standard” is a good start, with its emphasis on the power of human effort, but in the end it relies on the somewhat circular notion of a “radically better” future. What does it mean for the future to be better? I submit that for a future to feel optimistic, it should feature the following elements:

- Material abundance

- Egalitarianism — broadly shared prosperity, relatively moderate status differences, and broad political participation

- Human agency — the ability of human effort to alter the conditions of the world

Reading is useless

I like this post by graduate student Beck Tench. Reading is useless, she says, in the same way that meditation is useless. It’s for its own sake, not for something else.

Source: Reading is Useless: A 10-Week Experiment in Contemplative Reading | Beck Tench

When I titled this post “reading is useless,” I was referring to a Zen saying that goes, “Meditation is useless.” It means that you meditate to meditate, not to use it for something. And like the saying, I’m being provocative. Of course reading is not useless. We read in useful ways all the time and for good reason. Reading expands our horizons, it helps us understand things, it complicates, it validates, it clarifies. There’s nothing wrong with reading (or meditating for that matter) with a goal in mind, but maybe there is something wrong if we feel we can’t read unless it’s good for something.

This quarter’s experiment was an effort to allow myself space to “read to read,” nothing more and certainly nothing less. With more time and fewer expectations, I realized that so much happens while I read, the most important of which are the moments and hours of my life. I am smelling, hearing, seeing, feeling, even tasting. What I read takes up place in my thoughts, yes, and also in my heart and bones. My body, which includes my brain, reads along with me and holds the ideas I encounter.

This suggests to me that reading isn’t just about knowing in an intellectual way, it’s also about holding what I read. The things I read this quarter were held by my body, my dreams, my conversations with others, my drawings and journal entries. I mean holding in an active way, like holding something in your hands in front of you. It takes endurance and patience to actively hold something for very long. As scholars, we need to cultivate patience and endurance for what we read. We need to hold it without doing something with it right away, without having to know.

The cost of a thing is the amount of life which is required to be exchanged for it

This article in The Atlantic by Alan Lightman points out how biophilic we have been historically as a species, and how that’s changed only recently.

None of this, of course, helps with the climate emergency and the concomitant biodiversity collapse. I read the WEF Global Risks Report for 2022 and, well, I’ve read more hopeful documents.

Source: This Is No Way to Be Human - The Atlantic

Most of the minutes and hours of each day we spend in temperature-controlled structures of wood, concrete, and steel. With all of its success, our technology has greatly diminished our direct experience with nature. We live mediated lives. We have created a natureless world.

It was not always this way. For more than 99 percent of our history as humans, we lived close to nature. We lived in the open. The first house with a roof appeared only 5,000 years ago. Television less than a century ago. Internet-connected phones only about 30 years ago. Over the large majority of our 2-million-year evolutionary history, Darwinian forces molded our brains to find kinship with nature, what the biologist E. O. Wilson called “biophilia.” That kinship had survival benefit. Habitat selection, foraging for food, reading the signs of upcoming storms all would have favored a deep affinity with nature. Social psychologists have documented that such sensitivities are still present in our psyches today. Further psychological and physiological studies have shown that more time spent in nature increases happiness and well-being; less time increases stress and anxiety. Thus, there is a profound disconnect between the natureless environment we have created and the “natural” affections of our minds. In effect, we live in two worlds: a world in close contact with nature, buried deep in our ancestral brains, and a natureless world of the digital screen and constructed environment, fashioned from our technology and intellectual achievements. We are at war with our ancestral selves. The cost of this war is only now becoming apparent.

[…]

I am not so naive as to think that the careening technologization of the modern world will stop or even slow down. But I do think that we need to be more mindful of what this technology has cost us and the vital importance of direct experiences with nature. And by “cost,” I mean what Henry David Thoreau meant in Walden: “The cost of a thing is the amount of what I will call life which is required to be exchanged for it, immediately or in the long run.” The new technology in Thoreau’s day was the railroad, which he feared was overtaking life. Thoreau’s concern was updated by the literary critic and historian of technology Leo Marx in his 1964 book, The Machine in the Garden. That book describes the way in which pastoral life in America was interrupted by the technology and industrialization of the 19th and 20th centuries. Marx could not have imagined the internet and the smartphone, which arrived only a few decades later. And now I worry about the promise of an all-encompassing virtual world called the “metaverse,” and the Silicon Valley arms race to build it. Again, it is not the technology itself that should concern us. It is how we use that technology, in balance with the rest of our lives.

Matching work activities to mind modes

This by Jakob Greenfeld reminds me of Buster Benson’s evergreen post Live like a hydra — especially the sub-section ‘Seven modes (for seven heads)’.

Of course, you can’t always be driven by what mood you happen to be in. Sometimes, you have to change things up to ensure that your mood changes. But hey, all bets are off during a pandemic, right?

Source: Effortless personal productivity (or how I learned to love my monkey mind) – Jakob Greenfeld

I recently discovered a simple step-by-step process that significantly increased my personal productivity and made me happier along the way.

It costs $0 and no, it’s not some note-taking or to-do list system.

In short:

Step 1: develop meta-awareness of your state of mind.

Step 2: pattern-match to identify your mind’s most common modes.

Step 3: learn to pick activities that match each mode.

Does Not Translate

I enjoyed some of these untranslatable words from languages other than English.

Source: Does Not Translate – Words that don’t translate to other languages

Sturmfrei (German) When all the people you live with are gone for a while and you have the whole place to yourself.

Gyakugire (Japanese) Getting mad at somebody because they got mad at you for something you did.

Bear favour (Swedish) To do something for someone with good intentions, but it actually has negative consequences instead.

How to be useless

I love articles that give us a different lens for looking at the world, and this one certainly does that. It also provides links for further reading, which I very much appreciate.

Source: How to be useless | Psyche Guides

Zhuangzi argued that we can reclaim our lives, and be happier and more fulfilled, if we become more useless. In this, he went against many influential thinkers of his time, such as the Mohists. These followers of Master Mo (c470-391 BCE) prized efficiency and welfare above all. They insisted on cutting away all ‘useless’ parts of life – art, luxury, ritual, culture, leisure, even the expression of emotions – and instead focused on ensuring that people across the social classes receive essential material resources. The Mohists viewed many practices common at the time as immorally wasteful. Rather than a funeral rich with rituals following tradition, such as burial within three layers of coffins and a years-long mourning period, Mohists recommended simply digging a pit deep enough so the body doesn’t smell. You were permitted to cry on your way to and from the burial site, but then you needed to return to work and life.

Although the Mohists wrote more than 2,000 years ago, their ideas sound familiar to modern ears. We frequently hear how we should avoid supposedly useless things, such as pursuing the arts, or a humanities education (see the all-too-frequent slashing of liberal arts budgets at universities). Or it’s often said that we should allow for these things only insofar as they benefit the economy or human welfare. You might have felt this discomfort in your own life: the pressure from the meritocracy to serve some purpose, have some benefit, maximise some utility – that everything you do should be, in some sense, useful.

However, as we will show here, Zhuangzi offers an essential antidote to this pernicious means-ends way of thinking. He demonstrates that you can improve your life if you let go of the anxiety of wanting to serve a purpose. To be sure, Zhuangzi doesn’t altogether spurn usefulness. Rather, he argues that usefulness itself should not be life’s bottom line.

Your accusations are your confessions

I didn’t know Stephen Downes had a political blog. These are his thoughts on cancel culture which, like most of what he says in general, I agree with.

Source: Cancelled | Leftish

Every time a conservative complains about censorship or ‘cancel culture’ we need to remind ourselves, and to say to them,

“You are the one complaining about cancel culture because you are the one who uses silencing and suppression as political tools to advance your own interests and maintain your own power.

“You are complaining about cancel culture because the people you have always silenced are beginning to have a voice, and they are beginning to say, we won’t be silent any more.

“And when you say the people working against racism and misogyny and oppression are silencing you, that tells us exactly who – and what – you are.”

“Your accusations are your confessions.”

Web3, the metaverse, and the DRM-isation of everything

I’ve been reading a report entitled Crypto Theses for 2022 recently. Despite having some small investments in crypto, the world that’s painted in that report is, quite frankly, dystopian.

The author of that report admits to being on the right of politics and, to my mind, this is the problem: we’ve got people who believe that societal control and monetisation of all of the things in a free market economy is desirable.

This article focuses on Mark Zuckerberg’s announcement at the end of 2021 about the ‘metaverse’, which is also a goal of the awkwardly titled ‘web3’ movement.

Perhaps I’m getting old, but to me technology should be about enabling humans to do new things or existing things better. As far as I can see, crypto/web3 just adds a DRM and monetisation layer on top of the open web.

Source: Zuckerberg Convinced the Tech World That ‘the Metaverse’ Is the Future | Business Insider

In one sense, it's a vision of a future world that takes many long-existing concepts, like shared online worlds and digital avatars, and combines them with recently emerging trends, like digital art ownership through NFT technology and digital "tipping" for creators.

In another sense, it’s a vision that takes our existing reality — where you can already hang out in 2D or 3D virtual chat rooms with friends who are or are not using VR headsets — and tacks on more opportunities for monetization and advertising.

America, fascism, and the first, second, and third 'solutions'

Jason Kottke reminds us of Toni Morrison’s “Ten Steps Towards Fascism” from 1995. As an historian, though, I found that it was this bit, which he also quoted, that jumped out at me.

Let us be reminded that before there is a final solution, there must be a first solution, a second one, even a third. The move toward a final solution is not a jump. It takes one step, then another, then another.

To outsiders, Americans at this point seem like slowly boiled frogs on their way to a fascist stew. Canadians seemingly understand the threat.

It’s terrifying when you think about it too much. (Most people in a position to do anything about it seemingly aren’t thinking about it…)

Persistent Practices and Pragmatism

I think Albert Wenger has discovered, however obliquely, Pragmatism. Once you realise that the correspondence theory of truth is nonsense, and that it’s more useful to think about truth as being “good in the way of belief”, the world starts making a lot more sense…

Source: A Short Note on Persistent Practices | Continuations

Yoga works. Meditation works. Conscious breathing works. By “works” I mean that these practices have positive effects for people who observe them. They can help build and retain strength and flexibility of both body and mind. The fact that they work shouldn’t be entirely surprising, given that these practices have been developed over thousands of years through trial and error by millions of people. The persistence of these practices by itself provides evidence of their effectiveness.

But does that mean the theories frequently cited to explain these practices are also valid? Do chakras and energy flows exist? I don’t want to rule this out – there have been various attempts to map chakras to the nervous and endocrine systems – but I think it is much more likely that these are pre-scientific explanations not unlike the phlogiston theory of combustion. I will refer to these as “internal theories,” meaning the theories that are generally associated with the practices historically.

Meetings and work theatre

The way that you do something is almost as important as what you do. However, I’ve noticed that during the pandemic, as people have got used to working remotely (as I’ve done for a decade now), there’s definitely been some, let’s say, ‘theatre’ added to it all.

Source: The rise of performative work | The Economist

Meetings, the office’s answer to the theatre, have proliferated. They are harder to avoid now that invitations must be responded to and diaries are public. Even if you don’t say anything, cameras make meetings into a miming performance: an attentive expression and occasional nodding now count as a form of work. The chat function is a new way to project yourself. Satya Nadella, the boss of Microsoft, says that comments in chat help him to meet colleagues he would not otherwise hear from. Maybe so, but that is an irresistible incentive to pose questions that do not need answering and offer observations that are not worth making.

Shared documents and messaging channels are also playgrounds of performativity. Colleagues can leave public comments in documents, and in the process notify their authors that something approximating work has been done. They can start new channels and invite anyone in; when no one uses them, they can archive them again and appear efficient. By assigning tasks to people or tagging them in a conversation, they can cast long shadows of faux-industriousness. It is telling that one recent research study found that members of high-performing teams are more likely to speak to each other on the phone, the very opposite of public communication.

Performative celebration is another hallmark of the pandemic. Once one person has reacted to a message with a clapping emoji, others are likely to join in until a virtual ovation is under way. At least emojis are fun. The arrival of a round-robin email announcing a promotion is as welcome as a rifle shot in an avalanche zone. Someone responds with congratulations, and then another recipient adds their own well wishes. As more people pile in, pressure builds on the non-responders to reply as well. Within minutes colleagues are telling someone they have never met in person how richly they deserve their new job.

Vaccine Hesitancy as part of a Plague Anthology

I’m not sure who’s behind this website, but it looks good. I appreciated the historical context that the most recent post provides on vaccine hesitancy in cultures other than my own.

Anti-vaxxers adjacent to conspiracy theorists are nuts, but there’s definitely a communications angle to ensuring the effective roll-out of life-saving vaccines.

Source: Vaccine Hesitancy – Egypt 1866 | Plague Anthology

In Egypt, around 1800, there are reports of 60 000 deaths each year. The Ottoman ruler, Muhammad Ali Pasha, began in 1819 to institute a plan for general vaccinations and the logical people to carry this out were the barber-surgeons, known and trusted by the locals. While the Bedouin had long been enthusiastic about protecting their children in this way, the fellahin (peasantry) was reluctant, largely because they did not trust the government and thought it was a way of “marking” their children for conscription. Religious objections and concerns about mixing Muslim and Christian blood also played their part, and attempts to bribe the vaccinators were not uncommon.

After the serious epidemic of 1836, official efforts intensified, with barber-vaccinators being trained and records kept. Gradually, the message got through and by 1850, the decline in child mortality was affecting the population statistics. The following anecdote, describes a perhaps surprising pocket of vaccine hesitancy.

Let's Settle This

This is good fun and, in fact, Laura and I used it to structure the upcoming Season 3 trailer for our podcast.

Source: Let's Settle This

It's time to settle the endless internet debates.

Signal's CEO on 'web3'

My first response to most new technological things is usually “cool, I wonder how I/we could use that?” With so-called ‘web3’, though, I’ve kind of thought it was bullshit.

This post by Moxie Marlinspike, CEO of Signal, goes a step further and includes opinions by someone who actually knows what they’re talking about.

I’m not sure what I think about the bit quoted below about not distributing infrastructure. In Marxist terms, isn’t that like not distributing, or providing ownership of, the means of production?

Source: Moxie Marlinspike >> Blog >> My first impressions of web3

If we do want to change our relationship to technology, I think we’d have to do it intentionally. My basic thoughts are roughly:

- We should accept the premise that people will not run their own servers by designing systems that can distribute trust without having to distribute infrastructure. This means architecture that anticipates and accepts the inevitable outcome of relatively centralized client/server relationships, but uses cryptography (rather than infrastructure) to distribute trust. One of the surprising things to me about web3, despite being built on “crypto,” is how little cryptography seems to be involved!

- We should try to reduce the burden of building software. At this point, software projects require an enormous amount of human effort. Even relatively simple apps require a group of people to sit in front of a computer for eight hours a day, every day, forever. This wasn’t always the case, and there was a time when 50 people working on a software project wasn’t considered a “small team.” As long as software requires such concerted energy and so much highly specialized human focus, I think it will have the tendency to serve the interests of the people sitting in that room every day rather than what we may consider our broader goals. I think changing our relationship to technology will probably require making software easier to create, but in my lifetime I’ve seen the opposite come to pass. Unfortunately, I think distributed systems have a tendency to exacerbate this trend by making things more complicated and more difficult, not less complicated and less difficult.

Update: Moxie Marlinspike has announced he’s stepping down as Signal CEO.

Pessimism of the intellect, optimism of the will.

Someone I once knew well used to cite Gramsci’s famous quotation: “Pessimism of the intellect, optimism of the will.” I’m having to channel that as I look forward to 2022.

Here’s the well-informed writer Charlie Stross on the ways he sees things panning out.

Source: Oh, 2022! | Charlie’s Diary

Climate: we're boned. Quite possibly the Antarctic ice shelves will be destabilized decades ahead of schedule, leading to gradual but inexorable sea levels rising around the world. This may paradoxically trigger an economic boom in construction—both of coastal defenses and of new inland waterways and ports. But the dismal prospect is that we may begin experiencing so many heat emergencies that we destabilize agriculture. The C3 photosynthesis pathway doesn't work at temperatures over 40 degrees celsius. The C4 pathway is a bit more robust, but not as many crops make use of it. Genetic engineering of hardy, thermotolerant cultivars may buy us some time, but it's not going to help if events like the recent Colorado wildfires become common.

Politics: we’re boned there, too. Frightened people are cautious people, and they don’t like taking in refugees. We currently see a wave of extreme right-wing demagogues in power in various nations, and increasingly harsh immigration laws all round. I can’t help thinking that this is the ruling kleptocracy battening down the hatches and preparing to fend off the inevitable mass migrations they expect when changing sea levels inundate low-lying coastal nations like Bangladesh. The klept built their wealth on iron and coal, then oil: they invested in real estate, inflated asset bubble after asset bubble, drove real estate prices and job security out of reach of anyone aged under 50, and now they’d like to lock in their status by freezing social mobility. The result is a grim dystopia for the young—and by “young” I mean anyone who isn’t aged, or born with a trust fund—and denial of the changing climate is a touchstone. The propaganda of the Koch network and the Mercer soft money has corrupted political discourse in the US, and increasingly the west in general. Australia and the UK have their own turbulent billionaires manipulating the political process.

Laptops aren't what they used to be

This guy went back to using a Lenovo ThinkPad T430 and explains why in this post. Over Christmas, I replaced some of the cosmetic parts of my X220, which is also from 2012.

It’s amazing how usable it still is, and I actively prefer the keyboard over the more modern ones.

Source: Why I went back to using a ThinkPad from 2012

I’ve been using this setup for over a month now, and it has been surprisingly adequate. Yes, opening Java projects in IntelliJ will make things slow, and to record my desktop with OBS and acceptable performance, I had to drop my screen resolution to 720p. I can’t expect everything to work super well on this machine, but for a computer that’s released almost 10 years ago, it’s still holding up well.

I’d like to thank Intel here for making this possible. The CPU innovation stagnation between 2012-2017 has resulted in 4 cores still being an acceptable low-end CPU in early 2022. Without this, my laptop would likely be obsolete by now.

Somebody please tell the travel industry there's a climate emergency

Utter madness.

Source: Near-empty flights crisscross Europe to secure landing slots | AP News

German giant Lufthansa said it would have to fly an additional 18,000 “unnecessary” flights through the winter to hold on to landing slots. Even if the holidays brought a big increase in passengers — marked by thousands of flight cancellations that left travelers stranded — the rest of the winter period could be slow as omicron surges worldwide.

Landing and departure slots for popular routes in the biggest airports are an extremely precious commodity in the industry, and to keep them, airlines have to guarantee a high percentage of flights. It is why loss-making flights have to be maintained to ensure companies keep their slots.

It was an accepted practice despite the pollution concerns, but the pandemic slump in flying put that in question. Normally, airlines had to use 80% of their given slots to preserve their rights, but the EU has cut that to 50% to ensure as few empty or near-empty planes crisscross the sky as possible.