It's impossible to 'hang out' on the internet, because it is not a place

I spend a lot of time online, but do I ‘hang out’ there? I certainly hang out with people playing video games, but that’s online rather than on the internet. Drew Austin argues that because of the amount of money and algorithms on the internet, it’s impossible to hang out there.

I’m not sure. It depends on your definition of ‘hanging out’ and it also depends on whether you’re just focusing on mainstream services, or whether you’re including the Fediverse and niche things such as School of the Possible. The latter, held every Friday by Dave Gray, absolutely is ‘hanging out’, but whether Zoom calls with breakout rooms count as the internet depends on semantics, I guess.

Is “hanging out” on the internet truly possible? I will argue: no it’s not. We’re bombarded with constant thinkpieces about various social crises—young people are sad and lonely; culture is empty or flat or simply too fragmented to incubate any shared meaning; algorithms determine too much of what we see. Some of these essays even note our failure to hang out. The internet is almost always an implicit or explicit villain in such writing but it’s increasingly tedious to keep blaming it for our cultural woes.

Perhaps we could frame the problem differently: The internet doesn’t have to demand our presence the way it currently does. It shouldn’t be something we have to look at all the time. If it wasn’t, maybe we’d finally be free to hang out.

[…]

How many hours have been stolen from us? With TV, we at least understood ourselves to be passive observers of the screen, but the interactive nature of the internet fostered the illusion that message boards, Discord servers, and Twitter feeds are digital “places” where we can in fact hang out. If nothing else, this is a trick that gets us to stick around longer. A better analogy for online interaction, however, is sitting down to write a letter to a friend—something no one ever mistook for face-to-face interaction—with the letters going back and forth so rapidly that they start to resemble a real-time conversation, like a pixelated image. Despite all the spatial metaphors in which its interfaces have been dressed up, the internet is not a place.

Source: Kneeling Bus

Image: Wesley Tingey

'Wet streets cause rain' stories

First things first: the George Orwell quotation below is spurious, as the author of this article, David Cain, points out at the end of it. The point is that it sounds plausible, so we take it on trust. It confirms our worldview.

We live in a web of belief, as W.V. Quine put it, meaning that we easily accept things that confirm our core beliefs. And then, with beliefs that are more peripheral, we pick them up and put them down at no great cost. Finding out that the capital of Burkina Faso is Ouagadougou and not Bobo-Dioulasso makes no practical difference to my life. It would make a huge difference to the residents of either city, however.

I don’t like misinformation, and I think we’re in quite a dangerous time in terms of how it might affect democratic elections. However, it has always been so. Gossip, rumour, and straight up lies have swayed human history. The thing is that, just as we are able to refute poor journalism and false statements on social networks about issues we know a lot about, so we need to be a bit skeptical about things outside of our immediate knowledge.

After all, as Cain quotes Michael Crichton as saying, there are plenty of ‘wet streets cause rain’ stories out there, getting causality exactly backwards — intentionally or otherwise.

Consider the possibility that most of the information being passed around, on whatever topic, is bad information, even where there’s no intentional deception. As George Orwell said, “The most fundamental mistake of man is that he thinks he knows what’s going on. Nobody knows what’s going on.”

Technology may have made this state of affairs inevitable. Today, the vast majority of a person’s worldview is assembled from second-hand sources, not from their own experience. Second-hand knowledge, from “reliable” sources or not, usually functions as hearsay – if it seems true, it is immediately incorporated into one’s worldview, usually without any attempt to substantiate it. Most of what you “know” is just something you heard somewhere.

[…]

It makes perfect sense, if you think about it, that reporting is so reliably unreliable. Why do we expect reporters to learn about a suddenly newsworthy situation, gather information about it under deadline, then confidently explain the subject to the rest of the nation after having known about it for all of a week? People form their entire worldviews out of this stuff.

[…]

People do know things though. We have airplanes and phones and spaceships. Clearly somebody knows something. Human beings can be reliable sources of knowledge, but only about small slivers of the whole of what’s going on. They know things because they deal with their sliver every day, and they’re personally invested in how well they know their sliver, which gives them constant feedback on the quality of their beliefs.

Source: Raptitude

Dividers tell the story of how they’ve renovated their houses, becoming architects along the way. Continuers tell the story of an august property that will remain itself regardless of what gets built.

This long article in The New Yorker is based around the author wondering whether the fun he’s had playing with his four-year-old will be remembered by his son when he grows up.

Wondering whether you are the same person at the start and end of your life was a central theme of a ‘Mind, Brain, and Personal Identity’ course I did as part of my Philosophy degree around 22 years ago. I still think about it. On the one hand is the Ship of Theseus argument, where you can one-by-one replace all of the planks of a ship, but it’s still the same ship. If you believe it’s the same ship, and believe that you’re the same person as when you were younger, then the author of this article would call you a ‘Continuer’.

On the other hand, you might think that there are important differences between the person you are now and the person you were when you were younger. If, for example, the general can’t remember ‘going over the top’ as a young man, despite still having the medal to prove it, is he the same person? If you don’t think so, then perhaps you are a ‘Divider’.

I don’t consider it so clear-cut. We tell stories about ourselves and others, and these shape how we think. For example, going to therapy five years ago helped me ‘remove the mask’ and reconsider who I am. That involved reframing some of the experiences in my life and realising that I am this kind of person rather than that kind of person.

It’s absolutely fine to have seasons in your life. In fact, I’m pretty sure there’s some ancient wisdom to that effect?

Are we the same people at four that we will be at twenty-four, forty-four, or seventy-four? Or will we change substantially through time? Is the fix already in, or will our stories have surprising twists and turns? Some people feel that they’ve altered profoundly through the years, and to them the past seems like a foreign country, characterized by peculiar customs, values, and tastes. (Those boyfriends! That music! Those outfits!) But others have a strong sense of connection with their younger selves, and for them the past remains a home. My mother-in-law, who lives not far from her parents’ house in the same town where she grew up, insists that she is the same as she’s always been, and recalls with fresh indignation her sixth birthday, when she was promised a pony but didn’t get one. Her brother holds the opposite view: he looks back on several distinct epochs in his life, each with its own set of attitudes, circumstances, and friends. “I’ve walked through many doorways,” he’s told me. I feel this way, too, although most people who know me well say that I’ve been the same person forever.

[…]

The philosopher Galen Strawson believes that some people are simply more “episodic” than others; they’re fine living day to day, without regard to the broader plot arc. “I’m somewhere down towards the episodic end of this spectrum,” Strawson writes in an essay called “The Sense of the Self.” “I have no sense of my life as a narrative with form, and little interest in my own past.”

[…]

John Stuart Mill once wrote that a young person is like “a tree, which requires to grow and develop itself on all sides, according to the tendency of the inward forces which make it a living thing.” The image suggests a generalized spreading out and reaching up, which is bound to be affected by soil and climate, and might be aided by a little judicious pruning here and there.

Source: The New Yorker

Can’t access it? Try Pocket or Archive Buttons

Source: 2024 Drone Photo Awards Nominees

The iPhone effect, if it was ever real in the first place, is certainly not real now.

It’s announcement time at Apple’s WWDC. And apart from trying to rebrand AI as “Apple Intelligence” I haven’t seen many people get very excited about it. MKBHD has an overview if you want to get into the details. I just use macOS without iCloud because everything works and my Mac Studio is super-fast.

Ryan Broderick has a word for Apple fanboys, who seem to think that everything the company touches turns to gold. It seems like the Vision Pro hasn’t brought VR mainstream and, after the more innovative Steve Jobs era, Apple is happier to be a luxury brand that plays it relatively safe.

If you press Apple fanboys about their weird revisionist history, they usually pivot to the argument that while iOS’s marketshare has essentially remained flat for a decade, their competitors copy what they do and that trickles down into popular culture from there. Which I’m not even sure is true either. Android had mobile payments three years before Apple, had a smartwatch a year before, a smart speaker a year before, and launched a tablet around the same time as the iPad. We could go on and on here.

And, I should say, I don’t actually think Apple sees themselves as the great innovator their Gen X blogger diehards do. In the 2010s, they shifted comfortably from a visionary tastemaker, at least aesthetically, into something closer to an airport lounge or a country club for consumer technology. They’ll eventually have a version of the new thing you’ve heard about, once they can rebrand it as something uniquely theirs. It’s not VR, it’s “spatial computing,” it’s not AI, it’s “Apple Intelligence”. But they’re not going to shake the boat. They make efficiently-bundled software that’s easy to use (excluding iPadOS) and works well across their nice-looking and easy-to-use devices (excluding the iPad). Which is why Apple Intelligence is not going to be the revolution the AI industry has been hoping for. The same way the Vision Pro wasn’t. The iPhone effect, if it was ever real in the first place, is certainly not real now.

Source: Garbage Day

The latest Hardcore History just dropped

I could listen to Dan Carlin read the phone book all day, so the announcement that his latest multi-part (and multi-hour!) series for the Hardcore History podcast has started is great news!

So, after almost two decades of teasing it, we finally begin the Alexander the Great saga.

I have no idea how many parts it will turn out to be, but we are calling the series “Mania for Subjugation” and you can get the first installment HERE. (of course you can also auto-download it through your regular podcast app).

[…]

And what a story it is! My go-to example in any discussion about how truth is better than fiction. It is such a good tale and so mind blowing that more than 2,300 years after it happened our 21st century people still eagerly consume books, movies, television shows and podcasts about it. Alexander is one of the great apex predators of history, and he has become a metaphor for all sorts of Aesop fables-like morals-to-the-story about how power can corrupt and how too much ambition can be a poison.

Source: Look Behind You!

The logical conclusion of rich, isolated computer programmers having ketamine orgies with each other

Ryan Broderick with a reality check about OpenAI and GenAI in general:

I think this [Effective Altruists vs effective accelerationists debate] is all very silly. I also think this is the logical conclusion of rich, isolated computer programmers having ketamine orgies with each other. But it does, unfortunately, underpin every debate you’re probably seeing about the future of AI. Silicon Valley’s elite believe in these ideas so devoutly that Google is comfortable sacrificing its own business in pursuit of them. Even though EA and e/acc are effectively just competing cargo cults for a fancy autocorrect. Though, they also help alleviate some of the intense pressure huge tech companies are under to stay afloat in the AI arms race. Here’s how it works.

[…]

Analysts told The Information last year that OpenAI’s ChatGPT is possibly costing the company up to $700,000 a day to operate. Sure, Microsoft invested $13 billion in the company and, as of February, OpenAI was reportedly projecting $2 billion in revenue, but it’s not just about maintaining what you’ve built. The weird nerds I mentioned above have all decided that the finish line here is “artificial general intelligence,” or AGI, a sentient AI model. Which is actually very funny because now every major tech company has to burn all of their money — and their reputations — indefinitely, as they compete to build something that is, in my opinion, likely impossible (don’t @ me). This has largely manifested as a monthly drum beat of new AI products no one wants rolling out with increased desperation. But you know what’s cheaper than churning out new models? “Scaring” investors.

[…]

This is why OpenAI lets CEO Sam Altman walk out on stages every few weeks and tell everyone that its product will soon destroy the economy forever. Because every manager and executive in America hears that and thinks, “well, everyone will lose their jobs but me,” and continues paying for their ChatGPT subscription. As my friend Katie Notopoulos wrote in Business Insider last week, it’s likely this is the majority of what Altman’s role is at OpenAI. Doomer in chief.

[…]

I’ve written this before, but I’m going to keep repeating it until the god computer sends me to cyber hell: The “two” “sides” of the AI “debate” are not real. They both result in the same outcome — an entire world run by automations owned by the ultra-wealthy. Which is why the most important question right now is not, “how safe is this AI model?” It’s, “do we even need it?”

Source: Garbage Day

Image: Google DeepMind

In the English language, a human alone has distinction while all other living beings are lumped with the nonliving “its.”

I posted on social media recently that I want more verbs and fewer nouns in my life. This article, via Dense Discovery, backs this sentiment up, with reference to the indigenous heritage of the author of Braiding Sweetgrass.

Grammar, especially our use of pronouns, is the way we chart relationships in language and, as it happens, how we relate to each other and to the natural world.

[…]

We have a special grammar for personhood. We would never say of our late neighbor, “It is buried in Oakwood Cemetery.” Such language would be deeply disrespectful and would rob him of his humanity. We use instead a special grammar for humans: we distinguish them with the use of he or she, a grammar of personhood for both living and dead Homo sapiens. Yet we say of the oriole warbling comfort to mourners from the treetops or the oak tree herself beneath whom we stand, “It lives in Oakwood Cemetery.” In the English language, a human alone has distinction while all other living beings are lumped with the nonliving “its.”

There are words for states of being that have no equivalent in English. The language that my grandfather was forbidden to speak is composed primarily of verbs, ways to describe the vital beingness of the world. Both nouns and verbs come in two forms, the animate and the inanimate. You hear a blue jay with a different verb than you hear an airplane, distinguishing that which possesses the quality of life from that which is merely an object.

[…]

Linguistic imperialism has always been a tool of colonization, meant to obliterate history and the visibility of the people who were displaced along with their languages… Because we speak and live with this language every day, our minds have also been colonized by this notion that the nonhuman living world and the world of inanimate objects have equal status. Bulldozers, buttons, berries, and butterflies are all referred to as it, as things, whether they are inanimate industrial products or living beings.

Source: Orion Magazine

Oblivion doesn’t just mean eradication: it is erasure

If you haven’t come across The New Design Congress before, I highly suggest reading their essays and research notes, and subscribing to their newsletter. The following is an excerpt from their most recent issue:

It is not only a gluttony for energy that animates Big and Small Tech, but also social legitimacy. Here, oblivion doesn’t just mean eradication: it is erasure. This manifests in the social burden of the so-called ‘unintended consequences’ of technology. There is much concern to hold regarding the deployment of digitised forms of identification, including so-called decentralised and self-sovereign ones. Feasible only at immense scale, their proposed reliance on power-hungry blockchains so susceptible to scams, frauds and wastefulness is but one issue. Digital identities sketch schizophrenic futures made of radical self-custody combined with naive market-based ecosystems of private identity managers. This assetisation is backed by a trust mechanism bound to become the mother of all social engineering attack vectors, relying as it does on idealist claims of identity. If trustworthiness within a digital identity system can be defined as that which is necessary to permit access, it can also be defined as that which necessarily breaks security policies. In the US and UK, voter ID is already an efficient weapon for reactionary power structures to fight off democratic participation, particularly of minorities. No actors in the field have seriously reckoned with such socio-technical weaponisation of their tech stack.

As we etched in the previous Cable, another world is possible. One where new modes of self- and interpersonal recognition are developed from a posture of conciliation, rather than a fragile and vampiric extraction of socially-shared goods. The challenge now is sifting through the gold rush, to find systems that are capable of fulfilling this promise.

Source: CABLE 2024/03-05

65% of UK adults aged 18-35 support “a strong leader who doesn’t have to bother with parliamentary elections”

I wouldn’t usually link to UnHerd, but the figure quoted here is taken from a tweet by Rory Stewart, who I do trust. I’m hugely concerned about creeping authoritarianism, and so while I’m dead against the Tories, I can’t see how an even further-right party in the guise of Reform UK is something to be celebrated.

While I get the desire to have someone to sort things out, the way we do so is together using systems. Not by electing a tough-talking figurehead who dispenses with elections.

It is no wonder that, after a generation of Conservative Party rule, 46% of British adults now support “a strong leader who doesn’t have to bother with parliamentary elections”, a figure which rises to 65% of those aged 18-35. By Gove’s definition, the majority of the electorate will soon be composed of extremists: this widening gulf between the governing and the governed is not a recipe for political stability.

Source: UnHerd

Image: Cold War Steve

Podcasts worth listening to

TIME has a list of the ‘best podcasts of 2024 so far’. 99% Invisible is great, but my favourite podcasts are nowhere to be seen on here, not to mention my own with Laura, The Tao of WAO! I love podcasts, and listen to them while running, in the gym, washing dishes, in the car, mowing the lawn… wherever.

You can download an OPML file (?) of all of the shows I subscribe to via my Open Source app of choice, AntennaPod. My favourites at the moment though, in alphabetical order, are:

- Dan Carlin’s Hardcore History

- No Such Thing As A Fish

- The Art of Manliness

- The Rest is Politics

- You Are Not So Smart

It’s getting increasingly difficult to discover new treasures in the cacophonous world of podcasting. There are a lot of shows—many of them not good. It seems every week a new celebrity announces a podcast in which they ask other celebrities out-of-touch questions or revisit their own network sitcom heyday. And studios continue to scrounge for the most morally dubious true-crime topics they can find.

Source: TIME

Image: C D-X

The theory of 'a rising tide lifts all boats' does not work when you allow the people with the most influence to buy their way out of the water

I agree with this so much. I’ve had jobs where I’ve been entitled to private health insurance, which I’ve turned down because I want to have a stake in the success and continued existence of the National Health Service. I’d love a world where I don’t have to have a car because public transport is ubiquitous. I would never send my kids to private school, and am delighted that Labour have announced that, if they get into power, they’ll raise money for public schools from taxing private schools like the businesses they are.

One of the most direct ways to improve a flawed system is simply to end the ability of rich and powerful people to exclude themselves from it. If, for example, you outlawed private schools, the public schools would get better. They would get better not because every child deserves to have a quality education, but rather because it would be the only way for rich and powerful people to ensure that their children were going to good schools. The theory of “a rising tide lifts all boats” does not work when you allow the people with the most influence to buy their way out of the water. It would be nice if we fixed broken systems simply because they are broken. In practice, governments are generally happy to ignore broken things if they do not affect people with enough power to make the government listen. So the more people that we push into public systems, the better.

Rich kids should go to public schools. The mayor should ride the subway to work. When wealthy people get sick, they should be sent to public hospitals. Business executives should have to stand in the same airport security lines as everyone else. The very fact that people want to buy their way out of all of these experiences points to the reason why they shouldn’t be able to. Private schools and private limos and private doctors and private security are all pressure release valves that eliminate the friction that would cause powerful people to call for all of these bad things to get better. The degree to which we allow the rich to insulate themselves from the unpleasant reality that others are forced to experience is directly related to how long that reality is allowed to stay unpleasant. When they are left with no other option, rich people will force improvement in public systems. Their public spirit will be infinitely less urgent when they are contemplating these things from afar than when they are sitting in a hot ER waiting room for six hours themselves.

Source: How Things Work

Image: Daniel Sinoca

TikTok as spectacle

Audrey Watters links to this post by Rob Horning which talks about sports, social media, AI, and Guy Debord. So pretty much catnip for me.

I’m just going to share the part about TikTok and Debord’s ‘spectacle’. It’s worth reading the rest of it for how Horning then goes on to apply this to LLMs such as GPT-4o and the semblance of doing rather than simply watching and consuming.

The way TikTok conflates experience with voyeurism makes it a somewhat clear demonstration of Guy Debord’s “society of the spectacle.” Debord argues that under the conditions of late 20th century capitalism — conditions of media centricity and monopoly that have only intensified into our century — spectacle and lived experience are in a complex dialectic that sustains a generalized alienation and a universal reification. “It is not just that the relationship to commodities is now plain to see, commodities are now all that there is to see; the world we see is the world of the commodity.” Debord concludes that individuals are “condemned to the passive acceptance of an alien everyday reality” and are driven to “resorting to magical devices” to “entertain the illusion” of “reacting to this fate.” TikTok could be considered as one of those magical devices (along with the phone in its entirety) that manages that dialectic. Under the guise of “entertainment,” passivity reappears to the entertained individual as a kind of perfected agency; alienation is redeemed as the requisite precursor to consumer delectation.

“The spectacle is essentially tautological,” Debord writes, “for the simple reason that its means and ends are identical. It is the sun that never sets on the empire of modern passivity. It covers the entire globe, basking in the perpetual warmth of its own glory.”

Source: Internal exile

Absurd design

We were using the CC0-licensed Humaaans for some work this week when the client decided they didn’t particularly like them. When searching for alternatives, I stumbled across Absurd Designs, which doesn’t work any better, but which I’ve used for a couple of posts on my personal blog.

What about absurd illustrations for your projects? Take every user on an individual journey through their own imagination.

Source: Absurd Designs

The AI Egg

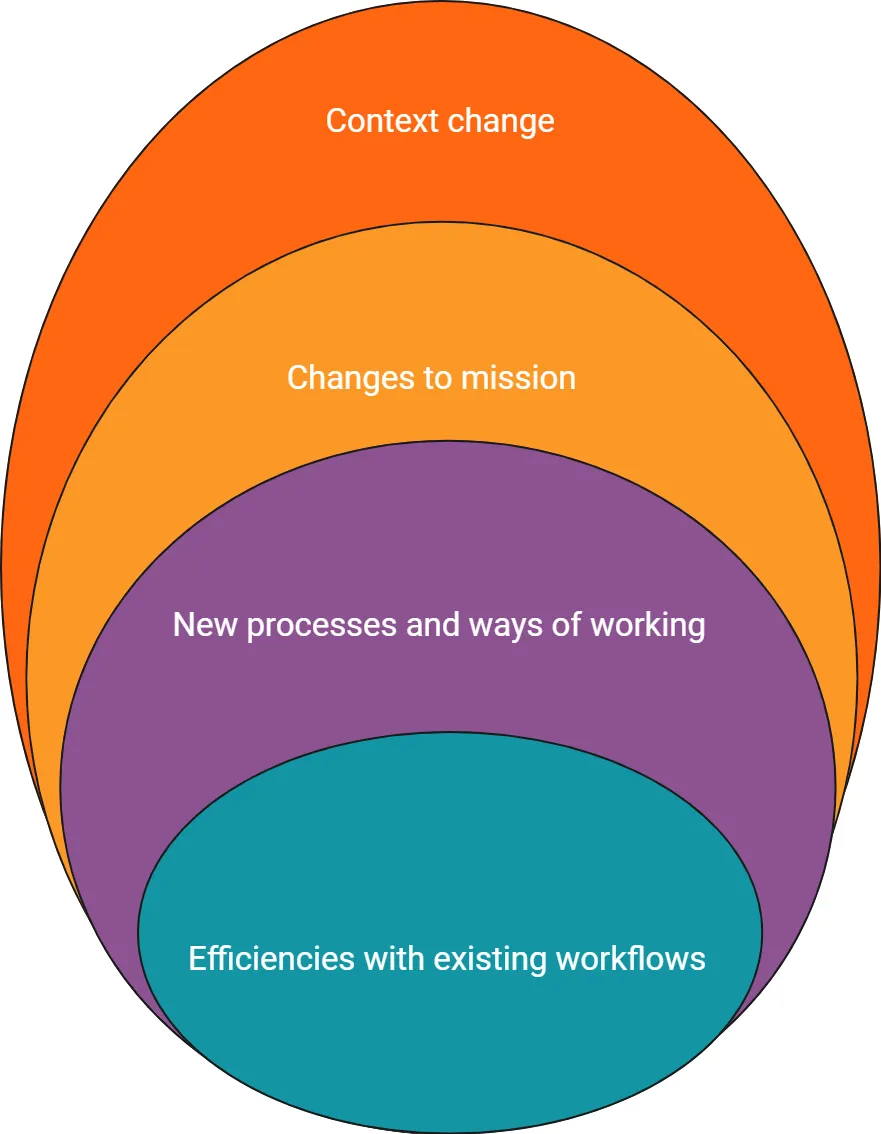

Dan Sutch, who I’ve known at this point in various roles for around 17 years, introduces the ‘AI Egg’ to make sense of the different perspectives and discussion contexts for Generative AI.

I think it bears more than a passing resemblance to the SAMR model, which focuses on educational technology transformation. I talked about that last year in relation to AI. What can I say, it’s a curse being ahead of the curve.

We’ve held thousands of conversations with charities, trusts and foundations, digital agencies and community groups discussing the opportunities and challenges of Generative AI (GenAI). One thing we’ve learnt is that the scale and speed of the changes means there are thousands more conversations to have (and much more action too). The reason is that there are many discussions, debates (and again, action!) to be had at multiple levels, because of the scale of the implications of GenAI.

Source: CAST Writers

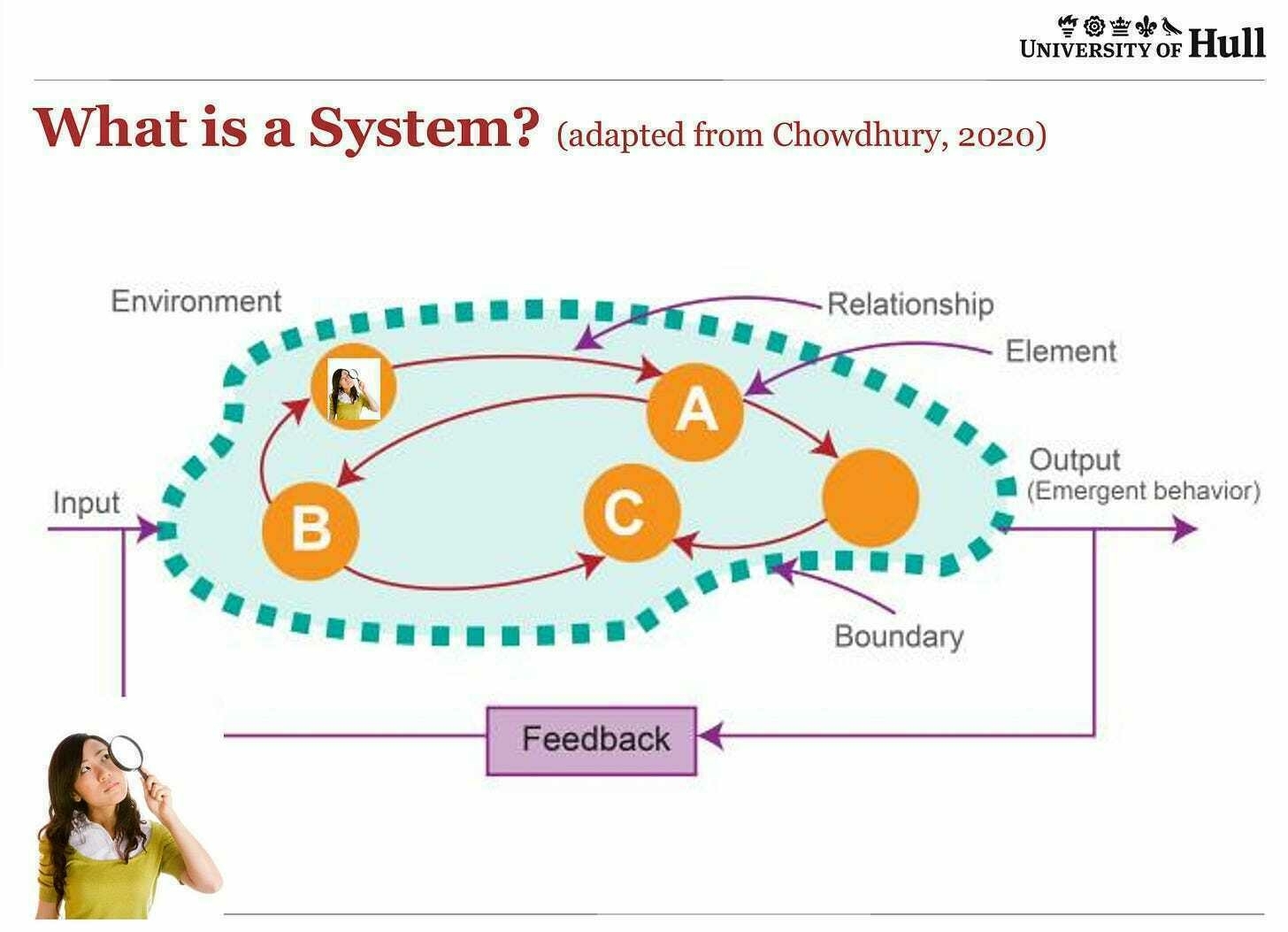

What is systems thinking?

I only found out about this online event featuring Gerald Midgley shortly before it occurred. I couldn’t make it, but I’m glad there’s a recording so that I can watch it at some point as part of my learning around systems thinking.

In this post on his blog, Andrew Curry, whose newsletter is well worth subscribing to, summarises the main points that Midgley made.

I’m a member of the Agri-Foods for Net Zero network, and it runs a good series of knowledge sharing events. (I’ve written about AFNZ here before). Last month it invited one of Britain’s leading systems academics, Gerald Midgley, to do an introductory talk on using systems thinking to explore complex problems.

[…] The questions he addressed were:

- What are highly complex problems?

- What is systems thinking?

- Different systems approaches for different purposes

Source: The Next Wave

Alone time

I haven’t spent enough time alone recently. I need to get back out into the mountains with my tent.

Neuroscientists have discovered that, regardless of your clinical label, those of us who prefer solitude have something in common. We tend to have low levels of oxytocin in our brains, and higher levels of vasopressin. That’s the recipe for introverts and recluses, even hermits. Michael Finkel talks about this brain profile in his book The Stranger in The Woods, about a hermit named Christopher Knight. He lived in the backwoods of Maine for nearly three decades, living off goods pillaged from cabins and vacation homes. He terrified residents, but nobody could ever find him.

When police finally found Knight, they were shocked. The guy was in nearly perfect mental and physical health. Locals didn’t believe his story. They expected the Unabomber. Instead, Knight turned out to be a pleasant guy who loved reading. He was easy to get along with. He had no grudge against society. Therapists got exhausted trying to diagnose him and gave up. “I diagnose him as a hermit,” they said.

[…]

Society doesn’t leave hermits alone. They’re doing everything they can to force social interaction on everyone. They insist it’s good for you, ignoring the evidence that solitude can benefit people, lowering their blood pressure and even encouraging brain cell growth. It just so happens that social activity drives this twisted economy.

It makes sense why you want to be alone.

Source: OK Doomer

The effort required to maintain internally consistent and intellectually honest positions in the current environment is daunting

Albert Wenger, the only Venture Capitalist I pay any attention to, writes on his blog that… he misses writing on his blog. He talks about a couple of reasons for this, the first of which is the usual excuse of “being too busy”.

It’s the second reason that interests me, though, especially as I feel the same futility:

[T]he world is continuing to descend back into tribalism. And it has been exhausting trying to maintain a high rung approach to topics amid an onslaught of low rung bullshit. Whether it is Israel-Gaza, the Climate Crisis or Artificial Intelligence, the online dialog is dominated by the loudest voices. Words have been rendered devoid of meaning and reduced to pledges of allegiance to a tribe. I start reading what people are saying and often wind up feeling isolated and exhausted. I don’t belong to any of the tribes nor would I want to. But the effort required to maintain internally consistent and intellectually honest positions in such an environment is daunting. And it often seems futile.

Source: Continuations

Image: Timon Studler

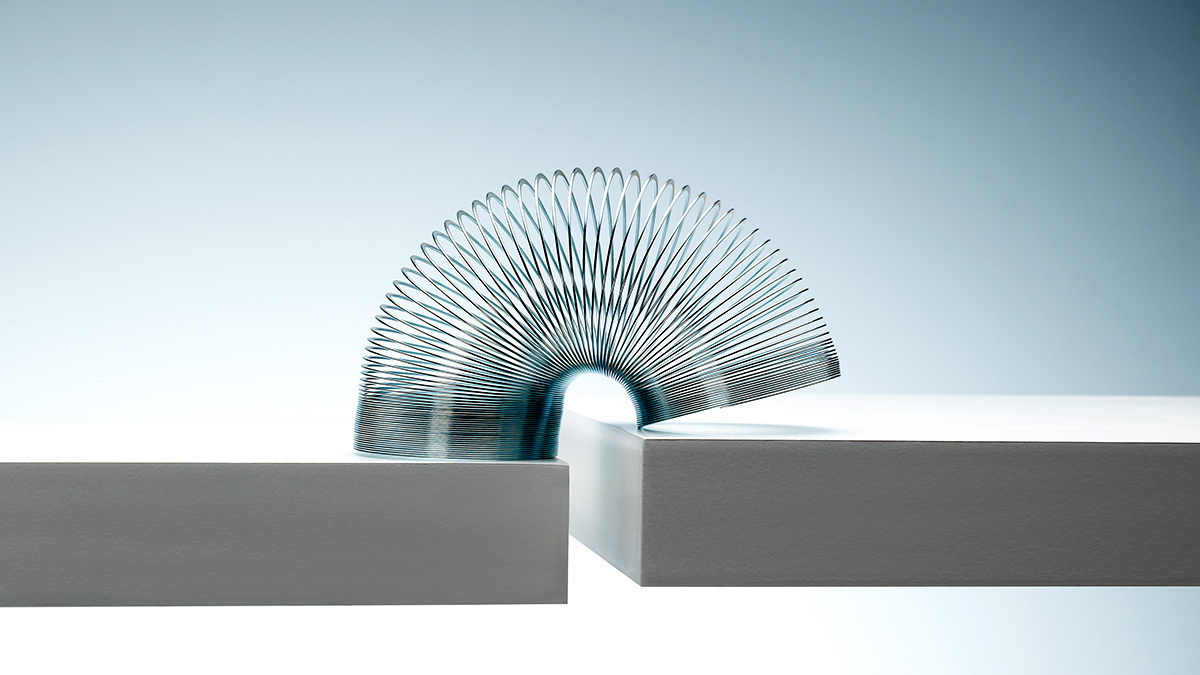

3 strategies to counter the unseen costs of boundary work within organisations

This article focuses on research that reveals people who do ‘boundary work’ within organisations, that is to say, individuals who span different silos, are more likely to suffer burnout and exhibit negative social behaviours.

The researchers used “field data, surveys, and experiments involving more than 2,000 working adults across two countries” and found that there are three ways that organisations can reap the benefits of this boundary work while mitigating the downsides:

- Strategically integrate cross-silo collaboration into formal roles (i.e. acknowledge their role as “cross-team, cross-function collaborator[s]”)

- Provide adequate resources (e.g. training programmes and tools for collaboration, but also reward and recognition)

- Develop multifaceted check-in mechanisms and provide opportunities to disengage (i.e. gain feedback in multiple ways to gauge when boundary spanners need additional space and/or support)

While past research has documented many benefits of boundary-spanning, we suspected that individuals collaborating across silos may be faced with higher levels of cognitive and emotional demands, which could lead to higher levels of burnout. We also wanted to understand if the exhaustion and burnout they faced may lead to abusive behavior toward others.

[…]

Cross-silo collaboration is a double-edged sword in the modern workplace. While it undeniably serves as a catalyst for expedited coordination and innovation, it can adversely affect the well-being of those who engage in it. The good news is that organizations can adopt a multifaceted approach to support their boundary-spanning employees.

Source: Harvard Business Review

Just because we cannot imagine a future does not mean it cannot happen

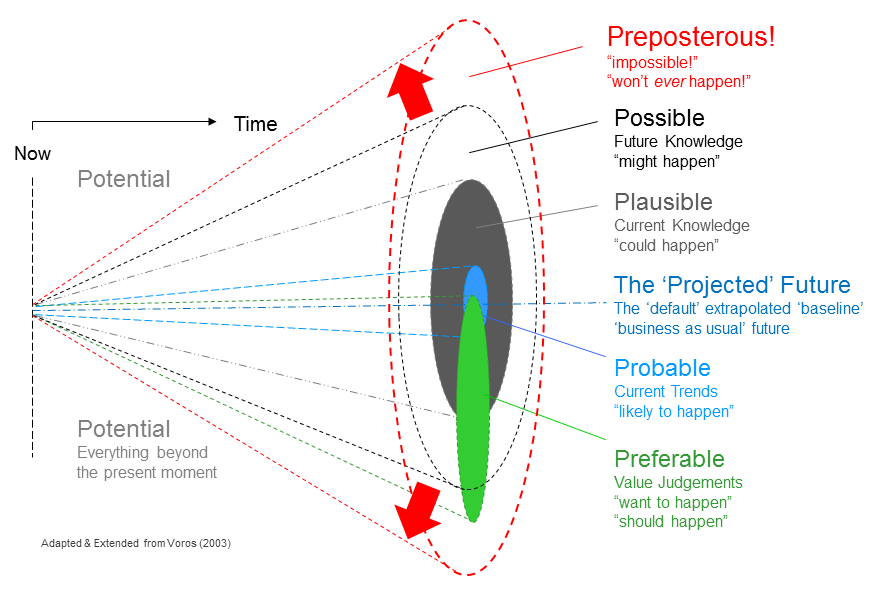

I came across this post yesterday, which interested me primarily for the graphic. The author didn’t specifically acknowledge the source, but I found Joseph Voros' blog, in which he explains how he came up with what he calls The Futures Cone:

The above descriptions are best considered not as rigidly-separate categories, but rather as nested sets or nested classes of futures, with the progression down through the list moving from the broadest towards more narrow classes, ultimately to a class of one — the ‘projected’. Thus, every future is a potential future, including those we cannot even imagine — these latter are outside the cone, in the ‘dark’ area, as it were. The cone metaphor can be likened to a spotlight or car headlight: bright in the centre and diffusing to darkness at the edge — a nice visual metaphor of the extent of our futures ‘vision’, so to speak. There is a key lesson to the listener when using this metaphor—just because we cannot imagine a future does not mean it cannot happen…

Source: The Voroscope