- More workers want to slow down to get things right — "In reality, 61% of workers said they wanted to “slow down to get things right” while only 41%* wanted to “go fast to achieve more.” The divide was even starker among older workers."

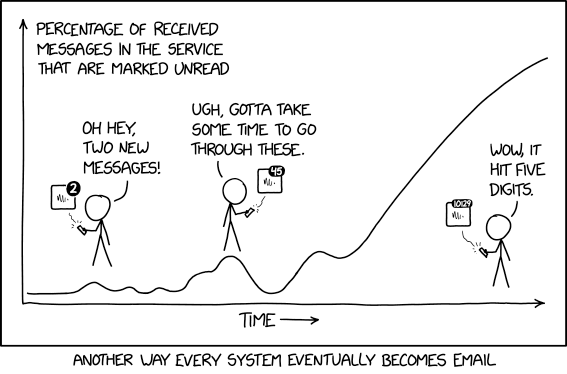

- Workers strongly value uninterrupted focus at work, but most will make an exception to help others — "The results suggest we need to be more thoughtful about when we break our concentration, or ask others to do so. When people know they are helping others in a meaningful way, they tend to be okay with some distraction. But the busywork of meetings, alerts, and emails can quickly disrupt a person’s flow—one of the most important values we polled."

- Most workers have slightly more trust in people closest to the work, rather than people in upper management — "Among all respondents, 53% trusted people “closest to the work,” while only 45% trusted “upper management.” You might assume that younger workers would be the most likely to trust peers over management, but in fact, the opposite was true."

- Workers are torn between idealism and pragmatism — "It’s tempting to assume that addressing just one piece—like taking a stand on societal issues—will necessarily get in the way of the work itself. But our research suggests we can begin to solve the two in tandem, as more equality, inclusion, and diversity tends to come hand-in-hand with a healthier mindset about work."

- Health effects of job insecurity (IZA) — "Workers’ health is not just a matter for employees and employers, but also for public policy. Governments should count the health cost of restrictive policies that generate unemployment and insecurity, while promoting employability through skills training."

- Will your organization change itself to death? (opensource.com) — "Sometimes, an organization returns to the same state after sensing a stimulus. Think about a kid's balancing doll: You can push it and it'll wobble around, but it always returns to its upright state... Resilient organizations undergo change, but they do so in the service of maintaining equilibrium."

- Your Brain Can Only Take So Much Focus (HBR) — "The problem is that excessive focus exhausts the focus circuits in your brain. It can drain your energy and make you lose self-control. This energy drain can also make you more impulsive and less helpful. As a result, decisions are poorly thought-out, and you become less collaborative."

- Slow Thought is marked by peripatetic Socratic walks, the face-to-face encounter of Levinas, and Bakhtin’s dialogic conversations

- Slow Thought creates its own time and place

- Slow Thought has no other object than itself

- Slow Thought is porous

- Slow Thought is playful

- Slow Thought is a counter-method, rather than a method, for thinking as it relaxes, releases and liberates thought from its constraints and the trauma of tradition

- Slow Thought is deliberate

Pre-committed defaults

Uri from Atoms vs Bits identifies a useful trick to quell indecisiveness. He calls it a ‘release valve principle’, but I prefer what he calls it in the body text: a pre-committed default.

Basically, it’s knowing what you’re going to do if you can’t decide on something. This can be particularly useful if you’re torn over whether to accept short-term pain for long-term gain.

One thing that is far too easy to do is get into mental loops of indecision, where you're weighing up options against options, never quite knowing what to do, but also not-knowing how to get out the loop.

Source: Release Valve Principles | Atoms vs Bits

[…]

There’s a partial solution to this which I call “release valve principles”: basically, a pre-committed default decision rule you’ll use if you haven’t decided something within a given time frame.

I watched a friend do this when we were hanging out in a big city, vaguely looking for a bookshop we could visit but helplessly scrolling through googlemaps to try to find a good one; after five minutes he said “right” and just started walking in a random direction. He said he has a principle where if he hasn’t decided after five minutes where to go he just goes somewhere, instead of spending more time deliberating.

[…]

The release valve principle is an attempt to prod yourself into doing what your long-term self prefers, without forcing you into always doing X or never doing Y – it just kicks in when you’re on the fence.
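Because a release valve principle is, in effect, a decision rule with a time limit attached, it can be sketched in a few lines of code. What follows is my own minimal Python illustration, not anything from Uri's post: the function name `decide_with_release_valve` and its parameters are hypothetical. It keeps deliberating until either a choice emerges or the time frame runs out, at which point it falls back to the default you committed to beforehand.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def decide_with_release_valve(
    deliberate: Callable[[], Optional[T]],
    default: T,
    time_limit_s: float,
    clock: Callable[[], float] = time.monotonic,
) -> T:
    """Deliberate until a choice emerges or the time limit runs out.

    Hypothetical helper: `deliberate` is called repeatedly and returns None
    while still undecided. If no choice has been made within `time_limit_s`
    seconds, the pre-committed `default` is returned instead.
    """
    start = clock()
    while clock() - start < time_limit_s:
        choice = deliberate()
        if choice is not None:
            # A decision was reached in time, so use it.
            return choice
    # Time's up: stop weighing options and take the pre-committed default.
    return default
```

For the bookshop example, `default` would be "start walking in a random direction" and `time_limit_s` would be 300 (five minutes). The important design choice is that the default is fixed before deliberation begins, so falling back to it costs no further thought.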

Image: Unsplash

Everyone hustles his life along, and is troubled by a longing for the future and weariness of the present

Thanks to Seneca for today's quotation, taken from his still-all-too-relevant On the Shortness of Life. We're constantly being told that we need to 'hustle' to make it in today's society. However, as Dan Lyons points out in a book I'm currently reading called Lab Rats: How Silicon Valley Made Work Miserable for the Rest of Us, we're actually being 'immiserated' for the benefit of venture capitalists.

As anyone who's read Daniel Kahneman's book Thinking, Fast and Slow will know, there are two dominant types of thinking:

The central thesis is a dichotomy between two modes of thought: "System 1" is fast, instinctive and emotional; "System 2" is slower, more deliberative, and more logical. The book delineates cognitive biases associated with each type of thinking, starting with Kahneman's own research on loss aversion. From framing choices to people's tendency to replace a difficult question with one which is easy to answer, the book highlights several decades of academic research to suggest that people place too much confidence in human judgement.

Wikipedia

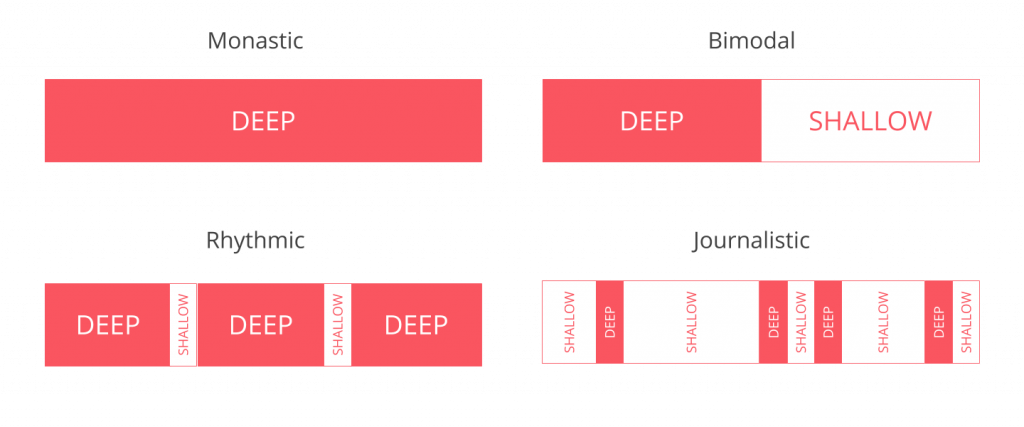

Cal Newport, in a book of the same name, calls 'System 2' something else: Deep Work. Seneca, Kahneman, and Newport are all basically saying the same thing but with different emphasis. We need to allow ourselves time for the slower, more deliberative work that makes us uniquely human.

That kind of work doesn't happen when you're being constantly interrupted, nor when you're in an environment that isn't comfortable, nor when you're fearful that your job may not exist next week. A post for the Nuclino blog entitled Slack Is Not Where 'Deep Work' Happens uses a potentially apocryphal tale to illustrate the point:

On one morning in 1797, the English poet Samuel Taylor Coleridge was composing his famous poem Kubla Khan, which came to him in an opium-induced dream the night before. Upon waking, he set about writing until he was interrupted by an unknown person from Porlock. The interruption caused him to forget the rest of the lines, and Kubla Khan, only 54 lines long, was never completed.

Nuclino blog

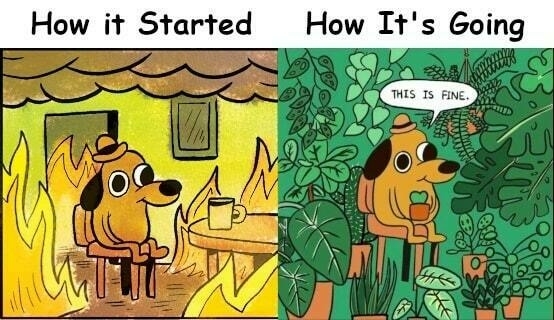

What we're actually doing by forcing everyone to use synchronous tools like Slack is a form of journalistic rhythm — but without everyone being synced-up:

If you haven't read Deep Work, never fear, because there's an epic article by Fadeke Adegbuyi for Doist entitled The Complete Guide to Deep Work which is particularly useful:

This is an actionable guide based directly on Newport’s strategies in Deep Work. While we fully recommend reading the book in its entirety, this guide distills all of the research and recommendations into a single actionable resource that you can reference again and again as you build your deep work practice. You’ll learn how to integrate deep work into your life in order to execute at a higher level and discover the rewards that come with regularly losing yourself in meaningful work.

Fadeke Adegbuyi

Lots of articles and podcast episodes say they're 'actionable' or provide 'tactics' for success. I have to say this one delivers. I'd still read Newport's book, though.

Interestingly, despite all of the ridiculousness spouted by VCs, people are pretty clear about how they can do their best work. After a Dropbox survey of 500 US-based workers in the knowledge economy, Ben Taylor outlines four 'lessons' they've learned:

I think we need to reclaim workplace culture from the hustlers, shallow thinkers, and those focused on short-term profit. Let's reflect on how things actually work in practice. As Nassim Nicholas Taleb says about being 'antifragile', let's "look for habits and rules that have been around for a long time".

Also check out:

Useful mental models

While there’s nothing worse than a pedantic philosopher (I’m looking at you, Socrates), it’s definitely worth remembering that, as human beings, we’re subject to biases.

This long list of mental models from Farnam Street is worth going through. I particularly like Hanlon’s Razor:

Hard to trace in its origin, Hanlon's Razor states that we should not attribute to malice that which is more easily explained by stupidity. In a complex world, using this model helps us avoid paranoia and ideology. By not generally assuming that bad results are the fault of a bad actor, we look for options instead of missing opportunities. This model reminds us that people do make mistakes. It demands that we ask if there is another reasonable explanation for the events that have occurred. The explanation most likely to be right is the one that contains the least amount of intent.

Another that's come in handy is the Fundamental Attribution Error:

We tend to over-ascribe the behavior of others to their innate traits rather than to situational factors, leading us to overestimate how consistent that behavior will be in the future. In such a situation, predicting behavior seems not very difficult. Of course, in practice this assumption is consistently demonstrated to be wrong, and we are consequently surprised when others do not act in accordance with the “innate” traits we’ve endowed them with.

A list to return to time and again.

Source: Farnam Street

The four things you need to become an intellectual

I came across this, I think, via one of the aggregation sites I skim. It’s a letter in the form of an article by Paul J. Griffiths, who is a Professor of Catholic Theology at Duke Divinity School. In it, he replies to a student who has asked how to become an intellectual.

Griffiths breaks it down into four requirements, and then at the end gives a warning.

The first requirement is that you find something to think about. This may be easy to arrive at, or almost impossibly difficult. It’s something like falling in love. There’s an infinite number of topics you might think about, just as there’s an almost infinite number of people you might fall in love with. But in neither case is the choice made by consulting all possibilities and choosing among them. You can only love what you see, and what you see is given, in large part, by location and chance.

There's a tension here, isn't there? Given the almost infinite multiplicity of things it's possible to spend life thinking about and concentrating upon, how does one choose between them? Griffiths mentions the role of location and chance, but I'd also throw in tendencies. If you notice yourself liking a particular style of art, captivated by a certain style of writing, or enthralled by a way of approaching the world, this may be a clue that you should investigate it further.

The second requirement is time: You need a life in which you can spend a minimum of three uninterrupted hours every day, excepting sabbaths and occasional vacations, on your intellectual work. Those hours need to be free from distractions: no telephone calls, no email, no texts, no visits. Just you. Just thinking and whatever serves as a direct aid to and support of thinking (reading, writing, experiment, etc.). Nothing else. You need this because intellectual work is, typically, cumulative and has momentum. It doesn’t leap from one eureka moment to the next, even though there may be such moments in your life if you’re fortunate. No, it builds slowly from one day to the next, one month to the next. Whatever it is you’re thinking about will demand of you that you think about it a lot and for a long time, and you won’t be able to do that if you’re distracted from moment to moment, or if you allow long gaps between one session of work and the next. Undistracted time is the space in which intellectual work is done: It’s the space for that work in the same way that the factory floor is the space for the assembly line.

This chimes with a quotation from Mark Manson I referenced yesterday, in which he talks about the joy you feel and meaning you experience when you've spent decades dedicated to one thing in particular. You have to carve out time for that, whether through your occupation, or through putting aside leisure time to pursue it.

The third requirement is training. Once you know what you want to think about, you need to learn whatever skills are necessary for good thinking about it, and whatever body of knowledge is requisite for such thinking. These days we tend to think of this as requiring university studies.

This section was difficult to quote as it weaves in specific details from the original student’s letter, but the gist is that people assume that universities are good places for intellectual pursuits. Griffiths responds that this may or may not be the case, and, in fact, is less likely to be true as the 21st century progresses.

[…]

The most essential skill is surprisingly hard to come by. That skill is attention. Intellectuals always think about something, and that means they need to know how to attend to what they’re thinking about. Attention can be thought of as a long, slow, surprised gaze at whatever it is.

[…]

The long, slow, surprised gaze requires cultivation. We’re quickly and easily habituated, with the result that once we’ve seen something a few times it comes to seem unsurprising, and if it’s neither threatening nor useful it rapidly becomes invisible. There are many reasons for this (the necessities of survival; the fact of the Fall), but whatever a full account of those might be (“full account” being itself a matter for thinking about), their result is that we can’t easily attend.

Instead, we need to cultivate attention, which he describes as being almost like a muscle. Griffiths suggests “intentionally engaging in repetitive activity” such as “practicing a musical instrument, attending Mass daily, meditating on the rhythms of your breath, taking the same walk every day (Kant in Königsberg)” to “foster attentiveness”.

[The] fourth requirement is interlocutors. You can’t develop the needed skills or appropriate the needed body of knowledge without them. You can’t do it by yourself. Solitude and loneliness, yes, very well; but that solitude must grow out of and continually be nourished by conversation with others who’ve thought and are thinking about what you’re thinking about. Those are your interlocutors. They may be dead, in which case they’ll be available to you in their postmortem traces: written texts, recordings, reports by others, and so on. Or they may be living, in which case you may benefit from face-to-face interactions, whether public or private. But in either case, you need them. You can neither decide what to think about nor learn to think about it well without getting the right training, and the best training is to be had by apprenticeship: Observe the work—or the traces of the work—of those who’ve done what you’d like to do; try to discriminate good instances of such work from less good; and then be formed by imitation.

I talked in my thesis about the impossibility of being 'literate' unless you've got a community in which to engage in literate practices. The same is true of intellectual activity: you can't be an intellectual in a vacuum.

As a society, we worship at the altar of the lone genius but, in fact, that idea is fundamentally flawed. Progress and breakthroughs come through discussion and collaboration, not sitting in a darkened room by yourself with a wet tea-towel over your head, thinking very hard.

Interestingly, and importantly, Griffiths points out to the student to whom he’s replying that the life of an intellectual might seem attractive, but that it’s a long, hard road.

And lastly: Don’t do any of the things I’ve recommended unless it seems to you that you must. The world doesn’t need many intellectuals. Most people have neither the talent nor the taste for intellectual work, and most that is admirable and good about human life (love, self-sacrifice, justice, passion, martyrdom, hope) has little or nothing to do with what intellectuals do. Intellectual skill, and even intellectual greatness, is as likely to be accompanied by moral vice as moral virtue. And the world—certainly the American world—has little interest in and few rewards for intellectuals. The life of an intellectual is lonely, hard, and usually penurious; don’t undertake it if you hope for better than that. Don’t undertake it if you think the intellectual vocation the most important there is: It isn’t. Don’t undertake it if you have the least tincture in you of contempt or pity for those without intellectual talents: You shouldn’t. Don’t undertake it if you think it will make you a better person: It won’t. Undertake it if, and only if, nothing else seems possible.

A long read, but a rewarding one.

Source: First Things

The tenets of 'Slow Thought'

The slow movement began with ‘slow food’ which was in opposition to, unsurprisingly, ‘fast food’. Since then there have been, with greater or lesser success, ‘slow’ versions of many things: education, cinema, religion… you name it.

In this article, the author suggests ‘slow thought’. Unfortunately, the connotations of ‘slow thinking’ are already negative, so I don’t think the manifesto they provide will catch on. They also quote French philosophers…

In the tradition of the Slow Movement, I hereby declare my manifesto for ‘Slow Thought’. This is the first step toward a psychiatry of the event, based on the French philosopher Alain Badiou’s central notion of the event, a new foundation for ontology – how we think of being or existence. An event is an unpredictable break in our everyday worlds that opens new possibilities. The three conditions for an event are: that something happens to us (by pure accident, no destiny, no determinism), that we name what happens, and that we remain faithful to it. In Badiou’s philosophy, we become subjects through the event. By naming it and maintaining fidelity to the event, the subject emerges as a subject to its truth. ‘Being there,’ as traditional phenomenology would have it, is not enough. My proposal for ‘evental psychiatry’ will describe both how we get stuck in our everyday worlds, and what makes change and new things possible for us.

That being said, I think the 'seven proclamations' do have value, even if I wish the author had stated them more simply and as standalone points:

Isn't this just philosophy? In any case, my favourite paragraph is probably this one:

Slow Thought is a porous way of thinking that is non-categorical, open to contingency, allowing people to adapt spontaneously to the exigencies and vicissitudes of life. Italians have a name for this: arrangiarsi – more than ‘making do’ or ‘getting by’, it is the art of improvisation, a way of using the resources at hand to forge solutions. The porosity of Slow Thought opens the way for potential responses to human predicaments.

We definitely need more 'arrangiarsi' in the world.

Source: Aeon

Different ways of knowing

The Book of Life from the School of Life is an ever-expanding treasure trove of wisdom. In this entry, entitled Knowing Things Intellectually vs. Knowing Them Emotionally, the focus is on the different ways in which we ‘know’ things:

An intellectual understanding of the past, though not wrong, won’t by itself be effective in the sense of being able to release us from the true intensity of our neurotic symptoms. For this, we have to edge our way towards a far more close-up, detailed, visceral appreciation of where we have come from and what we have suffered. We need to strive for what we can call an emotional understanding of the past – as opposed to a top-down, abbreviated intellectual one.

I've no idea about my own intellectual abilities, although I guess I do have a terminal degree. What I do know is that I've spoken with many smart people who, like me, have found it difficult to deal with emotions such as anxiety. There's definitely a difference between 'knowing' as in 'knowing what's wrong with you' and 'knowing how to fix it'.

Psychotherapy has long recognised this distinction. It knows that thinking is hugely important – but on its own, within the therapeutic process itself, it is not the key to fixing our psychological problems.

The article threads through it the example of an abusive relationship in childhood. Thankfully, I didn’t experience that, but the article makes a great suggestion: finding the source of one’s anxiety and fully experiencing the emotion at its core might be helpful.

[…]

Therapy builds on the idea of a return to live feelings. It’s only when we’re properly in touch with feelings that we can correct them with the help of our more mature faculties – and thereby address the real troubles of our adult lives.

And it is on the basis of this kind of hard-won emotional knowledge, not its more painless intellectual kind, that we may one day, with a fair wind, discover a measure of relief for some of the troubles within.

Source: The Book of Life

Archives of Radical Philosophy

A quick one to note that the entire archive (1972-2018) of Radical Philosophy is now online. It describes itself as a “UK-based journal of socialist and feminist philosophy”, and there are articles in there by Pierre Bourdieu, Judith Butler, and Richard Rorty.

If nothing else, these essays and many others should upend facile notions of leftist academic philosophy as dominated by “postmodern” denials of truth, morality, freedom, and Enlightenment thought, as doctrinaire Stalinism, or little more than thought policing through dogmatic political correctness. For every argument in the pages of Radical Philosophy that might confirm certain readers' biases, there are dozens more that will challenge their assumptions, bearing out Foucault’s observation that “philosophy cannot be an endless scrutiny of its own propositions.”

That's my bedtime reading sorted for the foreseeable, then...

Source: Open Culture

Is your smartphone a very real part of who you are?

I really enjoy Aeon’s articles, and probably should think about becoming a paying subscriber. They make me think.

This one is about your identity and how much of it is bound up with your smartphone:

After all, your smartphone is much more than just a phone. It can tell a more intimate story about you than your best friend. No other piece of hardware in history, not even your brain, contains the quality or quantity of information held on your phone: it ‘knows’ whom you speak to, when you speak to them, what you said, where you have been, your purchases, photos, biometric data, even your notes to yourself – and all this dating back years.

I did some work on mind, brain, and personal identity as part of my undergraduate studies in Philosophy. I'm certainly sympathetic to the argument that things outside our body can become part of who we are:

Andy Clark and David Chalmers... argued in ‘The Extended Mind’ (1998) that technology is actually part of us. According to traditional cognitive science, ‘thinking’ is a process of symbol manipulation or neural computation, which gets carried out by the brain. Clark and Chalmers broadly accept this computational theory of mind, but claim that tools can become seamlessly integrated into how we think. Objects such as smartphones or notepads are often just as functionally essential to our cognition as the synapses firing in our heads. They augment and extend our minds by increasing our cognitive power and freeing up internal resources.

So if you've always got your smartphone with you, it's possible to outsource things to it. For example, you don't have to remember so many things, you just need to know how to retrieve them. In the age of voice assistants, that becomes ever-easier.

This is known as the ‘extended mind thesis’.

This line of reasoning leads to some potentially radical conclusions. Some philosophers have argued that when we die, our digital devices should be handled as remains: if your smartphone is a part of who you are, then perhaps it should be treated more like your corpse than your couch. Similarly, one might argue that trashing someone’s smartphone should be seen as a form of ‘extended’ assault, equivalent to a blow to the head, rather than just destruction of property. If your memories are erased because someone attacks you with a club, a court would have no trouble characterising the episode as a violent incident. So if someone breaks your smartphone and wipes its contents, perhaps the perpetrator should be punished as they would be if they had caused a head trauma.

These are certainly questions I'm interested in. I've seen some predictions that philosophy graduates are going to be earning more than computer science graduates in a decade's time. I can see why (and I certainly hope so!)

Source: Aeon

The 'loudness' of our thoughts affects how we judge external sounds

This is really interesting:

No-one but you knows what it's like to be inside your head and be subject to the constant barrage of hopes, fears, dreams, and thoughts:

The "loudness" of our thoughts -- or how we imagine saying something -- influences how we judge the loudness of real, external sounds, a team of researchers from NYU Shanghai and NYU has found.

"Our 'thoughts' are silent to others -- but not to ourselves, in our own heads -- so the loudness in our thoughts influences the loudness of what we hear," says Poeppel, a professor of psychology and neural science.

This is why meditation, both in terms of trying to still your mind and meditating on positive things you read, is such a useful activity.

Using an imagery-perception repetition paradigm, the team found that auditory imagery will decrease the sensitivity of actual loudness perception, with support from both behavioural loudness ratings and human electrophysiological (EEG and MEG) results.

“That is, after imagined speaking in your mind, the actual sounds you hear will become softer – the louder the volume during imagery, the softer perception will be,” explains Tian, assistant professor of neural and cognitive sciences at NYU Shanghai. “This is because imagery and perception activate the same auditory brain areas. The preceding imagery already activates the auditory areas once, and when the same brain regions are needed for perception, they are ‘tired’ and will respond less."

As anyone who’s studied philosophy, psychology, and/or neuroscience knows, we don’t experience the world directly, but find ways to interpret the “bloomin' buzzin' confusion”:

According to Tian, the study demonstrates that perception is a result of interaction between top-down (e.g. our cognition) and bottom-up (e.g. sensory processing of external stimulation) processes. This is because human beings not only receive and analyze upcoming external signals passively, but also interpret and manipulate them actively to form perception.

Source: Science Daily

What we can learn from Seneca about dying well

As I’ve shared before, next to my bed at home I have a memento mori, an object to remind me before I go to sleep and when I get up that one day I will die. It kind of puts things in perspective.

“Study death always,” Seneca counseled his friend Lucilius, and he took his own advice. From what is likely his earliest work, the Consolation to Marcia (written around AD 40), to the magnum opus of his last years (63–65), the Moral Epistles, Seneca returned again and again to this theme. It crops up in the midst of unrelated discussions, as though never far from his mind; a ringing endorsement of rational suicide, for example, intrudes without warning into advice about keeping one’s temper, in On Anger. Examined together, Seneca’s thoughts organize themselves around a few key themes: the universality of death; its importance as life’s final and most defining rite of passage; its part in purely natural cycles and processes; and its ability to liberate us, by freeing souls from bodies or, in the case of suicide, to give us an escape from pain, from the degradation of enslavement, or from cruel kings and tyrants who might otherwise destroy our moral integrity.

Seneca was forced to take his own life by his own pupil, the more-than-a-little-crazy Roman Emperor, Nero. However, his whole life had been a preparation for such an eventuality.

Seneca, like many leading Romans of his day, found that larger moral framework in Stoicism, a Greek school of thought that had been imported to Rome in the preceding century and had begun to flourish there. The Stoics taught their followers to seek an inner kingdom, the kingdom of the mind, where adherence to virtue and contemplation of nature could bring happiness even to an abused slave, an impoverished exile, or a prisoner on the rack. Wealth and position were regarded by the Stoics as adiaphora, “indifferents,” conducing neither to happiness nor to its opposite. Freedom and health were desirable only in that they allowed one to keep one’s thoughts and ethical choices in harmony with Logos, the divine Reason that, in the Stoic view, ruled the cosmos and gave rise to all true happiness. If freedom were destroyed by a tyrant or health were forever compromised, such that the promptings of Reason could no longer be obeyed, then death might be preferable to life, and suicide, or self-euthanasia, might be justified.

Given that death is the last taboo in our society, it's an interesting way to live your life. Being ready at any time to die, having lived a life that you're satisfied with, seems like the right approach to me.

“Study death,” “rehearse for death,” “practice death”—this constant refrain in his writings did not, in Seneca’s eyes, spring from a morbid fixation but rather from a recognition of how much was at stake in navigating this essential, and final, rite of passage. As he wrote in On the Shortness of Life, “A whole lifetime is needed to learn how to live, and—perhaps you’ll find this more surprising—a whole lifetime is needed to learn how to die.”

Source: Lapham's Quarterly