Meredith Whittaker on AI doomerism

This interview with Signal CEO Meredith Whittaker in Slate is so awesome. Time and again, she brings the AI 'doomer' narrative back to two things: surveillance capitalism, and the massive mismatch between the harm currently being done to marginalised people and the potential harm to very powerful people.

Source: A.I. Doom Narratives Are Hiding What We Should Be Most Afraid Of | Slate

What we’re calling machine learning or artificial intelligence is basically statistical systems that make predictions based on large amounts of data. So in the case of the companies we’re talking about, we’re talking about data that was gathered through surveillance, or some variant of the surveillance business model, that is then used to train these systems, that are then being claimed to be intelligent, or capable of making significant decisions that shape our lives and opportunities—even though this data is often very flimsy.

[...]

We are in a world where private corporations have unfathomably complex and detailed dossiers about billions and billions of people, and increasingly provide the infrastructures for our social and economic institutions. Whether that is providing so-called A.I. models that are outsourcing decision-making or providing cloud support that is ultimately placing incredibly sensitive information, again, in the hands of a handful of corporations that are centralizing these functions with very little transparency and almost no accountability. That is not an inevitable situation: We know who the actors are, we know where they live. We have some sense of what interventions could be healthy for moving toward something that is more supportive of the public good.

[...]

My concern with some of the arguments that are so-called existential, the most existential, is that they are implicitly arguing that we need to wait until the people who are most privileged now, who are not threatened currently, are in fact threatened before we consider a risk big enough to care about. Right now, low-wage workers, people who are historically marginalized, Black people, women, disabled people, people in countries that are on the cusp of climate catastrophe—many, many folks are at risk. Their existence is threatened or otherwise shaped and harmed by the deployment of these systems.... So my concern is that if we wait for an existential threat that also includes the most privileged person in the entire world, we are implicitly saying—maybe not out loud, but the structure of that argument is—that the threats to people who are minoritized and harmed now don’t matter until they matter for that most privileged person in the world. That’s another way of sitting on our hands while these harms play out. That is my core concern with the focus on the long-term, instead of the focus on the short-term.

Saturday soundings

Black Lives Matter. This month's money from the kind supporters of Thought Shrapnel has gone directly to the 70+ community bail funds, mutual aid funds, and racial justice organizers listed here.

IBM abandons 'biased' facial recognition tech

A 2019 study conducted by the Massachusetts Institute of Technology found that none of the facial recognition tools from Microsoft, Amazon and IBM were 100% accurate when it came to recognising men and women with dark skin.

And a study from the US National Institute of Standards and Technology suggested facial recognition algorithms were far less accurate at identifying African-American and Asian faces compared with Caucasian ones.

Amazon, whose Rekognition software is used by police departments in the US, is one of the biggest players in the field, but there are also a host of smaller players such as Facewatch, which operates in the UK. Clearview AI, which has been told to stop using images from Facebook, Twitter and YouTube, also sells its software to US police forces.

Maria Axente, AI ethics expert at consultancy firm PwC, said facial recognition had demonstrated "significant ethical risks, mainly in enhancing existing bias and discrimination".

BBC News

Like many newer technologies, facial recognition is already a battleground for people of colour. This is a welcome, if potentially cynical, move by IBM, a company which, let's not forget, literally provided technology to the Nazis.

How Wikipedia Became a Battleground for Racial Justice

If there is one reason to be optimistic about Wikipedia’s coverage of racial justice, it’s this: The project is by nature open-ended and, well, editable. The spike in volunteer Wikipedia contributions stemming from the George Floyd protests is certainly not neutral, at least to the extent that word means being passive in this moment. Still, Koerner cautioned that any long-term change of focus to knowledge equity was unlikely to be easy for the Wikipedia editing community. “I hope that instead of struggling against it they instead lean into their discomfort,” she said. “When we’re uncomfortable, change happens.”

Stephen Harrison (Slate)

This is a fascinating glimpse into Wikipedia and how the commitment to 'neutrality' affects coverage of different types of people and events.

Deeds, not words

Recent events have revealed, again, that the systems we inhabit and use as educators are perfectly designed to get the results they get. The stated desire is there to change the systems we use. Let’s be able to look back to this point in two years and say that we have made a genuine difference.

Nick Dennis

Some great questions here from Nick, some of which are specific to education, whereas others are applicable everywhere.

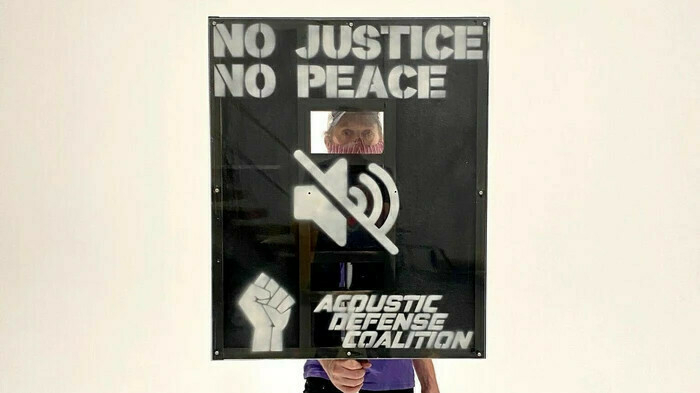

Audio Engineers Built a Shield to Deflect Police Sound Cannons

Since the protests began, demonstrators in multiple cities have reported spotting LRADs, or Long-Range Acoustic Devices, sonic weapons that blast sound waves at crowds over large distances and can cause permanent hearing loss. In response, two audio engineers from New York City have designed and built a shield which they say can block and even partially reflect these harmful sonic blasts back at the police.

Janus Rose (Vice)

For those not familiar with the increasing militarisation of police in the US, this is an interesting read.

CMA to look into Facebook's purchase of gif search engine

The Competition and Markets Authority (CMA) is inviting comments about Facebook’s purchase of a company that currently provides gif search across many of the social network’s competitors, including Twitter and the messaging service Signal.

[...]

[F]or Facebook, the more compelling reason for the purchase may be the data that Giphy has about communication across the web. Since many services that integrate with the platform not only use it to find gifs, but also leave the original clip hosted on Giphy’s servers, the company receives information such as when a message is sent and received, the IP address of both parties, and details about the platforms they are using.

Alex Hern (The Guardian)

In my 2012 TEDx Talk I discussed the memetic power of gifs. Others might find this news surprising but, even back then, I don't think I would have been surprised that gifs would become such a hot topic in 2020.

Also from Hern this week is an article on Twitter's experiments with getting people to actually read things before they tweet or retweet them. What times we live in.

Human cycles: History as science

To Peter Turchin, who studies population dynamics at the University of Connecticut in Storrs, the appearance of three peaks of political instability at roughly 50-year intervals is not a coincidence. For the past 15 years, Turchin has been taking the mathematical techniques that once allowed him to track predator–prey cycles in forest ecosystems, and applying them to human history. He has analysed historical records on economic activity, demographic trends and outbursts of violence in the United States, and has come to the conclusion that a new wave of internal strife is already on its way. The peak should occur in about 2020, he says, and will probably be at least as high as the one in around 1970. “I hope it won't be as bad as 1870,” he adds.

Laura Spinney (Nature)

I'm not sure about this at all, because if you go looking for examples to fit your theory, you'll find them, especially when your theory is as generic as this one. It seems like a kind of reverse fortune-telling?

Universal Basic Everything

Much of our economies in the west have been built on the idea of unique ideas, or inventions, which are then protected and monetised. It’s a centuries old way of looking at ideas, but today we also recognise that this method of creating and growing markets around IP protected products has created an unsustainable use of the world’s natural resources and generated too much carbon emission and waste.

Open source and creative commons moves us significantly in the right direction. From open sharing of ideas we can start to think of ideas, services, systems, products and activities which might be essential or basic for sustaining life within the ecological ceiling, whilst also re-inforcing social foundations.

Tessy Britton

I'm proud to be part of a co-op that focuses on openness of all forms. This article is a great introduction for anyone who wants a new way of looking at our post-COVID future.

World faces worst food crisis for at least 50 years, UN warns

Lockdowns are slowing harvests, while millions of seasonal labourers are unable to work. Food waste has reached damaging levels, with farmers forced to dump perishable produce as the result of supply chain problems, and in the meat industry plants have been forced to close in some countries.

Even before the lockdowns, the global food system was failing in many areas, according to the UN. The report pointed to conflict, natural disasters, the climate crisis, and the arrival of pests and plant and animal plagues as existing problems. East Africa, for instance, is facing the worst swarms of locusts for decades, while heavy rain is hampering relief efforts.

The additional impact of the coronavirus crisis and lockdowns, and the resulting recession, would compound the damage and tip millions into dire hunger, experts warned.

Fiona Harvey (The Guardian)

The knock-on effects of COVID-19 are going to be with us for a long time yet. And these second-order effects will themselves have effects which, with climate change also being in the mix, could lead to mass migrations and conflict by 2025.

Mice on Acid

What exactly a mouse sees when she’s tripping on DOI—whether the plexiglass walls of her cage begin to melt, or whether the wood chips begin to crawl around like caterpillars—is tied up in the private mysteries of what it’s like to be a mouse. We can’t ask her directly, and, even if we did, her answer probably wouldn’t be of much help.

Cody Kommers (Nautilus)

The bit about 'ego dissolution' in this article, which is ostensibly about how to get legal hallucinogens to market, is really interesting.

Header image by Dmitry Demidov

Friday fumings

My bet is that you've spent most of this week reading news about the global pandemic. Me too. That's why I decided to ensure it's not mentioned at all in this week's link roundup!

Let me know what resonates with you... 😷

Finding comfort in the chaos: How Cory Doctorow learned to write from literally anywhere

My writing epiphany — which arrived decades into my writing career — was that even though there were days when the writing felt unbearably awful, and some when it felt like I was mainlining some kind of powdered genius and sweating it out through my fingertips, there was no relation between the way I felt about the words I was writing and their objective quality, assessed in the cold light of day at a safe distance from the day I wrote them. The biggest predictor of how I felt about my writing was how I felt about me. If I was stressed, underslept, insecure, sad, hungry or hungover, my writing felt terrible. If I was brimming over with joy, the writing felt brilliant.

Cory Doctorow (CBC)

Such great advice in here from the prolific Cory Doctorow. Not only is he a great writer, he's a great speaker, too. I think both come from practice and clarity of thought.

Slower News

Trends, micro-trends & edge cases.

This is a site that specialises in important and interesting news that is updated regularly, but not on an hour-by-hour (or even daily) basis. A wonderful antidote to staring at your social media feed for updates!

SCARF: The 5 key ingredients for psychological safety in your team

There’s actually a mountain of compelling evidence that the single most important ingredient for healthy, high-performing teams is simple: it’s trust. When Google famously crunched the data on hundreds of high-performing teams, they were surprised to find that one variable mattered more than any other: “emotional safety.” Also known as: “psychological security.” Also known as: trust.

Matt Thompson

I used to work with Matt at Mozilla, and he's a pretty great person to work alongside. He's got a book coming out this year, and Laura (another former Mozilla colleague, but also a current co-op colleague!) drew my attention to this.

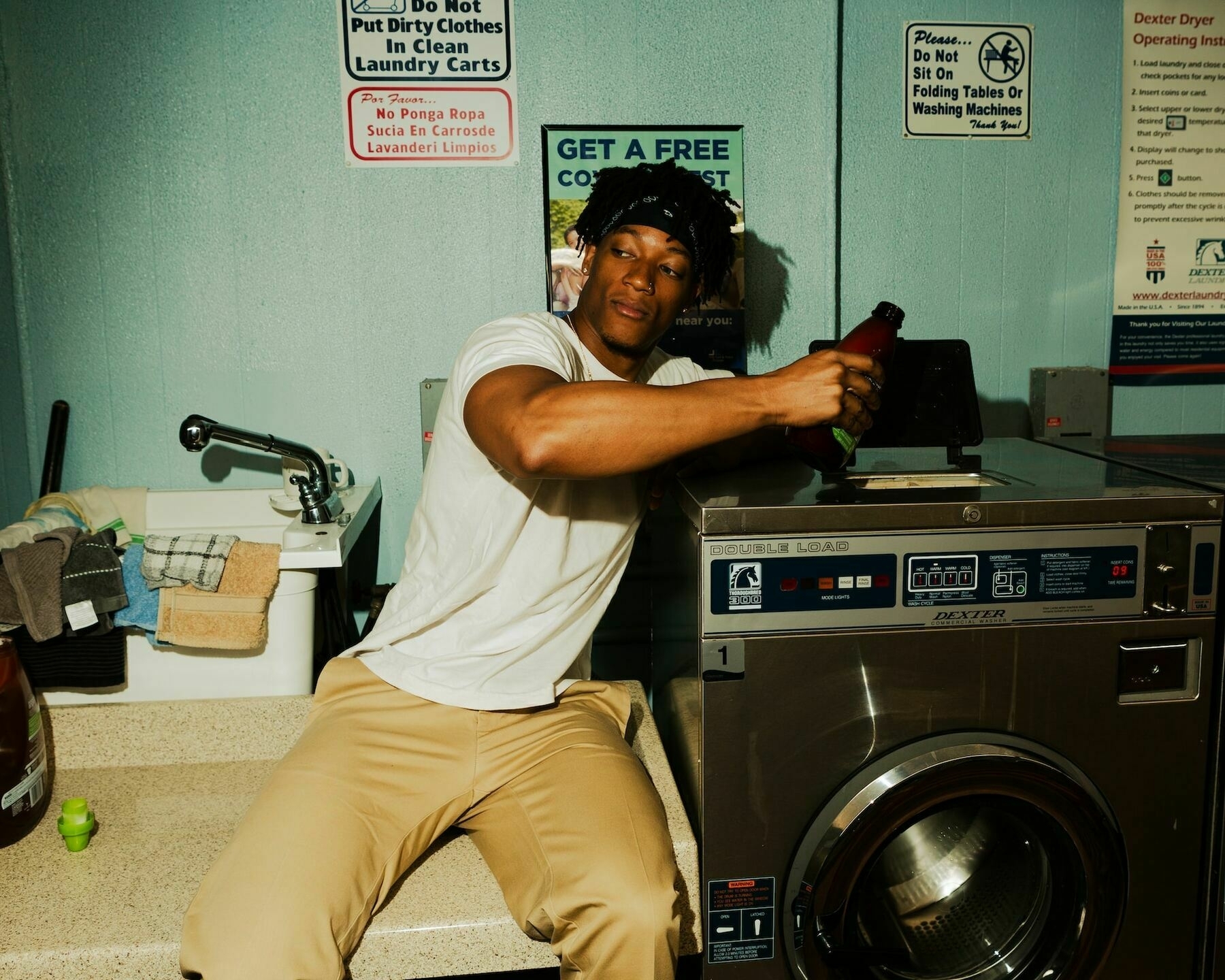

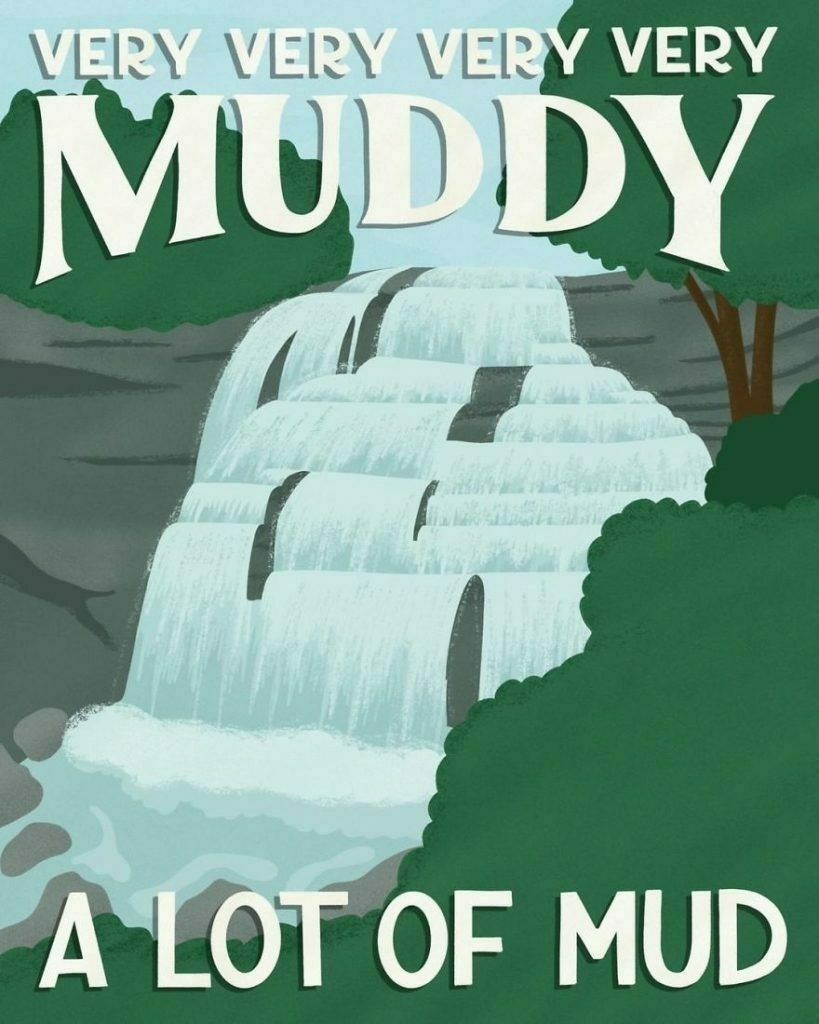

I Illustrated National Parks In America Based On Their Worst Review And I Hope They Will Make You Laugh (16 Pics)

I'm an illustrator and I have always had a personal goal to draw all 62 US National Parks, but I wanted to find a unique twist for the project. When I found that there are one-star reviews for every single park, the idea for Subpar Parks was born. For each park, I hand-letter a line from the one-star reviews alongside my illustration of each park as my way of putting a fun and beautiful twist on the negativity.

Amber Share (Bored Panda)

I love this, especially as the illustrations are so beautiful and the comments so banal.

What Does a Screen Do?

We know, for instance, that smartphone use is associated with depression in teens. Smartphone use certainly could be the culprit, but it’s also possible the story is more complicated; perhaps the causal relationship works the other way around, and depression drives teenagers to spend more time on their devices. Or, perhaps other details about their life—say, their family background or level of physical activity—affect both their mental health and their screen time. In short: Human behavior is messy, and measuring that behavior is even messier.

Jane C. Hu (Slate)

This, via Ian O'Byrne, is a useful read for anyone who deals with kids, especially teenagers.

13 reads to save for later: An open organization roundup

For months, writers have been showering us with multiple, ongoing series of articles, all focused on different dimensions of open organizational theory and practice. That's led to a real embarrassment of riches—so many great pieces, so little time to catch them all.

So let's take a moment to reflect. If you missed one (or several) now's your chance to catch up.

Bryan Behrenshausen (Opensource.com)

I've already shared some of the articles in this roundup, but I encourage you to check out the rest, and subscribe to opensource.com. It's a great source of information and guidance.

It Doesn’t Matter If Anyone Exists or Not

Capitalism has always transformed people into latent resources, whether as labor to exploit for making products or as consumers to devour those products. But now, online services make ordinary people enact both roles: Twitter or Instagram followers for conversion into scrap income for an influencer side hustle; Facebook likes transformed into News Feed-delivery refinements; Tinder swipes that avoid the nuisance of the casual encounters that previously fueled urban delight. Every profile pic becomes a passerby—no need for an encounter, even.

Ian Bogost (The Atlantic)

An amazing piece of writing, in which Ian Bogost not only surveys previous experiences with 'strangers' but applies them to the internet. As he points out, there is a huge convenience factor in not knowing who made your sandwich. I've pointed out before that capitalism is all about scale, and at the end of the day, caring doesn't scale, and scaling doesn't care.

You don't want quality time, you want garbage time

We desire quality moments and to make quality memories. It's tempting to think that we can create quality time just by designating it so, such as via a vacation. That generally ends up backfiring due to our raised expectations being let down by reality. If we expect that our vacation is going to be perfect, any single mistake ruins the experience.

In contrast, you are likely to get a positive surprise when you have low expectations, which is likely the case during a "normal day". It’s hard to match perfection, and easy to beat normal. Because of this, it's more likely quality moments come out of chance.

If you can't engineer quality time, and it's more a matter of random events, it follows that you want to increase how often such events happen. You can't increase the probability, but you can increase the duration for such events to occur. Put another way, you want to increase quantity of time, and not engineer quality time.

Leon Lin (Avoid boring people)

There are a lot of other interesting-but-irrelevant things in this newsletter, so scroll to the bottom for the juicy bit. I've quoted the most pertinent point, which I definitely agree with. There's wisdom in Gramsci's quotation about having "pessimism of the intellect, optimism of the will".

The Prodigal Techbro

The prodigal tech bro doesn’t want structural change. He is reassurance, not revolution. He’s invested in the status quo, if we can only restore the founders’ purity of intent. Sure, we got some things wrong, he says, but that’s because we were over-optimistic / moved too fast / have a growth mindset. Just put the engineers back in charge / refocus on the original mission / get marketing out of the c-suite. Government “needs to step up”, but just enough to level the playing field / tweak the incentives. Because the prodigal techbro is a moderate, centrist, regular guy. Dammit, he’s a Democrat. Those others who said years ago what he’s telling you right now? They’re troublemakers, disgruntled outsiders obsessed with scandal and grievance. He gets why you ignored them. Hey, he did, too. He knows you want to fix this stuff. But it’s complicated. It needs nuance. He knows you’ll listen to him. Dude, he’s just like you…

Maria Farrell (The Conversationalist)

Now that we're experiencing something of a 'techlash', it's unsurprising that those who created surveillance capitalism have had a 'road to Damascus' experience. That doesn't mean, as Maria Farrell points out, that we should all of a sudden consider them to be moral authorities.

Enjoy this? Sign up for the weekly roundup, become a supporter, or download Thought Shrapnel Vol.1: Personal Productivity!

Technology is the name we give to stuff that doesn't work properly yet

So said my namesake Douglas Adams. In fact, he said lots of wise things about technology, most of them too long to serve as a title.

I'm in a weird place, emotionally, at the moment, but sometimes this can be a good thing. Being taken out of your usual 'autopilot' can be a useful way to see things differently. So I'm going to take this opportunity to share three things that, to be honest, make me a bit concerned about the next few years...

Attempts to put microphones everywhere

In an article for Slate, Shannon Palus ranks all of Amazon's new products by 'creepiness'. The Echo Frames are, in her words:

A microphone that stays on your person all day and doesn’t look like anything resembling a microphone, nor follows any established social codes for wearable microphones? How is anyone around you supposed to have any idea that you are wearing a microphone?

Shannon Palus

When we're not talking about weapons of mass destruction, it's not the tech that concerns me, but the context in which the tech is used. As Palus points out, how are you going to be able to have a 'quiet word' with anyone wearing glasses ever again?

It's not just Amazon, of course. Google and Facebook are at it, too.

Full-body deepfakes

With the exception, perhaps, of populist politicians, I don't think we're ready for a post-truth society. Check out the video above, which shows Chinese technology that allows for 'full body deepfakes'.

The video is embedded, along with a couple of others, in an article for Fast Company by DJ Pangburn, who also notes that AI is learning human body movements from videos. Not only will you be able to prank your friends by showing them a convincing video of your ability to do 100 pull-ups, but the fake news it engenders will mean we can't trust anything any more.

Neuromarketing

If you clicked on the 'super-secret link' in Sunday's newsletter, you will have come across STEALING UR FEELINGS, which is nothing short of incredible. As powerful as it is at showing you the kind of data that organisations have on us, it's only the tip of the iceberg.

Kaveh Waddell, in an article for Axios, explains that brains are the last frontier for privacy:

"The sort of future we're looking ahead toward is a world where our neural data — which we don't even have access to — could be used" against us, says Tim Brown, a researcher at the University of Washington Center for Neurotechnology.

Kaveh Waddell

This would lead to 'neuromarketing', with advertisers knowing what triggers and influences you better than you know yourself. Also, it will no doubt be used for discriminatory purposes and, because it's coming directly from your brainwaves, short of literally wearing a tinfoil hat, there's nothing much you can do.

So there we are. Am I being too fearful here?

Is Google becoming more like Facebook?

I’m composing this post on ChromeOS, which is a little bit hypocritical, but yesterday I was shocked to discover how much data I was ‘accidentally’ sharing with Google. Check it out for yourself by going to your Google account’s activity controls page.

This article talks about how Google have become less trustworthy of late:

[Google] announced a forthcoming update last Wednesday: Chrome’s auto-sign-in feature will still be the default behavior of Chrome. But you’ll be able to turn it off through an optional switch buried in Chrome’s settings.

This pattern of behavior by tech companies is so routine that we take it for granted. Let’s call it “pulling a Facebook” in honor of the many times that Facebook has “accidentally” relaxed the privacy settings for user profile data, and then—following a bout of bad press coverage—apologized and quietly reversed course. A key feature of these episodes is that management rarely takes the blame: It’s usually laid at the feet of some anonymous engineer moving fast and breaking things. Maybe it’s just a coincidence that these changes consistently err in the direction of increasing “user engagement” and never make your experience more private.

What’s new here, and is a very recent development indeed, is that we’re finally starting to see that this approach has costs. For example, it now seems like Facebook executives spend an awful lot of time answering questions in front of Congress. In 2018, when Facebook announced it had handed more than 80 million user profiles to the sketchy election strategy firm Cambridge Analytica, Facebook received surprisingly little sympathy and a notable stock drop. Losing the trust of your users, we’re learning, does not immediately make them flee your business. But it does matter. It’s just that the consequences are cumulative, like spending too much time in the sun.

I'm certainly questioning my tech choices. And I've (re-)locked down my Google account.

Source: Slate

An incorrect approach to teaching History

My thanks to Amy Burvall for bringing to my attention this article about how we’re teaching History incorrectly. It focuses on how different ‘fact-checking’ is now that we have the internet. There are a lot of similarities between what the interviewee, Sam Wineburg, has to say and what Mike Caulfield has been working on with Web Literacy for Student Fact-Checkers:

Source: Slate

Fact-checkers know that in a digital medium, the web is a web. It’s not just a metaphor. You understand a particular node by its relationship in a web. So the smartest thing to do is to consult the web to understand any particular node. That is very different from reading Thucydides, where you look at internal criticism and consistency because there really isn’t a documentary record beyond Thucydides.