- How does a computer ‘see’ gender? (Pew Research Center) — "Machine learning tools can bring substantial efficiency gains to analyzing large quantities of data, which is why we used this type of system to examine thousands of image search results in our own studies. But unlike traditional computer programs – which follow a highly prescribed set of steps to reach their conclusions – these systems make their decisions in ways that are largely hidden from public view, and highly dependent on the data used to train them. As such, they can be prone to systematic biases and can fail in ways that are difficult to understand and hard to predict in advance."

- The Communication We Share with Apes (Nautilus) — "Many primate species use gestures to communicate with others in their groups. Wild chimpanzees have been seen to use at least 66 different hand signals and movements to communicate with each other. Lifting a foot toward another chimp means “climb on me,” while stroking their mouth can mean “give me the object.” In the past, researchers have also successfully taught apes more than 100 words in sign language."

- Why degrowth is the only responsible way forward (openDemocracy) — "If we free our imagination from the liberal idea that well-being is best measured by the amount of stuff that we consume, we may discover that a good life could also be materially light. This is the idea of voluntary sufficiency. If we manage to decide collectively and democratically what is necessary and enough for a good life, then we could have plenty."

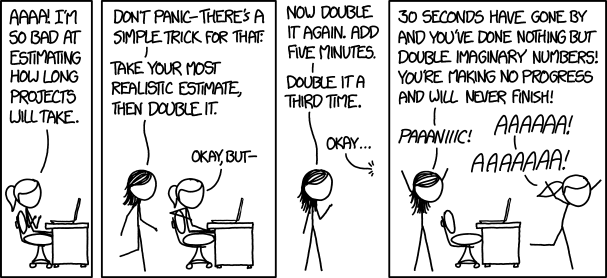

- 3 times when procrastination can be a good thing (Fast Company) — "It took Leonardo da Vinci years to finish painting the Mona Lisa. You could say the masterpiece was created by a master procrastinator. Sure, da Vinci wasn’t under a tight deadline, but his lengthy process demonstrates the idea that we need to work through a lot of bad ideas before we get down to the good ones."

- Why can’t we agree on what’s true any more? (The Guardian) — "What if, instead, we accepted the claim that all reports about the world are simply framings of one kind or another, which cannot but involve political and moral ideas about what counts as important? After all, reality becomes incoherent and overwhelming unless it is simplified and narrated in some way or other."

- A good teacher voice strikes fear into grown men (TES) — "A good teacher voice can cut glass if used with care. It can silence a class of children; it can strike fear into the hearts of grown men. A quiet, carefully placed “Excuse me”, with just the slightest emphasis on the “-se”, is more effective at stopping an argument between adults or children than any amount of reason."

- Freeing software (John Ohno) — "The only way to set software free is to unshackle it from the needs of capital. And, capital has become so dependent upon software that an independent ecosystem of anti-capitalist software, sufficiently popular, can starve it of access to the speed and violence it needs to consume ever-doubling quantities of to survive."

- Young People Are Going to Save Us All From Office Life (The New York Times) — "Today’s young workers have been called lazy and entitled. Could they, instead, be among the first to understand the proper role of work in life — and end up remaking work for everyone else?"

- Global climate strikes: Don’t say you’re sorry. We need people who can take action to TAKE ACTUAL ACTION (The Guardian) — "Brenda the civil disobedience penguin gives some handy dos and don’ts for your civil disobedience"

Emoji, we salute you 🫡

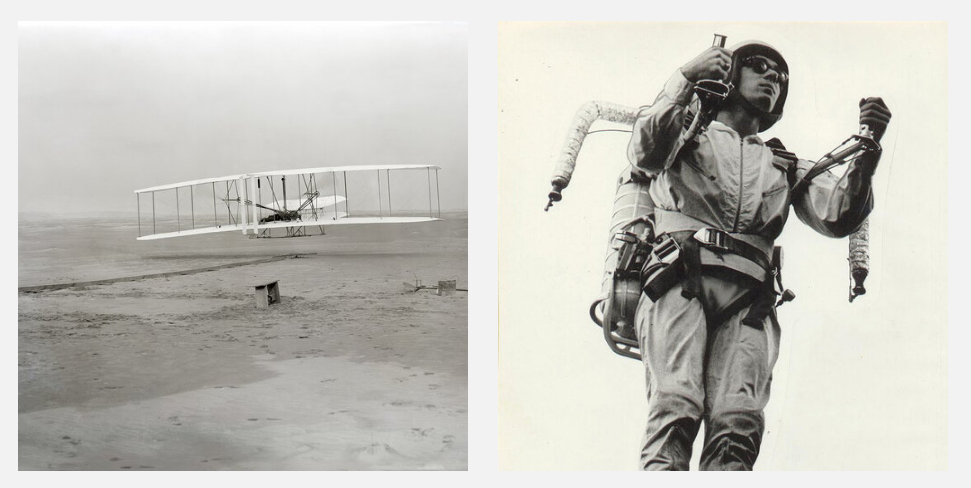

I remember going to a conference session about a decade ago when people were still on the fence about emoji and the presenter said that they were the most important form of visual communication since hieroglyphics.

It’s hard to argue otherwise. I’ve been a huge fan since I noticed that adding a smiley to my emails made a huge difference to the way that people received and understood them. It’s a way of communication at a distance; how would we navigate group chats and social networks without them? 😅

Valeria Pfeifer is a cognitive scientist at the University of Arizona. She is one of a small group of researchers who has studied how emojis affect our thinking. She tells me that my newfound joy makes sense. Emojis “convey this additional complex layer of meaning that words just don’t really seem to get at,” she says. Many a word nerd has fretted that emojis are making us—and our communication—dumber. But Pfeifer and other cognitive scientists and linguists are beginning to explain what makes them special.

Source: Your 🧠 On Emoji | Nautilus

In a book called The Emoji Code, British cognitive linguist Vyvyan Evans describes emojis as “incontrovertibly the world’s first truly universal communication.” That might seem like a tall claim for an ever-expanding set of symbols whose meanings can be fickle. But language evolves, and these ideograms have become the lingua franca of digital communication.

[…]

Perhaps the first study of how these visual representations activate the brain was presented at a conference in 2006. Computer scientist Masahide Yuasa, then at Tokyo Denki University in Japan, and his colleagues wanted to see whether our noggins interpret abstract symbolic representations of faces—emoticons made of punctuation marks—in the same way as photographic images of them. They popped several college students into a brain scanning machine (they used functional magnetic resonance imaging, or fMRI) and showed them realistic images of happy and sad faces, as well as scrambled versions of these pictures. They also showed them happy and sad emoticons, along with short random collections of punctuation.

The photos lit up a brain region associated with faces. The emoticons didn’t. But they did activate a different area thought to be involved in deciding whether something is emotionally negative or positive. The group’s later work, published in 2011, extended this finding, reporting that emoticons at the end of a sentence made verbal and nonverbal areas of the brain respond more enthusiastically to written text. “Just as prosody enriches vocal expressions,” the researchers wrote in their earlier paper, the emoticons seemed to be layering on more meaning and impact. The effect is like a shot of meaning-making caffeine—pure emotional charge.

Even those of a harsh and unyielding nature will endure gentle treatment: no creature is fierce and frightening if it is stroked

🌼 Digital gardens let you cultivate your own little bit of the internet

⛏️ Dorset mega henge may be ‘last hurrah’ of stone-age builders

📺 Culture to cheer you up during the second lockdown: part one

Quotation-as-title by Seneca. Image from top-linked post.

Saturday shakings

Whew, so many useful bookmarks to re-read for this week’s roundup! It took me a while, so let’s get on with it…

What is the future of distributed work?

To Bharat Mediratta, chief technology officer at Dropbox, the quarantine experience has highlighted a huge gap in the market. “What we have right now is a bunch of different productivity and collaboration tools that are stitched together. So I will do my product design in Figma, and then I will submit the code change on GitHub, I will push the product out live on AWS, and then I will communicate with my team using Gmail and Slack and Zoom,” he says. “We have all that technology now, but we don't yet have the ‘digital knowledge worker operating system’ to bring it all together.”

WIRED

OK, so this is a sponsored post by Dropbox on the WIRED website, but what it highlights is interesting. For example, Monday.com (which our co-op uses) rebranded itself a few months ago as a 'Work OS'. There's definitely a lot of money to be made for whoever manages to build an integrated solution, although I think we're a long way off something which is flexible enough for every use case.

The Definition of Success Is Autonomy

Today, I don’t define success the way that I did when I was younger. I don’t measure it in copies sold or dollars earned. I measure it in what my days look like and the quality of my creative expression: Do I have time to write? Can I say what I think? Do I direct my schedule or does my schedule direct me? Is my life enjoyable or is it a chore?

Ryan Holiday

Tim Ferriss has this question he asks podcast guests: "If you could have a gigantic billboard anywhere with anything on it what would it say and why?" I feel like the title of this blog post is one of the answers I would give to that question.

Do The Work

We are a small group of volunteers who met as members of the Higher Ed Learning Collective. We were inspired by the initial demand, and the idea of self-study, interracial groups. The initial decision to form this initiative is based on the myriad calls from people of color for white-bodied people to do internal work. To do the work, we are developing a space for all individuals to read, share, discuss, and interrogate perspectives on race, racism, anti-racism, identity in an educational setting. To ensure that the fight continues for justice, we need to participate in our own ongoing reflection of self and biases. We need to examine ourselves, ask questions, and learn to examine our own perspectives. We need to get uncomfortable in asking ourselves tough questions, with an understanding that this is a lifelong, ongoing process of learning.

Ian O'Byrne

This is a fantastic resource for people who, like me, are going on a learning journey at the moment. I've found the podcast Seeing White by Scene on Radio particularly enlightening, and at times mind-blowing. Also, the Netflix documentary 13th is excellent, and available on YouTube.

How to Make Your Tech Last Longer

If we put a small amount of time into caring for our gadgets, they can last indefinitely. We’d also be doing the world a favor. By elongating the life of our gadgets, we put more use into the energy, materials and human labor invested in creating the product.

Brian X. Chen (The New York Times)

This is a pretty surface-level article that basically suggests people take their smartphone to a repair shop instead of buying a new one. What it doesn't mention is that aftermarket operating systems such as the Android-based LineageOS can extend the lifetime of smartphones by providing security updates long beyond those provided by vendors.

Law enforcement arrests hundreds after compromising encrypted chat system

EncroChat sold customized Android handsets with GPS, camera, and microphone functionality removed. They were loaded with encrypted messaging apps as well as a secure secondary operating system (in addition to Android). The phones also came with a self-destruct feature that wiped the device if you entered a PIN.

The service had customers in 140 countries. While it was billed as a legitimate platform, anonymous sources told Motherboard that it was widely used among criminal groups, including drug trafficking organizations, cartels, and gangs, as well as hitmen and assassins.

EncroChat didn’t become aware that its devices had been breached until May after some users noticed that the wipe function wasn’t working. After trying and failing to restore the features and monitor the malware, EncroChat cut its SIM service and shut down the network, advising customers to dispose of their devices.

Monica Chin (The Verge)

It goes without saying that I don't want assassins, drug traffickers, and mafia types to be successful in life. However, I'm always a little concerned when there are attacks on encryption, as they're compromising systems also potentially used by protesters, activists, and those who oppose the status quo.

Uncovered: 1,000 phrases that incorrectly trigger Alexa, Siri, and Google Assistant

The findings demonstrate how common it is for dialog in TV shows and other sources to produce false triggers that cause the devices to turn on, sometimes sending nearby sounds to Amazon, Apple, Google, or other manufacturers. In all, researchers uncovered more than 1,000 word sequences—including those from Game of Thrones, Modern Family, House of Cards, and news broadcasts—that incorrectly trigger the devices.

“The devices are intentionally programmed in a somewhat forgiving manner, because they are supposed to be able to understand their humans,” one of the researchers, Dorothea Kolossa, said. “Therefore, they are more likely to start up once too often rather than not at all.”

Dan Goodin (Ars Technica)

As anyone with voice assistant-enabled devices in their home will testify, the number of times they accidentally spin up, or misunderstand what you're saying, can be amusing. But we can and should be wary of what's being listened to, and why.

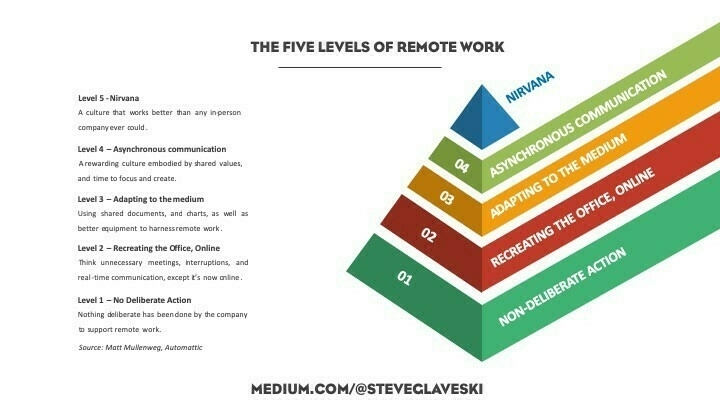

The Five Levels of Remote Work — and why you’re probably at Level 2

Effective written communication becomes critical the more companies embrace remote work. With an aversion to ‘jumping on calls’ at a whim, and a preference for asynchronous communication... [most] communications [are] text-based, and so articulate and timely articulation becomes key.

Steve Glaveski (The Startup)

This is from March and pretty clickbait-y, but everyone wants to know how they can improve - especially if they didn't work remotely before the pandemic. My experience is that actually most people are at Level 3 and, of course, I'd say that I and my co-op colleagues are at Level 5 given our experience...

Why Birds Can Fly Over Mount Everest

All mammals, including us, breathe in through the same opening that we breathe out. Can you imagine if our digestive system worked the same way? What if the food we put in our mouths, after digestion, came out the same way? It doesn’t bear thinking about! Luckily, for digestion, we have a separate in and out. And that’s what the birds have with their lungs: an in point and an out point. They also have air sacs and hollow spaces in their bones. When they breathe in, half of the good air (with oxygen) goes into these hollow spaces, and the other half goes into their lungs through the rear entrance. When they breathe out, the good air that has been stored in the hollow places now also goes into their lungs through that rear entrance, and the bad air (carbon dioxide and water vapor) is pushed out the front exit. So it doesn’t matter whether birds are breathing in or out: Good air is always going in one direction through their lungs, pushing all the bad air out ahead of it.

Walter Murch (Nautilus)

Incredible. Birds are badass (and also basically dinosaurs).

Montaigne Fled the Plague, and Found Himself

In the many essays of his life he discovered the importance of the moderate life. In his final essay, “On Experience,” Montaigne reveals that “greatness of soul is not so much pressing upward and forward as knowing how to circumscribe and set oneself in order.” What he finds, quite simply, is the importance of the moderate life. We must then, he writes, “compose our character, not compose books.” There is nothing paradoxical about this because his literary essays helped him better essay his life. The lesson he takes from this trial might be relevant for our own trial: “Our great and glorious masterpiece is to live properly.”

Robert Zaretsky (The New York Times)

Every week, Bryan Alexander replies to the weekly Thought Shrapnel newsletter. Last week, he sent this article to both me and Chris Lott (who produces the excellent Notabilia).

We had a bit of a chat, with us sharing our love of How to Live: A Life of Montaigne in One Question and Twenty Attempts at An Answer by Sarah Bakewell, as well as the useful tidbits it's possible to glean from Stefan Zweig's short biography simply entitled Montaigne.

Header image by Nicolas Comte

Friday filchings

I'm having to write this ahead of time due to travel commitments. Still, there's the usual mixed bag of content in here, everything from digital credentials through to survival, with a bit of panpsychism thrown in for good measure.

Did any of these resonate with you? Let me know!

Competency Badges: the tail wagging the dog?

Recognition is from a certain point of view hyperlocal, and it is this hyperlocality that gives it its global value – not the other way around. The space of recognition is the community in which the competency is developed and activated. The recognition of a practitioner in a community is not reduced to those generally considered to belong to a “community of practice”, but to the intersection of multiple communities and practices, starting with the clients of these practices: the community of practice of chefs does not exist independently of the communities of their suppliers and clients. There is also a very strong link between individual recognition and that of the community to which the person is identified: shady notaries and politicians can bring discredit on an entire community.

Serge Ravet

As this roundup goes live I'll be at Open Belgium, and I'm looking forward to catching up with Serge while I'm there! My take on the points that he's making in this (long) post is actually what I'm talking about at the event: open initiatives need open organisations.

Universities do not exist ‘to produce students who are useful’, President says

Mr Higgins, who was opening a celebration of Trinity College Dublin’s College Historical Debating Society, said “universities are not there merely to produce students who are useful”.

“They are there to produce citizens who are respectful of the rights of others to participate and also to be able to participate fully, drawing on a wide range of scholarship,” he said on Monday night.

The President said there is a growing cohort of people who are alienated and “who feel they have lost their attachment to society and decision making”.

Jack Horgan-Jones (The Irish Times)

As a Philosophy graduate, I wholeheartedly agree with this, and also with his assessment of how people are obsessed with 'markets'.

Perennial philosophy

Not everyone will accept this sort of inclusivism. Some will insist on a stark choice between Jesus or hell, the Quran or hell. In some ways, overcertain exclusivism is a much better marketing strategy than sympathetic inclusivism. But if just some of the world’s population opened their minds to the wisdom of other religions, without having to leave their own faith, the world would be a better, more peaceful place. Like Aldous Huxley, I still believe in the possibility of growing spiritual convergence between different religions and philosophies, even if right now the tide seems to be going the other way.

Jules Evans (Aeon)

This is an interesting article about the philosophy of Aldous Huxley, whose books have always fascinated me. For some reason, I hadn't twigged that he was related to Thomas Henry Huxley (aka "Darwin's bulldog").

What the Death of iTunes Says About Our Digital Habits

So what really failed, maybe, wasn’t iTunes at all—it was the implicit promise of Gmail-style computing. The explosion of cloud storage and the invention of smartphones both arrived at roughly the same time, and they both subverted the idea that we should organize our computer. What they offered in its place was a vision of ease and readiness. What the idealized iPhone user and the idealized Gmail user shared was a perfect executive-functioning system: Every time they picked up their phone or opened their web browser, they knew exactly what they wanted to do, got it done with a calm single-mindedness, and then closed their device. This dream illuminated Inbox Zero and Kinfolk and minimalist writing apps. It didn’t work. What we got instead was Inbox Infinity and the algorithmic timeline. Each of us became a wanderer in a sea of content. Each of us adopted the tacit—but still shameful—assumption that we are just treading water, that the clock is always running, and that the work will never end.

Robinson Meyer (The Atlantic)

This is a curiously-written (and well-written) piece, in the form of an ordered list, that takes you through the changes since iTunes launched. It's hard to disagree with the author's arguments.

Imagine a world without YouTube

But what if YouTube had failed? Would we have missed out on decades of cultural phenomena and innovative ideas? Would we have avoided a wave of dystopian propaganda and misinformation? Or would the internet have simply spiraled into new — yet strangely familiar — shapes, with their own joys and disasters?

Adi Robertson (The Verge)

I love this approach of imagining how the world would have been different had YouTube not been the massive success it's been over the last 15 years. Food for thought.

Big Tech Is Testing You

It’s tempting to look for laws of people the way we look for the laws of gravity. But science is hard, people are complex, and generalizing can be problematic. Although experiments might be the ultimate truthtellers, they can also lead us astray in surprising ways.

Hannah Fry (The New Yorker)

A balanced look at the way that companies, especially those we classify as 'Big Tech', tend to experiment for the purposes of engagement and, ultimately, profit. Definitely worth a read.

Trust people, not companies

The trend to tap into is the changing nature of trust. One of the biggest social trends of our time is the loss of faith in institutions and previously trusted authorities. People no longer trust the Government to tell them the truth. Banks are less trusted than ever since the Financial Crisis. The mainstream media can no longer be trusted by many. Fake news. The anti-vac movement. At the same time, we have a generation of people who are looking to their peers for information.

Lawrence Lundy (Outlier Ventures)

This post is making the case for blockchain-based technologies. But the wider point is a better one, that we should trust people rather than companies.

The Forest Spirits of Today Are Computers

Any sufficiently advanced technology is indistinguishable from nature. Agriculture de-wilded the meadows and the forests, so that even a seemingly pristine landscape can be a heavily processed environment. Manufactured products have become thoroughly mixed in with natural structures. Now, our machines are becoming so lifelike we can’t tell the difference. Each stage of technological development adds layers of abstraction between us and the physical world. Few people experience nature red in tooth and claw, or would want to. So, although the world of basic physics may always remain mindless, we do not live in that world. We live in the world of those abstractions.

George Musser (Nautilus)

This article, about artificial 'panpsychism', challenges the reader's initial assumptions (well, mine at least) and really makes you think.

The man who refused to freeze to death

It would appear that our brains are much better at coping in the cold than dealing with being too hot. This is because our bodies’ survival strategies centre around keeping our vital organs running at the expense of less essential body parts. The most essential of all, of course, is our brain. By the time that Shatayeva and her fellow climbers were experiencing cognitive issues, they were probably already experiencing other organ failures elsewhere in their bodies.

William Park (BBC Future)

Not just one story in this article, but several with fascinating links and information.

Enjoy this? Sign up for the weekly roundup and/or become a supporter!

Header image by Tim Mossholder.

Saturday strikings

This week's roundup is going out a day later than usual, as yesterday was the Global Climate Strike and Thought Shrapnel was striking too!

Here's what I've been paying attention to this week:

Childhood amnesia

My kids will often ask me about what I was like at their age. It might be about how fast I swam a couple of lengths freestyle, it could be what music I was into, or when I went on a particular holiday I mentioned in passing. Of course, as I didn’t keep a diary as a child, these questions are almost impossible to answer. I simply can’t remember how old I was when certain things happened.

Over and above that, though, there’s some things that I’ve just completely forgotten. I only realise this when I see, hear, or perhaps smell something that reminds me of a thing that my conscious mind had chosen to leave behind. It’s particularly true of experiences from when we are very young. This phenomenon is known as ‘childhood amnesia’, as an article in Nautilus explains:

On average, people’s memories stretch no farther than age three and a half. Everything before then is a dark abyss. “This is a phenomenon of longstanding focus,” says Patricia Bauer of Emory University, a leading expert on memory development. “It demands our attention because it’s a paradox: Very young children show evidence of memory for events in their lives, yet as adults we have relatively few of these memories.”

Interestingly, our seven year-old daughter is on the cusp of this forgetting. She’s slowly forgetting things that she had no problem recalling even last year, and has to be prompted by photographs of the event or experience.

In the last few years, scientists have finally started to unravel precisely what is happening in the brain around the time that we forsake recollection of our earliest years. “What we are adding to the story now is the biological basis,” says Paul Frankland, a neuroscientist at the Hospital for Sick Children in Toronto. This new science suggests that as a necessary part of the passage into adulthood, the brain must let go of much of our childhood.

One experiment after another revealed that the memories of children 3 and younger do in fact persist, albeit with limitations. At 6 months of age, infants’ memories last for at least a day; at 9 months, for a month; by age 2, for a year. And in a landmark 1991 study, researchers discovered that four-and-a-half-year-olds could recall detailed memories from a trip to Disney World 18 months prior. Around age 6, however, children begin to forget many of these earliest memories. In a 2005 experiment by Bauer and her colleagues, five-and-a-half-year-olds remembered more than 80 percent of experiences they had at age 3, whereas seven-and-a-half-year-olds remembered less than 40 percent.

It's fascinating, and also true of later experiences, although to a lesser extent. Our brains conceal some of our memories by rewiring themselves. This is all part of growing up.

This restructuring of memory circuits means that, while some of our childhood memories are truly gone, others persist in a scrambled, refracted way. Studies have shown that people can retrieve at least some childhood memories by responding to specific prompts—dredging up the earliest recollection associated with the word “milk,” for example—or by imagining a house, school, or specific location tied to a certain age and allowing the relevant memories to bubble up on their own.

So we shouldn't worry too much about remembering childhood experiences in high-fidelity. After all, it's important to be able to tell new stories to both ourselves and other people, casting prior experiences in a new light.

Source: Nautilus
